Original article: https://blog.csdn.net/u013733326/article/details/79862336

Week 1 Quiz - Introduction to deep learning

1. What does the analogy “AI is the new electricity” refer to?

- AI is powering personal devices in our homes and offices, similar to electricity.

- Through the “smart grid”, AI is delivering a new wave of electricity.

- AI runs on computers and is thus powered by electricity, but it is letting computers do things not possible before.

- Similar to electricity starting about 100 years ago, AI is transforming multiple industries.

Answer: 4

The analogy means that AI is bringing about a transformation across many industries, much as electricity did.

2. Which of these are reasons for Deep Learning recently taking off? (Check the two options that apply.)

- We have access to a lot more computational power.

- Neural Networks are a brand new field.

- We have access to a lot more data.

- Deep learning has resulted in significant improvements in important applications such as online advertising, speech recognition, and image recognition.

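Answer: 1, 3

Deep learning has taken off in recent years for two reasons: much more computational power and much more data.

2: Neural networks are not a brand-new field. The approach, also known as connectionism, has a history of several decades.

4: The many applications of deep learning are not a cause of its rapid growth but a result of it.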

3. Recall this diagram of iterating over different ML ideas. Which of the statements below are true? (Check all that apply.)

- Being able to try out ideas quickly allows deep learning engineers to iterate more quickly.

- Faster computation can help speed up how long a team takes to iterate to a good idea.

- It is faster to train on a big dataset than a small dataset.

- Recent progress in deep learning algorithms has allowed us to train good models faster (even without changing the CPU/GPU hardware).

Answer: 1, 2, 4

3: For the same model, the larger the training set, the longer training takes.
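To see why a bigger dataset is slower to train on with the same model, here is a minimal sketch, assuming plain batch gradient descent on a toy linear-regression model (not code from the course). Every epoch touches every training example, so the work per epoch grows linearly with the number of examples `m`. The counter `example_grads` is a hypothetical cost proxy introduced only for this illustration.

```python
import numpy as np

def train_linear_regression(X, y, epochs=10, lr=0.01):
    """Batch gradient descent on a toy linear model (hypothetical setup).

    Returns the weights, the bias, and how many per-example gradient
    terms were computed, used here as a rough proxy for training cost.
    """
    m, n = X.shape
    w = np.zeros(n)
    b = 0.0
    example_grads = 0
    for _ in range(epochs):
        err = X @ w + b - y          # prediction error for all m examples
        w -= lr * (X.T @ err) / m    # gradient averaged over the whole batch
        b -= lr * err.mean()
        example_grads += m           # every example contributed to this step
    return w, b, example_grads

rng = np.random.default_rng(0)
costs = {}
for m in (100, 10_000):
    X = rng.normal(size=(m, 5))
    y = X @ np.ones(5)
    _, _, costs[m] = train_linear_regression(X, y)

print(costs)  # {100: 1000, 10000: 100000}
```

With the same 10 epochs, 100 times more data means 100 times more per-example gradient computations.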

4. When an experienced deep learning engineer works on a new problem, they can usually use insight from previous problems to train a good model on the first try, without needing to iterate multiple times through different models. True/False?

- True

- False

Answer: 2

You cannot pick the right model by intuition alone. Intuition may help, but iterating through different models is still essential.
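The Idea -> Code -> Experiment loop can be sketched as fitting several candidate models and comparing them on held-out data. This is a toy under stated assumptions: polynomial degree stands in for "different models", the data is synthetic, and `validation_error` is a hypothetical helper, not part of any course code.

```python
import numpy as np

rng = np.random.default_rng(42)
# Synthetic data: noisy samples of a cubic function (purely illustrative).
x = rng.uniform(-1, 1, size=200)
y = x**3 - 0.5 * x + rng.normal(scale=0.05, size=x.size)

# Simple train/validation split.
x_train, y_train = x[:150], y[:150]
x_val, y_val = x[150:], y[150:]

def validation_error(degree):
    """One Idea -> Code -> Experiment cycle: fit a candidate, score it."""
    coeffs = np.polyfit(x_train, y_train, degree)
    pred = np.polyval(coeffs, x_val)
    return float(np.mean((pred - y_val) ** 2))

# Iterate over candidate models instead of trusting the first guess.
candidates = [1, 2, 3, 5, 9]
errors = {d: validation_error(d) for d in candidates}
best = min(errors, key=errors.get)
print(errors)
print("best degree:", best)
```

Even when experience suggests a good starting point, the comparison step is what confirms (or overturns) it.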

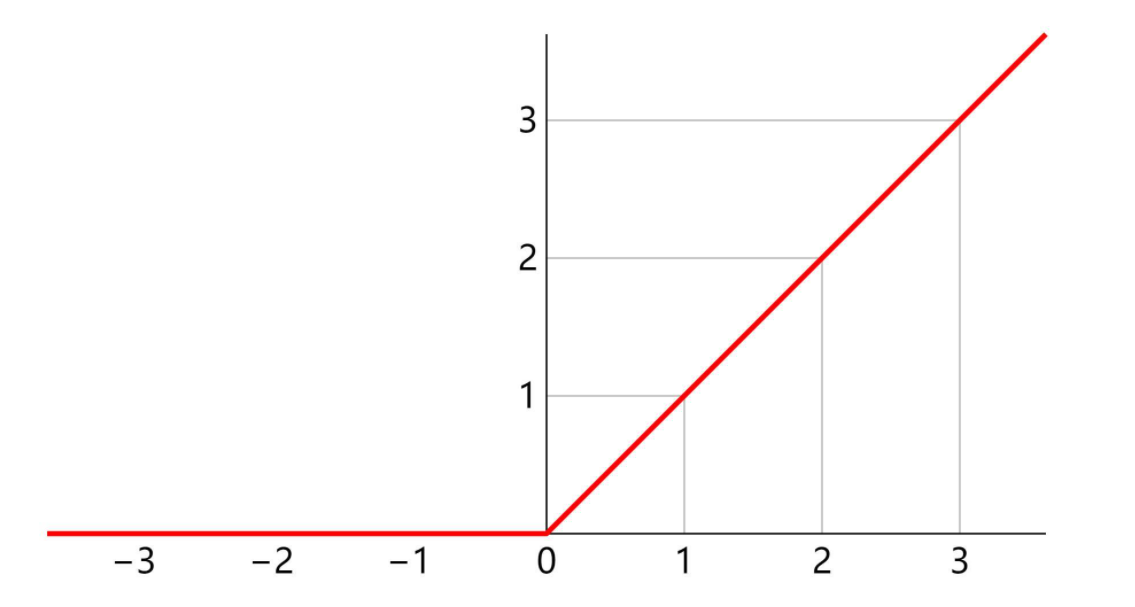

5. Which one of these plots represents a ReLU activation function?
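The candidate plots are only available in the image, but ReLU is defined as max(0, z): it outputs 0 for negative inputs and returns the input unchanged for positive ones, so its graph is flat at zero to the left of the origin and a straight line of slope 1 to the right. A minimal NumPy sketch of that definition:

```python
import numpy as np

def relu(z):
    """ReLU activation: max(0, z), applied element-wise."""
    return np.maximum(0, z)

z = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(relu(z).tolist())  # [0.0, 0.0, 0.0, 0.5, 2.0]
```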

6. Images for cat recognition are an example of “structured” data, because they are represented as a structured array in a computer. True/False?

- True

- False

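Answer: 2

Images are unstructured data. Storing an image as an array of pixel values does not make it structured: an individual pixel has no well-defined meaning the way a column such as "population" does.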
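A short sketch of why an array in memory is not the same as structured data, assuming a hypothetical 64x64 RGB image. Once unrolled into a feature vector, each entry is just one pixel's colour intensity (with this layout, `x[0]` is the red value of the top-left pixel), not a named, individually meaningful attribute.

```python
import numpy as np

# A hypothetical 64x64 RGB image: height x width x colour channels.
image = np.zeros((64, 64, 3), dtype=np.uint8)

# Unroll it into one long feature vector, as image classifiers often do.
x = image.reshape(-1)
print(x.shape)  # (12288,)
```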

7. A demographic dataset with statistics on different cities’ population, GDP per capita, economic growth is an example of “unstructured” data because it contains data coming from different sources. True/False?

- True

- False

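Answer: 2

A demographic dataset of different cities' population, GDP per capita, and economic growth is structured data. Whether data is structured or unstructured does not depend on where it comes from.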
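By contrast, structured data follows a fixed schema in which every field has a defined meaning. The sketch below assumes a hypothetical `CityStats` record; the city names and numbers are placeholders, not real statistics.

```python
from dataclasses import dataclass

@dataclass
class CityStats:
    """One row of a structured demographic table: each field has a fixed meaning."""
    city: str
    population: int
    gdp_per_capita: float
    economic_growth: float  # annual growth rate as a fraction (placeholder unit)

# Hypothetical rows; the values are placeholders, not real data.
rows = [
    CityStats("City A", 1_000_000, 30_000.0, 0.02),
    CityStats("City B", 250_000, 45_000.0, 0.035),
]

# Because every column is well defined, a feature can be queried by name,
# regardless of which source a row originally came from.
richest = max(rows, key=lambda r: r.gdp_per_capita)
print(richest.city)  # City B
```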

8. Why is an RNN (Recurrent Neural Network) used for machine translation, say translating English to French? (Check all that apply.)

- It can be trained as a supervised learning problem.

- It is strictly more powerful than a Convolutional Neural Network (CNN).

- It is applicable when the input/output is a sequence (e.g., a sequence of words).

- RNNs represent the recurrent process of Idea->Code->Experiment->Idea->….

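Answer: 1, 3

Machine translation data is sequential (the words come in order), and the task can be trained as a supervised learning problem.

2: Different neural networks suit different problems; none is strictly better than the others.

4: The Idea -> Code -> Experiment cycle describes how neural networks are developed in general, not just RNNs.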
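A minimal sketch of the recurrent step that makes RNNs a natural fit for sequence inputs, assuming a vanilla RNN cell with a tanh activation written in NumPy. The same weights are reused at every time step, so sequences of different lengths can be processed. A real English-to-French translator would use an encoder-decoder setup trained on sentence pairs (the supervised part), which this sketch does not include; `rnn_forward` and the sizes are hypothetical.

```python
import numpy as np

def rnn_forward(xs, Waa, Wax, ba):
    """Run a vanilla RNN over a sequence of input vectors.

    At each step: a<t> = tanh(Waa @ a<t-1> + Wax @ x<t> + ba).
    Returns the hidden state for every time step, shape (T, n_a).
    """
    a = np.zeros(Waa.shape[0])          # initial hidden state a<0>
    states = []
    for x in xs:                        # one step per element of the sequence
        a = np.tanh(Waa @ a + Wax @ x + ba)
        states.append(a)
    return np.stack(states)

rng = np.random.default_rng(0)
n_a, n_x = 4, 3                         # hidden size and input size (arbitrary)
Waa = rng.normal(scale=0.1, size=(n_a, n_a))
Wax = rng.normal(scale=0.1, size=(n_a, n_x))
ba = np.zeros(n_a)

# Two "sentences" of different lengths, each word an n_x-dim vector.
short_seq = rng.normal(size=(5, n_x))
long_seq = rng.normal(size=(9, n_x))
h_short = rnn_forward(short_seq, Waa, Wax, ba)
h_long = rnn_forward(long_seq, Waa, Wax, ba)
print(h_short.shape)  # (5, 4)
print(h_long.shape)   # (9, 4)
```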

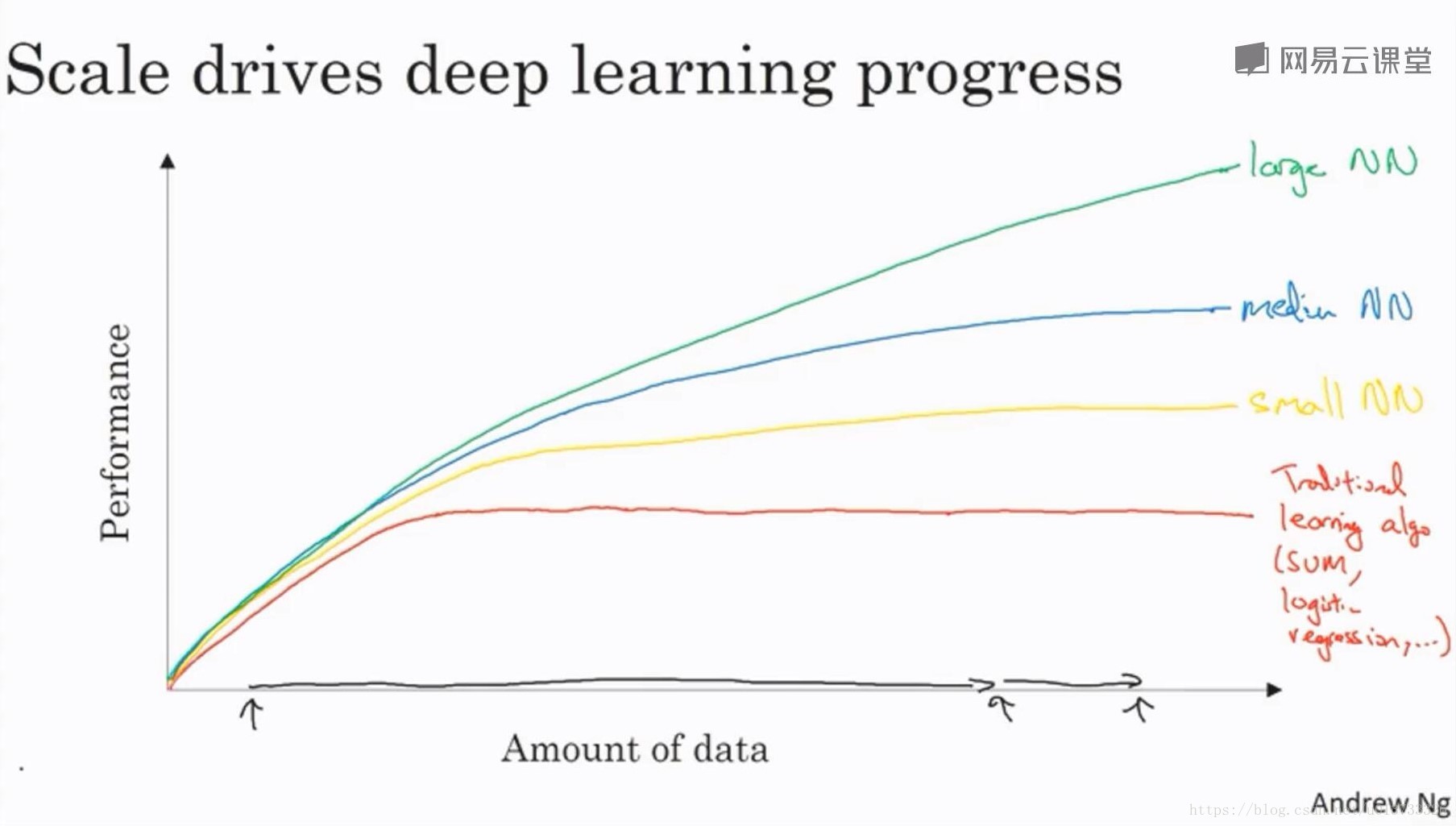

9. In this diagram which we hand-drew in lecture, what do the horizontal axis (x-axis) and vertical axis (y-axis) represent?

x-axis (horizontal axis) is the amount of data.

y-axis (vertical axis) is the performance of the algorithm.

Answer: The x-axis represents the amount of data, and the y-axis represents the performance of the algorithm.

10. Assuming the trends described in the previous question’s figure are accurate (and hoping you got the axis labels right), which of the following are true? (Check all that apply.)

- Increasing the training set size generally does not hurt an algorithm's performance, and it may help significantly.

- Increasing the size of a neural network generally does not hurt an algorithm's performance, and it may help significantly.

- Decreasing the training set size generally does not hurt an algorithm's performance, and it may help significantly.

- Decreasing the size of a neural network generally does not hurt an algorithm's performance, and it may help significantly.

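Answer: 1, 2

When training a model, increasing the training set size or the size of the neural network generally does not hurt the algorithm's performance (where the curve flattens out) and may improve it (where the curve is still rising).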