1. Environment Preparation

1.1 Code Preparation

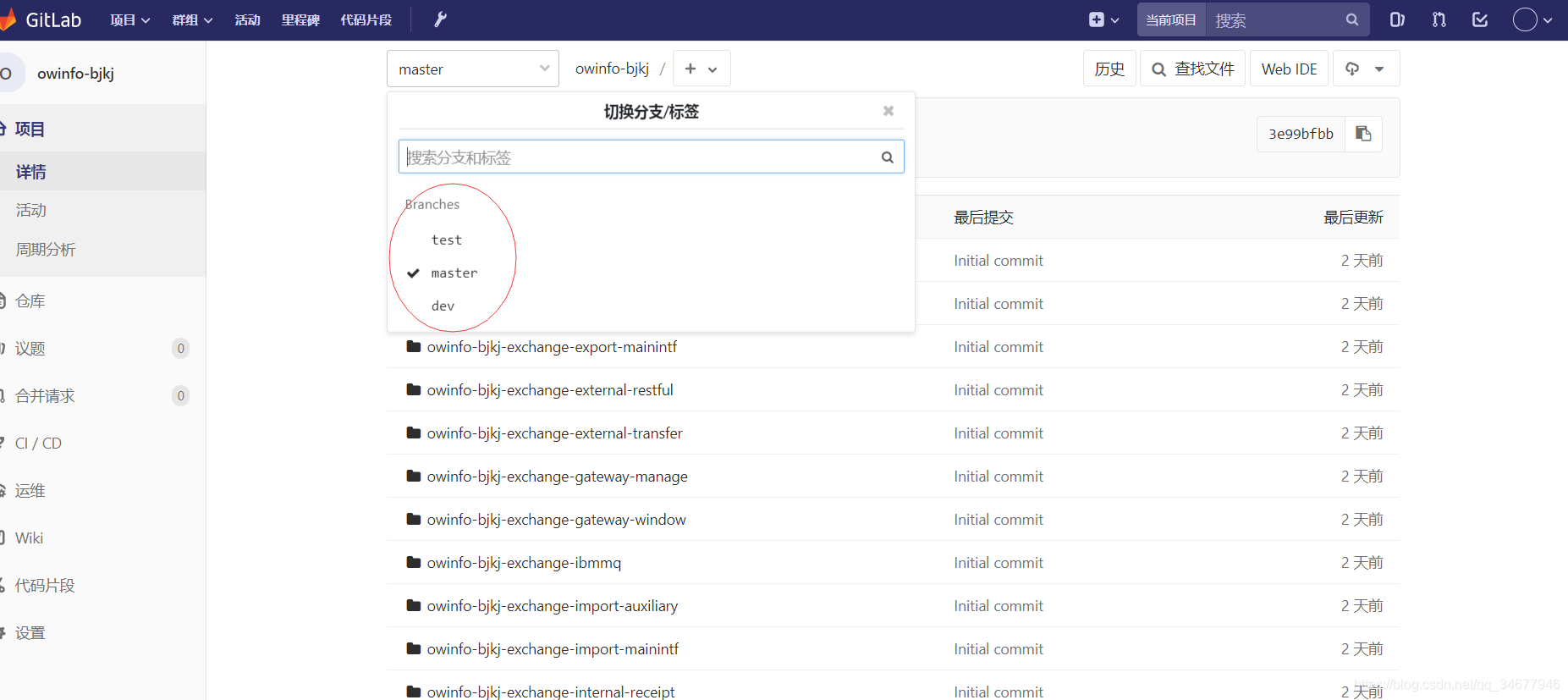

- Prepare the project code in GitLab. The repository currently has three branches, and this is the code that gets deployed.

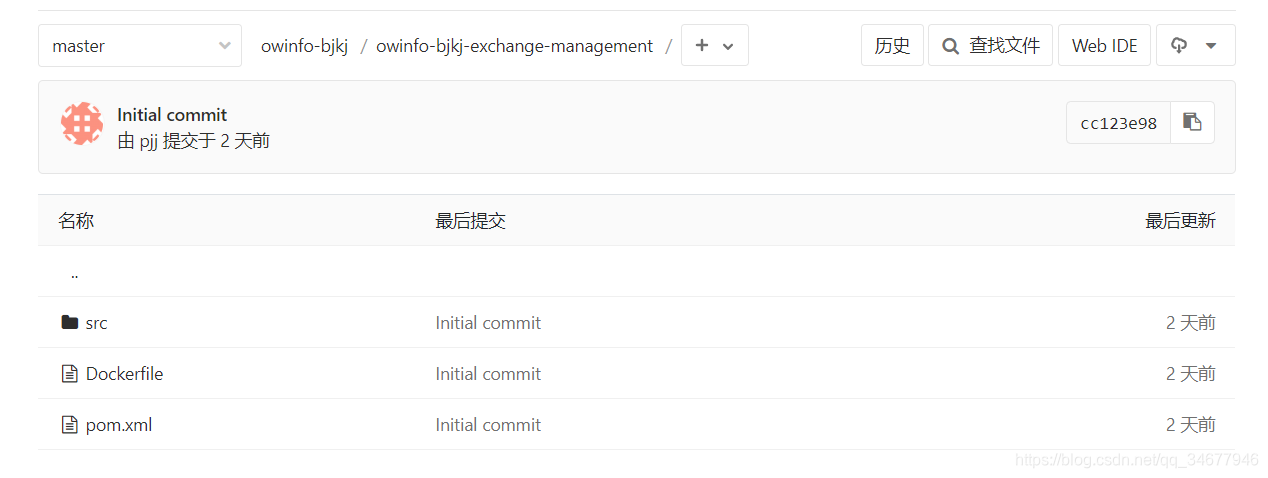

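- Because the services are deployed as containers, each service directory in the project contains a Dockerfile, as shown below.

Keep the service name and the jar name fixed and identical to each other. The jar name can be set with the finalName property in pom.xml.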

    FROM lizhenliang/java:8-jdk-alpine
    LABEL maintainer pengjunjie
    ENV JAVA_ARGS="-Dfile.encoding=UTF8 -Duser.timezone=GMT+08"
    COPY ./target/owinfo-bjkj-exchange-management.jar ./
    EXPOSE 5006
    CMD java -jar $JAVA_ARGS $JAVA_OPTS /owinfo-bjkj-exchange-management.jar

- Make sure the project compiles and packages successfully from the directory that contains the parent pom.xml.
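A quick pre-flight check along these lines can catch a missing Dockerfile or a mismatched jar name before the pipeline runs. This is only a minimal sketch: the function name `check_service` is hypothetical, and it assumes the layout described above, where each service directory holds a `Dockerfile` and Maven writes `target/<service-name>.jar`.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original setup): verify that one
# service directory has a Dockerfile and a jar named after the service.
check_service() {
    service_dir=$1
    service_name=$(basename "$service_dir")
    if [ ! -f "$service_dir/Dockerfile" ]; then
        echo "missing Dockerfile in $service_dir" >&2
        return 1
    fi
    if [ ! -f "$service_dir/target/$service_name.jar" ]; then
        echo "missing target/$service_name.jar in $service_dir" >&2
        return 1
    fi
    return 0
}
```

It would typically be run from the parent pom.xml directory after `mvn clean package`, for example `check_service owinfo-bjkj-exchange-management`.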

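1.2 Harbor Preparation

- Prepare the chart template used to deploy the services and store it in the template project in Harbor. Helm uses this template at deploy time. For example:

deployment.yaml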

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ .Values.image.name }}
      namespace: {{ .Release.Namespace }}
    spec:
      replicas: {{ .Values.replicaCount }}
      selector:
        matchLabels:
          project: {{ .Values.project }}
          service: {{ .Values.image.name }}
      template:
        metadata:
          labels:
            project: {{ .Values.project }}
            service: {{ .Values.image.name }}
        spec:
          imagePullSecrets:
            - name: registry-pull-secret
          containers:
            - name: {{ .Values.image.name }}
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
              imagePullPolicy: Always
              ports:
                - protocol: TCP
                  containerPort: {{ .Values.service.targetPort }}
              env:
                - name: JAVA_OPTS
                  value: "-Xmx2g"
              resources:
                requests:
                  cpu: 0.5
                  memory: 256Mi
                limits:
                  cpu: 1
                  memory: 2Gi
              readinessProbe:
                tcpSocket:
                  port: {{ .Values.service.targetPort }}
                initialDelaySeconds: 60
                periodSeconds: 10
              livenessProbe:
                tcpSocket:
                  port: {{ .Values.service.targetPort }}
                initialDelaySeconds: 60
                periodSeconds: 10

1.3 Jenkins Preparation

- Prepare the jenkins-slave image. It must include the JDK, Maven, docker, helm, and kubectl, and have the correct time zone configured.

    FROM centos:7
    LABEL maintainer pengjunjie

    RUN yum install -y java-1.8.0-openjdk maven curl git libtool-ltdl-devel && \
        yum clean all && \
        rm -rf /var/cache/yum/* && \
        mkdir -p /usr/share/jenkins

    COPY slave.jar /usr/share/jenkins/slave.jar
    COPY jenkins-slave /usr/bin/jenkins-slave
    COPY settings.xml /etc/maven/settings.xml
    RUN chmod +x /usr/bin/jenkins-slave
    COPY helm kubectl /usr/bin/

    RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    RUN echo 'Asia/Shanghai' >/etc/timezone

    ENTRYPOINT ["jenkins-slave"]

- Make sure the following Jenkins plugins are installed:

Kubernetes, Pipeline, Git, Git Parameter, Extended Choice Parameter, Pipeline Utility Steps, Config File Provider, and so on.

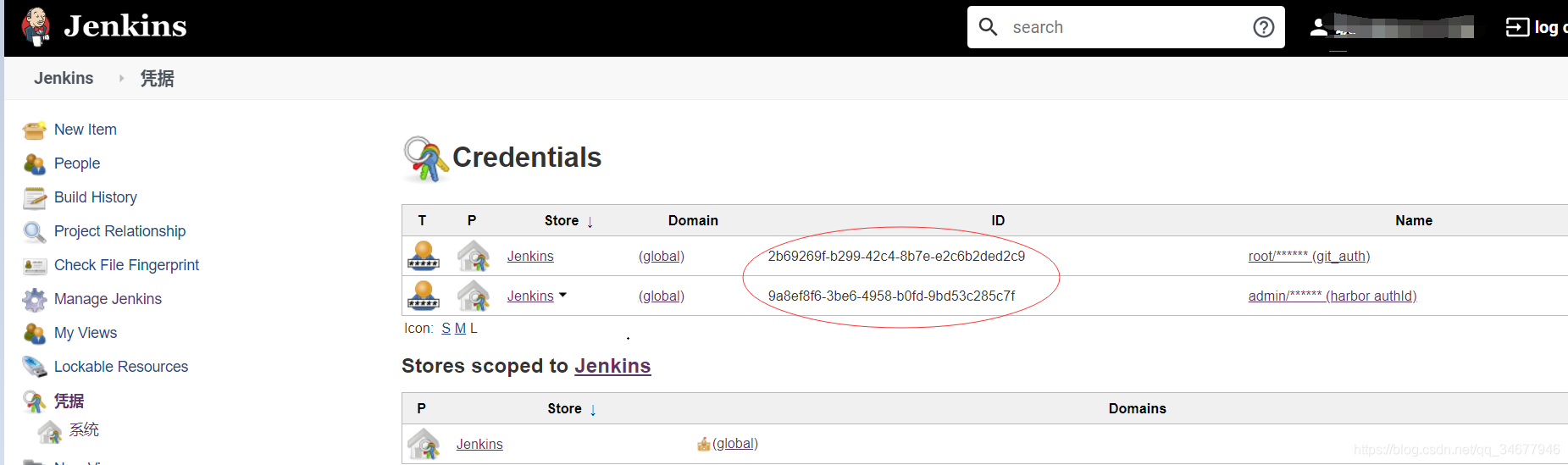

- Create the credentials Jenkins uses to connect to GitLab and Harbor

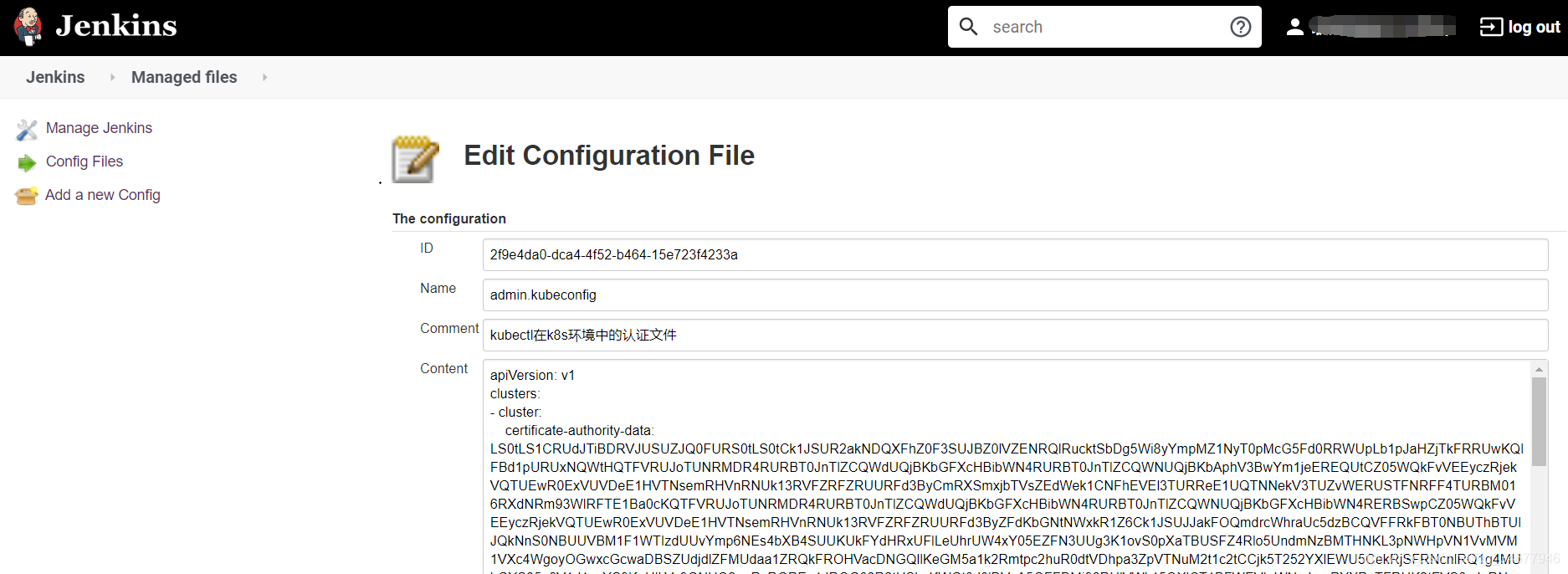

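- Create the credential file that kubectl inside the jenkins-slave uses to connect to the k8s cluster

admin.kubeconfig is generated as follows.

Make sure the working directory contains these four files:

ca.pem, ca-key.pem, ca-config.json, admin.json

ca-config.json defines signing properties such as the expiry time of newly issued certificates: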

    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": ["signing", "key encipherment", "server auth", "client auth"]
          }
        }
      }
    }

admin.json defines the basic attributes of the admin certificate request:

    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }

Generate the admin certificate (the request file is the admin.json listed above):

    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin.json | cfssljson -bare admin

Create the admin user's kubeconfig and bind it to the cluster:

    export KUBE_APISERVER="https://192.168.217.140:6443"
    # Set the cluster parameters
    kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=${KUBE_APISERVER} --kubeconfig=admin.kubeconfig
    # Set the client credentials
    kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=admin.kubeconfig
    # Set the context
    kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=admin.kubeconfig
    # Set the default context
    kubectl config use-context kubernetes --kubeconfig=admin.kubeconfig

Finally, copy the content of admin.kubeconfig into Jenkins to generate a credential ID. The working directory now contains:

    ls
    admin.csr admin.json admin-key.pem admin.kubeconfig admin.pem ca-config.json ca-key.pem ca.pem

2. Pipeline + CI/CD in Practice

2.1 Pipeline Template for Microservices

Create a Pipeline project in Jenkins with the following pipeline script:

// 1、更改git_url项目地址

// 2、更改要发布的服务列表

// 3、通过Namespace隔离,更改Namespace列表,这里需要创建harbor 仓库的名字为${Namespace}, k8s集群中也需要创建${Namespace}

// ${Namespace} 规定取包含项目和分支含义且唯一的名称,比如 owinfo-bjkj, owinfo-bjkj-test, owinfo-bjkj-dev

// 4、保证http://${registry}/chartrepo/template下面有服务部署需要的chart模板// 项目gitlab地址、gitlab认证ID

def git_url = "http://192.168.217.141:880/root/owinfo-bjkj.git"

def git_authId = "2b69269f-b299-42c4-8b7e-e2c6b2ded2c9"// 项目harbor仓库地址、项目harbor中的项目仓库名称、harbor认证ID

def registry = "192.168.217.142"

def harbor_authId = "9a8ef8f6-3be6-4958-b0fd-9bd53c285c7f"// jenkins slave中kubectl认证

def k8s_authId="2f9e4da0-dca4-4f52-b464-15e723f4233a"// image_pull_secret

def image_pull_secret="registry-pull-secret"pipeline {agent {kubernetes {label 'jenkins-slave'yaml """

apiVersion: v1

kind: Pod

metadata:labels:name: jenkins-slave

spec: containers:- name: jnlpimage: "${registry}/library/jenkins-slave:jdk1.8"imagePullPolicy: IfNotPresentvolumeMounts:- name: docker-cmdmountPath: /usr/bin/docker- name: docker-sockmountPath: /var/run/docker.sock- name: maven-cachemountPath: /root/.m2volumes:- name: docker-cmdhostPath:path: /usr/bin/docker- name: docker-sockhostPath:path: /var/run/docker.sock- name: maven-cachehostPath:path: /root/.m2

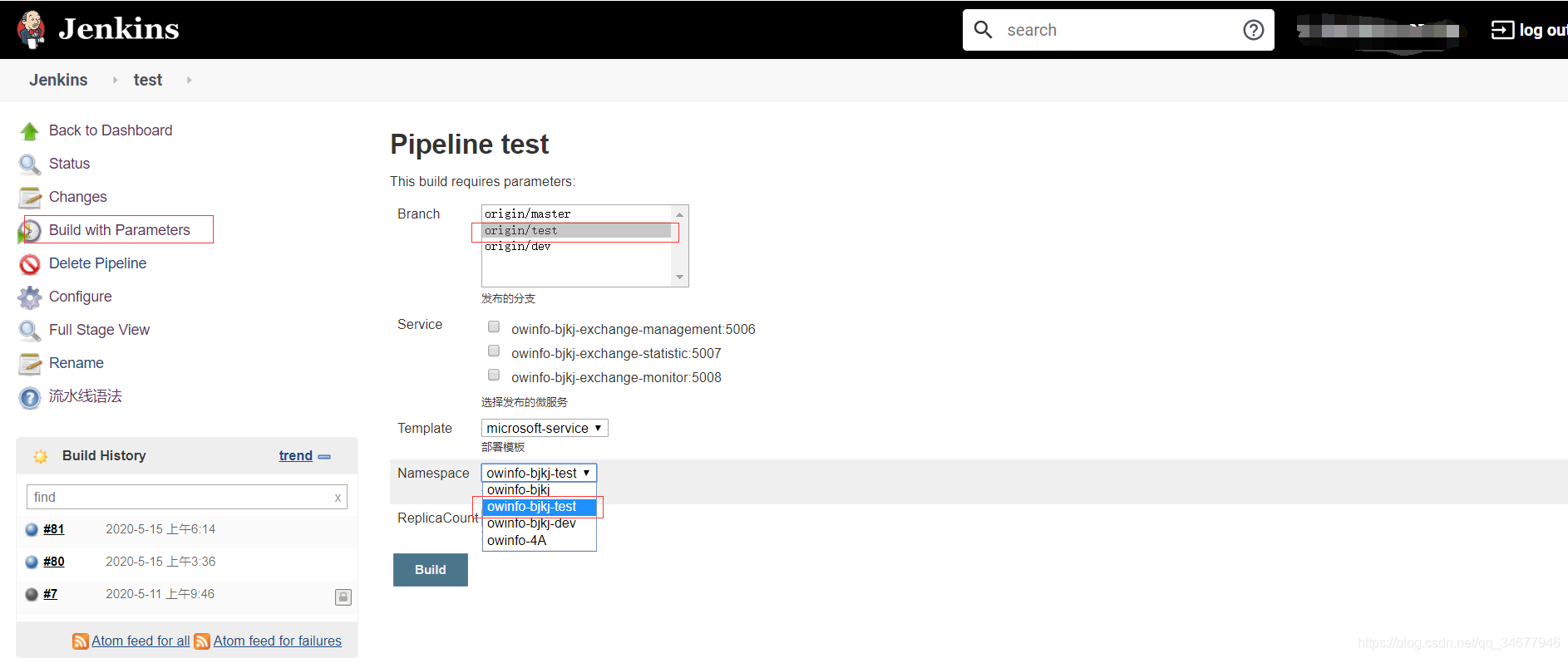

"""}}parameters {gitParameter branch: '',branchFilter: '.*',defaultValue: '',description: '发布的分支',name: 'Branch',quickFilterEnabled: false,selectedValue: 'NONE',sortMode: 'NONE',tagFilter: '*',type: 'PT_BRANCH'extendedChoice defaultValue: 'none', description: '选择发布的微服务', \multiSelectDelimiter: ',', name: 'Service', type: 'PT_CHECKBOX', \value: 'owinfo-bjkj-exchange-management:5006,owinfo-bjkj-exchange-statistic:5007,owinfo-bjkj-exchange-monitor:5008'choice (choices: ['microsoft-service'], description: '部署模板', name: 'Template')choice (choices: ['owinfo-bjkj', 'owinfo-bjkj-test', 'owinfo-bjkj-dev', 'owinfo-4A'], description: '命名空间', name: 'Namespace')choice (choices: ['1', '3', '5', '7'], description: '副本数目', name: 'ReplicaCount')} stages {stage('拉取代码') {steps {checkout([$class: 'GitSCM', branches: [[name: "${params.Branch}"]], doGenerateSubmoduleConfigurations: false, extensions: [], gitTool: 'Default', submoduleCfg: [], userRemoteConfigs: [[url: "${git_url}",credentialsId: "${git_authId}"]]])}}stage('代码编译') {steps {sh """mvn clean package -Dmaven.test.skip=true"""}}stage('构建镜像') {steps {withCredentials([usernamePassword(credentialsId: "${harbor_authId}", passwordVariable: 'dockerPassword', usernameVariable: 'dockerUser')]) {sh """docker login -u ${dockerUser} -p '${dockerPassword}' ${registry}for service in \$(echo ${Service} |sed 's/,/ /g');doservice_name=\${service%:*}image_name=${registry}/${Namespace}/\${service_name}:${BUILD_NUMBER}cd \${service_name}docker build -t \${image_name} .docker push \${image_name}cd ${WORKSPACE}done"""configFileProvider([configFile(fileId: "${k8s_authId}", targetLocation: "admin.kubeconfig")]) {sh """# 创建imagePullSecret、helm拉取镜像时使用kubectl create secret docker-registry ${image_pull_secret} \--docker-username=${dockerUser} --docker-password=${dockerPassword} \--docker-server=${registry} -n ${Namespace} --kubeconfig admin.kubeconfig |true# 创建chart仓库地址名称为template,该空间下面可以定义多个模板化的charthelm repo add --username ${dockerUser} --password 
${dockerPassword} template http://${registry}/chartrepo/template"""}}} }stage('Helm部署到K8S') {steps {sh """common_args="-n ${Namespace} --kubeconfig admin.kubeconfig"for service in \$(echo ${Service} |sed 's/,/ /g');doservice_name=\${service%:*}service_port=\${service#*:}image=${registry}/${Namespace}/\${service_name}tag=${BUILD_NUMBER}helm_args="\${service_name} --set image.repository=\${image} --setimage.tag=\${tag} --set replicaCount=${ReplicaCount} --setimage.name=\${service_name} --set project=${Namespace} --setimagePullSecrets[0].name=${image_pull_secret} --setservice.targetPort=\${service_port} template/${Template}"# 判断是否为新部署if helm history \${service_name} \${common_args} &>/dev/null;thenaction=upgradeelseaction=installfihelm \${action} \${helm_args} \${common_args}done# 查看pod状态sleep 30kubectl get pods -o wide \${common_args}"""}}}

}
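The shell loops in the pipeline rely on POSIX parameter expansion to split each `name:port` entry of the Service parameter. The standalone sketch below isolates that step so it can be tried outside Jenkins; the function name `split_services` is hypothetical and the sample value simply mirrors the Service parameter.

```shell
#!/bin/sh
# Split a comma-separated "name:port" list the same way the pipeline does.
# The sample value mirrors the Service parameter of the pipeline.
Service="owinfo-bjkj-exchange-management:5006,owinfo-bjkj-exchange-statistic:5007"

# Hypothetical helper: print one "name port" pair per line.
split_services() {
    for service in $(echo "$1" | sed 's/,/ /g'); do
        service_name=${service%:*}   # drop the shortest trailing ":..." to get the name
        service_port=${service#*:}   # drop the shortest leading "...:" to get the port
        echo "$service_name $service_port"
    done
}

split_services "$Service"
```

Each output line carries the service name (also the jar and image name) followed by the port passed to the chart as `service.targetPort`.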

The template above is a unified, reusable deployment template. Keep the following points in mind:

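- To keep builds fast, the CI/CD pipeline skips unit tests; unit testing and code checks are handled separately.

- The databases, caches, and message queues the services depend on are not deployed in the cluster, so existing environments are required.

- Before building, update every address at the top of the pipeline that needs to change.

- The ${Namespace} must already exist in both Harbor and k8s.

- In a parameterized build, the code (URL and branch) must match the namespace. For example, the test branch is deployed to owinfo-bjkj-test.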
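The last point can be enforced with a small guard before deployment. The sketch below is an illustration under assumptions: only the pairing of the test branch with owinfo-bjkj-test comes from the text, while the branch names `master` and `dev` and both function names are hypothetical and should be adjusted to the real repository.

```shell
#!/bin/sh
# Hypothetical guard: map a branch to the namespace it may be deployed to.
# Branch names other than "test" are assumptions, not taken from the project.
namespace_for_branch() {
    # The Branch value may look like "origin/test"; keep only the last path part.
    branch=${1##*/}
    case "$branch" in
        master) echo "owinfo-bjkj" ;;
        test)   echo "owinfo-bjkj-test" ;;
        dev)    echo "owinfo-bjkj-dev" ;;
        *)      echo "no namespace mapped for branch '$branch'" >&2; return 1 ;;
    esac
}

# Succeed only when the chosen namespace ($2) matches the chosen branch ($1).
check_branch_namespace() {
    expected=$(namespace_for_branch "$1") || return 1
    [ "$expected" = "$2" ]
}
```

Calling `check_branch_namespace "$Branch" "$Namespace"` at the start of the build step would stop a build whose parameters do not line up.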

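At present the chart contains only a Deployment. After the services are deployed, they still need to be exposed through a gateway such as an Ingress controller, Istio, or Kong. Likewise, container logs can be mounted to NFS under a uniform path such as /<service-name>/xxx/*.log and collected centrally with Filebeat.

Harbor serves as the image registry, as shown below:

2.2 What Else Is Needed?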

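- Mount service logs to NFS in a uniform way, collect them as files with Filebeat, store them in Elasticsearch, and display them in Kibana. The Maven cache of the dynamic jenkins-slave pod is currently mounted from the local /root/.m2; it could be mounted from NFS as well.

- Deploy the Kong gateway in k8s to expose the services

- Split out unit tests and code checks, and integrate SonarQube by other means

- Use Prometheus to monitor the inside of the k8s cluster, enable features such as HPA, and display the metrics in Grafana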