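Yesterday I gave an introductory talk on TiDB to colleagues from several departments in the company. The handout I wrote for it is pasted below as-is.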

(Rendering Markdown straight into a web page really is far easier than making a PPT.)

TiDB 101

Part I - Introduction

What is TiDB?

- TiDB is an open-source, distributed, relational database

- It is NewSQL -- combining the strengths of both traditional RDBMS and NoSQL

- With native OLTP and optional OLAP workload support

- Highly compatible with the MySQL ecosystem

Key Features

- Flexible horizontal scale-out / scale-in [Computing & storage separation]

- High availability [Multi-Raft consensus]

- ACID guarantee [Percolator distributed transaction]

- HTAP ready [Row-based engine for OLTP, column-based engine for OLAP]

- Rich series of data migration tools for transferring data

- 90% compatible with MySQL 5.7 environment

- See https://docs.pingcap.com/tidb/stable/mysql-compatibility for compatibility issues

- See https://docs.pingcap.com/tidb/stable/tidb-limitations for limits

Typical Use Cases

- Financial industry scenarios with high requirements for consistency, reliability, and fault tolerance

- Massive data and high concurrency scenarios with high requirements for capacity, scalability, and concurrency

- Scattered data collection and secondary processing scenarios

Disadvantages?

- ...

Part II - Architecture Overview

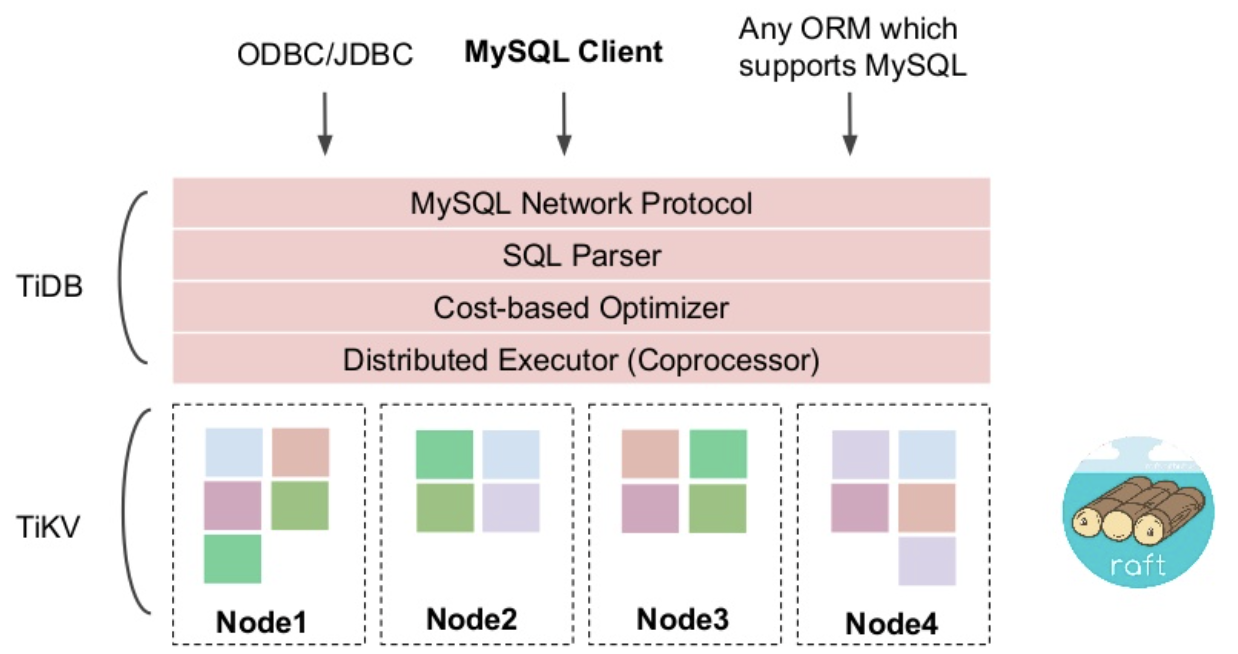

TiKV - Storage

- Storage layer for OLTP

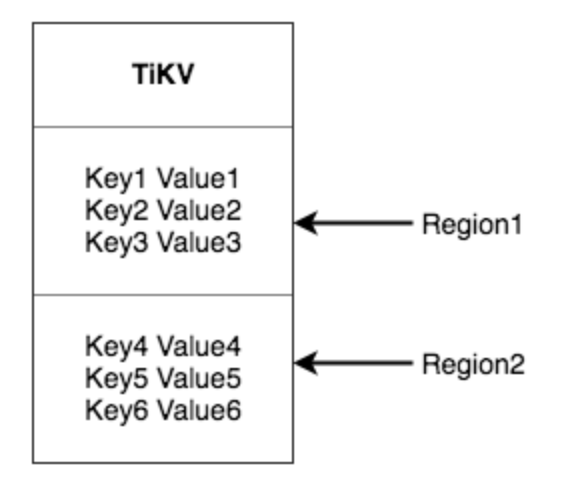

- A distributed transactional key-value engine

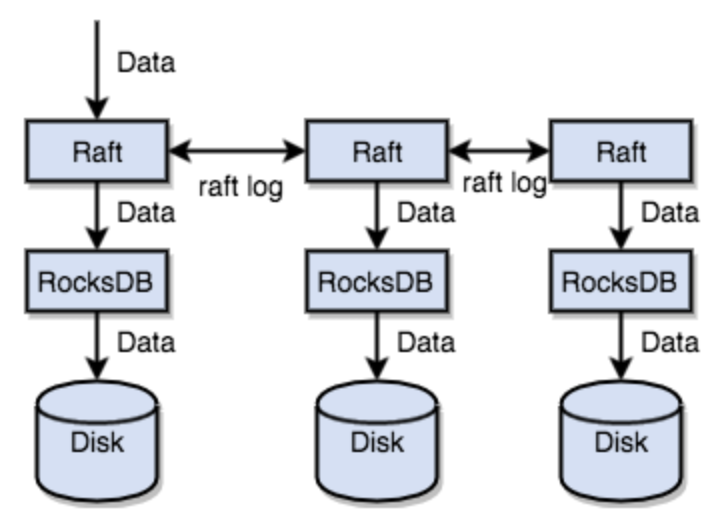

- TiKV stores are built on top of RocksDB (LSMT-based local-embedded KV system)

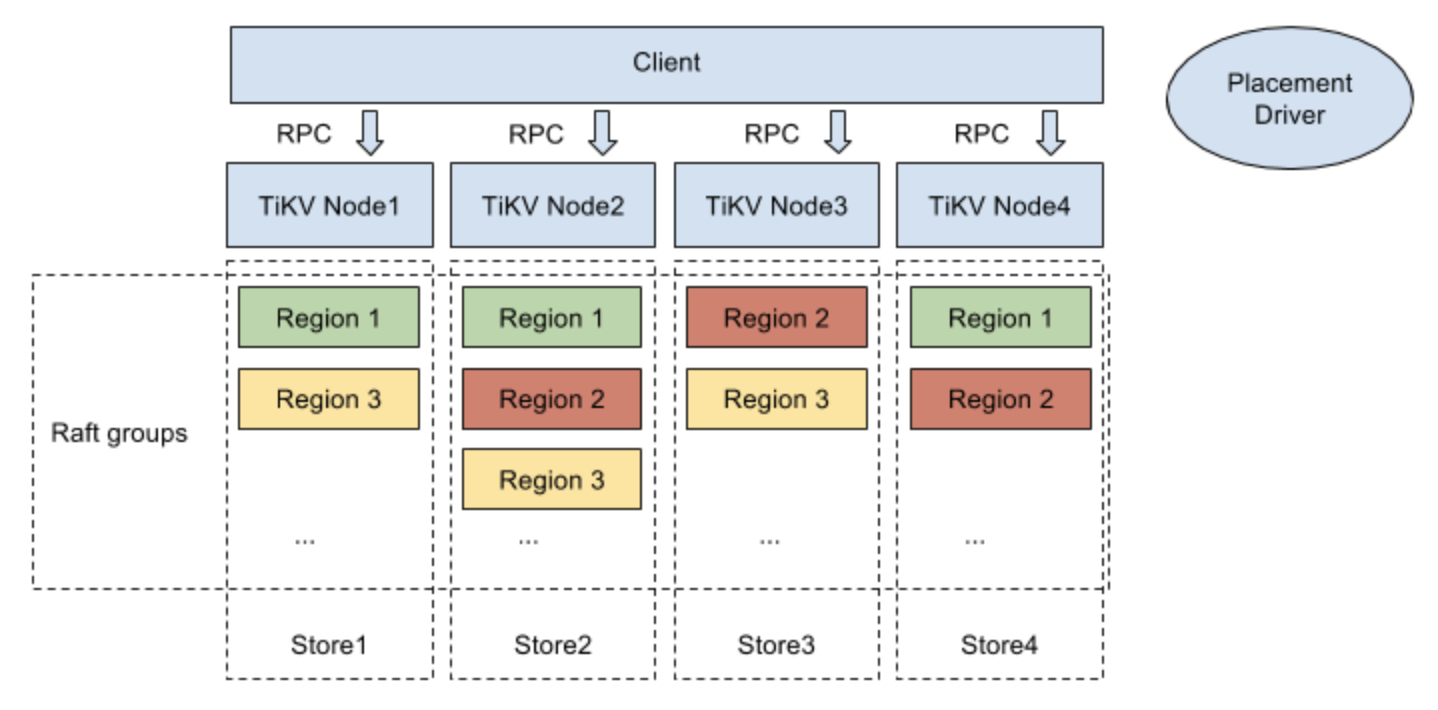

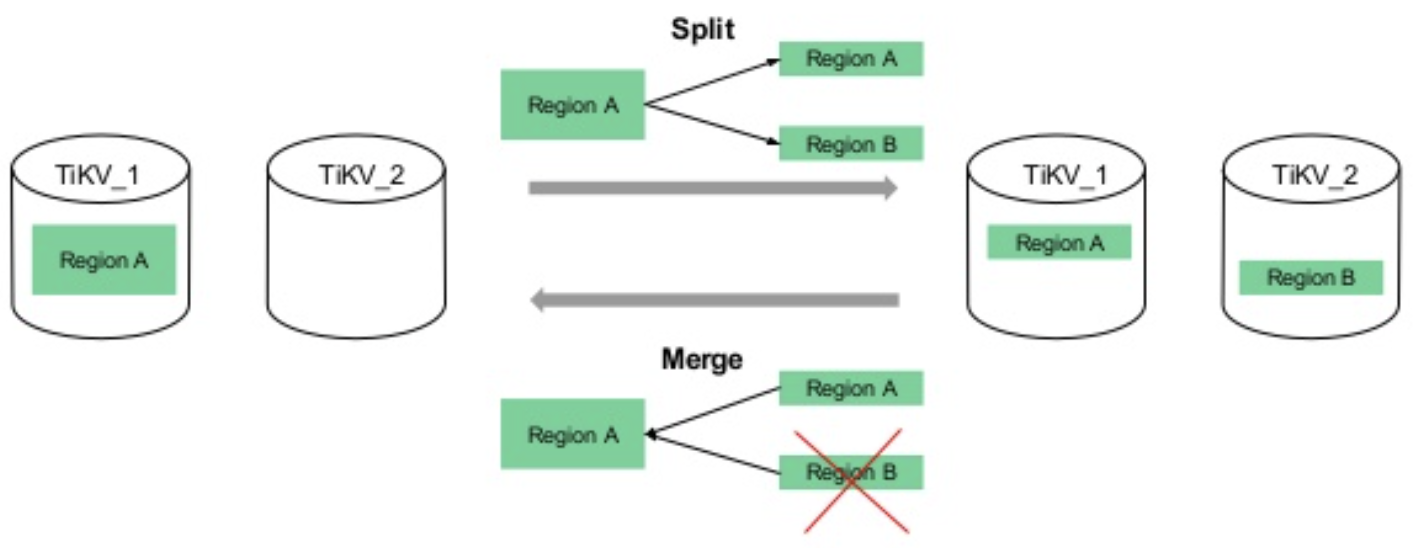

- Each store is divided into regions -- the basic unit of organizing data

- A region contains a continuous, sorted key range of `[start_key, end_key)`, like an SST

- Regions are maintained in multiple replicas (default 3) as Raft groups, having a cap size, and can be merged & split

- Raft provides safe leader election / log replication / membership changing
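As a rough illustration of the half-open `[start_key, end_key)` ranges described above, the sketch below finds the region that owns a key with a binary search over sorted start keys. The region ids and key boundaries are made up for the example, and this is not how TiKV or PD implement routing internally.

```python
import bisect

# Hypothetical region layout: region i covers [START_KEYS[i], START_KEYS[i + 1]),
# and the last region is unbounded on the right.
START_KEYS = [b"", b"t1_r500", b"t2_"]
REGION_IDS = [101, 102, 103]


def locate_region(key: bytes) -> int:
    """Return the id of the region whose [start_key, end_key) range holds key."""
    # bisect_right counts the start keys <= key; the owner is the last of them.
    idx = bisect.bisect_right(START_KEYS, key) - 1
    return REGION_IDS[idx]


print(locate_region(b"t1_r100"))  # falls before the first split point
print(locate_region(b"t1_r500"))  # start_key is inclusive
print(locate_region(b"t2_i1_x"))  # falls in the last, unbounded region
```

Because the ranges are sorted and do not overlap, a split only adds one new start key and a merge only removes one, which is part of why regions are cheap to reshape.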

- Table data are mapped to TiKV with rules

- Row: `t{tableID}_r{rowID} -> [colValue1, colValue2, colValue3, ...]`

- Unique index: `t{tableID}_i{indexID}_{colValue} -> [rowID]`

- Ordinary index: `t{tableID}_i{indexID}_{colValue}_{rowID} -> [null]`
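The row and index mapping rules above can be sketched in a few lines. This is a readable simplification that builds plain strings; the real encoding is binary and order-preserving, and the table, index ids and column values below are hypothetical.

```python
from typing import List, Tuple


def row_entry(table_id: int, row_id: int, col_values: List[object]) -> Tuple[str, List[object]]:
    """Row: t{tableID}_r{rowID} -> [colValue1, colValue2, ...]"""
    return f"t{table_id}_r{row_id}", col_values


def unique_index_entry(table_id: int, index_id: int, col_value: object, row_id: int) -> Tuple[str, List[int]]:
    """Unique index: t{tableID}_i{indexID}_{colValue} -> [rowID]"""
    return f"t{table_id}_i{index_id}_{col_value}", [row_id]


def ordinary_index_entry(table_id: int, index_id: int, col_value: object, row_id: int) -> Tuple[str, None]:
    """Ordinary index: t{tableID}_i{indexID}_{colValue}_{rowID} -> [null]"""
    # The rowID is part of the key, so duplicate column values never collide.
    return f"t{table_id}_i{index_id}_{col_value}_{row_id}", None


# Hypothetical table with tableID = 10, a unique index (1) on the first column
# and an ordinary index (2) on the third column.
print(row_entry(10, 1, ["TiDB", "SQL Layer", 10]))
print(unique_index_entry(10, 1, "TiDB", 1))
print(ordinary_index_entry(10, 2, 10, 1))
```

Since all keys of one table share the `t{tableID}_` prefix, rows and index entries of that table sit next to each other in the sorted key space, which is what makes range scans practical.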

- Single-row (per-KV) transactions are natively supported at the snapshot isolation (SI) level

- Distributed transactions are enabled by implementing the Percolator protocol

- Almost decentralized 2PC

- MVCC timestamps stored in different RocksDB column families

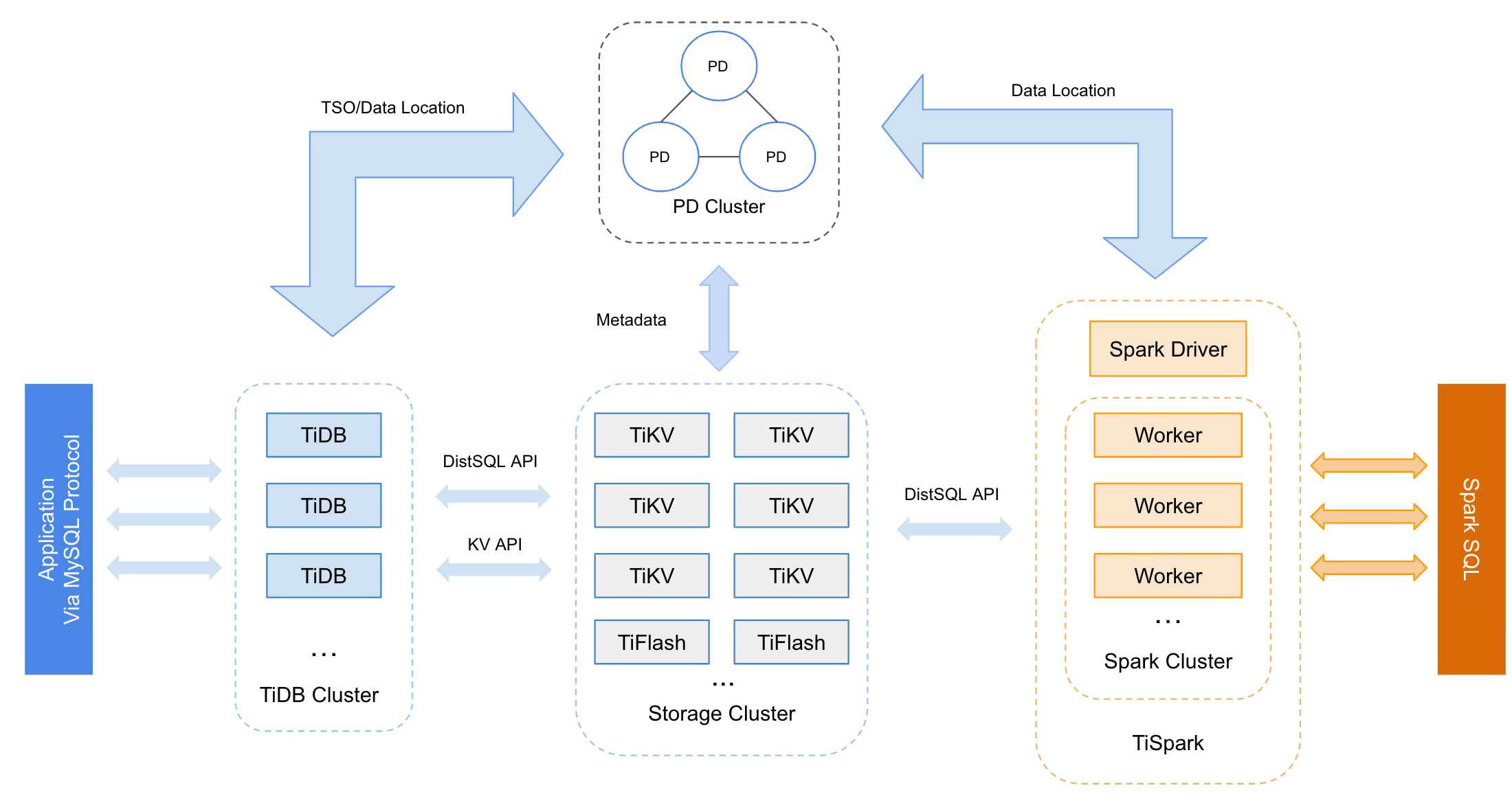

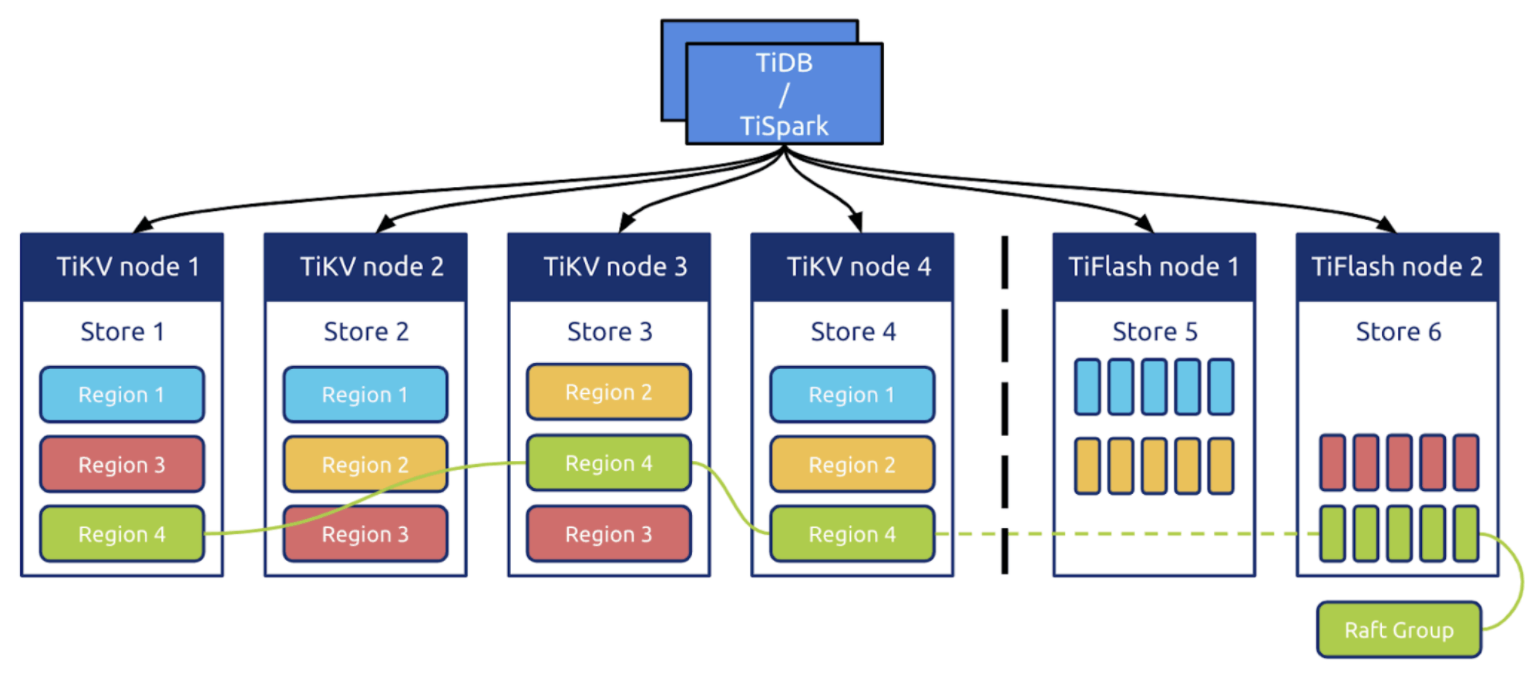
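To make the snapshot isolation and MVCC timestamps mentioned in this section more concrete, here is a minimal sketch of a snapshot read over the versions of one key. It only shows the visibility rule (a reader at `start_ts` sees the newest version committed at or before that timestamp). It leaves out locks and two-phase commit, so it is not the Percolator protocol itself, and the version history is invented for the example.

```python
from typing import List, Optional, Tuple

Version = Tuple[int, str]  # (commit_ts, value)


def snapshot_read(versions: List[Version], start_ts: int) -> Optional[str]:
    """Return the newest value whose commit_ts is <= start_ts, or None."""
    visible = [v for v in versions if v[0] <= start_ts]
    if not visible:
        return None  # the key did not exist yet in this snapshot
    return max(visible, key=lambda v: v[0])[1]


# Hypothetical version history of a single key.
history = [(10, "a"), (20, "b"), (30, "c")]

print(snapshot_read(history, 25))  # sees the version committed at ts 20
print(snapshot_read(history, 5))   # nothing committed yet
```

A transaction that started at timestamp 25 keeps reading `"b"` even after the write at 30 commits, which is the behavior snapshot isolation promises.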

TiDB - Computation

- Stateless SQL layer

- Expose MySQL-flavored endpoint to client connections

- Can provide unified interface through load balancing components (HAProxy, LVS, Aliyun SLB, etc.)

- Perform SQL parsing & optimization

- Generate distributed execution plan

- Execute through DistSQL API (with TiKV coprocessors computing at lower level) or raw KV API

- Transmit data request/response to/from storage nodes

- e.g. A simple query showing TiDB & TiKV working together

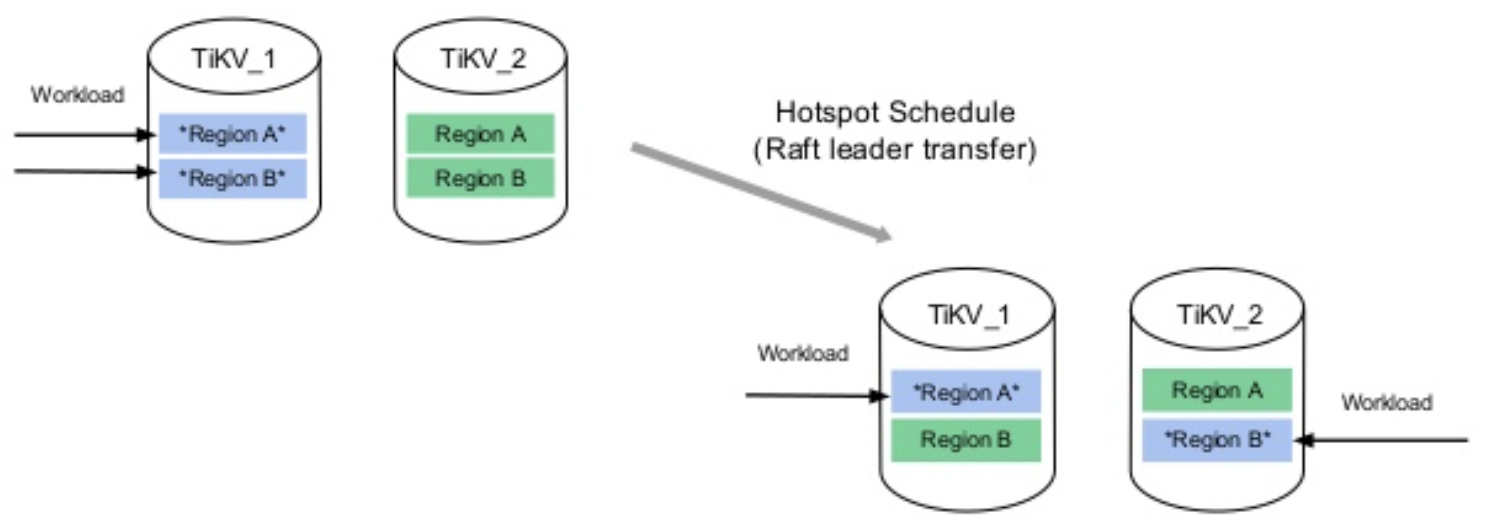
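The division of labor between the SQL layer and the storage coprocessors can be sketched as a push-down count: each storage node filters and counts its own rows, and the SQL layer only adds up the partial results. The region contents, the predicate and the function names here are all hypothetical. This is an illustration of the idea, not the DistSQL API.

```python
from typing import Callable, Dict, List

Row = Dict[str, int]

# Hypothetical rows held by three regions on different storage nodes.
REGION_ROWS: Dict[int, List[Row]] = {
    101: [{"id": 1, "age": 25}, {"id": 2, "age": 31}],
    102: [{"id": 3, "age": 42}],
    103: [{"id": 4, "age": 19}, {"id": 5, "age": 35}],
}


def coprocessor_count(rows: List[Row], predicate: Callable[[Row], bool]) -> int:
    """Runs 'next to the data': filter locally and return only a count."""
    return sum(1 for row in rows if predicate(row))


def sql_layer_count(region_rows: Dict[int, List[Row]], predicate: Callable[[Row], bool]) -> int:
    """Runs in the stateless SQL layer: merge the partial counts."""
    partials = [coprocessor_count(rows, predicate) for rows in region_rows.values()]
    return sum(partials)


# Roughly: SELECT COUNT(*) FROM t WHERE age > 30
print(sql_layer_count(REGION_ROWS, lambda row: row["age"] > 30))
```

Only one integer per region crosses the network instead of every matching row, which is the main reason to compute at the lower level.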

PD ("Placement Driver") - Scheduling

- Hold metadata (data location) and schedule regions in the cluster

- Receive heartbeats (state information) from TiKV peers and region leaders

- Act as timestamp oracle (TSO) for transactions

- Enforce the following scheduling strategies

- The number of replicas of a Region needs to be correct

- Replicas of a Region need to be at different positions

- Replicas and their leaders need to be balanced between stores

- Hot-spots and storage size need to be balanced between stores

- e.g. Region split & merge

- e.g. Hot-spot removal
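The timestamp oracle role listed above can be illustrated with a small sketch. It assumes the commonly described layout in which a timestamp packs a physical clock in milliseconds together with an 18-bit logical counter. The class and method names are hypothetical, and overflow of the logical counter is deliberately not handled.

```python
LOGICAL_BITS = 18  # assumed width of the logical counter


def compose_ts(physical_ms: int, logical: int) -> int:
    """Pack physical milliseconds and a logical counter into one integer."""
    return (physical_ms << LOGICAL_BITS) | logical


def extract_ts(ts: int) -> tuple:
    """Split a timestamp back into (physical_ms, logical)."""
    return ts >> LOGICAL_BITS, ts & ((1 << LOGICAL_BITS) - 1)


class TimestampOracle:
    """Hands out strictly increasing timestamps, even if the clock stalls."""

    def __init__(self) -> None:
        self._physical = 0
        self._logical = 0

    def next_ts(self, now_ms: int) -> int:
        if now_ms > self._physical:
            # The clock moved forward: restart the logical counter.
            self._physical, self._logical = now_ms, 0
        else:
            # Same (or earlier) millisecond: bump the logical part only.
            self._logical += 1
        return compose_ts(self._physical, self._logical)


oracle = TimestampOracle()
first = oracle.next_ts(1_700_000_000_000)
second = oracle.next_ts(1_700_000_000_000)  # same millisecond
third = oracle.next_ts(1_699_999_999_999)   # clock went backwards
print(first < second < third)
print(extract_ts(second))
```

Every transaction gets its start and commit timestamps from this single ordered source, which is what lets storage nodes agree on which versions are visible to whom.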

TiSpark - OLAP Extension [Optional]

- Traditional OLAP solution

- Shim layer built for running Spark on a TiKV cluster

- Taking advantage of Spark SQL Catalyst

TiFlash - OLAP Extension [Optional]

- Brand-new OLAP solution

- Columnar storage with a layer of coprocessors efficiently implemented by ClickHouse

- Data are replicated from TiKV to TiFlash asynchronously, with TiFlash joining as a Raft learner

- Somewhat expensive, and we've already got a real ClickHouse cluster running for 8 months =.=

Part III - Peripheral Tools

TiUP

- A command line tool that manages components in the TiDB cluster

- Deploy / config / start / stop / scale / rolling upgrade with simple commands and YAML files

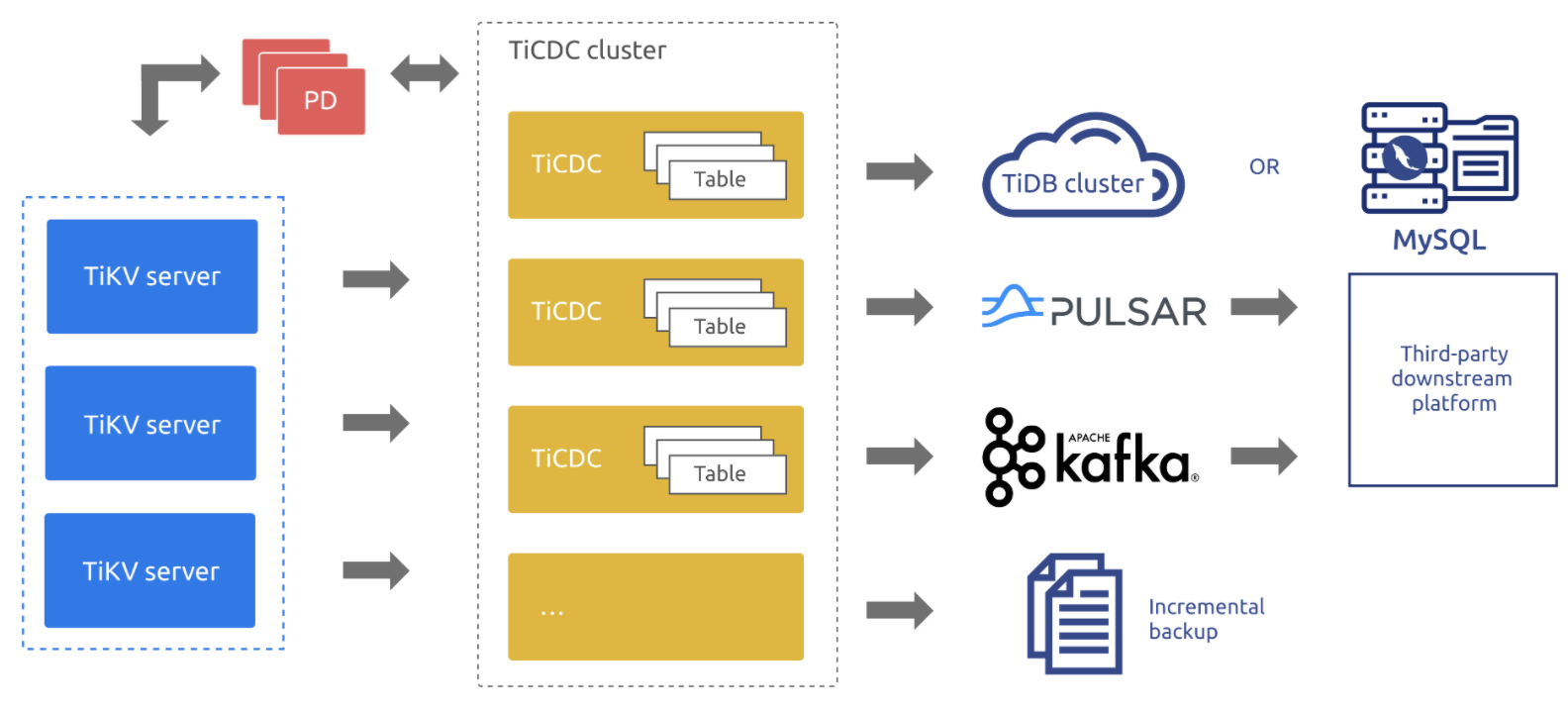

TiCDC

- A tool for replicating incremental data (change data capture) of TiDB

- Lets other downstream systems subscribe to TiDB data changes

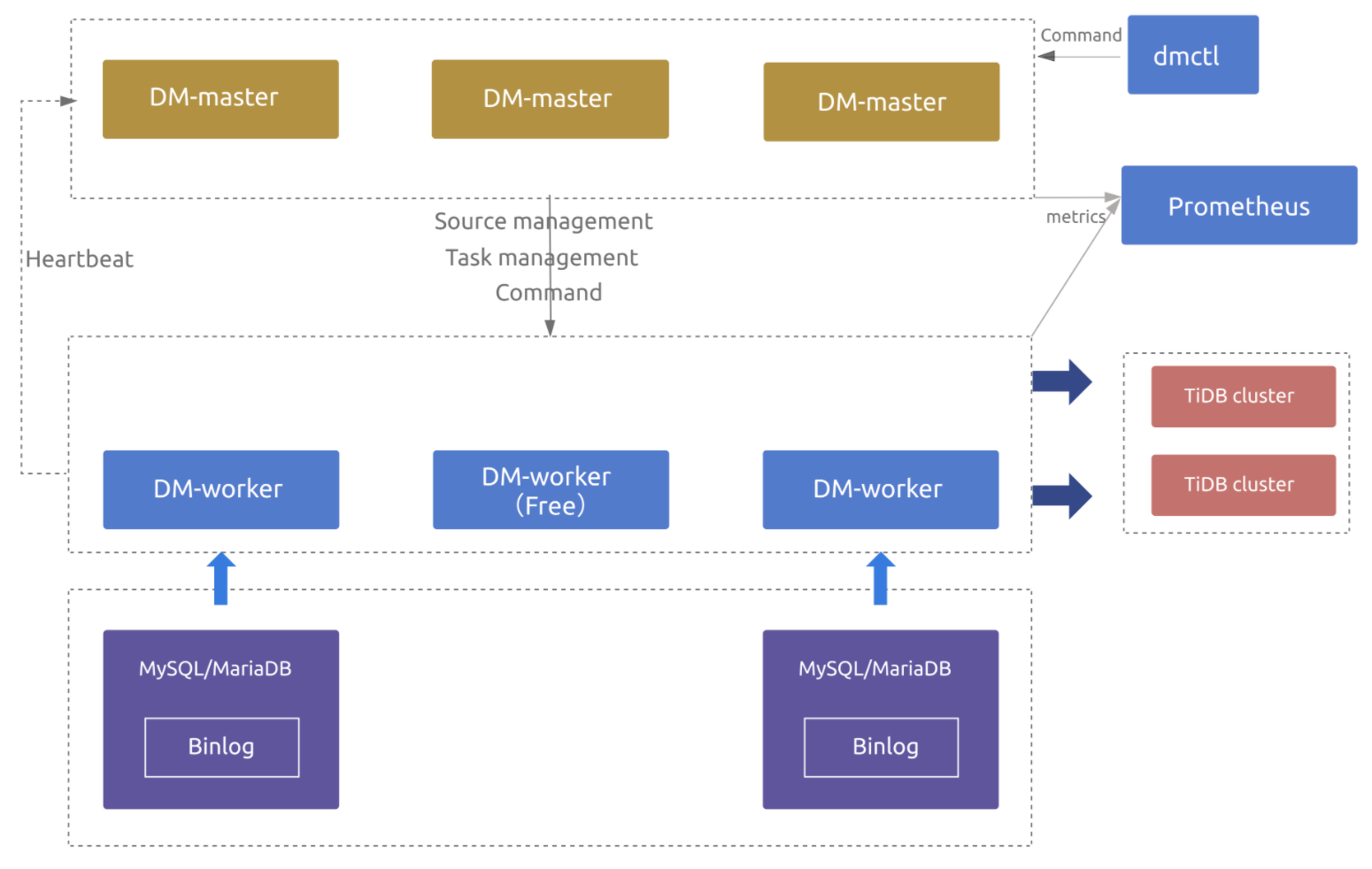

DM (Data Migration)

- An integrated data migration task platform that supports full/incremental data syncing from MySQL/MariaDB into TiDB

- Master-worker style

- Workers act as MySQL slaves to keep track of binlog from sources

- Supports table routing, black/white list, binlog filter & online DDLs

- Supports merging from sharded databases/tables

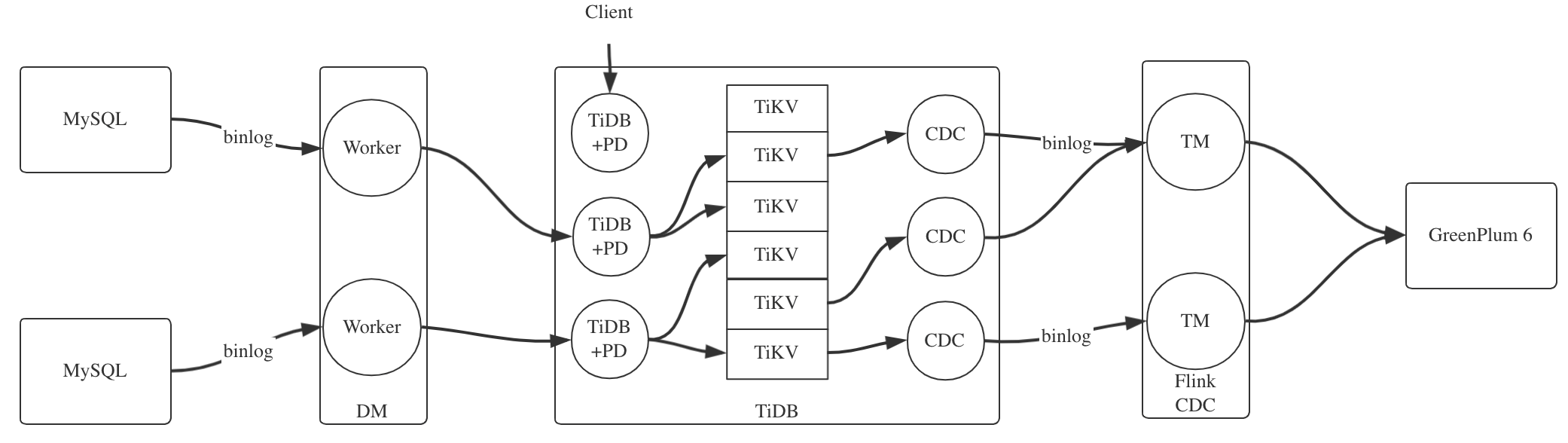

- Our currently proposed route for shard merging & CDC tracking
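The table routing and shard merging features in this section boil down to rewriting source schema/table names into one target table. The sketch below shows that idea with wildcard patterns. The rule fields loosely mirror the schema/table pattern style of DM routing rules, but the rule contents and the `route` helper are hypothetical and do not reproduce DM's actual implementation.

```python
from fnmatch import fnmatchcase
from typing import Dict, List, Tuple

# Hypothetical routing rules: many sharded tables map to one merged table.
ROUTES: List[Dict[str, str]] = [
    {
        "schema_pattern": "shop_*",
        "table_pattern": "orders_*",
        "target_schema": "shop",
        "target_table": "orders",
    },
]


def route(schema: str, table: str) -> Tuple[str, str]:
    """Return the downstream (schema, table) for an upstream table."""
    for rule in ROUTES:
        if fnmatchcase(schema, rule["schema_pattern"]) and fnmatchcase(table, rule["table_pattern"]):
            return rule["target_schema"], rule["target_table"]
    # No rule matched: keep the original name.
    return schema, table


print(route("shop_01", "orders_0007"))
print(route("shop_02", "orders_0123"))
print(route("crm", "users"))
```

When shards are merged like this, auto-increment primary keys from different shards can collide in the target table, so the key design usually needs attention before the migration starts.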

Part IV - Cluster Configuration

- All servers are Aliyun ECSs (type g6e), CentOS 7.3+, 10Gbps network interfaces

- Regular parameter tuning for big data servers (THP, swap, CPU policy, NTP, etc.)

- Use TiUP for deployment

- See https://docs.pingcap.com/tidb/stable/hardware-and-software-requirements for detailed requirements

TiDB & PD

- TiDB & PD can be deployed on the same server

- TiDB requires CPU & memory while PD requires fast I/O

- Minimum 3 instances

- 3 * ecs.g6e.8xlarge [32vCPU / 128GB] & 2 * 1TB SSD

TiKV

- Multiple TiKVs can be deployed on the same server

- Requires CPU & memory with very fast I/O

- Minimum 3 instances (Raft requires at least `n / 2 + 1` nodes functioning)

- 6 * ecs.g6e.13xlarge [52vCPU / 192GB] & 3 * 2TB ESSD PL2

- 3 TiKVs per server = 18 instances total

- See https://docs.pingcap.com/tidb/stable/hybrid-deployment-topology#key-parameters for crucial parameters

- Assign a different disk to each TiKV
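The majority rule and the instance count quoted in this section work out as follows. This is plain arithmetic under the `n / 2 + 1` rule (with integer division), and the helper names are only for illustration.

```python
def quorum(replicas: int) -> int:
    """Smallest number of replicas that forms a Raft majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas: int) -> int:
    """How many replicas can be down while a majority still exists."""
    return replicas - quorum(replicas)


for n in (3, 5):
    print(n, "replicas: quorum", quorum(n), "tolerates", tolerated_failures(n), "failure(s)")

# Cluster sizing from the list above: 6 servers with 3 TiKV instances each.
servers, tikv_per_server = 6, 3
print("TiKV instances:", servers * tikv_per_server)
```

With the default of 3 replicas a region survives the loss of one replica. Since several TiKV instances share a server here, replicas of one region should not be placed on the same host, which is what the key parameters linked above are meant to address.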

TiCDC

- Minimum 2 instances

- 3 * ecs.g6e.4xlarge [16vCPU / 64GB] & 1TB SSD

DM

- DM master & worker can be deployed on the same server

- 3 * ecs.g6e.4xlarge [16vCPU / 64GB] & 1TB HDD

- When dumping data, make sure that the disk has enough free space to hold them

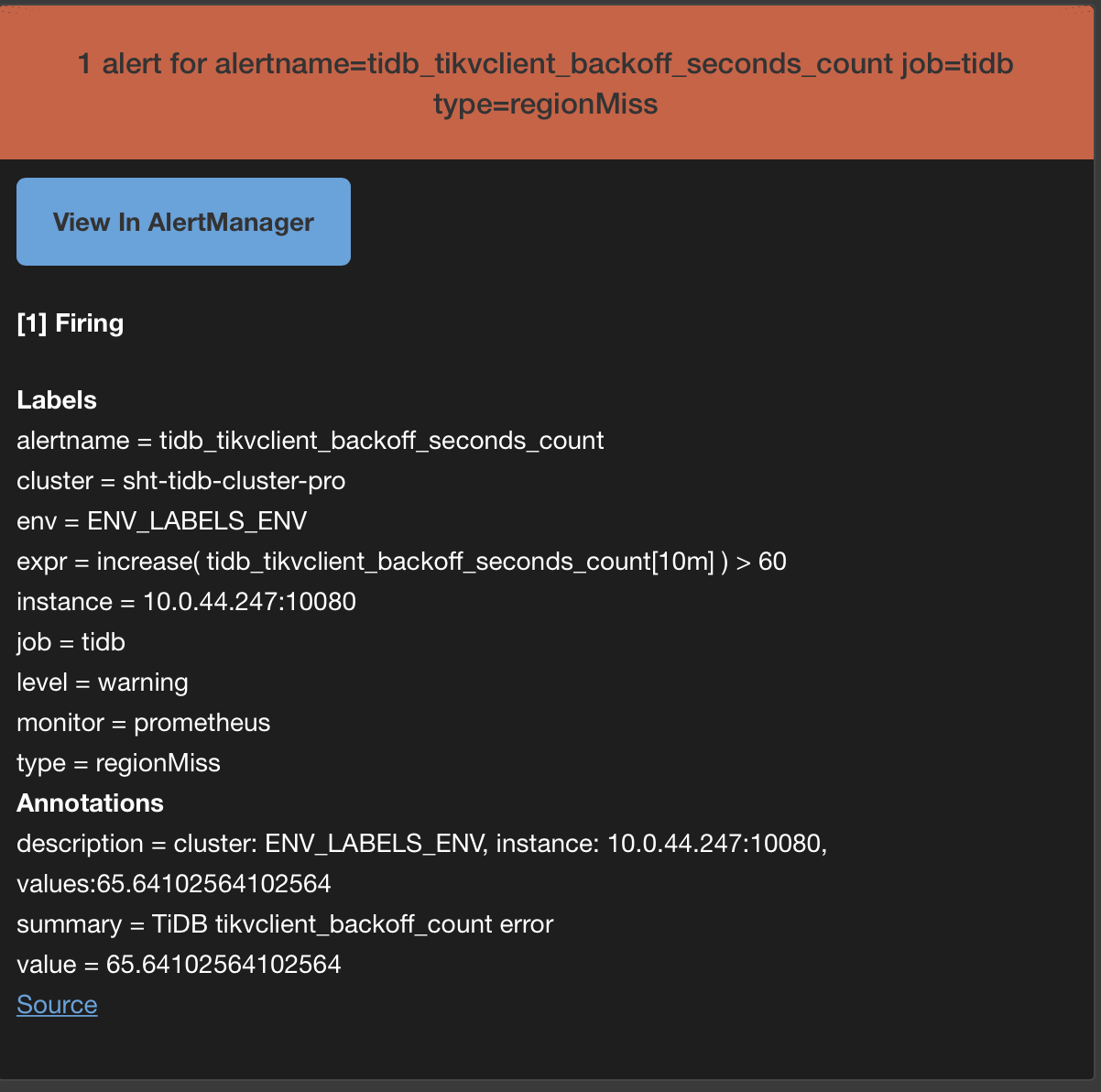

Monitoring

- Including Prometheus, Grafana & AlertManager

- 1 * ecs.g6e.4xlarge [16vCPU / 64GB] & 500GB SSD

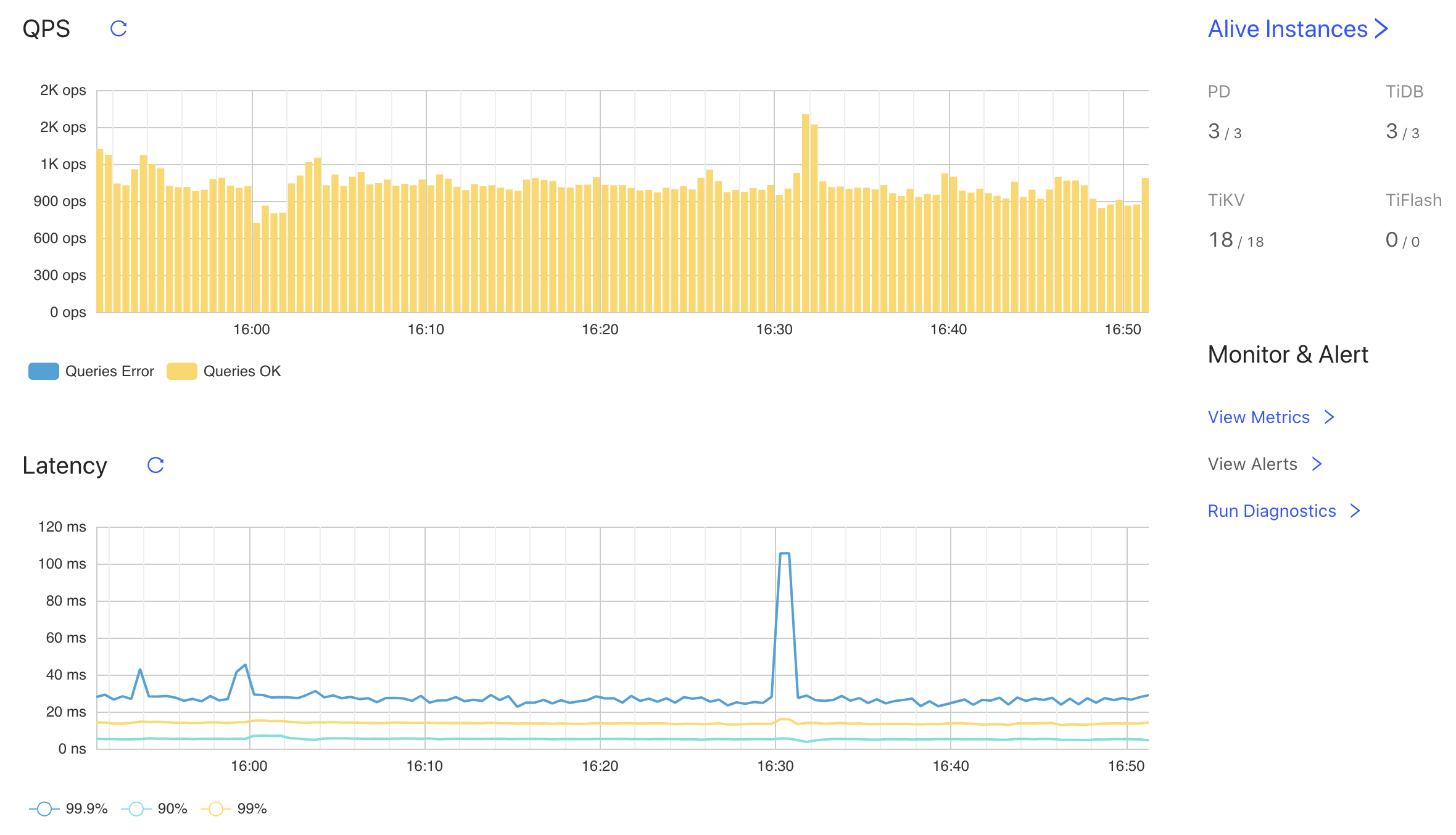

Part V - Operation & Monitoring

TiDB Dashboard

- Overview

- Query summary

[Omitted here because it involves confidential data]

- Query details

[Omitted here because it involves confidential data]

- Key visualization

[Omitted here because it involves confidential data]

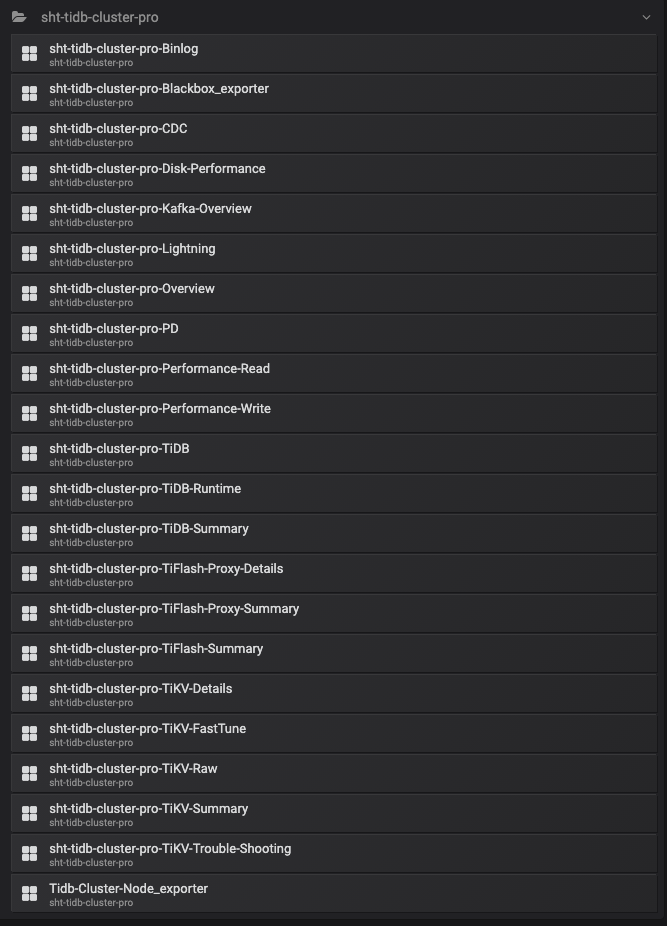

Grafana Dashboards (w/ Prometheus)

Alerting (by Mail)

Part VI - Examples, Q&A

- Client connection

- Dashboards

- Monitoring

- DM tasks

- ...

The End