12. Densely Connected Networks (DenseNet)

The cross-layer connections in ResNet inspired several follow-up designs, and the densely connected network (DenseNet) is one of them.

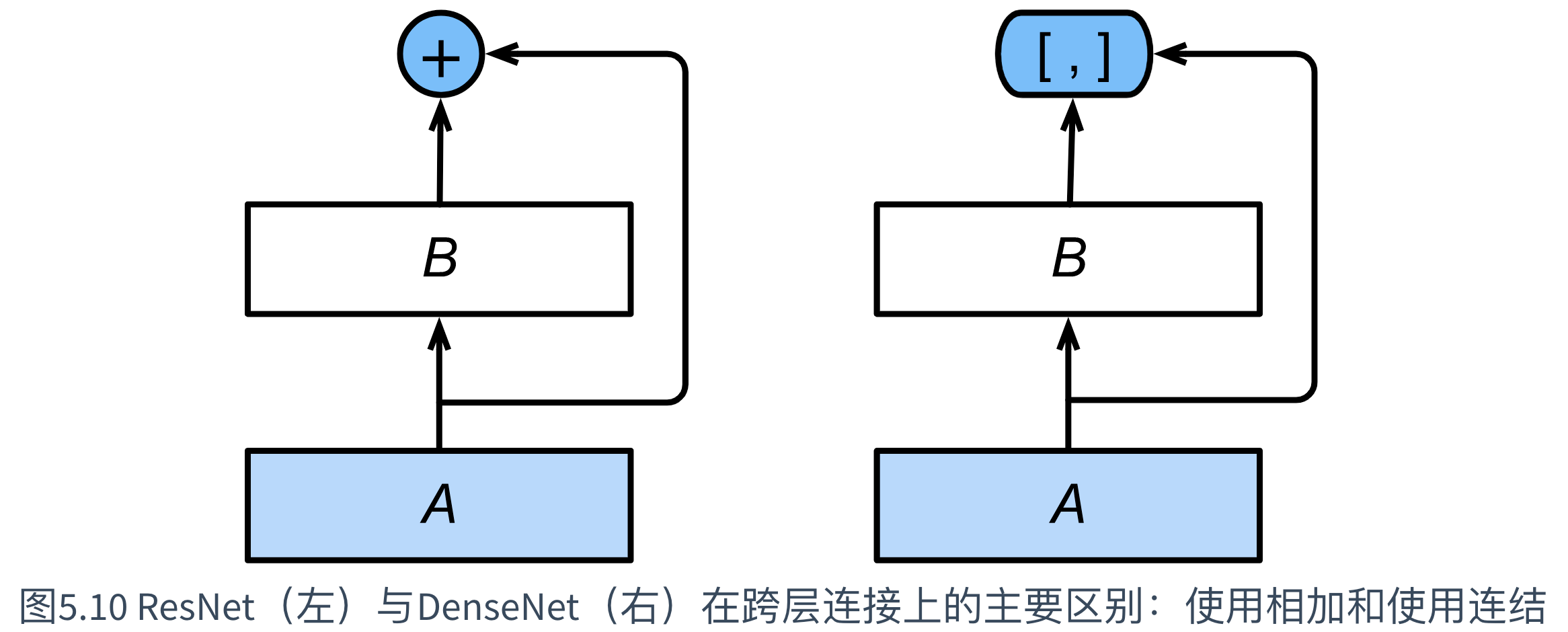

DenseNet与ResNet的主要区别,如下图所示:

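In the figure, some adjacent operations are abstracted as module A and module B.

The key difference from ResNet is this: in DenseNet, the output of module B is not added to the output of module A, as it is in ResNet. Instead, the two are concatenated along the channel dimension.

As a result, the output of module A is passed directly to the layers after module B.

In this design, module A is directly connected to every layer after module B, which is why the architecture is called "densely connected".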
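Here is a minimal NumPy sketch of the difference. Random arrays stand in for the output of module A and for the two kinds of branch. The names x, f_x, g_x and all sizes are illustrative assumptions. The arrays use the channels-last layout (N, H, W, C), matching the TensorFlow code in this chapter:

```python
import numpy as np

# Toy feature maps in channels-last layout (N, H, W, C); sizes are arbitrary.
x = np.random.rand(1, 4, 4, 3)    # output of "module A"
f_x = np.random.rand(1, 4, 4, 3)  # ResNet branch: must match x's channels to be added
g_x = np.random.rand(1, 4, 4, 5)  # DenseNet branch: any channel count works

resnet_out = x + f_x                              # element-wise addition
densenet_out = np.concatenate([x, g_x], axis=-1)  # concatenation on the channel axis

print(resnet_out.shape)    # (1, 4, 4, 3)
print(densenet_out.shape)  # (1, 4, 4, 8)

# The first 3 channels of the concatenated output are exactly x,
# so later layers can still read module A's output unchanged.
assert np.array_equal(densenet_out[..., :3], x)
```

Because concatenation leaves x intact, every later layer keeps direct access to module A's output. The cost is that the channel count keeps growing.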

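The main building blocks of DenseNet are dense blocks and transition layers.

Dense blocks define how inputs and outputs are concatenated. Transition layers control the number of channels so that it does not grow too large.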

12.1 Dense Blocks

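DenseNet uses the modified "batch normalization, activation, and convolution" ordering from the improved version of ResNet. This structure is implemented in the BottleNeck class below. The class applies a 1×1 convolution with 4 × growth_rate output channels, followed by a 3×3 convolution with growth_rate output channels and then dropout: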

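```python
import tensorflow as tf
from tensorflow.keras.layers import (Layer, BatchNormalization, Conv2D, Dropout,
                                     Activation, concatenate)


class BottleNeck(Layer):
    """BN -> ReLU -> 1x1 conv -> BN -> ReLU -> 3x3 conv -> dropout,
    then concatenate the input with the new features along the channel axis."""

    def __init__(self, growth_rate, drop_rate):
        super(BottleNeck, self).__init__()
        self.bn1 = BatchNormalization()
        # 1x1 "bottleneck" convolution with 4 * growth_rate output channels
        self.conv1 = Conv2D(filters=4 * growth_rate, kernel_size=1, padding="same", strides=1)
        self.bn2 = BatchNormalization()
        # 3x3 convolution producing growth_rate new channels
        self.conv2 = Conv2D(filters=growth_rate, kernel_size=3, padding="same", strides=1)
        self.dropout = Dropout(rate=drop_rate)
        self.listLayers = [self.bn1, Activation("relu"), self.conv1,
                           self.bn2, Activation("relu"), self.conv2,
                           self.dropout]

    def call(self, x):
        y = x
        for layer in self.listLayers:  # iterate over the plain list of layers
            y = layer(y)
        # Concatenate input and output on the last (channel) axis
        y = concatenate([x, y], axis=-1)
        return y
```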

In the forward pass, each block's input and output are concatenated along the channel dimension.
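The pure-Python sketch below makes the channel flow inside one BottleNeck concrete. It traces the channel counts and counts the weights and biases of the two Conv2D layers, assuming Keras' default use_bias=True. The helper names are hypothetical, and BatchNormalization parameters are deliberately left out of the count:

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weights + biases of a square Conv2D layer with use_bias=True."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


def bottleneck_trace(in_channels, growth_rate):
    """Return (1x1 conv output channels, BottleNeck output channels, conv params).

    BatchNormalization parameters are not counted."""
    mid_channels = 4 * growth_rate                      # 1x1 conv: 4 * growth_rate channels
    params = (conv_params(1, in_channels, mid_channels)
              + conv_params(3, mid_channels, growth_rate))  # 3x3 conv: growth_rate channels
    out_channels = in_channels + growth_rate            # input concatenated with new features
    return mid_channels, out_channels, params


print(bottleneck_trace(3, 10))        # (40, 13, 3770)
print(bottleneck_trace(1024, 32)[2])  # 168096
print(conv_params(3, 1024, 32))       # 294944: one 3x3 conv applied directly
```

By this count, the bottleneck costs more than a single direct 3×3 convolution when the input is narrow. It costs less when the input is wide, such as 1024 channels with growth rate 32. Layers late in a dense block see exactly these wide, concatenated inputs.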

A dense block consists of several BottleNeck layers, each of which produces the same number of output channels:

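```python
class DenseBlock(Layer):
    """A stack of num_layers BottleNeck layers; each one appends growth_rate channels."""

    def __init__(self, num_layers, growth_rate, drop_rate=0.5):
        super(DenseBlock, self).__init__()
        self.num_layers = num_layers
        self.growth_rate = growth_rate
        self.drop_rate = drop_rate
        self.listLayers = []
        for _ in range(num_layers):
            self.listLayers.append(BottleNeck(growth_rate=self.growth_rate,
                                              drop_rate=self.drop_rate))

    def call(self, x):
        # Each BottleNeck returns its input concatenated with its new features,
        # so the next layer sees the block input plus all earlier outputs.
        for layer in self.listLayers:
            x = layer(x)
        return x
```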
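The connectivity pattern of DenseBlock can be imitated in NumPy. In this sketch, toy_layer is a hypothetical stand-in for BottleNeck's convolutions: a random 1×1 projection, i.e. a matrix product over the channel axis, followed by ReLU. Only the concatenation pattern mirrors the TensorFlow code:

```python
import numpy as np

rng = np.random.default_rng(0)


def toy_layer(x, growth_rate):
    """Hypothetical stand-in for BottleNeck's conv stack: random 1x1 projection + ReLU."""
    kernel = rng.standard_normal((x.shape[-1], growth_rate))
    return np.maximum(x @ kernel, 0.0)  # (N, H, W, C) @ (C, k) -> (N, H, W, k)


def toy_dense_block(x, num_layers, growth_rate):
    """Each layer sees the block input plus every earlier layer's output."""
    for _ in range(num_layers):
        y = toy_layer(x, growth_rate)
        x = np.concatenate([x, y], axis=-1)
        print(x.shape)
    return x


out = toy_dense_block(np.ones((4, 8, 8, 3)), num_layers=2, growth_rate=10)
# prints (4, 8, 8, 13), then (4, 8, 8, 23)
```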

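Example: define a dense block with 2 convolution blocks, each with 10 output channels.

With a 3-channel input, the output has 3 + 2×10 = 23 channels.

The number of channels of the convolution blocks controls how fast the number of output channels grows relative to the number of input channels. For this reason it is also called the growth rate.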
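Stated as a formula: suppose a dense block contains $L$ convolution blocks with growth rate $k$ and receives $c_{\text{in}}$ input channels. Then the $\ell$-th convolution block inside it receives $c_{\text{in}} + (\ell - 1)k$ channels, and the block as a whole outputs

$$c_{\text{out}} = c_{\text{in}} + L k .$$

For the example, $c_{\text{in}} = 3$, $L = 2$ and $k = 10$, so $c_{\text{out}} = 3 + 2 \times 10 = 23$.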

```python
blk = DenseBlock(2, 10)
X = tf.random.uniform((4, 8, 8, 3))
Y = blk(X)
print(Y.shape)
```

```
(4, 8, 8, 23)
```

12.2 Transition Layers

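Every dense block increases the number of channels, so stacking too many of them produces an overly complex model.

Transition layers are used to control model complexity.

A transition layer reduces the number of channels with a 1×1 convolution and halves the height and width with a stride-2 average pooling layer, which further reduces model complexity.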
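The following NumPy sketch shows the arithmetic of a transition layer. BatchNormalization and the convolution bias are left out, and the random 1×1 kernel is an illustrative assumption. Under these simplifications, the 1×1 convolution becomes a matrix product over the channel axis, and the stride-2 average pooling becomes a reshape followed by a mean:

```python
import numpy as np


def avg_pool_2x2(x):
    """2x2 average pooling with stride 2 on (N, H, W, C); assumes even H and W."""
    n, h, w, c = x.shape
    return x.reshape(n, h // 2, 2, w // 2, 2, c).mean(axis=(2, 4))


def toy_transition(x, out_channels, seed=0):
    """Sketch of a transition layer: ReLU -> 1x1 conv -> 2x2 average pooling.

    BatchNormalization and the conv bias are omitted; the 1x1 kernel is random."""
    kernel = np.random.default_rng(seed).standard_normal((x.shape[-1], out_channels))
    x = np.maximum(x, 0.0) @ kernel  # a 1x1 conv is a per-pixel matmul over channels
    return avg_pool_2x2(x)


y = toy_transition(np.random.rand(4, 8, 8, 23), out_channels=10)
print(y.shape)  # (4, 4, 4, 10)

a = np.arange(16.0).reshape(1, 4, 4, 1)
pooled = avg_pool_2x2(a)[0, :, :, 0]  # means of each 2x2 window: 2.5, 4.5, 10.5, 12.5
```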

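```python
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.activations import relu


class TransitionLayer(Layer):
    """BN -> ReLU -> 1x1 conv (reduce channels) -> 2x2 average pooling with stride 2."""

    def __init__(self, out_channels):
        super(TransitionLayer, self).__init__()
        self.bn = BatchNormalization()
        # 1x1 convolution sets the number of output channels
        self.conv = Conv2D(filters=out_channels, kernel_size=1, padding="same", strides=1)
        # Stride-2 average pooling halves the height and width
        self.pool = AveragePooling2D(pool_size=(2, 2), padding="same", strides=2)

    def call(self, inputs):
        x = self.bn(inputs)
        x = relu(x)
        x = self.conv(x)
        x = self.pool(x)
        return x
```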

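Apply a transition layer with 10 output channels to the output of the dense block from the previous example.

The number of output channels drops to 10, and the height and width are both halved.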

```python
blk = TransitionLayer(10)
blk(Y).shape
```

```
TensorShape([4, 4, 4, 10])
```

12.3 The DenseNet Model

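DenseNet begins with the same single convolutional layer and max pooling layer as ResNet.

ResNet then uses 4 modules of residual blocks. In their place, DenseNet uses 4 dense blocks.

As with ResNet, we can choose how many convolutional layers each dense block uses. Here it is set to 4, consistent with the ResNet-18 from the previous section.

The number of channels of the convolutional layers in a dense block (the growth rate) is set to 32, so each dense block adds 4 × 32 = 128 channels.

ResNet reduces the height and width between modules with a stride-2 residual block. DenseNet instead places a transition layer between consecutive dense blocks. The transition layer halves the height and width and, with compression_rate=0.5, also halves the number of channels.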
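The channel and spatial bookkeeping can be checked with a small pure-Python sketch. It assumes a 96×96 input and the hyperparameters passed by densenet(). It also assumes "same" padding, so every stride-2 layer produces ⌈size/2⌉. The function name densenet_shapes is hypothetical:

```python
def densenet_shapes(input_hw=96, num_init_features=64, growth_rate=32,
                    block_layers=(4, 4, 4, 4), compression_rate=0.5):
    """Trace (stage name, height/width, channels) through the DenseNet stages."""
    def halve(size):
        return -(-size // 2)  # ceil(size / 2), the output size of a stride-2 "same" layer

    hw = halve(halve(input_hw))  # 7x7 conv with stride 2, then 3x3 max pool with stride 2
    c = num_init_features
    trace = [("stem", hw, c)]
    for i, n in enumerate(block_layers):
        c += n * growth_rate  # dense block: appends n * growth_rate channels
        trace.append((f"dense_block_{i + 1}", hw, c))
        if i < len(block_layers) - 1:  # no transition after the last dense block
            c = int(compression_rate * c)  # transition: compress channels
            hw = halve(hw)                 # ...and halve height and width
            trace.append((f"transition_{i + 1}", hw, c))
    return trace


for name, hw, c in densenet_shapes():
    print(name, hw, c)
```

The channel counts it produces (64, 192, 96, 224, 112, 240, 120, 248) and the spatial sizes (24, 12, 6, 3) agree with the per-layer output shapes printed at the end of this section.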

```python
from tensorflow.keras import Model
from tensorflow.keras.layers import MaxPool2D, GlobalAvgPool2D, Dense
from tensorflow.keras.activations import relu, softmax


class DenseNet(Model):
    def __init__(self, num_init_features, growth_rate, block_layers, compression_rate, drop_rate):
        super(DenseNet, self).__init__()
        # Stem, as in ResNet: 7x7 conv (stride 2) + BN + ReLU + 3x3 max pooling (stride 2)
        self.conv = Conv2D(filters=num_init_features, kernel_size=7, padding="same", strides=2)
        self.bn = BatchNormalization()
        self.pool = MaxPool2D(pool_size=(3, 3), padding="same", strides=2)

        # Track the channel count: each dense block adds growth_rate * num_layers channels,
        # and each transition layer multiplies the count by compression_rate.
        self.num_channels = num_init_features
        self.dense_block_1 = DenseBlock(num_layers=block_layers[0], growth_rate=growth_rate, drop_rate=drop_rate)
        self.num_channels += growth_rate * block_layers[0]
        self.num_channels = int(compression_rate * self.num_channels)
        self.transition_1 = TransitionLayer(out_channels=self.num_channels)

        self.dense_block_2 = DenseBlock(num_layers=block_layers[1], growth_rate=growth_rate, drop_rate=drop_rate)
        self.num_channels += growth_rate * block_layers[1]
        self.num_channels = int(compression_rate * self.num_channels)
        self.transition_2 = TransitionLayer(out_channels=self.num_channels)

        self.dense_block_3 = DenseBlock(num_layers=block_layers[2], growth_rate=growth_rate, drop_rate=drop_rate)
        self.num_channels += growth_rate * block_layers[2]
        self.num_channels = int(compression_rate * self.num_channels)
        self.transition_3 = TransitionLayer(out_channels=self.num_channels)

        # The last dense block is not followed by a transition layer
        self.dense_block_4 = DenseBlock(num_layers=block_layers[3], growth_rate=growth_rate, drop_rate=drop_rate)

        # Classification head: global average pooling + 10-way softmax
        self.avgpool = GlobalAvgPool2D()
        self.fc = Dense(units=10, activation=softmax)

    def call(self, inputs):
        x = self.conv(inputs)
        x = self.bn(x)
        x = relu(x)
        x = self.pool(x)
        x = self.dense_block_1(x)
        x = self.transition_1(x)
        x = self.dense_block_2(x)
        x = self.transition_2(x)
        x = self.dense_block_3(x)
        x = self.transition_3(x)
        x = self.dense_block_4(x)
        x = self.avgpool(x)
        x = self.fc(x)
        return x
```

```python
def densenet():
    return DenseNet(num_init_features=64, growth_rate=32, block_layers=[4, 4, 4, 4],
                    compression_rate=0.5, drop_rate=0.5)


mynet = densenet()
```

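Output shape of each submodule:

```python
X = tf.random.uniform(shape=(1, 96, 96, 1))
# The stem's ReLU is a plain function rather than a layer, so this loop skips it;
# ReLU does not change the shape.
for layer in mynet.layers:
    X = layer(X)
    print(layer.name, 'output shape: ', X.shape)
```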

```
conv2d_81 output shape: (1, 48, 48, 64)
batch_normalization_81 output shape: (1, 48, 48, 64)
max_pooling2d_9 output shape: (1, 24, 24, 64)
dense_block_10 output shape: (1, 24, 24, 192)
transition_layer_7 output shape: (1, 12, 12, 96)
dense_block_11 output shape: (1, 12, 12, 224)
transition_layer_8 output shape: (1, 6, 6, 112)
dense_block_12 output shape: (1, 6, 6, 240)
transition_layer_9 output shape: (1, 3, 3, 120)
dense_block_13 output shape: (1, 3, 3, 248)
global_average_pooling2d output shape: (1, 248)
dense output shape: (1, 10)
```

References

Dive into Deep Learning (TF2.0 edition).

A. Krizhevsky, I. Sutskever, and G. Hinton. ImageNet classification with deep convolutional neural networks. In NIPS, 2012.