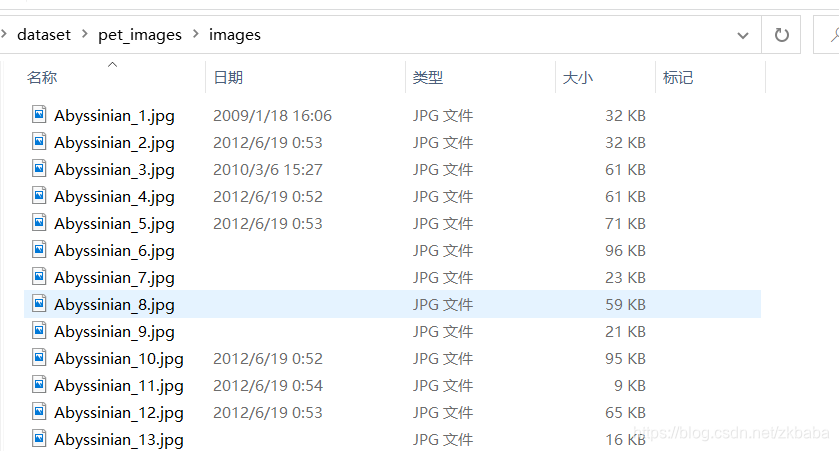

Dataset path:

Define the model

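The model used here is a modified U-Net. A U-Net consists of an encoder (downsampler) and a decoder (upsampler). To learn robust features while reducing the number of trainable parameters, a pretrained model can serve as the encoder. The encoder for this task is therefore a pretrained MobileNetV2 model, whose intermediate outputs are used as features. The decoder uses the upsample block already implemented in the Pix2pix tutorial in TensorFlow Examples.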
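To make the encoder/decoder pairing concrete, the following is a minimal sketch that only tracks feature-map sizes; it does not build a network. It assumes a 128x128 input and encoder taps at strides 2, 4, 8, 16 and 32, which matches the 64x64 through 4x4 sizes noted in the script's comments. The function name `trace_unet_resolutions` is hypothetical and exists only for this illustration.

```python
def trace_unet_resolutions(input_size=128, encoder_strides=(2, 4, 8, 16, 32)):
    """Trace feature-map sizes through a U-Net with the given encoder strides.

    Returns (skip_sizes, decoder_sizes, output_size).
    """
    # Encoder: each tapped layer sees the input downsampled by its stride.
    encoder_sizes = [input_size // s for s in encoder_strides]

    # The deepest feature map is the bottleneck; the others become skip
    # connections, consumed from deepest to shallowest.
    size = encoder_sizes[-1]
    skip_sizes = list(reversed(encoder_sizes[:-1]))

    decoder_sizes = []
    for skip in skip_sizes:
        size *= 2  # each upsample block doubles height and width
        if size != skip:
            raise ValueError(f"cannot concatenate {size}x{size} with {skip}x{skip}")
        decoder_sizes.append(size)

    # A final stride-2 transposed convolution restores the input resolution.
    output_size = size * 2
    return skip_sizes, decoder_sizes, output_size


skips, decoder, output = trace_unet_resolutions()
print(skips, decoder, output)
```

Each upsampled map has the same height and width as the skip connection it is concatenated with, which is why four upsample blocks plus one final transposed convolution are enough to get from 4x4 back to 128x128.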

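The number of output channels is 3 because each pixel has three possible labels. Think of this as multi-class classification in which every pixel is assigned to one of three classes.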
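As a rough illustration of what three output channels mean, the sketch below applies a softmax to the three scores of each pixel in a made-up 2x2 image, using only the standard library. The score values are invented for the example, and the helper `softmax` is written here by hand rather than taken from any library.

```python
import math


def softmax(scores):
    """Convert the raw per-class scores of one pixel into probabilities."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 2x2 image: every pixel carries 3 raw scores, one per class.
scores = [
    [[2.0, 0.5, 0.1], [0.1, 3.0, 0.2]],
    [[0.3, 0.2, 1.5], [1.0, 1.0, 1.0]],
]

# Shape stays (height, width, 3); each pixel's 3 values now sum to 1.
probs = [[softmax(pixel) for pixel in row] for row in scores]

for row in probs:
    for pixel in row:
        print([round(p, 3) for p in pixel])
```

A pixel with equal scores for all three classes ends up with a probability of one third per class, so the network expresses no preference for that pixel.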

Train the model

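All that remains is to compile and train the model. The loss function used here is losses.sparse_categorical_crossentropy. It fits because the network is trying to assign a label to every pixel, which is the same as multi-class prediction. In the ground-truth segmentation mask, each pixel has a value in {0, 1, 2}, and the network outputs three channels. In essence, each channel learns to predict one class, and losses.sparse_categorical_crossentropy is the recommended loss for this situation. Given the network's output, the label assigned to each pixel is the class of the channel with the highest value. This is what the create_mask function does.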
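The two ideas in this paragraph can be worked through by hand on a tiny example. The sketch below is plain Python, not the Keras implementation: `pixel_crossentropy` and `argmax_mask` are hypothetical helpers, the probabilities are made up, and the loss is averaged over pixels on the assumption that a mean reduction is used.

```python
import math


def pixel_crossentropy(true_mask, pred_probs):
    """Mean of -log(probability of the true class) over all pixels."""
    total, n = 0.0, 0
    for label_row, prob_row in zip(true_mask, pred_probs):
        for label, probs in zip(label_row, prob_row):
            total += -math.log(probs[label])
            n += 1
    return total / n


def argmax_mask(pred_probs):
    """Label each pixel with the index of its highest-valued channel."""
    return [[max(range(len(p)), key=lambda c: p[c]) for p in row]
            for row in pred_probs]


# Hypothetical 2x2 ground-truth mask with integer labels in {0, 1, 2}.
true_mask = [[0, 1], [2, 2]]

# Hypothetical per-pixel class probabilities, shape (2, 2, 3).
pred_probs = [
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
    [[0.2, 0.2, 0.6], [0.5, 0.3, 0.2]],
]

loss = pixel_crossentropy(true_mask, pred_probs)
predicted = argmax_mask(pred_probs)
print(round(loss, 4), predicted)
```

The bottom-right pixel is predicted as class 0 although its true label is 2, and it alone contributes -log(0.2) to the sum, the largest term of the four. The "sparse" variant of the loss is what allows the labels to be plain integers rather than one-hot vectors.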

# -*- coding: utf-8 -*-
"""
Created on Thu Jul 30 18:51:35 2020

@author: YZK
"""
import tensorflow as tf
print(tf.__version__)

import numpy as np
import os
from IPython.display import clear_output
from PIL import Image
import matplotlib.pyplot as plt

# pix2pix is expected on the import path; it provides the upsample() block
import pix2pix

samples_all_number = 1000  # maximum number of samples to load
save_dir = './log'
count = 0

if not os.path.exists(save_dir):
    os.mkdir(save_dir)

# Load an original image from disk

def load_tensor_from_file(img_file):
    img = Image.open(img_file)
    sample_image = np.array(img)
    # Sample images come in different formats; convert grayscale and RGBA
    # images to RGB, otherwise an exception is raised
    if len(sample_image.shape) != 3 or sample_image.shape[2] == 4:
        img = img.convert("RGB")
        sample_image = np.array(img)
    sample_image = tf.image.resize(sample_image, [128, 128])
    return sample_image

# Load an annotation (mask) image from disk

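def load_ann_from_file(img_file):
    sample_image = tf.image.decode_image(tf.io.read_file(img_file))
    # Annotation images come in different formats; convert anything that is
    # not single-channel to grayscale, otherwise an exception is raised
    if sample_image.shape[2] != 1:
        img = Image.open(img_file)
        img = img.convert("L")  # convert to grayscale
        # Restore the channel axis: tf.image.resize needs a 3-D or 4-D input
        sample_image = np.array(img)[..., np.newaxis]
    # Nearest-neighbor resizing keeps label values intact (no blended labels)
    sample_image = tf.image.resize(sample_image, [128, 128], method="nearest")
    return tf.cast(sample_image, tf.float32)

# Load the images, split them into training and validation sets, and
# return both datasets together with their sample counts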

def load(img_path):
    trainImageList = []
    valImageList = []
    path = img_path + "/images"
    # Sorted so that images and annotations pair up (assumes matching file names)
    files = sorted(os.listdir(path))
    cnt = 0
    for imgFile in files:
        file = path + "/" + imgFile
        if os.path.isdir(file):
            continue
        print("load image ", file)
        cnt += 1
        img = load_tensor_from_file(file)
        img = tf.squeeze(img)
        # Hold out every 8th image for the validation set
        if cnt % 8 == 0:
            valImageList.append(img)
        else:
            trainImageList.append(img)
        # Load at most 1000 samples: the machine is limited, and loading
        # more causes an OOM error
        if cnt >= samples_all_number:
            break

    trainAnnList = []
    valAnnList = []
    path = img_path + "/ann"
    files = sorted(os.listdir(path))
    cnt = 0
    for imgFile in files:
        file = path + "/" + imgFile
        if os.path.isdir(file):
            continue
        print("load image ", file)
        img = load_ann_from_file(file)
        cnt += 1
        if cnt % 8 == 0:
            valAnnList.append(img)
        else:
            trainAnnList.append(img)
        # Same 1000-sample limit as for the images, to avoid the OOM error
        if cnt >= samples_all_number:
            break

    train_num = len(trainImageList)
    val_num = len(valImageList)

    x = tf.convert_to_tensor(trainImageList, dtype=tf.float32)
    y = tf.convert_to_tensor(trainAnnList, dtype=tf.float32)
    dataset_train = tf.data.Dataset.from_tensor_slices((x, y))

    x_val = tf.convert_to_tensor(valImageList, dtype=tf.float32)
    y_val = tf.convert_to_tensor(valAnnList, dtype=tf.float32)
    dataset_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))

    return dataset_train, train_num, dataset_val, val_num

train_dataset, train_num, val_dataset, val_num = load("D:/dataset/pet_images")

def normalize(input_image, input_mask):
    input_image = tf.cast(input_image, tf.float32) / 128.0 - 1
    # How the mask is converted depends on the annotation data. For images
    # labeled with the labelme tool, the mask colors must be converted to
    # class-label indices, otherwise loss = nan
    input_mask -= 1
    return input_image, input_mask

@tf.function

def load_image_train(x, y):
    input_image = tf.image.resize(x, (128, 128))
    # Nearest-neighbor resizing keeps mask values as valid class labels
    input_mask = tf.image.resize(y, (128, 128), method="nearest")

    # Random horizontal flip, applied to image and mask together
    if tf.random.uniform(()) > 0.5:
        input_image = tf.image.flip_left_right(input_image)
        input_mask = tf.image.flip_left_right(input_mask)

    input_image, input_mask = normalize(input_image, input_mask)
    return input_image, input_mask

def load_image_test(x, y):
    input_image = tf.image.resize(x, (128, 128))
    input_mask = tf.image.resize(y, (128, 128), method="nearest")
    input_image, input_mask = normalize(input_image, input_mask)
    return input_image, input_mask

TRAIN_LENGTH = train_num

# Adjust BATCH_SIZE to suit the GPU
BATCH_SIZE = 16  # 64
BUFFER_SIZE = 1000
# One epoch is one pass over the training set, counted in batches
STEPS_PER_EPOCH = max(1, TRAIN_LENGTH // BATCH_SIZE)

train = train_dataset.map(load_image_train, num_parallel_calls=tf.data.experimental.AUTOTUNE)
val_dataset = val_dataset.map(load_image_test)

train_dataset = train.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()
train_dataset = train_dataset.prefetch(buffer_size=tf.data.experimental.AUTOTUNE)
val_dataset = val_dataset.batch(BATCH_SIZE)

def display(display_list):
    global count
    count = count + 1
    plt.figure(figsize=(15, 15))
    title = ['Input Image', 'True Mask', 'Predicted Mask']
    for i in range(len(display_list)):
        plt.subplot(1, len(display_list), i + 1)
        plt.title(title[i])
        plt.imshow(tf.keras.preprocessing.image.array_to_img(display_list[i]))
        plt.axis('off')
    # Save every figure to the log directory before showing it
    save_file = save_dir + "/count_%d.jpg" % count
    plt.savefig(save_file)
    plt.show()

np.set_printoptions(threshold=128*128)

for image, mask in train.take(1):
    sample_image, sample_mask = image, mask
    print(tf.reduce_min(mask), tf.reduce_max(mask), tf.reduce_mean(mask))
display([sample_image, sample_mask])

OUTPUT_CHANNELS = 3

base_model = tf.keras.applications.MobileNetV2(input_shape=[128, 128, 3], include_top=False)

layer_names = [
    'block_1_expand_relu',   # 64x64
    'block_3_expand_relu',   # 32x32
    'block_6_expand_relu',   # 16x16
    'block_13_expand_relu',  # 8x8
    'block_16_project',      # 4x4
]
layers = [base_model.get_layer(name).output for name in layer_names]

# Create the feature extraction model
down_stack = tf.keras.Model(inputs=base_model.input, outputs=layers)
down_stack.trainable = False

up_stack = [
    pix2pix.upsample(512, 3),  # 4x4 -> 8x8
    pix2pix.upsample(256, 3),  # 8x8 -> 16x16
    pix2pix.upsample(128, 3),  # 16x16 -> 32x32
    pix2pix.upsample(64, 3),   # 32x32 -> 64x64
]

def unet_model(output_channels):
    # Final layer: 64x64 -> 128x128, with a softmax over the classes
    last = tf.keras.layers.Conv2DTranspose(
        output_channels, 3, strides=2,
        padding='same', activation='softmax')

    inputs = tf.keras.layers.Input(shape=[128, 128, 3])
    x = inputs

    # Downsampling
    skips = down_stack(x)
    x = skips[-1]  # take the last (deepest) output
    skips = reversed(skips[:-1])

    # Upsampling, concatenating each skip connection
    for up, skip in zip(up_stack, skips):
        x = up(x)
        concat = tf.keras.layers.Concatenate()
        x = concat([x, skip])

    x = last(x)
    return tf.keras.Model(inputs=inputs, outputs=x)

model = unet_model(OUTPUT_CHANNELS)
# learning_rate replaces the deprecated lr argument
adam = tf.keras.optimizers.Adam(learning_rate=1e-3)

# optimizer = optimizers.Adam(lr=1e-3)
model.compile(adam, loss='sparse_categorical_crossentropy', metrics=['accuracy'])

def create_mask(pred_mask):
    # Pick the highest-valued channel for every pixel
    pred_mask = tf.argmax(pred_mask, axis=-1)
    pred_mask = pred_mask[..., tf.newaxis]
    return pred_mask[0]

def show_predictions(dataset=None, num=1):
    if dataset is not None:
        for image, mask in dataset.take(num):
            pred_mask = model.predict(image)
            display([image[0], mask[0], create_mask(pred_mask)])
    else:
        display([sample_image, sample_mask,
                 create_mask(model.predict(sample_image[tf.newaxis, ...]))])

show_predictions()

class DisplayCallback(tf.keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        clear_output(wait=True)
        show_predictions()
        print('\nSample Prediction after epoch {}\n'.format(epoch + 1))

EPOCHS = 2
VAL_SUBSPLITS = 5
# At least one validation step, even for a very small validation set
VALIDATION_STEPS = max(1, val_num // BATCH_SIZE // VAL_SUBSPLITS)

model_history = model.fit(train_dataset, epochs=EPOCHS,
                          steps_per_epoch=STEPS_PER_EPOCH,
                          validation_steps=VALIDATION_STEPS,
                          validation_data=val_dataset,
                          callbacks=[DisplayCallback()])

model.save("poker.h5")

loss = model_history.history['loss']

val_loss = model_history.history['val_loss']

epochs = range(EPOCHS)

plt.figure()

plt.plot(epochs, loss, 'r', label='Training loss')

plt.plot(epochs, val_loss, 'bo', label='Validation loss')

plt.title('Training and Validation Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss Value')

plt.ylim([0, 1])

plt.legend()

plt.savefig(os.path.join(save_dir, 'Training.png'))

plt.show()