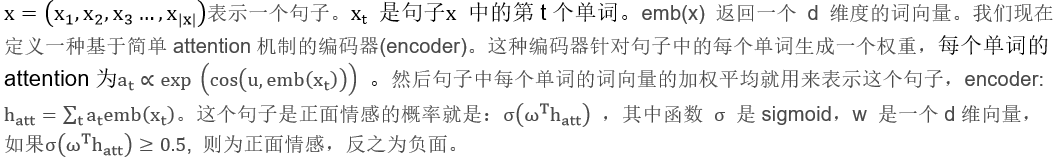

文本情感二分类(加权平均模型(Attention Weighted word averaging) )

# -*- coding:utf-8 -*-

import random

from collections import defaultdict, Counter

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

def set_random_seed(seed):random.seed(seed)np.random.seed(seed)torch.manual_seed(seed)torch.cuda.manual_seed(seed)torch.cuda.manual_seed_all(seed)torch.backends.cudnn.benchmark = Falsetorch.backends.cudnn.deterministic = Trueset_random_seed(6688)

device = torch.device('cuda' if torch.cuda.is_available else 'cpu')

data_path = ''

设计词典

from collections import Counterdef build_vocab(sents, max_words=50000):word_counts = Counter()for word in sents:word_counts[word] += 1itos = [w for w, c in word_counts.most_common(max_words)]itos = itos + ["UNK"]stoi = {w:i for i, w in enumerate(itos)}return itos,stoitokenize = lambda x: x.split()

text = open(data_path +'senti.train.tsv').read()

vob = tokenize(text.lower())

itos,stoi = build_vocab(vob)

简单检验一下词典

itos[0:5]

['1', '0', 'the', ',', 'a']

stoi['the']

2

设计数据集

class Corpus:def __init__(self, data_path, sort_by_len=False):self.vocab = vobself.sort_by_len = sort_by_lenself.train_data,self.train_label = self.tokenize(data_path + 'train.tsv')self.valid_data,self.valid_label = self.tokenize(data_path + 'dev.tsv')self.test_data,self.test_label = self.tokenize(data_path + 'test.tsv')def tokenize(self, text_path):with open(text_path) as f:index_data = [] # 索引数据,存储每个样本的单词索引列表labels = []for line in f.readlines():sentence, label = line.split('\t')index_data.append(self.sentence_to_index(sentence.lower()))labels.append(int(label[0])) if self.sort_by_len: # 为了提升训练速度,可以考虑将样本按照长度排序,这样可以减少paddingindex_data = sorted(index_data, key=lambda x: len(x), reverse=True)return index_data,labelsdef sentence_to_index(self, s):a = []for w in s.split():if w in stoi.keys():a.append(stoi[w])else:a.append(stoi["UNK"])return adef index_to_sentence(self, x):return ' '.join([itos[i] for i in x])corpus = Corpus(data_path, sort_by_len=False)

简单检验一下词典训练数据和测试数据

corpus.train_data[0:5]

[[4459, 94, 12436, 37, 2, 7263, 9002],[2905, 62, 327, 3, 90, 1970, 549],[11, 1770, 18, 55, 5, 5990, 97, 185, 267, 35, 179, 622],[593, 673, 5991, 8, 1971, 2, 290, 696],[25, 2, 255, 5127, 261, 2, 360, 118, 4753, 54]]

corpus.train_label[0:5]

[0, 0, 1, 0, 0]

设计最小batch

def get_minibatches(text_idx, labels, batch_size=64, sort=True):if sort:text_idx_and_labels = sorted(list(zip(text_idx, labels)), key=lambda x: len(x[0]))else:text_idx_and_labels = (list(zip(text_idx, labels)))text_idx_batches = []label_batches = []for i in range(0, len(text_idx), batch_size):text_batch = [t for t, l in text_idx_and_labels[i:i + batch_size]]label_batch = [l for t, l in text_idx_and_labels[i:i + batch_size]]text_idx_batches.append(text_batch)label_batches.append(label_batch)return text_idx_batches, label_batchesBATCH_SIZE = 64

train_batches, train_label_batches = get_minibatches(corpus.train_data, corpus.train_label, BATCH_SIZE)

dev_batches, dev_label_batches = get_minibatches(corpus.valid_data,corpus.valid_label,BATCH_SIZE)

test_batches, test_label_batches = get_minibatches(corpus.test_data,corpus.test_label, BATCH_SIZE)

简单测试一下train_batches, train_label_batches

print(train_batches[0][0:5]) #由于我们是按 评论长度从大到小排序,第0个batch 从0到5 每句话的长度为0

print(train_label_batches[0][0:5])

[[2782], [5996], [4464], [2907], [3796]]

[1, 1, 0, 1, 0]

词向量平均(word averaging)二分类模型

class WordAVGModel(nn.Module):def __init__(self, vocab_size, embedding_size, output_size, dropout_p=0.5):super(WordAVGModel, self).__init__()self.embed = nn.Embedding(vocab_size, embedding_size)initrange = 0.1self.embed.weight.data.uniform_(-initrange, initrange)self.u = nn.Parameter(torch.rand(embedding_size))self.linear = nn.Linear(embedding_size, output_size)self.dropout = nn.Dropout(dropout_p) # 这个参数经常拿来调节def forward(self, text, mask):# text: [batch_size * max_seq_len]# mask: [batch_size * max_seq_len]embedded = self.embed(text) # [batch_size, max_seq_len, embedding_size]embedded = self.dropout(embedded) #self.u.view(1,-1).size(),embedded.view(-1,embedded.shape[2]).size()a = torch.exp(torch.cosine_similarity(self.u.view(1,-1).to(device), embedded.view(-1,embedded.shape[2]), dim=1)).view(embedded.shape[0],embedded.shape[1])embedded = ((a.unsqueeze(2).repeat(1, 1, embedded.size(2))) * embedded) # embedded:[batch_size * max_seq_len * embedding_size]embedded = self.dropout(embedded)# 1 represents word, 0 represents paddingmask = mask.float().unsqueeze(2) # [batch_size, seq_len, 1]embedded = embedded * mask # [batch_size, seq_len, embedding_size]# 求平均 sent_embed = embedded.sum(1) # 防止mask.sum为0,那么不能除以零。return self.linear(sent_embed)def attention(self, text):embedded = self.embed(text) # [ seq_len, embedding_size]embedded = self.dropout(embedded) a = torch.cosine_similarity(self.u.view(1,-1),embedded)a_weight = F.softmax(a, dim=-1) return a_weight.cpu().numpy()

初始化模型

VOCAB_SIZE = len(itos)

EMBEDDING_SIZE = 200

OUTPUT_SIZE = 1

model = WordAVGModel(vocab_size=VOCAB_SIZE,embedding_size=EMBEDDING_SIZE,output_size=OUTPUT_SIZE,dropout_p=0.5)

使用Adam优化器 测试词向量平均二分类模型

optimizer = torch.optim.Adam(model.parameters())

crit = nn.BCEWithLogitsLoss()

model = model.to(device)

设计计算准确率函数

def binary_accuracy(preds, y):rounded_preds = torch.round(torch.sigmoid(preds))correct = (rounded_preds == y).float()acc = correct.sum() / len(correct)return acc

设计模型训练函数

def train(model, text_idxs, labels, optimizer, crit):epoch_loss, epoch_acc = 0., 0.model.train()total_len = 0.for text, label in zip(text_idxs, labels):text = [torch.tensor(x).long().to(device) for x in (text)]label = [torch.tensor(label).long().to(device)]lengths = torch.tensor([len(x) for x in text]).long().to(device)text = nn.utils.rnn.pad_sequence(text, batch_first=True)mask = (text != 0).float().to(device)# 在之后的训练中因为还要进行pack_padded_sequence操作,所以在这里按照长度降序排列lengths, perm_index = lengths.sort(descending=True)text = text[perm_index]label = label[0][perm_index]preds = model(text, mask).squeeze() # [batch_size, sent_length]loss = crit(preds, label.float())acc = binary_accuracy(preds, label)optimizer.zero_grad()loss.backward()optimizer.step()epoch_loss += loss.item() * len(label)epoch_acc += acc.item() * len(label)total_len += len(label)return epoch_loss / total_len, epoch_acc / total_len

设计模型评估函数

def evaluate(model, text_idxs, labels, crit):epoch_loss, epoch_acc = 0., 0.model.eval()total_len = 0.for text, label in zip(text_idxs, labels):text = [torch.tensor(x).long().to(device) for x in (text)]label = [torch.tensor(label).long().to(device)]lengths = torch.tensor([len(x) for x in text]).long().to(device)text = nn.utils.rnn.pad_sequence(text, batch_first=True)mask = (text != 0).float().to(device)# 在之后的训练中因为还要进行pack_padded_sequence操作,所以在这里按照长度降序排列lengths, perm_index = lengths.sort(descending=True)text = text[perm_index]label = label[0][perm_index]with torch.no_grad():preds = model(text, mask).squeeze() # [batch_size, sent_length]loss = crit(preds, label.float())acc = binary_accuracy(preds, label)epoch_loss += loss.item() * len(label)epoch_acc += acc.item() * len(label)total_len += len(label)model.train()return epoch_loss / total_len, epoch_acc / total_len

训练模型

N_EPOCHS = 5

best_valid_acc = 0.

for epoch in range(N_EPOCHS):train_loss, train_acc = train(model, train_batches, train_label_batches, optimizer, crit)valid_loss, valid_acc = evaluate(model, dev_batches, dev_label_batches, crit)if valid_acc > best_valid_acc:best_valid_acc = valid_acctorch.save(model.state_dict(), "wordavg-model-Adam.pth")print("Epoch", epoch, "Train Loss", train_loss, "Train Acc", train_acc)print("Epoch", epoch, "Valid Loss", valid_loss, "Valid Acc", valid_acc)

Epoch 0 Train Loss 0.421612024540009 Train Acc 0.7994625013027427

Epoch 0 Valid Loss 0.46337967454840284 Valid Acc 0.8096330269761042

Epoch 1 Train Loss 0.26165380607506367 Train Acc 0.8996718585323913

Epoch 1 Valid Loss 0.5158094452061784 Valid Acc 0.8130733950422444

Epoch 2 Train Loss 0.21914521883101462 Train Acc 0.9156928833416801

Epoch 2 Valid Loss 0.5733908010185311 Valid Acc 0.8130733950422444

Epoch 3 Train Loss 0.19722330785677017 Train Acc 0.9246610937094599

Epoch 3 Valid Loss 0.6202372706264531 Valid Acc 0.8130733939485812

Epoch 4 Train Loss 0.18302929015256092 Train Acc 0.9310160507217569

Epoch 4 Valid Loss 0.6619091307351349 Valid Acc 0.807339450088116

取出正确率最高的模型预测测试集上的数据

model.load_state_dict(torch.load("wordavg-model-Adam.pth"))

test_loss, test_acc = evaluate(model, test_batches, test_label_batches, crit)

print("Test Loss", test_loss, "Test Acc", test_acc)

Test Loss 0.45551196396972765 Test Acc 0.8220757820133584

找出最具有情感色彩的词

分析词向量和Attention向量。用在DEV取得最好成绩的模型,计算向量u和所有单词词向量的cosine similarity。展示cosine similarity最高的15个单词, 以及cosine similarity最低的15个单词。

首先去掉词典中的标点符号以及标签

import re

text = re.sub("[\s+\.\!\/_,$%^*(+\"\']+|[+——!,。?、~@#¥%……&*()]+", " ",text)

vob = tokenize(text.lower())

vob.remove('0')

vob.remove('1')

vob= set(vob)

vob = [stoi[e] for e in vob if e in itos]

len(vob)

14690

model.load_state_dict(torch.load("wordavg-model-Adam.pth"))

# embedding 字典向量

embedded = torch.tensor(list(vob)).to(device)

#得到cosine_similarity列表

Cosin_List = torch.exp(torch.cosine_similarity(model.u.view(1,-1).to(device), model.embed(embedded), dim=1))

#在列表中取出L2 norm最大的15个数

Biggest15_nu = Cosin_List.sort(descending=True)[1][0:15]

#把one hot 编码转换成为单词

Biggest15 = [itos[e] for e in Biggest15_nu]

#在列表中取出L2 norm最小的15个数

Smallest15_nu = Cosin_List.sort(descending=False)[1][0:15]

#把one hot 编码转换成为单词

Smallest15 = [itos[e] for e in Smallest15_nu]

print("余弦相似度最大的15个单词:",Biggest15)

print("余弦相似度最小的15个单词:",Smallest15)

# 训练次数比较多的单词,以及出频率比较高的单词余弦相似度最小。 训练中出现次数比较少的单词余弦相似度最大。 余弦相似度最大的15个单词: ['march', 'finely', 'hilary', 'enthusiasm', 'nonbelievers', 'again', 'blowing', 'expressionistic', 'cannibal', 'direction', 'therapeutic', 'painterly', 'stunning', 'hardy', 'cineasts']

余弦相似度最小的15个单词: ['chapter', 'discipline', 'profane', 'deblois', 'ear-splitting', 'rating', 'entree', 'unfulfilling', 'under-inspired', 'swipe', 'period-piece', 'distinctions', 'drama/character', 'freezers', 'instructive']

你发现了什么特征?你发现了什么特征?

from collections import Counter

from itertools import chain

train_data = list(chain.from_iterable(corpus.train_data))

train_data = dict(Counter(train_data))

count = 0

for e in Smallest15_nu: #print(count)count += 1if e.item() in stoi.values() and e.item() in train_data.keys():print(count,itos[e.item()],train_data[e.item()]) print("有",100*count/15,"%的L2_norm 最小词在训练集里面")

1 chapter 3

2 discipline 17

3 profane 21

4 deblois 3

5 ear-splitting 2

6 rating 28

7 entree 5

8 unfulfilling 29

9 under-inspired 12

10 swipe 8

11 period-piece 5

12 distinctions 7

13 drama/character 2

14 freezers 7

15 instructive 10

有 100.0 %的L2_norm 最小词在训练集里面

count = 0

for e in Biggest15_nu: #print(count)count += 1if e.item() in stoi.values() and e.item() in train_data.keys():print(count,itos[e.item()],train_data[e.item()]) print("有",100*count/15,"%的L2_norm最大词在训练集里面")

1 march 3

2 finely 32

3 hilary 9

4 enthusiasm 44

5 nonbelievers 3

6 again 247

7 blowing 9

8 expressionistic 7

9 cannibal 8

10 direction 293

11 therapeutic 4

12 painterly 11

13 stunning 66

14 hardy 7

15 cineasts 1

有 100.0 %的L2_norm最大词在训练集里面

观察结果可以发现,最小的15个L2 norm单词都是在训练集中反复出现的可以判断感情色彩的形容词。最大的15个 L2 norm单词都是一些没有感情色彩的词。

分析相同单词在不同语境下attention的变化。使用在DEV上取得最好成绩的模型,计算训练数据TRAIN当中每个单词的attention权重(softmax归一化之后)。找出至少在TRAIN中出现100次的单词,计算这些单词在不同语境下attention权重的平均值和标准差。

from collections import Counter

word_attention_list = []

word_index_list = []

with torch.no_grad():for text in (corpus.train_data):word_index_list.extend(text)text = torch.tensor(text).long().to(device)a_weight= model.attention(text) word_attention_list.extend(a_weight)

len(word_attention_list)

633698

from collections import defaultdict

import numpy as npword_att_dict = defaultdict(list)

for i in range(len(word_index_list)):word = word_index_list[i]att = word_attention_list[i]word_att_dict[word].append(att)

# 单词出现次数大于100次 且每个单词的 attention权重的平均值 和 标准差

word_att_processed = [( (itos[k],np.mean(v)),np.std(v) )for k,v in word_att_dict.items() if len(v)>100]print('len(word_att_processed) : ',len(word_att_processed))

len(word_att_processed) : 660

30个标准差最大的单词

max_std_topK = 30

#(word,mean,std)

word_att_processed = sorted(word_att_processed,key=lambda x:x[1],reverse=True)[:max_std_topK]print("\tmean\t\tstd\tword\t")

print("-"*60)

for i,(word_mean,std) in enumerate(word_att_processed):print("{}\t{}\t\t{}\t{}".format(i+1,'%.2f'%word_mean[1],'%.2f'%std,word_mean[0]))

mean std word

------------------------------------------------------------

1 0.24 0.20 stupid

2 0.23 0.19 terrific

3 0.24 0.19 unfunny

4 0.21 0.19 watchable

5 0.19 0.19 tedious

6 0.20 0.19 painful

7 0.20 0.19 bland

8 0.23 0.19 awful

9 0.21 0.18 inventive

10 0.25 0.18 mess

11 0.20 0.18 flat

12 0.19 0.18 gorgeous

13 0.18 0.18 worse

14 0.20 0.18 excellent

15 0.17 0.18 worthy

16 0.20 0.18 appealing

17 0.20 0.18 remarkable

18 0.21 0.17 beautifully

19 0.21 0.17 waste

20 0.20 0.17 intriguing

21 0.21 0.17 provocative

22 0.16 0.17 cool

23 0.17 0.17 hackneyed

24 0.19 0.17 slow

25 0.18 0.17 lacking

26 0.19 0.17 decent

27 0.18 0.17 boring

28 0.20 0.17 wonderful

29 0.22 0.17 engrossing

30 0.17 0.17 perfectly

情感色彩越鲜明的词汇均值越高