Table of Contents

- 1. map function

- 2. flatMap function

- 3. mapPartition function

- 4. filter function

- 5. reduce function

- 6. reduceGroup

- 7. Aggregate

- 8. minBy and maxBy

- 9. distinct (deduplication)

- 10. Join

- 11. LeftOuterJoin

- 12. RightOuterJoin

- 13. fullOuterJoin

- 14. cross (Cartesian product)

- 15. Union

- 16. Rebalance

- 17. First

1. map function

Reference code

import org.apache.flink.api.scala.ExecutionEnvironment

/**
 * Requirement:
 * use the map operation to convert the following data
 * "1,张三", "2,李四", "3,王五", "4,赵六"
 * into instances of a Scala case class.
 */
object BatchMapDemo {
  // 3. Define the case class
  case class user(id: Int, name: String)

  def main(args: Array[String]): Unit = {
    // 1. Create the execution environment
    val env = ExecutionEnvironment.getExecutionEnvironment
    // 2. Build the data set
    import org.apache.flink.api.scala._
    val sourceDataSet: DataSet[String] = env.fromElements("1,张三", "2,李四", "3,王五", "4,赵六")
    // 4. Transform each "id,name" string into a user
    val userDataSet: DataSet[user] = sourceDataSet.map(item => {
      val itemsArr: Array[String] = item.split(",")
      user(itemsArr(0).toInt, itemsArr(1))
    })
    // 5. Print the result
    userDataSet.print()
  }
}

2. flatMap function

Code implementation

import org.apache.flink.api.scala.ExecutionEnvironment

object BatchFlatMapDemo {
  def main(args: Array[String]): Unit = {
    val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment
    import org.apache.flink.api.scala._
    val sourceDataSet: DataSet[String] = env.fromCollection(List(
      "张三,中国,江西省,南昌市",
      "李四,中国,河北省,石家庄市",
      "Tom,America,NewYork,Manhattan"))
    // Each input line yields three records: (name, country),
    // (name, country + province) and (name, country + province + city)
    val resultDataSet: DataSet[(String, String)] = sourceDataSet.flatMap(item => {
      val itemsArr: Array[String] = item.split(",")
      List(
        (itemsArr(0), itemsArr(1)),
        (itemsArr(0), itemsArr(1) + itemsArr(2)),
        (itemsArr(0), itemsArr(1) + itemsArr(2) + itemsArr(3)))
    })
    resultDataSet.print()
  }
}

3. mapPartition function

Code implementation

package com.czxy.flink.batch.transfromation

import org.apache.flink.api.scala.ExecutionEnvironment

/**
 * Requirement:
 * use the mapPartition operation to convert the following data
 * "1,张三", "2,李四", "3,王五", "4,赵六"
 * into instances of a Scala case class.
 */
object BatchMapPartitionDemo {
  case class user(id: Int, name: String)

  def main(args: Array[String]): Unit = {
    // 1. Create the execution environment
    val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment
    // 2. Build the data set
    import org.apache.flink.api.scala._
    val sourceDataSet: DataSet[String] = env.fromElements("1,张三", "2,李四", "3,王五", "4,赵六")
    // 3. Process the data; the function is called once per partition
    //    and receives an iterator over that partition's elements
    val userDataSet: DataSet[user] = sourceDataSet.mapPartition(itemPartition => {
      itemPartition.map(item => {
        val itemsArr: Array[String] = item.split(",")
        user(itemsArr(0).toInt, itemsArr(1))
      })
    })
    // 4. Print the result
    userDataSet.print()
  }
}

4. filter function

Code implementation

import org.apache.flink.api.scala.ExecutionEnvironment
import org.apache.flink.api.scala._

/**
 * Keep only the words that start with "h" from:
 * "hadoop", "hive", "spark", "flink"
 */
object BatchFilterDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val textDataSet: DataSet[String] = env.fromElements("hadoop", "hive", "spark", "flink")
    val filterDataSet: DataSet[String] = textDataSet.filter(x => x.startsWith("h"))
    filterDataSet.print()
  }
}

5. reduce function

Code implementation

import org.apache.flink.api.scala.ExecutionEnvironment
import org.apache.flink.api.scala._

/**
 * Use the reduce operation to aggregate the following tuples into one final result:
 * ("java", 1), ("java", 1), ("java", 1)
 *
 * The elements above are converted into ("java", 3).
 */
object BatchReduceDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val textDataSet: DataSet[(String, Int)] = env.fromCollection(List(("java", 1), ("java", 1), ("java", 1)))
    // Group by the word (field 0), then sum the counts pairwise
    val groupedDataSet: GroupedDataSet[(String, Int)] = textDataSet.groupBy(0)
    val reduceDataSet: DataSet[(String, Int)] = groupedDataSet.reduce((v1, v2) => (v1._1, v1._2 + v2._2))
    reduceDataSet.print()
  }
}

6. reduceGroup

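reduceGroup can aggregate either a whole DataSet or a single group, producing one final element. The difference between reduce and reduceGroup is in how the function is applied: reduce combines elements two at a time, while reduceGroup receives all elements of a group at once as an iterator.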

Code implementation

import org.apache.flink.api.scala.{DataSet, ExecutionEnvironment}
import org.apache.flink.api.scala._

/**
 * Group the following tuples by word with groupBy,
 * then count the words with reduceGroup:
 * ("java", 1), ("java", 1), ("scala", 1)
 */
object BatchReduceGroupDemo {
  def main(args: Array[String]): Unit = {
    val env: ExecutionEnvironment = ExecutionEnvironment.getExecutionEnvironment
    val textDataSet: DataSet[(String, Int)] = env.fromCollection(List(("java", 1), ("java", 1), ("scala", 1)))
    val groupedDataSet: GroupedDataSet[(String, Int)] = textDataSet.groupBy(0)
    // `group` is an iterator over all elements of one group
    val reduceGroupDataSet: DataSet[(String, Int)] = groupedDataSet.reduceGroup(group => {
      group.reduce((v1, v2) => {
        (v1._1, v1._2 + v2._2)
      })
    })
    reduceGroupDataSet.print()
  }
}
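To make the contrast between the two function shapes concrete, here is a minimal sketch that models them with plain Scala collections rather than the Flink API. The object name `ReduceVsReduceGroupSketch` and the helpers `pairwise` and `wholeGroup` are hypothetical names introduced for illustration only; the sketch says nothing about how Flink schedules or optimizes either operator.

```scala
// A plain-Scala model (not Flink code) of the two function shapes.
object ReduceVsReduceGroupSketch {
  val words: List[(String, Int)] = List(("java", 1), ("java", 1), ("scala", 1))

  // reduce-style: the function only ever sees two elements at a time.
  def pairwise(group: List[(String, Int)]): (String, Int) =
    group.reduce((a, b) => (a._1, a._2 + b._2))

  // reduceGroup-style: the function receives the whole group as an iterator
  // and decides for itself how to walk through it.
  def wholeGroup(group: Iterator[(String, Int)]): (String, Int) = {
    val elements = group.toList
    (elements.head._1, elements.map(_._2).sum)
  }

  def main(args: Array[String]): Unit = {
    val grouped = words.groupBy(_._1)
    val viaReduce = grouped.values.map(pairwise).toList.sortBy(_._1)
    val viaReduceGroup = grouped.values.map(g => wholeGroup(g.iterator)).toList.sortBy(_._1)
    println(viaReduce)
    println(viaReduceGroup)
  }
}
```

Both helpers produce the same word counts; only the shape of the user function differs.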

7. Aggregate

Code implementation

import org.apache.flink.api.java.aggregation.Aggregations
import org.apache.flink.api.scala._

/**
 * Count the words in the following tuples with the aggregate operation:
 * ("java", 1), ("java", 1), ("scala", 1)
 */
object BatchAggregateDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val textDataSet = env.fromCollection(List(("java", 1), ("java", 1), ("scala", 1)))
    val grouped = textDataSet.groupBy(0)
    // Sum field 1 (the count) within each group to get the word count
    val aggDataSet: AggregateDataSet[(String, Int)] = grouped.aggregate(Aggregations.SUM, 1)
    aggDataSet.print()
  }
}
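The choice of aggregation matters for a word count: summing the count field gives the number of occurrences per word, whereas taking the maximum only returns the largest single value per word. The sketch below models both with plain Scala collections (it is not Flink code); `AggregateSketch`, `sumPerKey` and `maxPerKey` are hypothetical names used only for illustration.

```scala
// A plain-Scala model (not Flink code) of SUM versus MAX per group.
object AggregateSketch {
  val words: List[(String, Int)] = List(("java", 1), ("java", 1), ("scala", 1))

  // Sum of field 1 per word: this is the word count.
  def sumPerKey(data: List[(String, Int)]): Map[String, Int] =
    data.groupBy(_._1).map { case (word, rows) => word -> rows.map(_._2).sum }

  // Maximum of field 1 per word: with all counts equal to 1 this stays 1.
  def maxPerKey(data: List[(String, Int)]): Map[String, Int] =
    data.groupBy(_._1).map { case (word, rows) => word -> rows.map(_._2).max }

  def main(args: Array[String]): Unit = {
    println(sumPerKey(words))
    println(maxPerKey(words))
  }
}
```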

8. minBy and maxBy

Code implementation

import org.apache.flink.api.java.aggregation.Aggregations
import org.apache.flink.api.scala.{DataSet, ExecutionEnvironment}

import scala.collection.mutable
import scala.util.Random

object BatchMinByAndMaxBy {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val data = new mutable.MutableList[(Int, String, Double)]
    data.+=((1, "yuwen", 89.0))
    data.+=((2, "shuxue", 92.2))
    data.+=((3, "yingyu", 89.99))
    data.+=((4, "wuli", 98.9))
    data.+=((1, "yuwen", 88.88))
    data.+=((1, "wuli", 93.00))
    data.+=((1, "yuwen", 94.3))

    // Import the implicit conversions
    import org.apache.flink.api.scala._

    // fromCollection turns the collection into a DataSet
    val input: DataSet[(Int, String, Double)] = env.fromCollection(Random.shuffle(data))
    input.print()

    println("=========== Maximum of a field after grouping by a given field ==================")
    val output = input.groupBy(1).aggregate(Aggregations.MAX, 2)
    output.print()

    println("=========== minBy: minimum of a field after grouping by a given field ==================")
    // Lowest score per subject; the argument of minBy is the field whose minimum is wanted
    val output2: DataSet[(Int, String, Double)] = input.groupBy(1).minBy(2)
    output2.print()

    println("=========== maxBy: maximum of a field after grouping by a given field ==================")
    // Highest score per subject; the argument of maxBy is the field whose maximum is wanted
    val output3: DataSet[(Int, String, Double)] = input.groupBy(1).maxBy(2)
    output3.print()
  }
}

9. distinct (deduplication)

Code implementation

import org.apache.flink.api.scala._

/**
 * Use the distinct operation to remove duplicate words from the following tuples:
 * ("java", 1), ("java", 1), ("scala", 1)
 * After deduplication:
 * ("java", 1), ("scala", 1)
 */
object BatchDistinctDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val textDataSet: DataSet[(String, Int)] = env.fromCollection(List(("java", 1), ("java", 1), ("scala", 1)))
    // Deduplicate on field 0 (the word); field 1 is 1 for every record
    textDataSet.distinct(0).print()
  }
}
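The field index passed to distinct decides which records count as duplicates. The following sketch models this with plain Scala collections (it is not Flink code, and the names `DistinctKeySketch` and `distinctBy` are hypothetical). One assumption to note: the model keeps the first record seen for each key, which Flink does not necessarily guarantee.

```scala
import scala.collection.mutable

// A plain-Scala model (not Flink code) of deduplicating on a chosen key field.
object DistinctKeySketch {
  val words: List[(String, Int)] = List(("java", 1), ("java", 1), ("scala", 1))

  // Keep one record per distinct key (here: the first one encountered).
  def distinctBy[K](data: List[(String, Int)])(key: ((String, Int)) => K): List[(String, Int)] = {
    val seen = mutable.LinkedHashMap.empty[K, (String, Int)]
    data.foreach { row =>
      if (!seen.contains(key(row))) seen(key(row)) = row
    }
    seen.values.toList
  }

  def main(args: Array[String]): Unit = {
    println(distinctBy(words)(_._1)) // key = the word
    println(distinctBy(words)(_._2)) // key = the count
  }
}
```

With the word as the key, one record per word survives. With the count as the key, every record shares the key 1, so only a single record remains.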

10. Join

Code implementation

import org.apache.flink.api.scala._

/**
 * join connects two DataSets on a key.
 */
object BatchJoinDemo {
  case class Subject(id: Int, name: String)
  case class Score(id: Int, stuName: String, subId: Int, score: Double)

  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val subjectDataSet: DataSet[Subject] = env.readCsvFile[Subject]("day01/data/input/subject.csv")
    val scoreDataSet: DataSet[Score] = env.readCsvFile[Score]("day01/data/input/score.csv")
    // An alternative to join: broadcast
    // Join each score with its subject: Score.subId == Subject.id
    val joinDataSet: JoinDataSet[Score, Subject] = scoreDataSet.join(subjectDataSet).where(_.subId).equalTo(_.id)
    joinDataSet.print()
  }
}

11. LeftOuterJoin

Code implementation

import org.apache.flink.api.scala.ExecutionEnvironment

import scala.collection.mutable.ListBuffer

/**
 * Left outer join: every element of the left DataSet is kept and joined
 * with the matching elements of the right DataSet.
 */
object BatchLeftOuterJoinDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    import org.apache.flink.api.scala._

    val data1 = ListBuffer[Tuple2[Int, String]]()
    data1.append((1, "zhangsan"))
    data1.append((2, "lisi"))
    data1.append((3, "wangwu"))
    data1.append((4, "zhaoliu"))

    val data2 = ListBuffer[Tuple2[Int, String]]()
    data2.append((1, "beijing"))
    data2.append((2, "shanghai"))
    data2.append((4, "guangzhou"))

    val text1 = env.fromCollection(data1)
    val text2 = env.fromCollection(data2)

    // `second` is null when the left element has no match on the right
    text1.leftOuterJoin(text2).where(0).equalTo(0).apply((first, second) => {
      if (second == null) {
        (first._1, first._2, "null")
      } else {
        (first._1, first._2, second._2)
      }
    }).print()
  }
}

12. RightOuterJoin

Code implementation

import org.apache.flink.api.scala.ExecutionEnvironment

import scala.collection.mutable.ListBuffer

/**
 * Right outer join: every element of the right DataSet is kept and joined
 * with the matching elements of the left DataSet.
 */
object BatchRightOuterJoinDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    import org.apache.flink.api.scala._

    val data1 = ListBuffer[Tuple2[Int, String]]()
    data1.append((1, "zhangsan"))
    data1.append((2, "lisi"))
    data1.append((3, "wangwu"))
    data1.append((4, "zhaoliu"))

    val data2 = ListBuffer[Tuple2[Int, String]]()
    data2.append((1, "beijing"))
    data2.append((2, "shanghai"))
    data2.append((4, "guangzhou"))

    val text1 = env.fromCollection(data1)
    val text2 = env.fromCollection(data2)

    // `first` is null when the right element has no match on the left
    text1.rightOuterJoin(text2).where(0).equalTo(0).apply((first, second) => {
      if (first == null) {
        (second._1, "null", second._2)
      } else {
        (first._1, first._2, second._2)
      }
    }).print()
  }
}

13. fullOuterJoin

Code implementation

import org.apache.flink.api.common.operators.base.JoinOperatorBase.JoinHint
import org.apache.flink.api.scala.{ExecutionEnvironment, _}

import scala.collection.mutable.ListBuffer

/**
 * Full outer join: the elements of both DataSets are kept,
 * whether or not they have a match on the other side.
 */
object BatchFullOuterJoinDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    val data1 = ListBuffer[Tuple2[Int, String]]()
    data1.append((1, "zhangsan"))
    data1.append((2, "lisi"))
    data1.append((3, "wangwu"))
    data1.append((4, "zhaoliu"))

    val data2 = ListBuffer[Tuple2[Int, String]]()
    data2.append((1, "beijing"))
    data2.append((2, "shanghai"))
    data2.append((4, "guangzhou"))

    val text1 = env.fromCollection(data1)
    val text2 = env.fromCollection(data2)

    /**
     * OPTIMIZER_CHOOSES: leaves the choice to the Flink optimizer; equivalent to giving no hint.
     *
     * BROADCAST_HASH_FIRST: broadcasts the first input and builds a hash table from it, with the
     * second input as the probe side. Choose it when the first input is very small.
     *
     * BROADCAST_HASH_SECOND: broadcasts the second input and builds a hash table from it, with the
     * first input as the probe side. Choose it when the second input is very small.
     *
     * REPARTITION_HASH_FIRST: repartitions both inputs and builds the hash table from the first
     * input. Suitable when the first input is smaller than the second but both are still large.
     * It is also the default strategy when the optimizer cannot estimate sizes and no existing
     * partitioning or sort order can be reused.
     *
     * REPARTITION_HASH_SECOND: repartitions both inputs and builds the hash table from the second
     * input. Suitable when both inputs are large but the second is smaller than the first.
     *
     * REPARTITION_SORT_MERGE: the inputs are sorted and joined by a streamed merge of the sorted
     * inputs. Suitable when one or both inputs are already sorted.
     */
    text1.fullOuterJoin(text2, JoinHint.REPARTITION_SORT_MERGE).where(0).equalTo(0).apply((first, second) => {
      if (first == null) {
        (second._1, "null", second._2)
      } else if (second == null) {
        (first._1, first._2, "null")
      } else {
        (first._1, first._2, second._2)
      }
    }).print()
  }
}
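In the outer-join examples the apply function has to handle a null argument, because the side without a match is passed as null. As a rough model of the full outer join semantics, the sketch below uses plain Scala collections and `Option` instead of null (it is not Flink code). `OuterJoinSketch`, `Row` and `fullOuterJoin` are hypothetical names, and the extra row `(5, "shenzhen")` in the usage example is made up to show an unmatched right-hand element.

```scala
// A plain-Scala model (not Flink code) of a full outer join on the first tuple field.
object OuterJoinSketch {
  type Row = (Int, String)

  def fullOuterJoin(left: List[Row], right: List[Row]): List[(Int, String, String)] = {
    val leftByKey = left.groupBy(_._1)
    val rightByKey = right.groupBy(_._1)
    // Every key that occurs on either side appears in the result.
    val keys = (leftByKey.keySet ++ rightByKey.keySet).toList.sorted
    for {
      key <- keys
      // A side without a match contributes a single "missing" value.
      l <- leftByKey.get(key).map(_.map(r => Option(r._2))).getOrElse(List(Option.empty[String]))
      r <- rightByKey.get(key).map(_.map(r => Option(r._2))).getOrElse(List(Option.empty[String]))
    } yield (key, l.getOrElse("null"), r.getOrElse("null"))
  }

  def main(args: Array[String]): Unit = {
    val people = List((1, "zhangsan"), (2, "lisi"), (3, "wangwu"), (4, "zhaoliu"))
    val cities = List((1, "beijing"), (2, "shanghai"), (4, "guangzhou"), (5, "shenzhen"))
    fullOuterJoin(people, cities).foreach(println)
  }
}
```

A left outer join would keep only the keys of the left side, and a right outer join only those of the right side.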

14. cross (Cartesian product)

Code implementation

import org.apache.flink.api.scala.{DataSet, ExecutionEnvironment}
import org.apache.flink.api.scala._

/**
 * Creates a new data set by forming the Cartesian product of this data set and another one.
 */
object BatchCrossDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    println("============cross==================")
    cross(env)
    println("============cross2==================")
    cross2(env)
    println("============cross3==================")
    cross3(env)
    println("============crossWithTiny==================")
    crossWithTiny(env)
    println("============crossWithHuge==================")
    crossWithHuge(env)
  }

  /**
   * Cross: pairs every element of the first input with every element of the second input.
   *
   * @param benv the batch execution environment
   * res71: Seq[((Int, Int, Int), (Int, Int, Int))] = Buffer(
   * ((1,4,7),(10,40,70)), ((2,5,8),(10,40,70)), ((3,6,9),(10,40,70)),
   * ((1,4,7),(20,50,80)), ((2,5,8),(20,50,80)), ((3,6,9),(20,50,80)),
   * ((1,4,7),(30,60,90)), ((2,5,8),(30,60,90)), ((3,6,9),(30,60,90)))
   */
  def cross(benv: ExecutionEnvironment): Unit = {
    // 1. Define two DataSets
    val coords1 = benv.fromElements((1, 4, 7), (2, 5, 8), (3, 6, 9))
    val coords2 = benv.fromElements((10, 40, 70), (20, 50, 80), (30, 60, 90))
    // 2. Cross the two DataSets
    val result1 = coords1.cross(coords2)
    // 3. Show the result
    println(result1.collect)
  }

  /**
   * @param benv the batch execution environment
   * res69: Seq[(Coord, Coord)] = Buffer(
   * (Coord(1,4,7),Coord(10,40,70)), (Coord(2,5,8),Coord(10,40,70)), (Coord(3,6,9),Coord(10,40,70)),
   * (Coord(1,4,7),Coord(20,50,80)), (Coord(2,5,8),Coord(20,50,80)), (Coord(3,6,9),Coord(20,50,80)),
   * (Coord(1,4,7),Coord(30,60,90)), (Coord(2,5,8),Coord(30,60,90)), (Coord(3,6,9),Coord(30,60,90)))
   */
  def cross2(benv: ExecutionEnvironment): Unit = {
    // 1. Define the case class
    case class Coord(id: Int, x: Int, y: Int)
    // 2. Define two DataSet[Coord]
    val coords1: DataSet[Coord] = benv.fromElements(Coord(1, 4, 7), Coord(2, 5, 8), Coord(3, 6, 9))
    val coords2: DataSet[Coord] = benv.fromElements(Coord(10, 40, 70), Coord(20, 50, 80), Coord(30, 60, 90))
    // 3. Cross the two DataSet[Coord]
    val result1 = coords1.cross(coords2)
    // 4. Show the result
    println(result1.collect)
  }

  /**
   * @param benv the batch execution environment
   * res65: Seq[(Int, Int, Int)] = Buffer(
   * (1,1,22), (2,1,24), (3,1,26),
   * (1,2,24), (2,2,26), (3,2,28),
   * (1,3,26), (2,3,28), (3,3,30))
   */
  def cross3(benv: ExecutionEnvironment): Unit = {
    // 1. Define the case class
    case class Coord(id: Int, x: Int, y: Int)
    // 2. Define two DataSet[Coord]
    val coords1: DataSet[Coord] = benv.fromElements(Coord(1, 4, 7), Coord(2, 5, 8), Coord(3, 6, 9))
    val coords2: DataSet[Coord] = benv.fromElements(Coord(1, 4, 7), Coord(2, 5, 8), Coord(3, 6, 9))
    // 3. Cross the two DataSet[Coord] with a custom function
    val r = coords1.cross(coords2) { (c1, c2) => {
        val dist = (c1.x + c2.x) + (c1.y + c2.y)
        (c1.id, c2.id, dist)
      }
    }
    // 4. Show the result
    println(r.collect)
  }

  /**
   * Cross with a hint that the second input is small.
   * Pairs every element of the first input with every element of the second input.
   *
   * @param benv the batch execution environment
   * res67: Seq[(Coord, Coord)] = Buffer(
   * (Coord(1,4,7),Coord(10,40,70)), (Coord(1,4,7),Coord(20,50,80)), (Coord(1,4,7),Coord(30,60,90)),
   * (Coord(2,5,8),Coord(10,40,70)), (Coord(2,5,8),Coord(20,50,80)), (Coord(2,5,8),Coord(30,60,90)),
   * (Coord(3,6,9),Coord(10,40,70)), (Coord(3,6,9),Coord(20,50,80)), (Coord(3,6,9),Coord(30,60,90)))
   */
  def crossWithTiny(benv: ExecutionEnvironment): Unit = {
    // 1. Define the case class
    case class Coord(id: Int, x: Int, y: Int)
    // 2. Define two DataSet[Coord]
    val coords1: DataSet[Coord] = benv.fromElements(Coord(1, 4, 7), Coord(2, 5, 8), Coord(3, 6, 9))
    val coords2: DataSet[Coord] = benv.fromElements(Coord(10, 40, 70), Coord(20, 50, 80), Coord(30, 60, 90))
    // 3. Cross the two DataSet[Coord], hinting that the second input is small
    val result1 = coords1.crossWithTiny(coords2)
    // 4. Show the result
    println(result1.collect)
  }

  /**
   * Cross with a hint that the second input is large.
   * Pairs every element of the first input with every element of the second input.
   *
   * @param benv the batch execution environment
   * res68: Seq[(Coord, Coord)] = Buffer(
   * (Coord(1,4,7),Coord(10,40,70)), (Coord(2,5,8),Coord(10,40,70)), (Coord(3,6,9),Coord(10,40,70)),
   * (Coord(1,4,7),Coord(20,50,80)), (Coord(2,5,8),Coord(20,50,80)), (Coord(3,6,9),Coord(20,50,80)),
   * (Coord(1,4,7),Coord(30,60,90)), (Coord(2,5,8),Coord(30,60,90)), (Coord(3,6,9),Coord(30,60,90)))
   */
  def crossWithHuge(benv: ExecutionEnvironment): Unit = {
    // 1. Define the case class
    case class Coord(id: Int, x: Int, y: Int)
    // 2. Define two DataSet[Coord]
    val coords1: DataSet[Coord] = benv.fromElements(Coord(1, 4, 7), Coord(2, 5, 8), Coord(3, 6, 9))
    val coords2: DataSet[Coord] = benv.fromElements(Coord(10, 40, 70), Coord(20, 50, 80), Coord(30, 60, 90))
    // 3. Cross the two DataSet[Coord], hinting that the second input is large
    val result1 = coords1.crossWithHuge(coords2)
    // 4. Show the result
    println(result1.collect)
  }
}

15. Union

Code implementation

import org.apache.flink.api.scala._

/**
 * Takes the union of two DataSets; duplicates are not removed.
 */
object BatchUnionDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    // Create the data sources with fromCollection and fromElements
    val wordDataSet1 = env.fromCollection(List("hadoop", "hive", "flume"))
    val wordDataSet2 = env.fromCollection(List("hadoop", "hive", "spark"))
    val wordDataSet3 = env.fromElements("hadoop")
    val wordDataSet4 = env.fromElements("hadoop")
    wordDataSet1.union(wordDataSet2).print()
    wordDataSet3.union(wordDataSet4).print()
  }
}

16. Rebalance

Code implementation

1: Without rebalance

import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.api.scala.ExecutionEnvironment
import org.apache.flink.api.scala._

/**
 * Rebalancing repartitions a data set evenly to eliminate data skew.
 */
object BatchRebalanceDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val ds = env.generateSequence(0, 100)
    // The filter result is used directly, without calling rebalance()
    val rebalanced = ds.filter(_ > 8)
    // val rebalanced = skewed.rebalance()
    val countsInPartition = rebalanced.map(new RichMapFunction[Long, (Int, Long)] {
      def map(in: Long) = {
        // getRuntimeContext.getIndexOfThisSubtask returns the index of the parallel subtask
        (getRuntimeContext.getIndexOfThisSubtask, in)
      }
    })
    countsInPartition.print()
  }
}

2: Using rebalance

import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.api.scala.ExecutionEnvironment
import org.apache.flink.api.scala._

/**
 * Rebalancing repartitions a data set evenly to eliminate data skew.
 */
object BatchDemoRebalance2 {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    val ds = env.generateSequence(1, 3000)
    // Filtering can leave the partitions unevenly filled
    val skewed = ds.filter(_ > 780)
    // Redistribute the remaining elements evenly across the subtasks
    val rebalanced = skewed.rebalance()
    val countsInPartition = rebalanced.map(new RichMapFunction[Long, (Int, Long)] {
      def map(in: Long) = {
        // getRuntimeContext.getIndexOfThisSubtask returns the index of the parallel subtask
        (getRuntimeContext.getIndexOfThisSubtask, in)
      }
    })
    countsInPartition.print()
  }
}

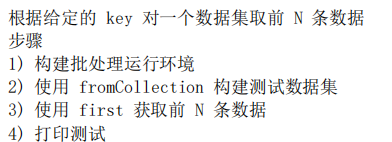
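To illustrate what "even" means here, the sketch below models skewed partitions and a redistribution with plain Scala collections (it is not Flink code). It assumes a simple round-robin assignment, which is one straightforward way to obtain an even spread; `RebalanceSketch` and `roundRobin` are hypothetical names, and the sample partition sizes are invented for the illustration.

```scala
// A plain-Scala model (not Flink code) of evening out skewed partitions.
object RebalanceSketch {
  // Assign the i-th element to partition (i % parallelism).
  def roundRobin[T](data: Seq[T], parallelism: Int): Map[Int, Seq[T]] =
    data.zipWithIndex
      .groupBy { case (_, index) => index % parallelism }
      .map { case (partition, rows) => partition -> rows.map(_._1) }

  def main(args: Array[String]): Unit = {
    // Invented skew: partition 0 holds far more elements than the others.
    val skewed: Map[Int, Seq[Int]] = Map(0 -> (1 to 90), 1 -> (91 to 95), 2 -> (96 to 100))
    println(skewed.map { case (p, rows) => p -> rows.size })

    // Flatten all partitions and spread the elements over 3 partitions again.
    val all = skewed.toSeq.sortBy(_._1).flatMap(_._2)
    val balanced = roundRobin(all, 3)
    println(balanced.map { case (p, rows) => p -> rows.size })
  }
}
```

After the redistribution, the partition sizes differ by at most one element.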

17. First

Code implementation

import org.apache.flink.api.common.operators.Order
import org.apache.flink.api.scala.ExecutionEnvironment

import scala.collection.mutable.ListBuffer

object BatchFirstNDemo {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment
    import org.apache.flink.api.scala._

    val data = ListBuffer[Tuple2[Int, String]]()
    data.append((2, "zs"))
    data.append((4, "ls"))
    data.append((3, "ww"))
    data.append((1, "xw"))
    data.append((1, "aw"))
    data.append((1, "mw"))
    val text = env.fromCollection(data)

    // Take the first 3 records, in insertion order
    text.first(3).print()
    println("==============================")

    // Group by the first column and take the first 2 elements of each group
    text.groupBy(0).first(2).print()
    println("==============================")

    // Group by the first column, sort each group by the second column (ascending),
    // and take the first 2 elements of each group
    text.groupBy(0).sortGroup(1, Order.ASCENDING).first(2).print()
    println("==============================")

    // No grouping: sort by the first column ascending, then by the second column descending,
    // and take the first 3 elements. sortPartition sorts within each partition,
    // so the order is global only when the parallelism is 1.
    text.sortPartition(0, Order.ASCENDING).sortPartition(1, Order.DESCENDING).first(3).print()
  }
}
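For readers who want to check the expected results by hand, here is a minimal sketch that models the grouped and sorted first-n queries with plain Scala collections (it is not Flink code and ignores partitioning entirely). `FirstNSketch`, `firstNPerGroupSorted` and `firstNSorted` are hypothetical names used only for illustration.

```scala
// A plain-Scala model (not Flink code) of "first n" after grouping and sorting.
object FirstNSketch {
  val data: List[(Int, String)] =
    List((2, "zs"), (4, "ls"), (3, "ww"), (1, "xw"), (1, "aw"), (1, "mw"))

  // Group by the first column, sort each group by the second column (ascending),
  // and keep the first n elements of each group.
  def firstNPerGroupSorted(rows: List[(Int, String)], n: Int): Map[Int, List[(Int, String)]] =
    rows.groupBy(_._1).map { case (key, group) => key -> group.sortBy(_._2).take(n) }

  // Sort by the first column ascending, then the second column descending, and keep n.
  def firstNSorted(rows: List[(Int, String)], n: Int): List[(Int, String)] =
    rows.sortWith((a, b) => a._1 < b._1 || (a._1 == b._1 && a._2 > b._2)).take(n)

  def main(args: Array[String]): Unit = {
    println(firstNPerGroupSorted(data, 2))
    println(firstNSorted(data, 3))
  }
}
```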