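The core idea of scale invariance: if the same object is photographed at two different scales, the ratio of the characteristic scales σ at which it is detected in the two images equals the ratio of the scales at which the two images were taken.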
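A short derivation makes this concrete. It assumes the Gaussian scale space L and the scale-normalized Laplacian σ²∇²L that are commonly used to motivate SIFT (these definitions are not introduced in this post). Let the second image be the first one enlarged by a factor s:

```latex
\begin{aligned}
L(\mathbf{x},\sigma) &= G(\cdot,\sigma) * I(\mathbf{x}), \qquad I_2(\mathbf{x}) = I_1(\mathbf{x}/s) \\
\Rightarrow\; L_2(\mathbf{x}, s\sigma) &= L_1(\mathbf{x}/s, \sigma) \\
(s\sigma)^2\,\nabla^2 L_2(\mathbf{x}, s\sigma)
  &= (s\sigma)^2 \cdot \tfrac{1}{s^2}\,(\nabla^2 L_1)(\mathbf{x}/s, \sigma)
   = \sigma^2\,(\nabla^2 L_1)(\mathbf{x}/s, \sigma)
\end{aligned}
```

So if the normalized response of the first image has an extremum at (x0, σ0), the second image has one at (s·x0, s·σ0), and the ratio of the detected scales is σ2/σ1 = s.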

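OpenCV's SIFT and SURF are representative detectors that exploit this scale invariance to find feature points. Their principles are explained in detail in the references listed at the end. I had planned to describe them here, but the referenced blog explains them so well that I could not do better, so I have left that out.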

They are also easy to use. For example, the following code uses SIFT to locate a known object in a scene:

```cpp
// Written for OpenCV 3.x with opencv_contrib (xfeatures2d provides SIFT/SURF).
// In OpenCV >= 4.4, SIFT is also available in the main module as cv::SIFT::create().
#include <iostream>
#include <limits>
#include <vector>
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/features2d.hpp>
#include <opencv2/calib3d.hpp>      // findHomography
#include <opencv2/xfeatures2d.hpp>  // SiftFeatureDetector, SurfFeatureDetector

using namespace cv;
using namespace cv::xfeatures2d;

int main(int argc, char** argv)
{
    Mat img_scene = imread("./box_in_scene.png", IMREAD_GRAYSCALE);
    Mat img_object = imread("./box.png", IMREAD_GRAYSCALE);
    if (img_object.empty() || img_scene.empty())
    {
        std::cout << " --(!) Error reading images " << std::endl;
        return -1;
    }

    //-- Step 1: Detect the keypoints using the SIFT detector (keep the best 400)
    std::vector<KeyPoint> keypoints_object, keypoints_scene;
    Ptr<FeatureDetector> detector = SiftFeatureDetector::create(400);
    detector->detect(img_object, keypoints_object);
    detector->detect(img_scene, keypoints_scene);

    //-- Step 2: Calculate descriptors (feature vectors)
    Ptr<DescriptorExtractor> extractor = SiftDescriptorExtractor::create();
    Mat descriptors_object, descriptors_scene;
    extractor->compute(img_object, keypoints_object, descriptors_object);
    extractor->compute(img_scene, keypoints_scene, descriptors_scene);

    //-- Step 3: Match descriptor vectors using the FLANN matcher
    FlannBasedMatcher matcher;
    std::vector<DMatch> matches;
    matcher.match(descriptors_object, descriptors_scene, matches);

    //-- Quick calculation of max and min distances between keypoints.
    //-- min_dist starts at the largest double because SIFT distances can exceed 100.
    double max_dist = 0;
    double min_dist = std::numeric_limits<double>::max();
    for (size_t i = 0; i < matches.size(); i++)
    {
        double dist = matches[i].distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }

    //-- Keep only "good" matches (i.e. whose distance is less than 3*min_dist)
    std::vector<DMatch> good_matches;
    for (size_t i = 0; i < matches.size(); i++)
    {
        if (matches[i].distance < 3 * min_dist)
            good_matches.push_back(matches[i]);
    }

    Mat img_matches;
    drawMatches(img_object, keypoints_object, img_scene, keypoints_scene,
                good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                std::vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

    //-- findHomography needs at least 4 point pairs
    if (good_matches.size() < 4)
    {
        std::cout << " --(!) Not enough good matches " << std::endl;
        return -1;
    }

    //-- Localize the object
    std::vector<Point2f> obj, scene;
    for (size_t i = 0; i < good_matches.size(); i++)
    {
        //-- Get the keypoints from the good matches
        obj.push_back(keypoints_object[good_matches[i].queryIdx].pt);
        scene.push_back(keypoints_scene[good_matches[i].trainIdx].pt);
    }
    Mat H = findHomography(obj, scene, RANSAC);
    // Mat H = getPerspectiveTransform(obj, scene);  // only valid for exactly 4 point pairs
    if (H.empty())
    {
        std::cout << " --(!) Homography estimation failed " << std::endl;
        return -1;
    }

    //-- Get the corners from image_1 (the object to be "detected")
    std::vector<Point2f> obj_corners(4);
    obj_corners[0] = Point2f(0, 0);
    obj_corners[1] = Point2f((float)img_object.cols, 0);
    obj_corners[2] = Point2f((float)img_object.cols, (float)img_object.rows);
    obj_corners[3] = Point2f(0, (float)img_object.rows);
    std::vector<Point2f> scene_corners(4);
    perspectiveTransform(obj_corners, scene_corners, H);

    //-- Draw lines between the corners (the mapped object in the scene, image_2).
    //-- The scene is drawn to the right of the object in img_matches, hence the offset.
    Point2f offset((float)img_object.cols, 0);
    for (int i = 0; i < 4; i++)
        line(img_matches, scene_corners[i] + offset, scene_corners[(i + 1) % 4] + offset,
             Scalar(0, 255, 0), 4);

    //-- Show detected matches
    imshow("Good Matches & Object detection", img_matches);
    waitKey(0);
    return 0;
}
```

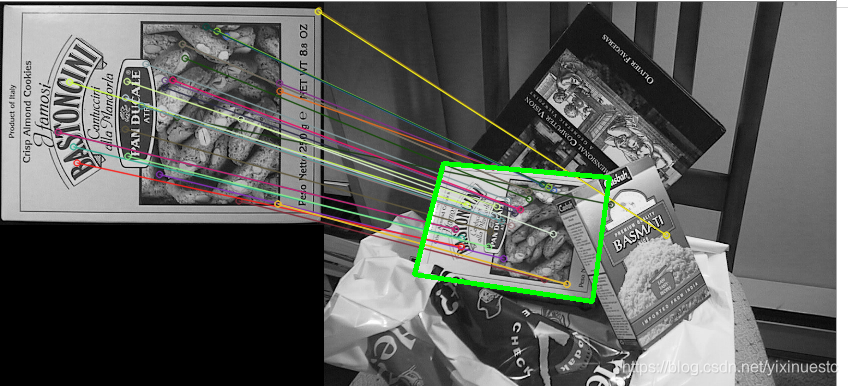

结果如下:
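The `3 * min_dist` filter above depends on a single global minimum, which makes it fragile: if `min_dist` is 0, no match passes `distance < 0`. A commonly used alternative, swapped in here and not part of the original code, is Lowe's ratio test: keep a match only if its best distance is clearly smaller than the second-best one. The sketch below works on plain distance pairs so that it is self-contained; the `TwoNearest` struct and the 0.75 ratio are assumptions for illustration. With OpenCV, the two nearest neighbours would come from `matcher.knnMatch(descriptors_object, descriptors_scene, knn_matches, 2)`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container: distances from one query descriptor to its two
// nearest neighbours in the scene (best <= second_best), plus the best index.
struct TwoNearest {
    float best;
    float second_best;
    int train_idx;
};

// Lowe's ratio test: returns the indices of queries whose best match is
// clearly better than the runner-up. A ratio of about 0.7 to 0.8 is common.
std::vector<std::size_t> ratioTest(const std::vector<TwoNearest>& candidates,
                                   float ratio = 0.75f)
{
    assert(ratio > 0.0f && ratio <= 1.0f);
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        if (candidates[i].best < ratio * candidates[i].second_best)
            kept.push_back(i);
    }
    return kept;
}
```

For instance, with candidate pairs (50, 200), (180, 190) and (0, 120), queries 0 and 2 are kept and the ambiguous query 1 is dropped. The exact match at distance 0 survives, whereas `3 * min_dist` with `min_dist == 0` would reject every match.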

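To use SURF instead, simply replace Sift with Surf in the code (SurfFeatureDetector and SurfDescriptorExtractor). Note that for SURF the argument 400 passed to create() is the Hessian threshold, whereas for SIFT it is the number of best features to keep. Studying how SIFT and SURF work shows that both use the Hessian matrix (SURF to detect keypoints, SIFT to reject keypoints lying along edges). The magnitudes of its eigenvalues measure how strongly the intensity curves along two orthogonal principal directions, so they reflect the edge strength in each direction, and the eigenvector of the dominant eigenvalue points along the gradient, i.e. perpendicular to the edge.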
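The link between the Hessian's eigenvalues, its eigenvectors and the edge direction can be checked with the closed-form eigen-decomposition of a 2 × 2 symmetric matrix [[Dxx, Dxy], [Dxy, Dyy]]. The sketch below is plain C++ with hypothetical names (it is not an OpenCV API). It orders the eigenvalues by absolute value, because Hessian eigenvalues can be negative: across a bright line, for example, the curvature is strongly negative.

```cpp
#include <cassert>
#include <cmath>

// Eigen-decomposition of the symmetric 2x2 matrix [[a, b], [b, c]],
// e.g. a Hessian with a = Dxx, b = Dxy, c = Dyy (hypothetical helper).
struct Eigen2x2 {
    double lambda_major;  // eigenvalue with the larger absolute value
    double lambda_minor;  // eigenvalue with the smaller absolute value
    double vx, vy;        // unit eigenvector of lambda_major
};

Eigen2x2 eigenSymmetric2x2(double a, double b, double c)
{
    const double mean = 0.5 * (a + c);
    const double half_diff = 0.5 * (a - c);
    const double radius = std::sqrt(half_diff * half_diff + b * b);
    const double l_hi = mean + radius;  // algebraically larger eigenvalue
    const double l_lo = mean - radius;  // algebraically smaller eigenvalue

    // Unit eigenvector of l_hi: angle theta with tan(2 * theta) = 2b / (a - c).
    const double theta = 0.5 * std::atan2(2.0 * b, a - c);
    const double cx = std::cos(theta), cy = std::sin(theta);

    if (std::fabs(l_hi) >= std::fabs(l_lo))
        return {l_hi, l_lo, cx, cy};
    // The eigenvector of l_lo is perpendicular to that of l_hi.
    return {l_lo, l_hi, -cy, cx};
}
```

For example, `eigenSymmetric2x2(-2.0, 0.0, 0.0)`, the Hessian of the ridge f(x, y) = -x² running along the y axis, gives a dominant eigenvalue of -2 with eigenvector (±1, 0), pointing across the ridge, and a second eigenvalue of 0 along it.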

This suggests that the same idea can also be used for edge detection.

For example, consider edge detection on the image below. Its overall contrast is low, so let us first try the Canny edge detector.

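```cpp
#include <iostream>
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;

Mat gray;                       // grayscale input, shared with the trackbar callback
int low_threshold = 100;        // current low threshold (trackbar position)
const int max_threshold = 128;  // trackbar maximum

// Trackbar callback: rerun Canny with high threshold = 2 * low threshold
void canny_edge_detector(int, void*)
{
    Mat edge;
    Canny(gray, edge, low_threshold, 2 * low_threshold);
    imshow("canny detect", edge);
}

int main(int argc, char** argv)
{
    Mat src = imread("./demo.png", IMREAD_COLOR);
    if (src.empty())
    {
        std::cout << " --(!) Error reading image " << std::endl;
        return -1;
    }
    imshow("source image", src);
    cvtColor(src, gray, COLOR_BGR2GRAY);

    namedWindow("canny detect");
    createTrackbar("canny threshold", "canny detect", &low_threshold, max_threshold,
                   canny_edge_detector);
    canny_edge_detector(0, 0);
    waitKey(0);
    return 0;
}
```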
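The Canny call above uses a 1:2 ratio between its two thresholds (`2 * low_threshold`). Canny uses them for hysteresis: pixels whose gradient magnitude is above the high threshold are accepted as edges, and pixels between the two thresholds are kept only if they are connected to an accepted pixel. The following self-contained sketch applies just that rule to a gradient-magnitude grid; it assumes 8-connectivity, omits Canny's smoothing, gradient and non-maximum-suppression stages, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hysteresis thresholding as used by Canny. `mag` is a row-major
// gradient-magnitude image of size width x height. Returns 255 for edge
// pixels and 0 otherwise.
std::vector<unsigned char> hysteresis(const std::vector<float>& mag,
                                      int width, int height,
                                      float low, float high)
{
    assert(width > 0 && height > 0 && mag.size() == std::size_t(width) * height);
    assert(low <= high);
    std::vector<unsigned char> edge(mag.size(), 0);
    std::vector<std::pair<int, int>> stack;

    // Seed with strong pixels (above the high threshold).
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            if (mag[y * width + x] > high) {
                edge[y * width + x] = 255;
                stack.push_back({x, y});
            }

    // Grow into weak pixels (above the low threshold) touching an edge pixel.
    while (!stack.empty()) {
        const std::pair<int, int> p = stack.back();
        stack.pop_back();
        for (int dy = -1; dy <= 1; ++dy) {
            for (int dx = -1; dx <= 1; ++dx) {
                const int nx = p.first + dx, ny = p.second + dy;
                if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                const int idx = ny * width + nx;
                if (edge[idx] == 0 && mag[idx] > low) {
                    edge[idx] = 255;
                    stack.push_back({nx, ny});
                }
            }
        }
    }
    return edge;
}
```

With low = 100 and high = 200, a weak pixel survives only through a chain of weak pixels that reaches a strong one. A low-contrast edge with no strong pixel anywhere along it is therefore dropped, and lowering both thresholds brings it back only together with weak clutter.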

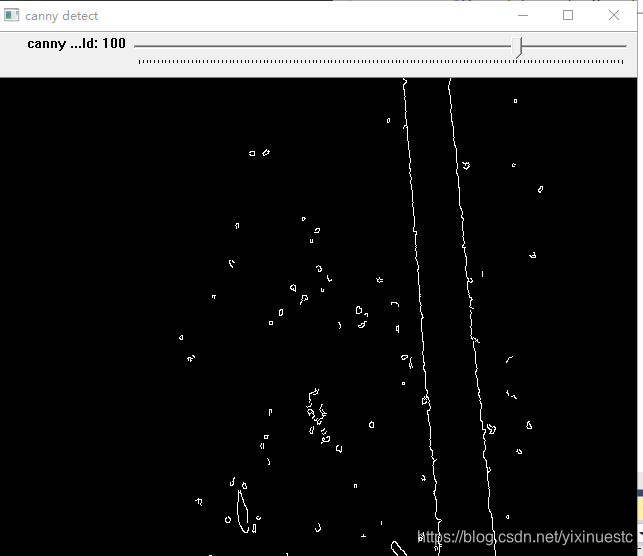

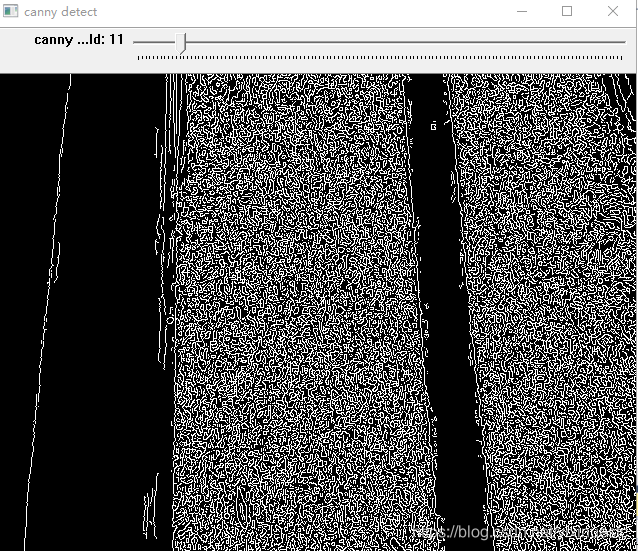

The results are as follows:

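With a low threshold of 100 and a high threshold of 200, the edges of the white line are detected accurately, but the left edge is lost because of its low contrast. With smaller thresholds the left edge becomes faintly visible, but so does a lot of clutter, and it is hard to know what the right threshold would be. Instead, we can use the eigenvalues of the Hessian matrix: a pixel is treated as an edge point when the larger eigenvalue is more than 10 times the smaller one (a fairly loose criterion that can be refined later).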
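The 10× criterion is closely related to the edge test inside SIFT: Lowe rejects keypoints lying on edges when the ratio r of the Hessian's principal curvatures is too large, with r = 10, and checks this without computing eigenvalues via Tr(H)²/Det(H) > (r+1)²/r (OpenCV exposes r as SIFT's `edgeThreshold`, default 10). The sketch below shows both forms side by side; the function names are hypothetical, and the ratio form compares absolute values because Hessian eigenvalues can be negative. The two agree whenever Det(H) > 0, i.e. when both curvatures have the same sign.

```cpp
#include <cassert>
#include <cmath>

// Criterion from the text: edge point if the larger |eigenvalue| exceeds
// r times the smaller one (r = 10 above).
bool isEdgeByRatio(double lambda1, double lambda2, double r = 10.0)
{
    assert(r >= 1.0);
    const double big = std::fmax(std::fabs(lambda1), std::fabs(lambda2));
    const double small = std::fmin(std::fabs(lambda1), std::fabs(lambda2));
    return big > r * small;
}

// SIFT-style test on H = [[dxx, dxy], [dxy, dyy]] without eigenvalues:
// edge-like if det <= 0 (curvatures of opposite sign or zero) or
// tr^2 / det > (r + 1)^2 / r, written without division.
bool siftEdgeTest(double dxx, double dxy, double dyy, double r = 10.0)
{
    assert(r >= 1.0);
    const double tr = dxx + dyy;
    const double det = dxx * dyy - dxy * dxy;
    if (det <= 0.0) return true;
    return tr * tr * r > (r + 1.0) * (r + 1.0) * det;
}
```

One caveat of the pure ratio test: in a nearly flat region both eigenvalues are tiny and noisy, so their ratio can still exceed 10. One possible refinement is to also require a minimum magnitude for the larger eigenvalue.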

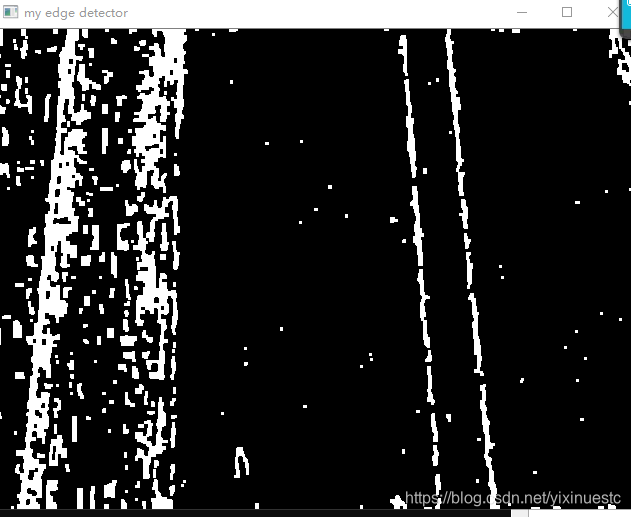

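```cpp
#include <iostream>
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>

using namespace cv;

int main(int argc, char** argv)
{
    Mat src = imread("./demo.png", IMREAD_COLOR);
    if (src.empty())
    {
        std::cout << " --(!) Error reading image " << std::endl;
        return -1;
    }
    imshow("source image", src);
    Mat gray;
    cvtColor(src, gray, COLOR_BGR2GRAY);

    // Gaussian smoothing (applied twice)
    Mat filter;
    GaussianBlur(gray, filter, Size(3, 3), 1.1, 1.1);
    GaussianBlur(filter, filter, Size(3, 3), 1.1, 1.1);

    // cornerEigenValsAndVecs computes, per pixel, the eigenvalues and eigenvectors
    // of the 2x2 matrix of summed first-derivative products over a blockSize x
    // blockSize neighbourhood (the structure tensor, used here in place of the
    // Hessian). Each element of dst is a Vec6f: (lambda1, lambda2, x1, y1, x2, y2).
    Mat dst;
    Mat result = Mat::zeros(src.size(), CV_8UC1);
    cornerEigenValsAndVecs(filter, dst, 3, 3);  // blockSize = 3, ksize = 3
    for (int r = 0; r < dst.rows; r++)
    {
        for (int c = 0; c < dst.cols; c++)
        {
            const Vec6f& e = dst.at<Vec6f>(r, c);
            float lambda1 = e[0];
            float lambda2 = e[1];
            // e[2], e[3]: eigenvector of lambda1; e[4], e[5]: eigenvector of lambda2 (unused)
            // Edge point if one eigenvalue is more than 10 times the other
            if (lambda1 > 10 * lambda2 || lambda2 > 10 * lambda1)
                result.at<uchar>(r, c) = 255;
        }
    }

    // Morphology: erode with a 5x5 kernel, then dilate with a 3x3 kernel,
    // to remove small isolated responses
    Mat k1 = getStructuringElement(MORPH_RECT, Size(3, 3), Point(-1, -1));
    Mat k2 = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));
    morphologyEx(result, result, MORPH_ERODE, k2, Point(-1, -1), 1);
    morphologyEx(result, result, MORPH_DILATE, k1, Point(-1, -1), 1);

    imshow("my edge detector", result);
    waitKey(0);
    return 0;
}
```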

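With this criterion all the edges are retained; applying some further filtering conditions afterwards should make it possible to extract the actual edge information.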
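`cornerEigenValsAndVecs` analyses the matrix of first-derivative products rather than the Hessian itself. To apply the criterion to the Hessian directly, one option is to estimate Dxx, Dyy and Dxy with second-order differences (in OpenCV, `cv::Sobel` with `dx = 2, dy = 0`, `dx = 0, dy = 2` and `dx = 1, dy = 1` would serve the same purpose). The self-contained sketch below does this on a row-major float image. The central-difference kernels, the 10× ratio and the hypothetical `min_strength` floor (added to suppress flat, noisy regions) are assumptions, not the code used for the result above.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Marks pixels whose Hessian eigenvalues satisfy |l_big| > ratio * |l_small|
// and |l_big| > min_strength. `img` is row-major, width x height; the
// one-pixel border is left unmarked. Returns 255 for edge pixels, 0 otherwise.
std::vector<unsigned char> hessianEdgeMap(const std::vector<float>& img,
                                          int width, int height,
                                          float ratio = 10.0f,
                                          float min_strength = 1.0f)
{
    assert(width >= 3 && height >= 3);
    assert(img.size() == std::size_t(width) * std::size_t(height));
    auto at = [&](int x, int y) { return img[std::size_t(y) * width + x]; };
    std::vector<unsigned char> out(img.size(), 0);

    for (int y = 1; y < height - 1; ++y) {
        for (int x = 1; x < width - 1; ++x) {
            // Second-order central differences.
            const float dxx = at(x + 1, y) - 2.0f * at(x, y) + at(x - 1, y);
            const float dyy = at(x, y + 1) - 2.0f * at(x, y) + at(x, y - 1);
            const float dxy = 0.25f * (at(x + 1, y + 1) - at(x + 1, y - 1)
                                     - at(x - 1, y + 1) + at(x - 1, y - 1));
            // Closed-form eigenvalues of [[dxx, dxy], [dxy, dyy]].
            const float mean = 0.5f * (dxx + dyy);
            const float radius = std::sqrt(0.25f * (dxx - dyy) * (dxx - dyy) + dxy * dxy);
            const float l1 = std::fabs(mean + radius);
            const float l2 = std::fabs(mean - radius);
            const float big = std::fmax(l1, l2), small = std::fmin(l1, l2);
            if (big > min_strength && big > ratio * small)
                out[std::size_t(y) * width + x] = 255;
        }
    }
    return out;
}
```

Unlike the version above, a linear intensity ramp has zero second derivatives and is not marked, while a thin bright line is. Because the curvature is large on both sides of such a line, the response is a few pixels wide rather than a single pixel.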

References:

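《OpenCV计算机视觉编程攻略(第2版)》 (Chinese edition of *OpenCV Computer Vision Application Programming Cookbook*, 2nd ed.), Robert Laganière, translated by 相银初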

https://blog.csdn.net/zddblog/article/details/7521424

https://www.jianshu.com/p/db4bbe760d2e

http://www.doc88.com/p-6458994174161.html