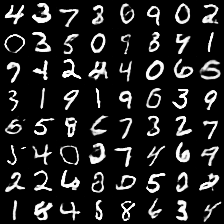

WGAN-GP: generating MNIST

Reference blogs: link, link

Results after 33 epochs
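For reference, here is the objective the scripts below actually minimize, transcribed from their loss code (with $\lambda = 10$, i.e. `LAMBDA`). The critic $D$ uses a sign convention flipped relative to the WGAN-GP paper: it is pushed toward low scores on real data and high scores on generated data. Substituting $f = -D$ recovers the paper's form exactly, so the two conventions are equivalent.

$$
\begin{aligned}
L_D &= \mathbb{E}_{x\sim P_r}\big[D(x)\big] - \mathbb{E}_{\tilde x\sim P_g}\big[D(\tilde x)\big] + \lambda\,\mathbb{E}_{\hat x}\Big[\big(\lVert\nabla_{\hat x} D(\hat x)\rVert_2 - 1\big)^2\Big],\\
L_G &= \mathbb{E}_{\tilde x\sim P_g}\big[D(\tilde x)\big],\\
\hat x &= x + \alpha\,(\tilde x - x),\qquad \alpha\sim U[0,1].
\end{aligned}
$$

The smaller MLP script writes the interpolate as $\epsilon x + (1-\epsilon)\tilde x$, which has the same distribution. It also replaces the squared penalty term with the one-sided, unsquared $\max\big(0, \lVert\nabla_{\hat x} D(\hat x)\rVert_2 - 1\big)$.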

```python
# coding: utf-8
# WGAN-GP on MNIST (TensorFlow 1.x graph API)
import os

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data


def conv2d(name, tensor, ksize, out_dim, stddev=0.01, stride=2, padding='SAME'):
    with tf.variable_scope(name):
        w = tf.get_variable('w', [ksize, ksize, tensor.get_shape()[-1], out_dim], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=stddev))
        var = tf.nn.conv2d(tensor, w, [1, stride, stride, 1], padding=padding)
        b = tf.get_variable('b', [out_dim], tf.float32, initializer=tf.constant_initializer(0.01))
        return tf.nn.bias_add(var, b)


def deconv2d(name, tensor, ksize, outshape, stddev=0.01, stride=2, padding='SAME'):
    with tf.variable_scope(name):
        # conv2d_transpose filters are laid out as [height, width, out_channels, in_channels]
        w = tf.get_variable('w', [ksize, ksize, outshape[-1], tensor.get_shape()[-1]], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=stddev))
        var = tf.nn.conv2d_transpose(tensor, w, outshape, strides=[1, stride, stride, 1], padding=padding)
        b = tf.get_variable('b', [outshape[-1]], tf.float32, initializer=tf.constant_initializer(0.01))
        return tf.nn.bias_add(var, b)


def fully_connected(name, value, output_shape):
    with tf.variable_scope(name, reuse=None):
        shape = value.get_shape().as_list()
        w = tf.get_variable('w', [shape[1], output_shape], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=0.01))
        b = tf.get_variable('b', [output_shape], dtype=tf.float32, initializer=tf.constant_initializer(0.0))
        return tf.matmul(value, w) + b


def relu(name, tensor):
    return tf.nn.relu(tensor, name)


def lrelu(name, x, leak=0.2):
    return tf.maximum(x, leak * x, name=name)


DEPTH = 28
OUTPUT_SIZE = 28
batch_size = 64


def Discriminator(name, inputs, reuse):
    with tf.variable_scope(name, reuse=reuse):
        output = tf.reshape(inputs, [-1, 28, 28, 1])
        output1 = conv2d('d_conv_1', output, ksize=5, out_dim=DEPTH)        # 14x14
        output2 = lrelu('d_lrelu_1', output1)
        output3 = conv2d('d_conv_2', output2, ksize=5, out_dim=2 * DEPTH)   # 7x7
        output4 = lrelu('d_lrelu_2', output3)
        output5 = conv2d('d_conv_3', output4, ksize=5, out_dim=4 * DEPTH)   # 4x4
        output6 = lrelu('d_lrelu_3', output5)
        # output7 = conv2d('d_conv_4', output6, ksize=5, out_dim=8*DEPTH)
        # output8 = lrelu('d_lrelu_4', output7)
        chanel = output6.get_shape().as_list()
        output9 = tf.reshape(output6, [batch_size, chanel[1] * chanel[2] * chanel[3]])
        output0 = fully_connected('d_fc', output9, 1)   # unbounded critic score
        return output0


def generator(name, reuse=False):
    with tf.variable_scope(name, reuse=reuse):
        noise = tf.random_normal([batch_size, 128])      # fresh noise on every run
        output = fully_connected('g_fc_1', noise, 2 * 2 * 8 * DEPTH)
        output = tf.reshape(output, [batch_size, 2, 2, 8 * DEPTH], 'g_conv')
        output = deconv2d('g_deconv_1', output, ksize=5, outshape=[batch_size, 4, 4, 4 * DEPTH])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_2', output, ksize=5, outshape=[batch_size, 7, 7, 2 * DEPTH])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_3', output, ksize=5, outshape=[batch_size, 14, 14, DEPTH])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_4', output, ksize=5, outshape=[batch_size, OUTPUT_SIZE, OUTPUT_SIZE, 1])
        # output = tf.nn.relu(output)
        output = tf.nn.sigmoid(output)   # pixels in [0, 1], the same range as input_data's MNIST images
        return tf.reshape(output, [-1, 784])


def save_images(images, size, path):
    # The generator ends in a sigmoid, so pixels are already in [0, 1]; no rescaling is needed.
    h, w = images.shape[1], images.shape[2]
    merge_img = np.zeros((h * size[0], w * size[1]))
    for idx, image in enumerate(images):
        i = idx % size[1]
        j = idx // size[1]
        merge_img[j * h:(j + 1) * h, i * w:(i + 1) * w] = image[:, :, 0]
    # scipy.misc.imsave was removed in SciPy 1.2, so matplotlib writes the grid instead.
    plt.imsave(path, merge_img, cmap='gray', vmin=0.0, vmax=1.0)


LAMBDA = 10   # gradient-penalty weight
EPOCH = 40


def train():
    # data_dir = os.path.join(os.getcwd(), 'train.tfrecords')  # intended for training on my own dataset
    real_data = tf.placeholder(tf.float32, shape=[batch_size, 784])
    fake_data = generator('gen', reuse=False)
    disc_real = Discriminator('dis_r', real_data, reuse=False)
    disc_fake = Discriminator('dis_r', fake_data, reuse=True)

    # Split variables by scope instead of matching the substrings 'd_' / 'g_'.
    t_vars = tf.trainable_variables()
    d_vars = [var for var in t_vars if var.name.startswith('dis_r/')]
    g_vars = [var for var in t_vars if var.name.startswith('gen/')]

    # Losses: the critic's sign is flipped relative to the paper, consistently for G and D.
    gen_cost = tf.reduce_mean(disc_fake)
    disc_cost = tf.reduce_mean(disc_real) - tf.reduce_mean(disc_fake)

    # Gradient penalty on random interpolates between real and generated samples.
    alpha = tf.random_uniform(shape=[batch_size, 1], minval=0., maxval=1.)
    interpolates = real_data + alpha * (fake_data - real_data)
    gradients = tf.gradients(Discriminator('dis_r', interpolates, reuse=True), [interpolates])[0]
    slopes = tf.sqrt(tf.reduce_sum(tf.square(gradients), axis=1))
    gradient_penalty = tf.reduce_mean((slopes - 1.) ** 2)
    disc_cost += LAMBDA * gradient_penalty

    with tf.variable_scope(tf.get_variable_scope(), reuse=None):
        gen_train_op = tf.train.AdamOptimizer(learning_rate=1e-4, beta1=0.5, beta2=0.9).minimize(
            gen_cost, var_list=g_vars)
        disc_train_op = tf.train.AdamOptimizer(learning_rate=1e-4, beta1=0.5, beta2=0.9).minimize(
            disc_cost, var_list=d_vars)
    saver = tf.train.Saver()

    # os.environ['CUDA_VISIBLE_DEVICES'] = str(0)  # GPU setup
    # config = tf.ConfigProto()
    # config.gpu_options.per_process_gpu_memory_fraction = 0.5  # use 50% of GPU memory
    # sess = tf.InteractiveSession(config=config)
    sess = tf.InteractiveSession()
    sess.run(tf.global_variables_initializer())
    os.makedirs('img', exist_ok=True)
    mnist = input_data.read_data_sets("MNIST_data", one_hot=True)

    for epoch in range(1, EPOCH):
        idxs = 1000
        for iters in range(1, idxs):
            # 5 critic steps per generator step, each on a fresh real batch (as in the paper's Algorithm 1).
            for _ in range(5):
                img = mnist.train.next_batch(batch_size)[0]
                _, d_loss = sess.run([disc_train_op, disc_cost], feed_dict={real_data: img})
            _, g_loss = sess.run([gen_train_op, gen_cost])
            print("[%4d:%4d/%4d] d_loss: %.8f, g_loss: %.8f" % (epoch, iters, idxs, d_loss, g_loss))

        # Reuse the existing generator op; calling generator() here would add new ops every epoch.
        samples = sess.run(fake_data).reshape(batch_size, 28, 28, 1)
        save_images(samples, [8, 8], os.path.join('img', 'sample_%d_epoch.png' % epoch))
        if epoch >= 39:
            checkpoint_path = os.path.join(os.getcwd(), 'my_wgan-gp.ckpt')
            saver.save(sess, checkpoint_path, global_step=epoch)
            print('********* model saved *********')
    sess.close()


if __name__ == '__main__':
    train()
```

```python
# A smaller WGAN-GP with fully connected networks (TensorFlow 1.x)
import os

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
# from scipy import misc  # only needed by the commented-out grid-saving code below

mnist = input_data.read_data_sets('./MNIST_data')
batch_size = 100
width, height = 28, 28
mnist_dim = width * height
random_dim = 10
epochs = 1000000   # each "epoch" here is a single generator update


def my_init(size):
    return tf.random_uniform(size, -0.05, 0.05)


# Critic parameters: 784 -> 128 -> 32 -> 1
D_W1 = tf.Variable(my_init([mnist_dim, 128]))
D_b1 = tf.Variable(tf.zeros([128]))
D_W2 = tf.Variable(my_init([128, 32]))
D_b2 = tf.Variable(tf.zeros([32]))
D_W3 = tf.Variable(my_init([32, 1]))
D_b3 = tf.Variable(tf.zeros([1]))
D_variables = [D_W1, D_b1, D_W2, D_b2, D_W3, D_b3]

# Generator parameters: 10 -> 32 -> 128 -> 784
G_W1 = tf.Variable(my_init([random_dim, 32]))
G_b1 = tf.Variable(tf.zeros([32]))
G_W2 = tf.Variable(my_init([32, 128]))
G_b2 = tf.Variable(tf.zeros([128]))
G_W3 = tf.Variable(my_init([128, mnist_dim]))
G_b3 = tf.Variable(tf.zeros([mnist_dim]))
G_variables = [G_W1, G_b1, G_W2, G_b2, G_W3, G_b3]


def D(X):
    X = tf.nn.relu(tf.matmul(X, D_W1) + D_b1)
    X = tf.nn.relu(tf.matmul(X, D_W2) + D_b2)
    X = tf.matmul(X, D_W3) + D_b3
    return X


def G(X):
    X = tf.nn.relu(tf.matmul(X, G_W1) + G_b1)
    X = tf.nn.relu(tf.matmul(X, G_W2) + G_b2)
    X = tf.nn.sigmoid(tf.matmul(X, G_W3) + G_b3)
    return X


real_X = tf.placeholder(tf.float32, shape=[batch_size, mnist_dim])
random_X = tf.placeholder(tf.float32, shape=[batch_size, random_dim])
random_Y = G(random_X)

# Gradient penalty on random interpolates between real and generated samples.
eps = tf.random_uniform([batch_size, 1], minval=0., maxval=1.)
X_inter = eps * real_X + (1. - eps) * random_Y
grad = tf.gradients(D(X_inter), [X_inter])[0]
grad_norm = tf.sqrt(tf.reduce_sum(grad ** 2, axis=1))
# One-sided, unsquared variant: only gradient norms above 1 are penalized.
grad_pen = 10 * tf.reduce_mean(tf.nn.relu(grad_norm - 1.))

D_loss = tf.reduce_mean(D(real_X)) - tf.reduce_mean(D(random_Y)) + grad_pen
G_loss = tf.reduce_mean(D(random_Y))
D_solver = tf.train.AdamOptimizer(1e-4, 0.5).minimize(D_loss, var_list=D_variables)
G_solver = tf.train.AdamOptimizer(1e-4, 0.5).minimize(G_loss, var_list=G_variables)

sess = tf.Session()
sess.run(tf.global_variables_initializer())
os.makedirs('out', exist_ok=True)

for e in range(epochs):
    for i in range(5):   # 5 critic steps per generator step
        real_batch_X, _ = mnist.train.next_batch(batch_size)
        random_batch_X = np.random.uniform(-1, 1, (batch_size, random_dim))
        _, D_loss_ = sess.run([D_solver, D_loss], feed_dict={real_X: real_batch_X, random_X: random_batch_X})
    random_batch_X = np.random.uniform(-1, 1, (batch_size, random_dim))
    _, G_loss_ = sess.run([G_solver, G_loss], feed_dict={random_X: random_batch_X})
    if e % 10 == 0:
        print('epoch %s, D_loss: %s, G_loss: %s' % (e, D_loss_, G_loss_))
    n_rows = 6
    if e % 1000 == 0:
        check_imgs = sess.run(random_Y, feed_dict={random_X: random_batch_X}).reshape((100, width, height))
        r, c = 10, 10
        cnt = 0
        fig, axs = plt.subplots(r, c)
        for i in range(r):
            for j in range(c):
                axs[i, j].imshow(check_imgs[cnt, :, :], cmap='gray')
                axs[i, j].axis('off')
                cnt += 1
        # Save instead of plt.show(), which would block training until the window is closed.
        fig.savefig('out/%d.png' % (e // 1000))
        plt.close(fig)
        # imgs = np.ones((width*n_rows+5*n_rows+5, height*n_rows+5*n_rows+5))
        # for i in range(n_rows*n_rows):
        #     imgs[5+5*(i%n_rows)+width*(i%n_rows):5+5*(i%n_rows)+width+width*(i%n_rows), 5+5*(i//n_rows)+height*(i//n_rows):5+5*(i//n_rows)+height+height*(i//n_rows)] = check_imgs[i]
        # misc.imsave('out/%s.png' % (e // 1000), imgs)
```
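The gradient penalty is easiest to check on a critic whose input gradient is known in closed form. The sketch below assumes a hypothetical linear critic $D(x) = w^\top x + b$ (not one of the networks above), whose gradient is $w$ at every point, so every interpolate has slope $\lVert w\rVert_2$. It contrasts the two-sided squared penalty of the convolutional script with the one-sided, unsquared variant of the MLP script; the helper names are illustrative only.

```python
import numpy as np

def interpolate(real, fake, rng):
    # One alpha per sample, broadcast across features (like the scripts' [batch_size, 1] alpha).
    alpha = rng.uniform(0.0, 1.0, size=(real.shape[0], 1))
    return real + alpha * (fake - real)

def linear_critic_grad(x, w):
    # Hypothetical linear critic D(x) = x @ w + b: the input gradient is w for every row.
    return np.tile(w, (x.shape[0], 1))

def two_sided_penalty(grads, lam=10.0):
    # lam * mean((||grad||_2 - 1)^2), as in the convolutional script.
    slopes = np.sqrt(np.sum(grads ** 2, axis=1))
    return lam * np.mean((slopes - 1.0) ** 2)

def one_sided_relu_penalty(grads, lam=10.0):
    # lam * mean(relu(||grad||_2 - 1)), as in the MLP script (no square).
    slopes = np.sqrt(np.sum(grads ** 2, axis=1))
    return lam * np.mean(np.maximum(slopes - 1.0, 0.0))

rng = np.random.default_rng(0)
real = rng.uniform(0.0, 1.0, size=(64, 784))
fake = rng.uniform(0.0, 1.0, size=(64, 784))
x_hat = interpolate(real, fake, rng)

w_steep = np.zeros(784)
w_steep[0] = 3.0   # slope 3 at every point
w_flat = np.zeros(784)
w_flat[0] = 0.5    # slope 0.5 at every point

for name, w in [("steep", w_steep), ("flat", w_flat)]:
    g = linear_critic_grad(x_hat, w)
    print(name, two_sided_penalty(g), one_sided_relu_penalty(g))
# steep 40.0 20.0
# flat 2.5 0.0
```

Only the two-sided form penalizes the flat critic: it pulls gradient norms toward 1 from both sides, while the one-sided form leaves slopes below 1 alone.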

WGAN-GP: generating my own data
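As far as its slicing implies, the script in this section expects `data/final37.npy` to have shape `(N, 3, W)` with `W >= 60`. It keeps the first 60 columns, flattens each sample to 180 values for the critic, and, when plotting, treats each of the 3 rows as one branch whose first 30 values are x-coordinates and last 30 are y-coordinates. The sketch below reproduces that round trip on random stand-in data; `N = 500` and `W = 63` are arbitrary assumptions, not properties of the real file.

```python
import numpy as np

rng = np.random.default_rng(0)
# Stand-in for np.load('data/final37.npy'); the sizes 500 and 63 are assumptions.
data = rng.normal(size=(500, 3, 63))
data = data[:, :, 0:60]                            # keep 60 columns per branch, as the script does

batch_size = 100
idx = rng.integers(0, data.shape[0], batch_size)   # random batch, sampled with replacement
img = data[idx].reshape(-1, 180)                   # what is fed to real_data: [batch_size, 180]

# Undo the flattening the way the plotting code does for generated samples.
samples = img.reshape(batch_size, 3, 60, 1)
branches = []
for k in range(samples.shape[1]):                  # 3 branches per sample
    x = samples[0, k, 0:30, 0]                     # first 30 values: x-coordinates
    y = samples[0, k, 30:60, 0]                    # last 30 values: y-coordinates
    branches.append((x, y))

print(img.shape, len(branches), branches[0][0].shape)
# (100, 180) 3 (30,)
```

Because the flattening is a plain C-order reshape, reshaping back to `(batch_size, 3, 60, 1)` recovers each branch exactly, which is what lets the critic see flat vectors while the plots see curves.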

```python
# coding: utf-8
# WGAN-GP on my own data: each sample is a 3 x 60 array (three branches)
import os

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf


def conv2d(name, tensor, ksize, out_dim, stddev=0.01, stride1=1, stride2=1, padding='SAME'):
    with tf.variable_scope(name):
        w = tf.get_variable('w', [ksize, ksize, tensor.get_shape()[-1], out_dim], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=stddev))
        var = tf.nn.conv2d(tensor, w, [1, stride1, stride2, 1], padding=padding)
        b = tf.get_variable('b', [out_dim], tf.float32, initializer=tf.constant_initializer(0.01))
        return tf.nn.bias_add(var, b)


def deconv2d(name, tensor, ksize, outshape, stddev=0.01, stride1=1, stride2=1, padding='SAME'):
    with tf.variable_scope(name):
        w = tf.get_variable('w', [ksize, ksize, outshape[-1], tensor.get_shape()[-1]], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=stddev))
        var = tf.nn.conv2d_transpose(tensor, w, outshape, strides=[1, stride1, stride2, 1], padding=padding)
        b = tf.get_variable('b', [outshape[-1]], tf.float32, initializer=tf.constant_initializer(0.01))
        return tf.nn.bias_add(var, b)


def fully_connected(name, value, output_shape):
    with tf.variable_scope(name, reuse=None):
        shape = value.get_shape().as_list()
        w = tf.get_variable('w', [shape[1], output_shape], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=0.01))
        b = tf.get_variable('b', [output_shape], dtype=tf.float32, initializer=tf.constant_initializer(0.0))
        return tf.matmul(value, w) + b


def relu(name, tensor):
    return tf.nn.relu(tensor, name)


def lrelu(name, x, leak=0.2):
    return tf.maximum(x, leak * x, name=name)


width = 3
height = 60
batch_size = 100
a = 32   # base number of channels
b = 5    # kernel size


def Discriminator(name, inputs, reuse):
    with tf.variable_scope(name, reuse=reuse):
        output = tf.reshape(inputs, [-1, 3, 60, 1])
        # Every convolution uses stride 1, so the spatial size stays 3 x 60.
        output1 = conv2d('d_conv_1', output, ksize=b, out_dim=a)
        output2 = lrelu('d_lrelu_1', output1)
        output3 = conv2d('d_conv_2', output2, ksize=b, out_dim=2 * a)
        output4 = lrelu('d_lrelu_2', output3)
        output5 = conv2d('d_conv_3', output4, ksize=b, out_dim=4 * a)
        output6 = lrelu('d_lrelu_3', output5)            # [batch_size, 3, 60, 4*a]
        # output7 = conv2d('d_conv_4', output6, ksize=5, out_dim=8*width)
        # output8 = lrelu('d_lrelu_4', output7)
        chanel = output6.get_shape().as_list()
        output9 = tf.reshape(output6, [batch_size, chanel[1] * chanel[2] * chanel[3]])
        output0 = fully_connected('d_fc', output9, 1)
        return output0


def generator(name, reuse=False):
    with tf.variable_scope(name, reuse=reuse):
        noise = tf.random_normal([batch_size, 100])
        output = fully_connected('g_fc_1', noise, 3 * 60 * 8 * a)
        output = tf.reshape(output, [batch_size, 3, 60, 8 * a], 'g_conv')
        output = deconv2d('g_deconv_1', output, ksize=b, outshape=[batch_size, 3, 60, 4 * a])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_2', output, ksize=b, outshape=[batch_size, 3, 60, 2 * a])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_3', output, ksize=b, outshape=[batch_size, 3, 60, a])
        output = tf.nn.relu(output)
        output = deconv2d('g_deconv_4', output, ksize=b, outshape=[batch_size, 3, 60, 1])
        # output = tf.nn.relu(output)
        # The sigmoid limits outputs to [0, 1]; the real data should be scaled to that range too.
        output = tf.nn.sigmoid(output)
        return tf.reshape(output, [-1, 180])


LAMBDA = 10   # gradient-penalty weight
EPOCH = 40


def train():
    real_data = tf.placeholder(tf.float32, shape=[batch_size, 180])
    fake_data = generator('gen', reuse=False)
    disc_real = Discriminator('dis_r', real_data, reuse=False)
    disc_fake = Discriminator('dis_r', fake_data, reuse=True)

    t_vars = tf.trainable_variables()
    d_vars = [var for var in t_vars if var.name.startswith('dis_r/')]
    g_vars = [var for var in t_vars if var.name.startswith('gen/')]

    # Losses, with the same sign convention as the MNIST script
    gen_cost = tf.reduce_mean(disc_fake)
    disc_cost = tf.reduce_mean(disc_real) - tf.reduce_mean(disc_fake)
    alpha = tf.random_uniform(shape=[batch_size, 1], minval=0., maxval=1.)
    interpolates = real_data + alpha * (fake_data - real_data)
    gradients = tf.gradients(Discriminator('dis_r', interpolates, reuse=True), [interpolates])[0]
    slopes = tf.sqrt(tf.reduce_sum(tf.square(gradients), axis=1))
    gradient_penalty = tf.reduce_mean((slopes - 1.) ** 2)
    disc_cost += LAMBDA * gradient_penalty

    with tf.variable_scope(tf.get_variable_scope(), reuse=None):
        gen_train_op = tf.train.AdamOptimizer(learning_rate=1e-4, beta1=0.5, beta2=0.9).minimize(
            gen_cost, var_list=g_vars)
        disc_train_op = tf.train.AdamOptimizer(learning_rate=1e-4, beta1=0.5, beta2=0.9).minimize(
            disc_cost, var_list=d_vars)
    saver = tf.train.Saver()

    # os.environ['CUDA_VISIBLE_DEVICES'] = str(0)  # GPU setup
    # config = tf.ConfigProto()
    # config.gpu_options.per_process_gpu_memory_fraction = 0.5  # use 50% of GPU memory
    # sess = tf.InteractiveSession(config=config)
    sess = tf.InteractiveSession()
    sess.run(tf.global_variables_initializer())
    os.makedirs('wgan-gp', exist_ok=True)

    data = np.load('data/final37.npy')
    data = data[:, :, 0:60]   # keep the first 60 columns of each of the 3 rows

    for epoch in range(1, EPOCH):
        idxs = 1000
        for iters in range(1, idxs):
            for _ in range(5):   # fresh random real batch for every critic step
                idx = np.random.randint(0, data.shape[0], batch_size)
                img = data[idx].reshape(-1, 180)
                _, d_loss = sess.run([disc_train_op, disc_cost], feed_dict={real_data: img})
            _, g_loss = sess.run([gen_train_op, gen_cost])
            print("[%4d:%4d/%4d] d_loss: %.8f, g_loss: %.8f" % (epoch, iters, idxs, d_loss, g_loss))

        # Reuse the existing generator op instead of building a new one every epoch.
        gen = sess.run(fake_data).reshape(batch_size, 3, 60, 1)
        r, c = 10, 10
        fig, axs = plt.subplots(r, c)
        cnt = 0
        for i in range(r):
            for j in range(c):
                xy = gen[cnt]   # sample cnt: one branching figure with three branches (rows)
                for k in range(len(xy)):
                    x = xy[k][0:30]    # first 30 values of a branch: x-coordinates
                    y = xy[k][30:60]   # last 30 values: y-coordinates
                    axs[i, j].plot(x, y)
                axs[i, j].axis('off')
                cnt += 1
        fig.savefig("wgan-gp/%d.png" % epoch)
        plt.close(fig)
        if epoch >= 39:
            checkpoint_path = os.path.join(os.getcwd(), 'my_wgan-gp.ckpt')
            saver.save(sess, checkpoint_path, global_step=epoch)
            print('********* model saved *********')
    sess.close()


if __name__ == '__main__':
    train()
```
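With `padding='SAME'`, TensorFlow sizes a convolution's output as ceil(input / stride), and the `output_shape` passed to `tf.nn.conv2d_transpose` must be one that a forward convolution with the same stride maps back to the input size. The helper below is a sketch (not part of either script) that walks the spatial sizes under that rule: the MNIST generator's 2→4→7→14→28 chain, the MNIST critic's 28→14→7→4 chain, and the stride-1 custom-data networks that stay at 3×60. It also counts the custom-data generator's first fully connected layer, which maps 100 noise values to 3·60·8·32 units.

```python
import math

def same_conv_out(size, stride):
    # TensorFlow 'SAME' padding: output = ceil(input / stride)
    return math.ceil(size / stride)

def valid_transpose_target(in_size, out_size, stride):
    # A transposed conv to out_size is consistent if the forward conv maps out_size back to in_size.
    return same_conv_out(out_size, stride) == in_size

# MNIST generator (stride 2): 2x2 -> 4 -> 7 -> 14 -> 28
gen_chain = [2, 4, 7, 14, 28]
gen_ok = all(valid_transpose_target(a, b, 2) for a, b in zip(gen_chain, gen_chain[1:]))

# MNIST critic (three stride-2 convs): 28 -> 14 -> 7 -> 4
critic_chain = [28]
for _ in range(3):
    critic_chain.append(same_conv_out(critic_chain[-1], 2))
critic_features = critic_chain[-1] ** 2 * 4 * 28   # flattened input to d_fc (4*DEPTH channels)

# Custom-data networks use stride 1, so 3 x 60 never changes.
custom_hw = (same_conv_out(3, 1), same_conv_out(60, 1))
custom_critic_features = 3 * 60 * 4 * 32            # 4*a channels after d_conv_3
g_fc_1_params = 100 * (3 * 60 * 8 * 32) + 3 * 60 * 8 * 32   # weights + biases of g_fc_1

print(gen_ok, critic_chain, critic_features, custom_hw, custom_critic_features, g_fc_1_params)
# True [28, 14, 7, 4] 1792 (3, 60) 23040 4654080
```

The odd size 7 is why the generator spells out every `outshape`: a stride-2 transposed convolution from 4×4 could legitimately produce 7×7 or 8×8, since both map back to 4 under the ceil rule. The parameter count also shows that the custom-data generator's first layer alone holds about 4.65 million parameters.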