Paper: https://arxiv.org/pdf/2103.01077v1.pdf

Code: https://github.com/AmingWu/UP-FSOD

Abstract

Few-shot object detection (FSOD) aims to strengthen the performance of novel object detection with few labeled samples. To alleviate the constraint of few samples, enhancing the generalization ability of learned features for novel objects plays a key role. Thus, the feature learning process of FSOD should focus more on intrinsic object characteristics, which are invariant under different visual changes and therefore helpful for feature generalization. Unlike previous attempts of the meta-learning paradigm, in this paper, we explore how to enhance object features with intrinsic characteristics that are universal across different object categories. We propose a new prototype, namely the universal prototype, that is learned from all object categories. Besides the advantage of characterizing invariant characteristics, the universal prototypes alleviate the impact of unbalanced object categories. After enhancing object features with the universal prototypes, we impose a consistency loss to maximize the agreement between the enhanced features and the original ones, which is beneficial for learning invariant object characteristics. Thus, we develop a new framework of few-shot object detection with universal prototypes (FSODup) that owns the merit of feature generalization towards novel objects. Experimental results on PASCAL VOC and MS COCO show the effectiveness of FSODup. Particularly, for the 1-shot case of VOC Split2, FSODup outperforms the baseline by 6.8% in terms of mAP.

1 Introduction

Recently, owing to the success of deep learning, great progress has been made on object detection [26, 11, 14, 12]. However, the outstanding performance [25, 21, 3, 18] depends on abundant annotated objects in training images for each category. As a challenging task, few-shot object detection (FSOD) [16, 35] mainly aims to improve the detection performance for novel objects that belong to certain categories but appear rarely in the annotated training images.

The main challenge of FSOD lies in how to learn generalized object features from both abundant samples in base categories and few samples in novel categories, which can simultaneously describe invariant object characteristics and alleviate the impact of unbalanced categories. Recently, meta-learning strategy [28, 30, 9] has been utilized in [38, 37, 35, 8] to adapt representation ability from base object categories to novel categories. However, the weak performance compared to basic fine-tuning methods [33, 36, 4, 5] shows the meta-learning technique fails to improve the generalization ability of object feature learning.

One possible reason is that the adaptation process in meta-learning mechanism could not capture the invariant characteristics across categories sufficiently. The invariance, i.e., invariant under different visual changes like textual variances or environmental noises, is always associated with the intrinsical object characteristics. As demonstrated in [23], the models that could extract invariant representations often generalize better than their non-invariant counterparts. Therefore, in this paper, we explore how to enhance the generalization ability of object feature learning with the invariant object characteristics.

We devise universal prototypes (as shown in Fig. 1) to learn the invariant object characteristics. Different from the prototypes that are separately learned from each category [28, 20, 32], the proposed universal prototypes are learned from all object categories. The benefits are two-fold. On the one hand, prototypes from all categories capture rich information not only from different object categories but also from contexts of images. On the other hand, the universal prototypes reduce the impact of data-imbalance across different categories. Moreover, via fine-tuning, the universal prototypes can be effectively adapted to data-scarce novel categories. To this end, we develop a new framework of few-shot object detection with universal prototypes (FSODup). Particularly, we utilize a soft-attention of the learned universal prototypes to enhance the object features. Such a universal-prototype enhancement (i.e., each element of the enhanced features is a combination of prototypes) aims to simultaneously improve invariance and retain the semantic information of original object features. Here we employ a consistency loss to enable the maximum agreement between the enhanced and original object features. During training, we first train the model on data-abundant base categories. Then, the model is fine-tuned on a reconstructed training set that contains a small number of balanced training samples from both base and novel object categories. Experimental results on two benchmarks and extensive visualization analyses demonstrate the effectiveness of the proposed method. Our code will be available at https://github.com/AmingWu/UP-FSOD.

Figure 1. Universal prototypes (colorful stars) are learned from all object categories and are not specific to certain object categories. Universal prototypes capture different intrinsic object characteristics via latent projection, e.g., the prototype F incorporates object characteristics of ‘car’ and ‘motorbike’.

The contributions are summarized as follows: (1) Towards FSOD, we devise a dedicated prototype and a new framework with universal-prototype enhancement. (2) We successfully demonstrate that, after fine-tuning with universal-prototype enhanced features, object detectors effectively adapt to novel categories. (3) We obtain improved performance over the baselines on PASCAL VOC [7, 6] and MS COCO [19]. Enhancing invariance and generalization with the learned universal prototypes is empirically verified. Moreover, extensive visualization analyses also show that universal prototypes are capable of enhancing object characteristics, which is beneficial for FSOD.

2 Related Work

Few-shot image classification. Few-shot image classification [31, 24, 29, 13, 10] targets to recognize novel categories with only few samples in each category. Meta learning is a widely used method to solve few-shot classification [22], which aims to leverage task-level meta knowledge to help the model adapt to new tasks with few labeled samples. Vinyals et al. [31] and Snell et al. [28] employed the meta-learning policy to learn the similarity metric that could be transferrable across different tasks. Particularly, based on the policy of meta-learning, prototypical network [28] is proposed to take the center of congener support samples’ embeddings as the prototype of this category. The classification can be performed by computing distances between the representations of samples and prototype of each category. However, when the data is unbalanced or scarce, the learned prototypes could not represent the information of each category accurately, which affects the classification performance. Besides, during meta-learning, Gidaris et al. [10] and Wang et al. [34] introduced new parameters to promote the adaptation to novel tasks. However, these meta-learning methods for few-shot image classification could not be directly applied to object detection that requires localizing and recognizing objects.

Few-shot object detection. Most existing methods employ meta-learning [8, 17] or fine-tuning [39, 36] strategies to solve FSOD. Specifically, Wang et al. [35] developed a meta-learning based framework to leverage meta-level knowledge from data-abundant base categories to learn a detector for novel categories. Yan et al. [38] further extended Faster R-CNN [26] by performing meta-learning over RoI (Region-of-Interest) features. However, the weak performance compared to basic fine-tuning methods shows that meta-learning based methods fail to improve the generalization ability of object detectors. Among fine-tuning methods, starting from a model pre-trained on the base categories, Wang et al. [33] employed a two-stage fine-tuning process, i.e., fine-tuning the last layers of the detector while freezing its other parameters, to make the object predictor adapt to novel categories. Wu et al. [36] proposed a method of multi-scale positive sample refinement to handle the problem of scale variations in object detection, which is similar to data augmentation [40].

Different from previous methods for FSOD, in this paper, we propose to learn universal prototypes from all object categories. And we develop a new framework of FSOD with universal-prototype enhancement. Experimental results and visualization analysis demonstrate the effectiveness of universal-prototype enhancement.

3 FSOD with Universal Prototypes

In this paper, we follow the same FSOD settings introduced in Kang et al. [16]. Annotated detection data are divided into a set of base categories that have abundant instances and a set of novel categories that have only few (usually less than 30) instances per category. The main purpose is to improve the generalization ability of detectors.

3.1. Learning of Universal Prototypes

Recently, many methods [28, 20, 32] construct a prototype for each category to solve few-shot image classification. Though prototypes reflecting category information have been demonstrated to be effective for image classification, they could not be applied to FSOD. The reason may be that these category-specific prototypes represent image-level information and fail to capture object characteristics that are helpful for localizing and recognizing objects. Different from category-specific prototypes, based on all object categories, we attempt to learn universal prototypes that are beneficial for capturing intrinsic object characteristics that are invariant under different visual changes.

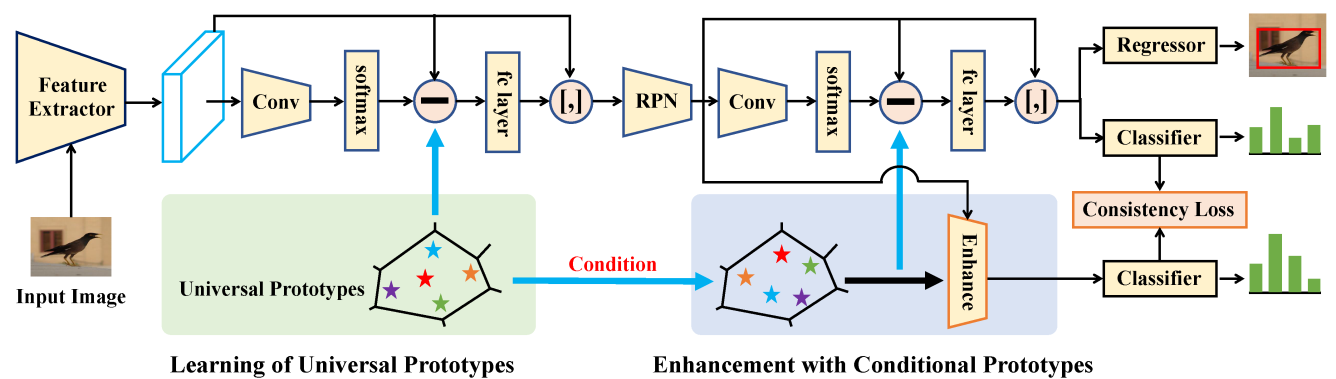

Figure 2. The architecture of few-shot object detection with universal-prototype enhancement. ‘Conv’ and ‘fc layer’ indicate convolution and fully-connected layers, respectively. The colorful stars are the learned universal prototypes. ‘⊖’ and ‘[,]’ denote the residual operation and the concatenation operation, respectively. We focus on improving the generalization of detectors via learning invariant object characteristics. Firstly, universal prototypes are learned from all object categories. With the output of RPN (Region Proposal Network), we obtain the conditional prototypes via a conditional transformation of universal prototypes. Next, the enhanced object features are calculated based on conditional prototypes. Finally, a consistency loss is computed between the enhanced and original features.

Concretely, the left part of Fig. 2 shows the learning process of universal prototypes. We adopt the widely used Faster R-CNN [26], a two-stage object detector, as the base detection model. Given an input image, we first employ the feature extractor, e.g., ResNet [15], to extract corresponding features $F \in \mathbb{R}^{w \times h \times m}$, where $w$, $h$, and $m$ denote the width, height, and number of channels, respectively. Then, the universal prototypes are defined as $C = \{c_i \in \mathbb{R}^m,\ i = 1, \ldots, D\}$. Next, based on the prototypical set $C$, we calculate descriptors that represent image-level information.

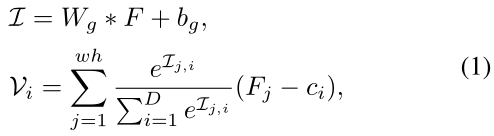

$$V_i = \sum_{j=1}^{w \times h} \frac{e^{W_i \cdot F_j + B_i}}{\sum_{l=1}^{D} e^{W_l \cdot F_j + B_l}} \, (F_j - c_i), \quad i = 1, \ldots, D, \tag{1}$$

where $F_j \in \mathbb{R}^m$ is the feature at the $j$-th spatial position, and $W \in \mathbb{R}^{1 \times 1 \times m \times D}$ and $B \in \mathbb{R}^{D}$ are convolutional parameters. $V \in \mathbb{R}^{D \times m}$ represents the output descriptors. ‘$F_j - c_i$’ indicates the residual operation, by which the visual features can be assigned to the corresponding prototype. Finally, we take the concatenated result of $F$ and $V$ as the input of the RPN module.

$$P = \mathrm{RPN}\big(\Psi([F,\ \hat{V} W_v + b_v])\big), \tag{2}$$

where $\hat{V} \in \mathbb{R}^{1 \times Dm}$ is the reshaped result of $V$. Meanwhile, $W_v \in \mathbb{R}^{Dm \times m}$ and $b_v \in \mathbb{R}^{m}$ are parameters of the fully-connected layer. ‘[,]’ is the concatenation operation. By the concatenation operation, the descriptors $V$ can be fused into the original features $F$, which enhances the representation ability of $F$. $\Psi$ consists of two convolutional layers with ReLU activation and is used to transform the concatenated result. Finally, $P \in \mathbb{R}^{n \times s \times s \times m}$ is the output of RPN with RoI Pooling [26, 14], where $n$ and $s$ indicate the number of proposals and the size of proposals, respectively. The feature dimension of $P$ is the same as that of $F$.
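The descriptor computation of Eq. (1) can be illustrated in plain Python. This is a minimal sketch, assuming the assignment weights are a softmax over the outputs of a 1×1 convolution; the names `prototype_descriptors`, `weights`, and `biases` are illustrative and are not taken from the paper or its released code.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def prototype_descriptors(features, prototypes, weights, biases):
    """Soft-assign every feature vector to the D prototypes and
    accumulate the weighted residuals (feature minus prototype).

    features:   list of m-dim vectors, one per spatial position
    prototypes: list of D m-dim vectors
    weights:    D x m matrix and biases: length-D vector (assumed 1x1 conv)
    returns:    D x m nested list of descriptors
    """
    num_protos, dim = len(prototypes), len(prototypes[0])
    descriptors = [[0.0] * dim for _ in range(num_protos)]
    for feat in features:
        # One logit per prototype for this spatial position.
        logits = [
            sum(w * x for w, x in zip(weights[i], feat)) + biases[i]
            for i in range(num_protos)
        ]
        assign = softmax(logits)
        for i in range(num_protos):
            for d in range(dim):
                descriptors[i][d] += assign[i] * (feat[d] - prototypes[i][d])
    return descriptors


# Toy example: two positions, two prototypes, zero conv parameters,
# so every position is assigned to both prototypes with weight 0.5.
feats = [[1.0, 0.0], [0.0, 1.0]]
protos = [[0.0, 0.0], [1.0, 1.0]]
v = prototype_descriptors(feats, protos, [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
```

The same routine would apply to the object-level descriptors computed from proposal features, with the conditional prototypes in place of the universal ones.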

3.2. Enhancement of Object Features

As shown in the right part of Fig. 2, we first compute conditional prototypes based on the universal prototypes C. Then, we conduct enhancement of object features with the conditional prototypes.

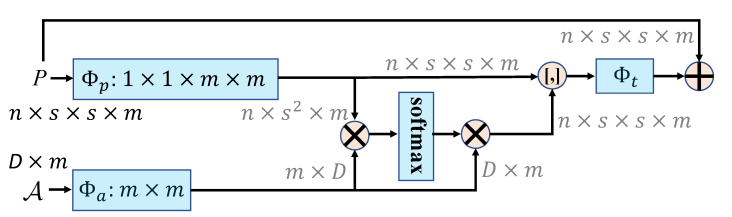

3.2.1 The Computation of Conditional Prototypes

Since the computation of Eq. (1) is based on the extracted features that represent the whole input image, the universal prototypes $C$ mainly reflect image-level information. Here, the image-level information includes object-level information and other associated information about image content. In contrast, after RPN, the proposal features $P$ mainly contain object-level information. Directly using the universal prototypes $C$ may not accurately represent object-level information. Thus, we apply an affine transformation to move $C$ towards the space of object-level features.

$$A = \lambda \odot C + \beta, \tag{3}$$

where $\lambda$ and $\beta$ are the transformed parameters and $\odot$ is the element-wise product. Finally, $A \in \mathbb{R}^{D \times m}$ represents the conditional prototypes. Next, we employ the same processes as Eq. (1) to generate object-level descriptors. The processes are shown as follows:

$$O_{ki} = \sum_{j=1}^{s \times s} \frac{e^{W^{c}_i \cdot P_{kj} + B^{c}_i}}{\sum_{l=1}^{D} e^{W^{c}_l \cdot P_{kj} + B^{c}_l}} \, (P_{kj} - a_i), \tag{4}$$

where $k = 1, \ldots, n$. $W^{c}$ and $B^{c}$ are convolutional parameters. $a_i \in \mathbb{R}^{m}$ is the $i$-th conditional prototype of $A$. $O \in \mathbb{R}^{n \times D \times m}$ indicates the output descriptors. Finally, we take the concatenated result of $P$ and $O$ as the input of the classifier.

$$y = \mathrm{Clf}\big(\Psi_c([P,\ \hat{O} W_o + b_o])\big), \tag{5}$$

where $\hat{O} \in \mathbb{R}^{n \times Dm}$ is the reshaped result of $O$. Clf denotes the classifier. Meanwhile, $W_o$ and $b_o$ are parameters of the fully-connected layer. $\Psi_c$ consists of two fully-connected layers and outputs a matrix with the dimension $n \times 2m$. Finally, $y$ is the predicted probability. In the experiment, we find that employing the descriptors $O$ generated based on the conditional prototypes improves the performance of FSOD, which shows the effectiveness of conditional prototypes.
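The affine transformation that turns universal prototypes into conditional prototypes can be sketched as follows. This is a minimal sketch, assuming the scale and shift have the same shape as the prototype matrix; `conditional_prototypes`, `scale`, and `shift` are hypothetical names, and how the scale and shift are produced is not specified here.

```python
def conditional_prototypes(prototypes, scale, shift):
    """Element-wise affine transformation: A = scale * C + shift.

    prototypes, scale, shift: D x m nested lists of floats (same shape).
    """
    return [
        [s * c + t for c, s, t in zip(c_row, s_row, t_row)]
        for c_row, s_row, t_row in zip(prototypes, scale, shift)
    ]


universal = [[1.0, 2.0], [3.0, 4.0]]   # D = 2 prototypes, m = 2 channels
scale = [[2.0, 2.0], [0.5, 0.5]]       # hypothetical learned scale
shift = [[0.0, 1.0], [1.0, 0.0]]       # hypothetical learned shift
conditional = conditional_prototypes(universal, scale, shift)
print(conditional)  # [[2.0, 5.0], [2.5, 2.0]]
```

With a scale of ones and a shift of zeros the conditional prototypes reduce to the universal ones, which makes the transformation easy to sanity-check.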

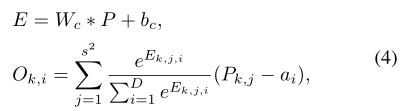

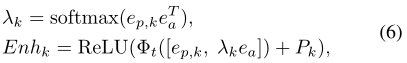

3.2.2 Enhancement with Conditional Prototypes

In order to improve the generalization of detectors, we explore utilizing conditional prototypes to enhance object features. Specifically, Fig. 3 shows the enhancement details. For proposal features $P \in \mathbb{R}^{n \times s \times s \times m}$ and conditional prototypes $A \in \mathbb{R}^{D \times m}$, we separately employ a convolutional layer $\Phi_p$ and a fully-connected layer $\Phi_a$ to project $P$ and $A$ into an embedding space, i.e., $e_p = \Phi_p(P)$ and $e_a = \Phi_a(A)$. Then, based on each element of $e_p$, we calculate the soft-attention of $e_a$ to obtain enhancement of object features.

Figure 3. Enhancement of object features. Based on each element of the RPN output $P$, we calculate the soft-attention of the conditional prototypes $A$ to generate enhanced features. Each element of the enhanced features is a combination of conditional prototypes, which retains the semantic information of $P$.

[Eq. (6)]

where $k = 1, \ldots, n$ indexes the components of $e_p$, and the attention weights are computed between the $k$-th component of $e_p$ and $e_a$. $\Phi_t$ consists of two convolutional layers with ReLU activation, and its output dimension is $m$. $P_k$ is the $k$-th component of $P$. Finally, $\mathrm{Enh}$ is the enhanced object features, which fuses the information of conditional prototypes and is helpful for improving the generalization on novel objects. Next, $\mathrm{Enh}$ is taken as the input of the classifier to output the predicted probability.

[Eq. (7)]

where $y_{enh}$ is the predicted probability. Besides, Eq. (5) and Eq. (7) share the same classifier. In the experiment, we find that the enhanced operations (Eq. (6) and (7)) are beneficial for FSOD, which further indicates that the learned prototypes contain object-level information.
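The soft-attention step described above can be illustrated with a small example. This is a sketch under stated assumptions, not the exact form of Eq. (6): it assumes dot-product similarity followed by a softmax over prototypes, and it omits the transformation $\Phi_t$. The function name `soft_attention_enhance` is hypothetical.

```python
import math


def soft_attention_enhance(proposal_embeds, prototype_embeds):
    """For each proposal embedding, return a softmax-weighted
    combination of the prototype embeddings."""
    dim = len(prototype_embeds[0])
    enhanced = []
    for query in proposal_embeds:
        # Dot-product similarity between this element and every prototype.
        scores = [
            sum(q * p for q, p in zip(query, proto))
            for proto in prototype_embeds
        ]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        attn = [e / total for e in exps]
        # Each output element is a convex combination of prototypes.
        enhanced.append([
            sum(a * proto[d] for a, proto in zip(attn, prototype_embeds))
            for d in range(dim)
        ])
    return enhanced


protos = [[1.0, 0.0], [0.0, 1.0]]
queries = [[0.0, 0.0], [10.0, 0.0]]
out = soft_attention_enhance(queries, protos)
```

A query that is equally similar to all prototypes receives their average, while a query aligned with one prototype is mapped almost entirely onto it, which matches the caption's description of enhanced features as combinations of prototypes.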

3.3. Two-stage Fine-tuning Approach

Many semi-supervised learning methods [2, 1] rely on a consistency loss to enforce that the model output remains unchanged when the input is perturbed. Inspired by this idea, to learn invariant object characteristics, we compute the consistency loss between the prediction $y$ from original features (see Eq. (5)) and the prediction $y_{enh}$ from enhanced features. Particularly, the KL-divergence loss is employed to enforce consistent predictions, i.e., $L_{con} = H(y, y_{enh})$. The joint training loss is defined as follows:

$$L = L_{rpn} + L_{cls} + L_{loc} + \gamma L_{con}, \tag{8}$$

where $L_{rpn}$ is the loss of the RPN to distinguish foreground from background and refine bounding-box anchors. $L_{cls}$ and $L_{loc}$ indicate the classification loss and the box regression loss, respectively. $\gamma$ is a hyper-parameter.
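The consistency term and the joint loss can be sketched as below. This is a minimal sketch with two assumptions that the text does not settle: the KL divergence is taken from the original prediction to the enhanced one, and $\gamma$ weights only the consistency term. The function names are illustrative.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists.
    eps guards against log(0); the direction p -> q is an assumption."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def joint_loss(l_rpn, l_cls, l_loc, l_con, gamma=1.0):
    """Sum of the detection losses plus the weighted consistency loss."""
    return l_rpn + l_cls + l_loc + gamma * l_con


y = [0.5, 0.5]       # prediction from original features (toy values)
y_enh = [0.9, 0.1]   # prediction from enhanced features (toy values)
l_con = kl_divergence(y, y_enh)
total = joint_loss(1.0, 2.0, 3.0, l_con, gamma=1.0)
```

The consistency term vanishes when both predictions agree and grows as they diverge, so minimizing it pushes the enhanced and original features towards the same class probabilities.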

During training, we employ a two-stage fine-tuning approach (as shown in Fig. 4) to optimize the FSODup model. Concretely, in the base training stage, we employ the joint loss $L$ to optimize the entire model based on the data-abundant base classes. After the base training stage, only the last fully-connected layer (for classification) of the detection head is replaced. The new classification layer is randomly initialized. Besides, during the few-shot fine-tuning stage, different from the work [33], none of the network layers is frozen. We still employ the loss $L$ to fine-tune the entire model based on a balanced training set consisting of a few samples from both base and novel categories.
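Building the balanced fine-tuning set can be sketched as follows. This is a hypothetical helper, assuming annotations are available as (image id, category) pairs and that at most K instances are kept per category; published benchmarks use fixed splits rather than fresh random samples, so the sampling here is only illustrative.

```python
import random
from collections import defaultdict


def build_balanced_kshot_set(annotations, k, seed=0):
    """Keep at most k object instances per category.

    annotations: list of (image_id, category) pairs, one per instance.
    """
    by_category = defaultdict(list)
    for image_id, category in annotations:
        by_category[category].append((image_id, category))
    rng = random.Random(seed)
    subset = []
    for category in sorted(by_category):
        instances = by_category[category]
        rng.shuffle(instances)          # random choice of the k shots
        subset.extend(instances[:k])
    return subset


# Toy data: an abundant base class and a scarce novel class.
anns = [(i, "dog") for i in range(50)] + [(100 + i, "bird") for i in range(3)]
subset = build_balanced_kshot_set(anns, k=2)
```

Capping base categories at the same K as novel categories is what keeps the fine-tuning set balanced despite the abundance of base-class annotations.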

3.4. Discussion

In this section, we further discuss universal prototypes for few-shot object detection.

Though prototypes have been demonstrated to be effective for few-shot image classification [28, 31], it is unclear how to build prototypes for FSOD [16]. (1) If we follow few-shot image classification and construct prototypes for each category, the computational costs increase for the case of a large number of object categories. Meanwhile, due to the unbalanced object categories, the constructed prototypes may not accurately reflect category information. (2) Related to the above, detectors for certain object category can be affected by co-appearing objects in one image, and thus the quality of the constructed prototype for such category may be burdened. (3) More importantly, since the number of object categories in the stage of the base training is different from that of the few-shot fine-tuning, constructing a prototype for each object category makes it impossible to align the prototypes between the base training and the few-shot fine-tuning. That is to say, the prototypes pretrained on base categories cannot be directly utilized in the fine-tuning stage. Therefore, for fine-tuning based methods, it is difficult to build a prototype for each category.

To solve FSOD, we propose to learn universal prototypes from all object categories. The universal prototypes are not specific to certain object categories and can be effectively adapted to novel categories via fine-tuning. In the experiments, we find that the universal prototypes are helpful for characterizing the regional information of different object categories. Meanwhile, with the help of universal-prototype enhancement, the performance of few-shot detection can be significantly improved.

4. Experiments

We first evaluate our method on PASCAL VOC [7, 6] and MS COCO [19]. For a fair comparison, we use the settings in [16, 38] to construct few-shot detection datasets. Concretely, for PASCAL VOC, the 20 classes are randomly divided into 5 novel classes and 15 base classes. Here, we follow the work [16] to use the same three class splits, where only K object instances are available for each novel category and K is set to 1, 2, 3, 5, 10. For MS COCO, the 20 categories overlapped with PASCAL VOC are used as novel categories with K = 10, 30. And the remaining 60 categories are taken as the base categories.

Implementation Details. Faster R-CNN [26] is used as the base detector. Our backbone is ResNet-101 [15] with the RoI Align [14] layer. We use the weights pre-trained on ImageNet [27] for initialization. For FSOD, the number of universal prototypes (see Eq. (1)) is set to 24. All these prototypes are randomly initialized. Next, the model is trained with a batch size of 2 on 2 GPUs, 1 image per GPU. Meanwhile, to alleviate the impact of the scale issue, we employ the positive sample refinement [36]. The hyper-parameter $\gamma$ (see Eq. (8)) is set to 1.0. All models are trained using the SGD optimizer with a momentum of 0.9 and a weight decay of 0.0001. Finally, during inference, we take the output $y$ of Eq. (5) as the classification result.
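The reported settings can be collected in a small configuration object. This is only an illustrative sketch: the class and field names are hypothetical and do not correspond to the released code, and values not stated above (such as the learning rate and schedule) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyper-parameters stated in the implementation details."""
    backbone: str = "ResNet-101"
    num_universal_prototypes: int = 24
    gamma: float = 1.0            # hyper-parameter of the joint loss
    num_gpus: int = 2
    images_per_gpu: int = 1
    optimizer: str = "SGD"
    momentum: float = 0.9
    weight_decay: float = 1e-4

    @property
    def batch_size(self) -> int:
        """Total batch size across all GPUs."""
        return self.num_gpus * self.images_per_gpu


config = TrainConfig()
```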

4.1. Performance Analysis of Few-Shot Detection

We compare FSODup with two baseline methods, i.e., TFA [33] and MPSR [36]. Both approaches use the two-stage fine-tuning method to solve FSOD.

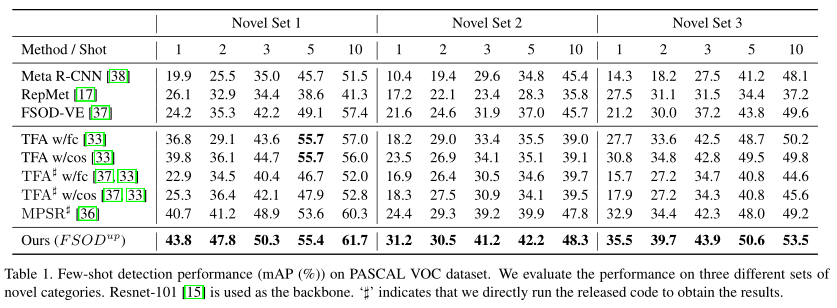

Results on PASCAL VOC. Table 1 shows the results on PASCAL VOC. As the number of samples per novel category decreases, the performance degrades significantly. This indicates that addressing the few-shot problem is crucial to improve the generalization of detectors. We can see that the proposed FSODup method consistently outperforms the two baseline methods. This shows that employing universal-prototype enhancement is helpful for learning invariant object characteristics and thus improves performance. Meanwhile, this also indicates that focusing on invariance plays a key role in solving FSOD.

In Fig. 5, we show the detection results of MPSR [36] and our method. ‘bird’ and ‘bus’ belong to the novel categories. We can see that our method can successfully detect objects existing in images. This further shows that the proposed universal-prototype enhancement is helpful for capturing invariant object characteristics, which improves the accuracy of detection.

Results on MS COCO. Table 2 shows the few-shot detection performance on the MS COCO dataset. Our method consistently outperforms the two baseline methods, i.e., TFA [33] and MPSR [36]. This further demonstrates the effectiveness of the proposed universal-prototype enhancement. Besides, FSOD-VE [37] is a recently proposed meta-learning based method, which combines FSOD with few-shot viewpoint estimation and follows Meta R-CNN [38] to optimize detectors. Though FSOD-VE’s performance in the 10-shot case is higher than that of our method, our method outperforms FSOD-VE on small objects. Meanwhile, compared with FSOD-VE, the training of our method is much easier, and we do not use the viewpoint information. These results further demonstrate that exploiting universal-prototype enhancement is helpful for improving detectors’ generalization.

4.2. Ablation Analysis

In this section, we conduct an ablation analysis of our method on Novel Set 1 of PASCAL VOC.

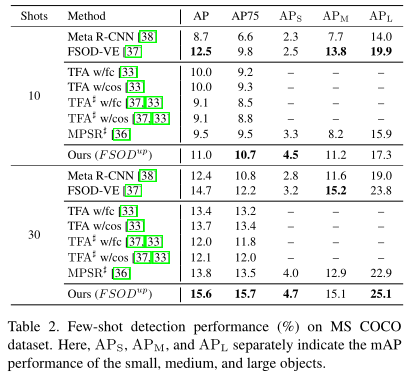

Conditional prototypes. To sufficiently represent object-level information, we apply an affine transformation to the universal prototypes C (see Eq. (1)) to obtain the conditional prototypes A (see Eq. (3)). Next, we conduct an ablation analysis of the conditional prototypes.
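The affine step can be illustrated with a minimal sketch. Eq. (3) is not reproduced in this section, so the element-wise scale-and-shift form below, together with the names `conditional_prototypes`, `scale`, and `shift`, are assumptions made for illustration and not the authors' implementation.

```python
def conditional_prototypes(universal, scale, shift):
    """Apply an element-wise affine map a = scale * c + shift to every prototype.

    universal: list of prototypes, each a list of floats (one value per channel).
    scale, shift: per-channel coefficients (hypothetical; in practice they would
    be predicted from the image features rather than fixed by hand).
    """
    return [
        [s * c + b for c, s, b in zip(proto, scale, shift)]
        for proto in universal
    ]


# Two toy universal prototypes with two channels each.
C = [[1.0, 2.0], [0.5, -1.0]]
A = conditional_prototypes(C, scale=[2.0, 0.5], shift=[0.1, 0.0])
```

The ‘No Condition’ setting in the ablation would correspond to skipping this step and using C directly.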

Table 3 shows the comparison results. Using the conditional operation improves detection performance significantly. In particular, for the 2-shot case, our method outperforms ‘No Condition’ and ‘New Prototype’ by 4.0% and 3.2%, respectively. This shows that the conditional prototypes, built on the universal prototypes, represent object-level information effectively, which improves detection performance.

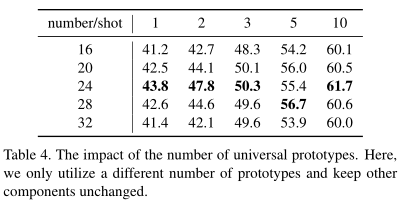

The number of universal prototypes. For our method, the number of universal prototypes (see Eq. (1)) is an important hyper-parameter. If the number is too small, the prototypes cannot sufficiently represent invariant object characteristics. Conversely, a large number of prototypes increases the parameter count and the computational cost.

Table 4 shows the performance obtained with different numbers of prototypes. Using 24 prototypes gives the best performance; with more or fewer than 24, the performance degrades significantly. This shows that the number of prototypes affects FSOD performance. In general, for a large-scale dataset with many categories, employing more prototypes could capture object-level characteristics sufficiently, which helps improve detectors’ generalization to novel object categories.

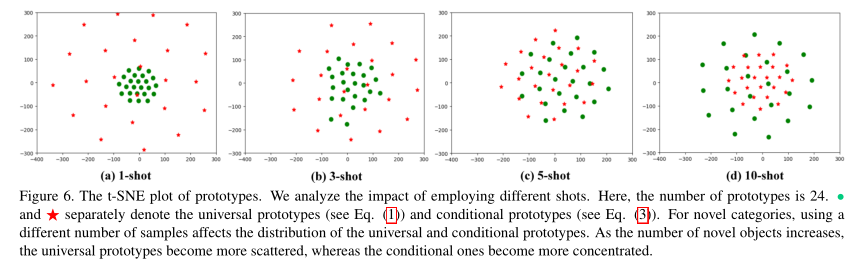

Visualization analysis of prototype distribution. In Fig. 6, we analyze the distribution of prototypes for different numbers of shots. Concretely, as the number of novel objects increases, the universal prototypes (see Eq. (1)) become more scattered so as to capture more image-level information and improve detection performance. After the RPN, the conditional prototypes are calculated to represent object-level information, and the features computed from the conditional prototypes are used for classification. Thus, as the number of novel objects increases, the distribution of the conditional prototypes becomes more concentrated on specific categories, which could improve detection accuracy. These analyses further show that universal prototypes are capable of enhancing feature representations, which is beneficial for FSOD.

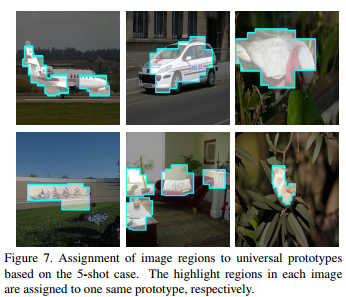

Visualization of assignment maps. In Fig. 7, we visualize the assignment maps of the universal prototypes, i.e., the soft-assignment weights in Eq. (1). For each image, we can see that different object regions are assigned to the same universal prototype. In particular, for the second image of the second row, the object regions of ‘sofa’ and ‘table’ are all assigned to the same prototype. This indicates that the universal prototypes are not specific to certain object categories. Moreover, the universal prototypes help characterize the region information of different objects and could be effectively adapted to novel categories via fine-tuning.
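How such an assignment map can be produced is sketched below. Eq. (1) is not reproduced in this section, so the softmax over dot-product similarities is an assumption, and `soft_assign` and `assignment_map` are hypothetical helper names rather than functions from the authors' code.

```python
import math


def soft_assign(feature, prototypes):
    """Return softmax weights of one feature vector over all prototypes.

    The dot-product similarity is an assumed choice for illustration.
    """
    scores = [sum(f * p for f, p in zip(feature, proto)) for proto in prototypes]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def assignment_map(features, prototypes):
    """For each spatial location, return the index of its highest-weight prototype."""
    indices = []
    for feat in features:
        weights = soft_assign(feat, prototypes)
        indices.append(max(range(len(weights)), key=weights.__getitem__))
    return indices


prototypes = [[1.0, 0.0], [0.0, 1.0]]
# Three toy locations; the first two stand in for regions of different objects.
features = [[2.0, 0.1], [1.5, 0.3], [0.0, 1.0]]
regions = assignment_map(features, prototypes)
```

In this toy input the first two locations land on the same prototype even though they are distinct vectors, which mirrors the observation that regions of different objects can share one universal prototype.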

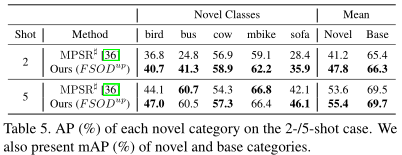

The performance of base categories. Table 5 shows the performance on each novel and base category. Our method outperforms MPSR [36] on both novel and base categories. In particular, for the ‘bus’ and ‘sofa’ categories in the 2-shot case, our method outperforms MPSR by 16.5% and 7.5%, respectively. This indicates that our method could improve the generalization performance of the detector.

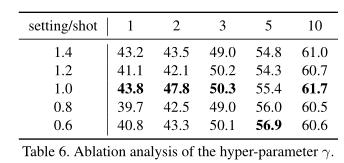

Analysis of hyper-parameter γ. For the joint training loss L (see Eq. (8)), we use a hyper-parameter γ to balance the consistency loss Lcon. Table 6 shows the results. Different settings of γ affect the performance of FSOD. For our method, the performance is best when γ is set to 1.0.
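The role of γ can be sketched as a weighted sum of a detection loss and the consistency loss. Eq. (8) is not reproduced in this section, so both the additive form and the use of a KL divergence between class distributions as the consistency term are assumptions; `kl_divergence` and `joint_loss` are hypothetical names.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists of probabilities."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


def joint_loss(det_loss, p_original, p_enhanced, gamma=1.0):
    """Detection loss plus gamma times an assumed KL-based consistency term."""
    return det_loss + gamma * kl_divergence(p_enhanced, p_original)


p_orig = [0.7, 0.2, 0.1]      # toy class probabilities from the original features
p_enh = [0.6, 0.3, 0.1]       # toy class probabilities from the enhanced features
loss_default = joint_loss(0.8, p_orig, p_enh, gamma=1.0)
loss_no_con = joint_loss(0.8, p_orig, p_enh, gamma=0.0)
```

Setting `gamma=0.0` removes the consistency term entirely, while larger values push the enhanced and original predictions to agree more strongly.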

Analysis of the output descriptors. In Eq. (2) and Eq. (5), the output descriptors are fused with the features to form the input of the RPN and the classifier, respectively. Next, we analyze the impact of the descriptors. Concretely, for Eq. (2), we take only F as the input of the RPN and keep the other components unchanged. For the 1-shot and 5-shot cases, fusing the descriptors improves the performance by 2.7% and 1.8%, respectively. For Eq. (5), we take only Ψc(P) as the input of the classifier and keep the other components unchanged. For the 1-shot and 5-shot cases, fusing the descriptors improves the performance by 2.1% and 1.2%, respectively. This shows that fusing descriptors into the current features helps improve the representation ability of the features.
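The two ablation settings can be pictured as a switch on how the input is built. Eq. (2) and Eq. (5) are not reproduced in this section, so channel-wise concatenation as the fusion operation is an assumption, and `build_input` with its `use_descriptors` flag is a hypothetical helper.

```python
def build_input(features, descriptors, use_descriptors=True):
    """Build the per-location input for the RPN or classifier.

    With use_descriptors=False only the original features are passed on,
    which corresponds to the ablated setting. Otherwise each feature vector
    is concatenated with its descriptor vector (assumed fusion operation).
    """
    if not use_descriptors:
        return [list(f) for f in features]
    if len(features) != len(descriptors):
        raise ValueError("features and descriptors must cover the same locations")
    return [list(f) + list(d) for f, d in zip(features, descriptors)]


F = [[0.2, 0.7], [0.9, 0.1]]   # toy features at two locations
D = [[1.0], [0.0]]             # toy descriptors at the same locations
fused = build_input(F, D)
ablated = build_input(F, D, use_descriptors=False)
```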

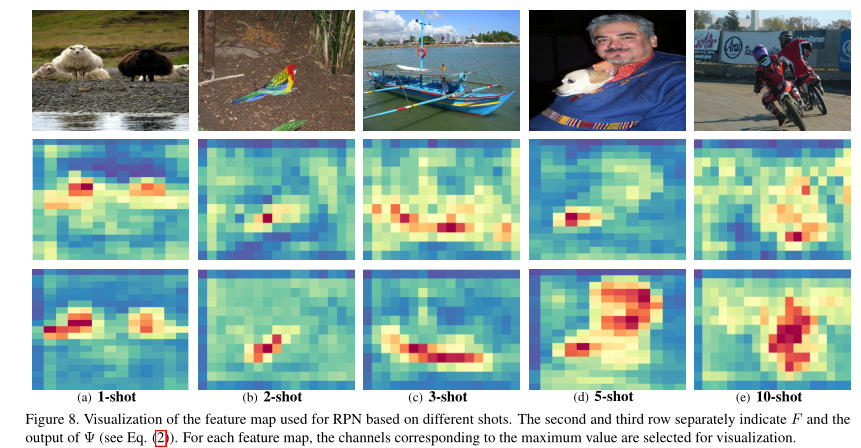

In Fig. 8, we show visualization results of F and the output of Ψ (see Eq. (2)) for different numbers of shots. Here, we separately take F and the output of Ψ as the input of the RPN. For both the base and novel categories, the output of Ψ contains more object-related information than F. Taking the 5-shot result as an example, the output of our method (the fourth image of the third row) contains more information about the ‘Person’ category. This further indicates that fusing descriptors helps enhance object-level information.

5. Conclusion

To solve FSOD, we propose to learn universal prototypes from all object categories, and we develop an approach to few-shot object detection with universal prototypes (FSODup). Concretely, after obtaining the universal and conditional prototypes, the enhanced object features are computed based on the conditional prototypes. Next, through a consistency loss, FSODup enhances invariance and generalization. Experimental results on two datasets show the effectiveness of the proposed method.