Homework 1: Linear Regression

Task Description:

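- The data for this assignment are observations downloaded from the Air Quality Monitoring Network of the Environmental Protection Administration, Executive Yuan.

- The goal is to implement linear regression in this assignment and use it to predict PM2.5 values.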

Data Description:

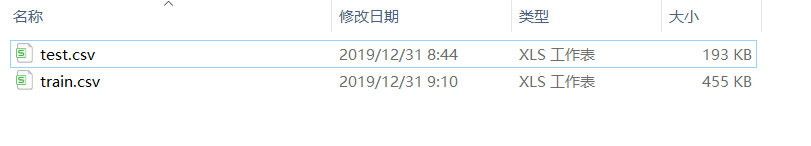

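- This assignment uses the observation records of the Fengyuan station, split into a train set and a test set. The train set is all data from the first 20 days of each month at the Fengyuan station. The test set is sampled from the station's remaining data.

train.csv: the complete data for the first 20 days of each month.

test.csv: sampled from the remaining data in units of 10 consecutive hours; all observations from the first nine hours serve as the features, and the PM2.5 of the tenth hour is the answer.

A total of 240 distinct test samples were drawn; predict the PM2.5 of these 240 samples from their features.

- The data contain 18 kinds of observations: AMB_TEMP, CH4, CO, NMHC, NO, NO2, NOx, O3, PM10, PM2.5, RAINFALL, RH, SO2, THC, WD_HR, WIND_DIREC, WIND_SPEED, WS_HR.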
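The 9-hours-in, 1-hour-out windowing described above can be sketched on synthetic data. This is only an illustration, not the course's code: the helper name `make_windows` and the random array are assumptions, and row 9 is treated as PM2.5 because it is the tenth item in the list above.

```python
import numpy as np

def make_windows(series, n_hours=9, target_row=9):
    """Cut an (n_features, n_total_hours) array into (features, target) pairs.

    Each sample uses n_hours consecutive hours of every feature as input and
    the value of target_row in the following hour as the answer.
    """
    n_features, n_total = series.shape
    n_samples = n_total - n_hours
    x = np.empty((n_samples, n_features * n_hours))
    y = np.empty((n_samples, 1))
    for start in range(n_samples):
        x[start] = series[:, start:start + n_hours].reshape(-1)
        y[start, 0] = series[target_row, start + n_hours]
    return x, y

# Synthetic stand-in for one month: 18 features, 20 days * 24 hours.
rng = np.random.default_rng(0)
month = rng.random((18, 480))
x, y = make_windows(month)
print(x.shape, y.shape)  # (471, 162) (471, 1)
```

With 480 hours and a 9-hour input window, each month yields 480 - 9 = 471 samples, which is where the 471 in the reference code comes from.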

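### Scraping the correct answers from the website for reference will also be treated as cheating, so please take note!

Additional reference: Homework 1: Predicting PM2.5 with Linear Regression

Reference code first: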

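#import sys

'''
The data for this assignment are real observations downloaded from the Central Weather Bureau website; you must predict PM2.5 values using linear regression or another method. The records are split into a train set and a test set: the former is all data from the first 20 days of each month, and the latter is randomly sampled from the remaining data. train.csv: the complete data for the first 20 days of each month. test.csv: 240 samples drawn from the remaining 10 days of data. Each sample has 9 consecutive hours of observations, from which students must predict the PM2.5 of the tenth hour.
'''

import pandas as pd
import numpy as np
import math
import csv

data = pd.read_csv('./train.csv', encoding = 'big5')  # load train.csv; 20 days * 18 features * 12 months = 4320 rows
data = data.iloc[:, 3:]  # keep only the numeric part (drop the first three columns); to inspect it: data.to_csv('data_df.csv')
data[data == 'NR'] = 0  # replace every 'NR' entry (found in the RAINFALL rows) with 0
raw_data = data.to_numpy()  # raw_data.shape -> (4320, 24), now a plain array

# Extract Features (1): 12 months * 20 days = 240 days
month_data = {}  # dict with 12 keys (0..11); month_data[0].shape -> (18, 480), since 20 * 24 = 480
for month in range(12):
    sample = np.empty([18, 480])  # sample.shape = (18, 480); raw_data.shape = (4320, 24)
    for day in range(20):
        sample[:, day * 24 : (day + 1) * 24] = raw_data[18 * (20 * month + day) : 18 * (20 * month + day + 1), :]
    month_data[month] = sample
# month_data = {0: array([...]), 1: array([...]), ..., 11: array([...])}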

'''
Before:
one day of data: 18 * 24
one month of data: (18 * 20) * 24
After extraction:
one month of data: 18 * (20 * 24), stored in the dict, so month_data[0].shape = (18, 480)
'''

# Extract Features (2)
x = np.empty([12 * 471, 18 * 9], dtype = float)  # each sample is flattened into one row; each month yields 471 samples
y = np.empty([12 * 471, 1], dtype = float)
for month in range(12):
    for day in range(20):
        for hour in range(24):
            if day == 19 and hour > 14:  # 23 - 9 = 14: later windows would run past the end of the month
                continue  # skip the rest of this iteration
            x[month * 471 + day * 24 + hour, :] = month_data[month][:, day * 24 + hour : day * 24 + hour + 9].reshape(1, -1)  # vector dim: 18 * 9
            y[month * 471 + day * 24 + hour, 0] = month_data[month][9, day * 24 + hour + 9]  # target: PM2.5 (row 9) of the tenth hour
# month_data[0].shape = (18, 480) -> (471, 18 * 9); consecutive windows overlap
# x.shape = (12 * 471, 18 * 9)

# y.shape = (12 * 471, 1)

# Normalize (1): standardize, i.e. x = (x - mean(x)) / std(x)
mean_x = np.mean(x, axis = 0)  # axis=0 takes the mean of each column; shape = (18 * 9,)
std_x = np.std(x, axis = 0)  # standard deviation of each column; shape = (18 * 9,)
# np.std defaults to ddof=0, so it divides by N (population standard deviation), not by N - 1.
# Variance: the mean of the squared deviations of the samples from their mean.
# Standard deviation: the square root of the variance.
for i in range(len(x)):  # 12 * 471 = 5652 rows
    for j in range(len(x[0])):  # 18 * 9 columns; visit every entry
        if std_x[j] != 0:
            x[i][j] = (x[i][j] - mean_x[j]) / std_x[j]
# This is the most common form of standardization (z-score standardization).
# Standardized values fluctuate around 0: above 0 means above average, below 0 means below average.

# Split Training Data Into "train_set" and "validation_set"

# Split the training data again: the first 80% is the train set and the last 20% is the validation set. math.floor rounds down.
# len(x) = 5652, len(x) * 0.8 = 4521.6, math.floor(len(x) * 0.8) = 4521
x_train_set = x[: math.floor(len(x) * 0.8), :]  # x_train_set.shape = (4521, 18 * 9)
x_validation = x[math.floor(len(x) * 0.8): , :]  # x_validation.shape = (1131, 18 * 9)
y_train_set = y[: math.floor(len(y) * 0.8), :]  # y_train_set.shape = (4521, 1)
y_validation = y[math.floor(len(y) * 0.8): , :]  # y_validation.shape = (1131, 1)

dim = 18 * 9 + 1  # +1 for the bias term (b)
w = np.zeros([dim, 1])  # weights and bias; w.shape = (18 * 9 + 1, 1)
x = np.concatenate((np.ones([12 * 471, 1]), x), axis = 1).astype(float)
# Prepend a column of ones to x as the coefficient of the bias (b); x.shape = (471 * 12, 18 * 9 + 1)
learning_rate = 100  # learning rate
iter_time = 1000  # number of training iterations
adagrad = np.zeros([dim, 1])  # accumulated squared gradients, same shape as w: (18 * 9 + 1, 1)
eps = 0.0000000001  # 1e-10, prevents division by zero
# Note: the loop below trains on all of x, not only on x_train_set.
for t in range(iter_time):
    # y.shape = (12 * 471, 1), x.shape = (471 * 12, 18 * 9 + 1)
    loss = np.sqrt(np.sum(np.power(np.dot(x, w) - y, 2))/471/12)  # RMSE
    if(t%100==0):
        print(str(t) + ":" + str(loss))
    if(t>(iter_time-10)):
        print(str(t) + ":" + str(loss))
    gradient = 2 * np.dot(x.transpose(), np.dot(x, w) - y)  # dim * 1; gradient of the summed squared error with respect to w
    adagrad += gradient ** 2
    w = w - learning_rate * gradient / np.sqrt(adagrad + eps)  # Adagrad update; w.shape = (18 * 9 + 1, 1)
np.save('weight.npy', w)  # save the weights

"""

#******************************************************************
# x_train_set (4521, 18*9), y_train_set (4521, 1); x_validation (1131, 18*9), y_validation (1131, 1)
# w.shape = (18*9+1, 1)
x_train_set = np.concatenate((np.ones([4521, 1]), x_train_set), axis = 1).astype(float)
predict_y = np.dot(x_train_set, w)
cnt = 0
for i in range(len(predict_y)):
    # count a prediction as correct when it is within 5 of the true value
    if abs(math.floor(predict_y[i]) - math.floor(y_train_set[i])) < 5:
        cnt += 1
    #print(y_train_set[i])
print("accuracy =", str(cnt * 100 / len(predict_y)), "%")
#******************************************************************

"""

# Testing: predict PM2.5
test_data = pd.read_csv('./test.csv', header = None, encoding = 'big5')
test_data = test_data.iloc[:, 2:]
test_data[test_data == 'NR'] = 0
test_data = test_data.to_numpy()  # test_data.shape = (18 * 240, 9): 240 samples of 18 rows, 9 hours each
test_x = np.empty([240, 18*9], dtype = float)  # test_x.shape = (240, 18 * 9)
for i in range(240):  # flatten each sample (one id) from 18 x 9 into a single row of 18 * 9
    test_x[i, :] = test_data[18 * i: 18* (i + 1), :].reshape(1, -1)
# test_x.shape = (240, 162)
for i in range(len(test_x)):  # len(test_x) = 240
    for j in range(len(test_x[0])):  # len(test_x[0]) = 18 * 9 = 162
        if std_x[j] != 0:
            test_x[i][j] = (test_x[i][j] - mean_x[j]) / std_x[j]  # standardize with the training mean and std
test_x = np.concatenate((np.ones([240, 1]), test_x), axis = 1).astype(float)
# Prepend a column of ones to test_x as the coefficient of the bias (b); test_x.shape = (240, 18 * 9 + 1)

# Predict the PM2.5 of the tenth hour
w = np.load('weight.npy')  # w.shape = (18 * 9 + 1, 1)
ans_y = np.dot(test_x, w)  # test_x.shape = (240, 163)

# Save Prediction to CSV File
with open('submit.csv', mode='w', newline='') as submit_file:
    csv_writer = csv.writer(submit_file)
    header = ['id', 'value']  # the submission format requires the first row to be id,value
    print(header)
    csv_writer.writerow(header)
    for i in range(240):
        row = ['id_' + str(i), ans_y[i][0]]
        csv_writer.writerow(row)
        print(row)

Kaggle & Submission Format:

- Link: https://www.kaggle.com/c/ml2020spring-hw1

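- Predict the PM2.5 values of the 240 testing data samples and upload the predictions to Kaggle.

Upload format: csv file

The first row must be id,value

From the second row on, each row holds an id and the predicted PM2.5 value, separated by a comma.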

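Assignment Regulation

hw1.sh

Implement linear regression by hand; the only method allowed is gradient descent.

Using numpy.linalg.lstsq is prohibited.

hw1_best.sh

Any approach is allowed, but the package rules announced at the start of the semester still apply.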

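Submission Format

- The hw1-<account> repository on GitHub must contain at least the following 3 kinds of files:

report.pdf

hw1.sh and the related training and testing code

hw1_best.sh and the related training and testing code

Do not upload train.csv or test.csv!

- Your repo may also contain other files:

e.g., model.npy

- hw1.sh and hw1_best.sh will only run testing. Run the training yourself, save the relevant model parameters, and upload them to GitHub.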

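Grading Rules and Script Format

- The test data will be shuffled, so do not simply output answers saved in advance.

- When grading the code, the TAs will run the following commands:

bash hw1.sh [input file] [output file]

bash hw1_best.sh [input file] [output file]

[input file] is the path to the test.csv provided by the TAs

[output file] is the output file path provided by the TAs

E.g., if the TAs run bash hw1.sh ./data/test.csv ./result/ans.csv, a file named ans.csv should be produced in the result folder.

- hw1.sh and hw1_best.sh must finish within 3 minutes; otherwise that part receives 0 points.

- Never hard-code the path of test.csv or of the output file in your program; otherwise that part receives 0 points.

- Models used by the scripts, such as npy or pickle files, may have hard-coded paths; the TAs will cd into the hw1 folder to run the reproduce procedure.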
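One way to follow the rule against hard-coded paths is to read both paths from the command line. The sketch below is an assumption about how a testing script might be organized, not the TAs' reference: `parse_paths`, `write_predictions`, and the script name `test.py` are hypothetical, and a temporary folder stands in for the TA-provided output location.

```python
import csv
import os
import sys
import tempfile

def parse_paths(argv):
    """Return (input_path, output_path) taken from a command-line argument list."""
    if len(argv) != 3:
        raise SystemExit("usage: python test.py [input file] [output file]")
    return argv[1], argv[2]

def write_predictions(output_path, predictions):
    """Write predictions in the id,value format required for submission."""
    with open(output_path, mode="w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["id", "value"])
        for i, value in enumerate(predictions):
            writer.writerow(["id_" + str(i), value])

# In a real script this would be parse_paths(sys.argv); here a demo argument
# list is used so the sketch runs on its own.
demo_argv = ["test.py", "./data/test.csv",
             os.path.join(tempfile.mkdtemp(), "ans.csv")]
input_path, output_path = parse_paths(demo_argv)

# Placeholder predictions; loading input_path and applying the saved model
# would go here.
write_predictions(output_path, [5.17, 18.31])
with open(output_path, newline="") as f:
    rows = list(csv.reader(f))
print(rows)
```

Under these assumptions, hw1.sh would only need to forward its two arguments to the script, for example `python test.py $1 $2`.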

Reproducing Result

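- When reproducing, hw1.sh only needs to beat the simple baseline.

- hw1_best.sh must reproduce the score selected on Kaggle.

- Make sure the results produced by the code you upload match your results on Kaggle. A difference within 0.1 is generally considered a match; beyond that, the Kaggle part will not be graded.

Goal of this assignment: predict the PM2.5 of the 10th hour from the 18 features (including PM2.5) of the preceding 9 hours.

If you have any questions, feel free to email the TAs at ntu-ml-2020spring-ta@googlegroups.com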

1. Load 'train.csv'

train.csv contains data for 12 months, with 20 days taken from each month and 24 hours per day (each hour has 18 features).

import sys

import pandas as pd

import numpy as np

from google.colab import drive

!gdown --id '1wNKAxQ29G15kgpBy_asjTcZRRgmsCZRm' --output data.zip

!unzip data.zip

# data = pd.read_csv('gdrive/My Drive/hw1-regression/train.csv', header = None, encoding = 'big5')

data = pd.read_csv('./train.csv', encoding = 'big5')

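2. Preprocessing

Take the needed numeric part and fill every 'NR' entry in the RAINFALL rows with 0. Also, if you re-run this code in colab, start from the beginning (re-run everything above) to avoid unintended results. This does not happen when you run your own script, but re-running this cell in colab keeps slicing further into the data: the first run keeps the original data from the fourth column on, the second run drops another three columns from the result of the first run, and so on.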

data = data.iloc[:, 3:]

data[data == 'NR'] = 0

raw_data = data.to_numpy()

month_data = {}

for month in range(12):
    sample = np.empty([18, 480])
    for day in range(20):
        sample[:, day * 24 : (day + 1) * 24] = raw_data[18 * (20 * month + day) : 18 * (20 * month + day + 1), :]
    month_data[month] = sample

x = np.empty([12 * 471, 18 * 9], dtype = float)

y = np.empty([12 * 471, 1], dtype = float)

for month in range(12):
    for day in range(20):
        for hour in range(24):
            if day == 19 and hour > 14:
                continue
            x[month * 471 + day * 24 + hour, :] = month_data[month][:,day * 24 + hour : day * 24 + hour + 9].reshape(1, -1) #vector dim: 18*9
            y[month * 471 + day * 24 + hour, 0] = month_data[month][9, day * 24 + hour + 9] #value

print(x)

print(y)

Output:

[[14.  14.  14.  ...  2.   2.   0.5]
 [14.  14.  13.  ...  2.   0.5  0.3]
 [14.  13.  12.  ...  0.5  0.3  0.8]
 ...
 [17.  18.  19.  ...  1.1  1.4  1.3]
 [18.  19.  18.  ...  1.4  1.3  1.6]
 [19.  18.  17.  ...  1.3  1.6  1.8]]

[[30.]
 [41.]
 [44.]
 ...
 [17.]
 [24.]
 [29.]]

Normalize (1)

mean_x = np.mean(x, axis = 0) #18 * 9

std_x = np.std(x, axis = 0) #18 * 9

for i in range(len(x)): #12 * 471
    for j in range(len(x[0])): #18 * 9
        if std_x[j] != 0:
            x[i][j] = (x[i][j] - mean_x[j]) / std_x[j]

x

Output:

array([[-1.35825331, -1.35883937, -1.359222  , ...,  0.26650729,  0.2656797 , -1.14082131],
       [-1.35825331, -1.35883937, -1.51819928, ...,  0.26650729, -1.13963133, -1.32832904],
       [-1.35825331, -1.51789368, -1.67717656, ..., -1.13923451, -1.32700613, -0.85955971],
       ...,
       [-0.88092053, -0.72262212, -0.56433559, ..., -0.57693779, -0.29644471, -0.39079039],
       [-0.7218096 , -0.56356781, -0.72331287, ..., -0.29578943, -0.39013211, -0.1095288 ],
       [-0.56269867, -0.72262212, -0.88229015, ..., -0.38950555, -0.10906991,  0.07797893]])

Split Training Data Into "train_set" and "validation_set"

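This part is a simple demonstration for questions 2 and 3 of the report: it produces the train_set used for training in the comparison and the validation_set, which is never used for training and serves only for validation.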

import math

x_train_set = x[: math.floor(len(x) * 0.8), :]

y_train_set = y[: math.floor(len(y) * 0.8), :]

x_validation = x[math.floor(len(x) * 0.8): , :]

y_validation = y[math.floor(len(y) * 0.8): , :]

print(x_train_set)

print(y_train_set)

print(x_validation)

print(y_validation)

print(len(x_train_set))

print(len(y_train_set))

print(len(x_validation))

print(len(y_validation))

Output:

[[-1.35825331 -1.35883937 -1.359222   ...  0.26650729  0.2656797  -1.14082131]
 [-1.35825331 -1.35883937 -1.51819928 ...  0.26650729 -1.13963133 -1.32832904]
 [-1.35825331 -1.51789368 -1.67717656 ... -1.13923451 -1.32700613 -0.85955971]
 ...
 [ 0.86929969  0.70886668  0.38952809 ...  1.39110073  0.2656797  -0.39079039]
 [ 0.71018876  0.39075806  0.07157353 ...  0.26650729 -0.39013211 -0.39079039]
 [ 0.3919669   0.07264944  0.07157353 ... -0.38950555 -0.39013211 -0.85955971]]

[[30.]
 [41.]
 [44.]
 ...
 [ 7.]
 [ 5.]
 [14.]]

[[ 0.07374504  0.07264944  0.07157353 ... -0.38950555 -0.85856912 -0.57829812]
 [ 0.07374504  0.07264944  0.23055081 ... -0.85808615 -0.57750692  0.54674825]
 [ 0.07374504  0.23170375  0.23055081 ... -0.57693779  0.54674191 -0.1095288 ]
 ...
 [-0.88092053 -0.72262212 -0.56433559 ... -0.57693779 -0.29644471 -0.39079039]
 [-0.7218096  -0.56356781 -0.72331287 ... -0.29578943 -0.39013211 -0.1095288 ]
 [-0.56269867 -0.72262212 -0.88229015 ... -0.38950555 -0.10906991  0.07797893]]

[[13.]
 [24.]
 [22.]
 ...
 [17.]
 [24.]
 [29.]]

4521

4521

1131

1131
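The split above is positional: the validation set is simply the last 20% of the rows. As an alternative that is not part of the original notebook, rows can be shuffled before splitting. The sketch below uses a hypothetical helper `shuffled_split` on synthetic arrays with the same number of rows. Note that because consecutive windows overlap, a shuffled split places near-duplicate neighbours in both sets, so its validation error may look optimistic.

```python
import math
import numpy as np

def shuffled_split(x, y, train_ratio=0.8, seed=0):
    """Split rows of x and y into train and validation parts after shuffling."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(x))
    n_train = math.floor(len(x) * train_ratio)
    train_idx, val_idx = order[:n_train], order[n_train:]
    return x[train_idx], y[train_idx], x[val_idx], y[val_idx]

# Synthetic stand-in with the same number of rows as the real x and y.
x_demo = np.arange(5652 * 2, dtype=float).reshape(5652, 2)
y_demo = np.arange(5652, dtype=float).reshape(5652, 1)
x_tr, y_tr, x_val, y_val = shuffled_split(x_demo, y_demo)
print(len(x_tr), len(x_val))  # 4521 1131
```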

dim = 18 * 9 + 1

w = np.zeros([dim, 1])

x = np.concatenate((np.ones([12 * 471, 1]), x), axis = 1).astype(float)

learning_rate = 100

iter_time = 1000

adagrad = np.zeros([dim, 1])

eps = 0.0000000001

for t in range(iter_time):
    loss = np.sqrt(np.sum(np.power(np.dot(x, w) - y, 2))/471/12) #rmse
    if(t%100==0):
        print(str(t) + ":" + str(loss))
    gradient = 2 * np.dot(x.transpose(), np.dot(x, w) - y) #dim*1
    adagrad += gradient ** 2
    w = w - learning_rate * gradient / np.sqrt(adagrad + eps)

np.save('weight.npy', w)

w

Output:

0:27.071214829194115

100:33.78905859777454

200:19.91375129819709

300:13.53106819368969

400:10.64546615844617

500:9.277353455475065

600:8.5180420459565

700:8.014061987588422

800:7.63675682477569

900:7.3365637403711235

array([[ 2.13740269e+01],[ 3.58888909e+00],[ 4.56386323e+00],[ 2.16307023e+00],[-6.58545223e+00],[-3.38885580e+01],[ 3.22235518e+01],[ 3.49340354e+00],[-4.60308671e+00],[-1.02374754e+00],[-3.96791501e-01],[-1.06908800e-01],[ 2.22488184e-01],[ 8.99634117e-02],[ 1.31243105e-01],[ 2.15894989e-02],[-1.52867263e-01],[ 4.54087776e-02],[ 5.20999235e-01],[ 1.60824213e-01],[-3.17709451e-02],[ 1.28529025e-02],[-1.76839437e-01],[ 1.71241371e-01],[-1.31190032e-01],[-3.51614451e-02],[ 1.00826192e-01],[ 3.45018257e-01],[ 4.00130315e-02],[ 2.54331382e-02],[-5.04425219e-01],[ 3.71483018e-01],[ 8.46357671e-01],[-8.11920428e-01],[-8.00217575e-02],[ 1.52737711e-01],[ 2.64915130e-01],[-5.19860416e-02],[-2.51988315e-01],[ 3.85246517e-01],[ 1.65431451e-01],[-7.83633314e-02],[-2.89457231e-01],[ 1.77615023e-01],[ 3.22506948e-01],[-4.59955256e-01],[-3.48635358e-02],[-5.81764363e-01],[-6.43394528e-02],[-6.32876949e-01],[ 6.36624507e-02],[ 8.31592506e-02],[-4.45157961e-01],[-2.34526366e-01],[ 9.86608594e-01],[ 2.65230652e-01],[ 3.51938093e-02],[ 3.07464334e-01],[-1.04311239e-01],[-6.49166901e-02],[ 2.11224757e-01],[-2.43159815e-01],[-1.31285604e-01],[ 1.09045810e+00],[-3.97913710e-02],[ 9.19563678e-01],[-9.44824150e-01],[-5.04137735e-01],[ 6.81272939e-01],[-1.34494828e+00],[-2.68009542e-01],[ 4.36204342e-02],[ 1.89619513e+00],[-3.41873873e-01],[ 1.89162461e-01],[ 1.73251268e-02],[ 3.14431930e-01],[-3.40828467e-01],[ 4.92385651e-01],[ 9.29634214e-02],[-4.50983589e-01],[ 1.47456584e+00],[-3.03417236e-02],[ 7.71229328e-02],[ 6.38314494e-01],[-7.93287087e-01],[ 8.82877506e-01],[ 3.18965610e+00],[-5.75671706e+00],[ 1.60748945e+00],[ 1.36142440e+01],[ 1.50029111e-01],[-4.78389603e-02],[-6.29463755e-02],[-2.85383032e-02],[-3.01562821e-01],[ 4.12058013e-01],[-6.77534154e-02],[-1.00985479e-01],[-1.68972973e-01],[ 1.64093233e+00],[ 1.89670371e+00],[ 3.94713816e-01],[-4.71231449e+00],[-7.42760774e+00],[ 6.19781936e+00],[ 3.53986244e+00],[-9.56245861e-01],[-1.04372792e+00],[-4.92863713e-01],[ 
6.31608790e-01],[-4.85175956e-01],[ 2.58400216e-01],[ 9.43846795e-02],[-1.29323184e-01],[-3.81235287e-01],[ 3.86819479e-01],[ 4.04211627e-01],[ 3.75568914e-01],[ 1.83512261e-01],[-8.01417708e-02],[-3.10188597e-01],[-3.96124612e-01],[ 3.66227853e-01],[ 1.79488593e-01],[-3.14477051e-01],[-2.37611443e-01],[ 3.97076104e-02],[ 1.38775912e-01],[-3.84015069e-02],[-5.47557119e-02],[ 4.19975207e-01],[ 4.46120687e-01],[-4.31074826e-01],[-8.74450768e-02],[-5.69534264e-02],[-7.23980157e-02],[-1.39880128e-02],[ 1.40489658e-01],[-2.44952334e-01],[ 1.83646770e-01],[-1.64135512e-01],[-7.41216452e-02],[-9.71414213e-02],[ 1.98829041e-02],[-4.46965919e-01],[-2.63440959e-01],[ 1.52924043e-01],[ 6.52532847e-02],[ 7.06818266e-01],[ 9.73757051e-02],[-3.35687787e-01],[-2.26559165e-01],[-3.00117086e-01],[ 1.24185231e-01],[ 4.18872344e-01],[-2.51891946e-01],[-1.29095731e-01],[-5.57512471e-01],[ 8.76239582e-02],[ 3.02594902e-01],[-4.23463160e-01],[ 4.89922051e-01]])
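The training loop above can be wrapped in a function so that weights are fit on one set and scored on another. The following is a minimal sketch on synthetic data, not the notebook's own code: `adagrad_fit` and `rmse` are hypothetical names, and the update mirrors the loop above (gradient of the summed squared error, accumulated squared gradients, eps inside the square root).

```python
import numpy as np

def rmse(x, y, w):
    """Root mean squared error of the linear model x @ w against y."""
    return float(np.sqrt(np.mean((x @ w - y) ** 2)))

def adagrad_fit(x, y, learning_rate=100.0, iter_time=1000, eps=1e-10):
    """Fit linear-regression weights with Adagrad; x must include a bias column."""
    w = np.zeros((x.shape[1], 1))
    adagrad = np.zeros((x.shape[1], 1))
    for _ in range(iter_time):
        gradient = 2 * x.T @ (x @ w - y)   # gradient of the summed squared error
        adagrad += gradient ** 2           # running sum of squared gradients
        w = w - learning_rate * gradient / np.sqrt(adagrad + eps)
    return w

# Synthetic problem: y = 3 + 2 * feature + small noise.
rng = np.random.default_rng(0)
feature = rng.standard_normal((200, 1))
x = np.concatenate((np.ones((200, 1)), feature), axis=1)
y = 3 + 2 * feature + 0.01 * rng.standard_normal((200, 1))

# Fit on the first 160 rows only, then score both parts.
x_train, y_train = x[:160], y[:160]
x_val, y_val = x[160:], y[160:]
w = adagrad_fit(x_train, y_train)
print(rmse(x_train, y_train, w), rmse(x_val, y_val, w))
```

Scoring the held-out rows separately is what makes the validation error meaningful; the loop above, by contrast, reports the loss on the same rows it trains on.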

Load the test data and process it with preprocessing and feature extraction similar to those used for the training data, so that the test data form 240 samples of dimension 18 * 9 + 1.

# testdata = pd.read_csv('gdrive/My Drive/hw1-regression/test.csv', header = None, encoding = 'big5')

testdata = pd.read_csv('./test.csv', header = None, encoding = 'big5')

test_data = testdata.iloc[:, 2:]

test_data[test_data == 'NR'] = 0

test_data = test_data.to_numpy()

test_x = np.empty([240, 18*9], dtype = float)

for i in range(240):
    test_x[i, :] = test_data[18 * i: 18* (i + 1), :].reshape(1, -1)

for i in range(len(test_x)):
    for j in range(len(test_x[0])):
        if std_x[j] != 0:
            test_x[i][j] = (test_x[i][j] - mean_x[j]) / std_x[j]

test_x = np.concatenate((np.ones([240, 1]), test_x), axis = 1).astype(float)

test_x

Output:

/usr/local/lib/python3.6/dist-packages/ipykernel_launcher.py:4: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

after removing the cwd from sys.path.

/usr/local/lib/python3.6/dist-packages/pandas/core/frame.py:3093: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame

See the caveats in the documentation: https://pandas.pydata.org/pandas-docs/stable/user_guide/indexing.html#returning-a-view-versus-a-copy

self._where(-key, value, inplace=True)

The warning appears because test_data is a slice of testdata when the 'NR' entries are overwritten; taking the slice with .copy() is the usual way to avoid it.

array([[ 1.        , -0.24447681, -0.24545919, ..., -0.67065391, -1.04594393,  0.07797893],
       [ 1.        , -1.35825331, -1.51789368, ...,  0.17279117, -0.10906991, -0.48454426],
       [ 1.        ,  1.5057434 ,  1.34508393, ..., -1.32666675, -1.04594393, -0.57829812],
       ...,
       [ 1.        ,  0.3919669 ,  0.54981237, ...,  0.26650729, -0.20275731,  1.20302531],
       [ 1.        , -1.8355861 , -1.8360023 , ..., -1.04551839, -1.13963133, -1.14082131],
       [ 1.        , -1.35825331, -1.35883937, ...,  2.98427476,  3.26367657,  1.76554849]])
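The element-wise loops used for normalization can also be written with NumPy broadcasting. This is a sketch under the assumption that columns with zero standard deviation should be left unchanged, as in the loops above. `standardize` is a hypothetical helper, and the tiny arrays are made up for illustration. The statistics come from the training data and are reused for the test data.

```python
import numpy as np

def standardize(data, mean, std):
    """Return (data - mean) / std per column, leaving zero-std columns untouched."""
    safe = std != 0
    out = data.astype(float)  # astype returns a new array, so data is not modified
    out[:, safe] = (out[:, safe] - mean[safe]) / std[safe]
    return out

train = np.array([[1.0, 5.0, 10.0],
                  [3.0, 5.0, 30.0]])
mean_x = train.mean(axis=0)   # [ 2.  5. 20.]
std_x = train.std(axis=0)     # [ 1.  0. 10.]

test = np.array([[2.0, 7.0, 40.0]])
train_norm = standardize(train, mean_x, std_x)
test_norm = standardize(test, mean_x, std_x)
print(train_norm)
print(test_norm)  # [[0. 7. 2.]]
```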

Prediction

w = np.load('weight.npy')

ans_y = np.dot(test_x, w)

ans_y

Output (abbreviated; all 240 values are printed in full in the CSV rows below):

array([[ 5.17496040e+00],
       [ 1.83062143e+01],
       [ 2.04912181e+01],
       ...,
       [ 4.03462492e+01],
       [ 1.43137440e+01],
       [ 1.57707266e+01]])

Save Prediction to CSV File

import csv

with open('submit.csv', mode='w', newline='') as submit_file:
    csv_writer = csv.writer(submit_file)
    header = ['id', 'value']
    print(header)
    csv_writer.writerow(header)
    for i in range(240):
        row = ['id_' + str(i), ans_y[i][0]]
        csv_writer.writerow(row)
        print(row)

Output:

['id', 'value']

['id_0', 5.174960398984736]

['id_1', 18.306214253527905]

['id_2', 20.491218094180542]

['id_3', 11.523942869805357]

['id_4', 26.61605675230614]

['id_5', 20.531348081761216]

['id_6', 21.906551018797387]

['id_7', 31.736468747068837]

['id_8', 13.391674055111729]

['id_9', 64.45646650291954]

['id_10', 20.26456883615944]

['id_11', 15.358576077361231]

['id_12', 68.58947276926726]

['id_13', 48.42811374745719]

['id_14', 18.702333824193214]

['id_15', 10.188595737466702]

['id_16', 30.74036285982045]

['id_17', 71.13221776355105]

['id_18', -4.130517391262438]

['id_19', 18.23569401642869]

['id_20', 38.578922275007756]

['id_21', 71.31151972531332]

['id_22', 7.410348162634086]

['id_23', 18.717955330321395]

['id_24', 14.937250260084578]

['id_25', 36.719736694705304]

['id_26', 17.961697005662725]

['id_27', 75.78946287210537]

['id_28', 12.30931024861448]

['id_29', 56.29535173964959]

['id_30', 25.113160865661477]

['id_31', 4.610248674094039]

['id_32', 2.4837705545150315]

['id_33', 24.75942226132128]

['id_34', 30.48028046559117]

['id_35', 38.46393074642665]

['id_36', 44.20231060933004]

['id_37', 30.086836019866002]

['id_38', 40.47367501574007]

['id_39', 29.226479902317358]

['id_40', 5.60645605434392]

['id_41', 38.666016078789596]

['id_42', 34.61021343187719]

['id_43', 48.389697507384824]

['id_44', 14.757247666944176]

['id_45', 34.466820110872085]

['id_46', 27.483106874184358]

['id_47', 12.000879378154046]

['id_48', 21.37803615160377]

['id_49', 28.544403091663288]

['id_50', 20.165513818411583]

['id_51', 10.796678149746493]

['id_52', 22.171035755750125]

['id_53', 53.446263109352266]

['id_54', 12.219581121610016]

['id_55', 43.300968455171535]

['id_56', 32.18233510328541]

['id_57', 22.56721751457079]

['id_58', 56.73951416554704]

['id_59', 20.745052945295473]

['id_60', 15.028854557473279]

['id_61', 39.85530159038512]

['id_62', 12.97534068072828]

['id_63', 51.74165959283005]

['id_64', 18.783369632539863]

['id_65', 12.348752842777719]

['id_66', 15.633623653541935]

['id_67', -0.0588714706849891]

['id_68', 41.508011073075956]

['id_69', 31.548747530656026]

['id_70', 18.604251157547083]

['id_71', 37.476819724880684]

['id_72', 56.52039065762304]

['id_73', 6.587877193521949]

['id_74', 12.229339737435016]

['id_75', 5.203696404134638]

['id_76', 47.92737510380061]

['id_77', 13.02070568559467]

['id_78', 17.110301693903622]

['id_79', 20.603234531002034]

['id_80', 21.28448156078461]

['id_81', 38.692935290511805]

['id_82', 30.020716675725843]

['id_83', 88.76740666723546]

['id_84', 35.984700239668264]

['id_85', 26.756913553477187]

['id_86', 23.96351684356439]

['id_87', 32.74724282808308]

['id_88', 22.189043755319933]

['id_89', 20.99215885362656]

['id_90', 29.55599431664545]

['id_91', 40.992168866517794]

['id_92', 8.625117809911565]

['id_93', 32.32147180887792]

['id_94', 46.59804436536756]

['id_95', 22.884070826723526]

['id_96', 31.51812972825165]

['id_97', 11.198233479766103]

['id_98', 28.527436642529608]

['id_99', 0.2911506800896479]

['id_100', 17.966961079539697]

['id_101', 27.124163929470143]

['id_102', 11.398232780652847]

['id_103', 16.426426865673527]

['id_104', 23.42526104692217]

['id_105', 40.6160826705684]

['id_106', 25.86412502656041]

['id_107', 5.42273695167238]

['id_108', 10.794921122256103]

['id_109', 72.86213692992125]

['id_110', 48.02283705948137]

['id_111', 15.746808276903014]

['id_112', 24.67041061417796]

['id_113', 12.827793326536707]

['id_114', 10.158057570240526]

['id_115', 27.269223342020997]

['id_116', 29.20873857793244]

['id_117', 8.835339619930757]

['id_118', 20.051088137129774]

['id_119', 20.21233374376426]

['id_120', 79.90600929870557]

['id_121', 18.061614288263605]

['id_122', 30.542809341304334]

['id_123', 25.980792377728033]

['id_124', 5.212577268164774]

['id_125', 30.355697305856214]

['id_126', 7.768322888914648]

['id_127', 15.328268255393299]

['id_128', 22.666365717697964]

['id_129', 62.74205421109007]

['id_130', 18.95078036798799]

['id_131', 19.07635563083854]

['id_132', 61.37157409163711]

['id_133', 15.884562052629718]

['id_134', 13.409418077705553]

['id_135', 0.848772483611288]

['id_136', 7.834996717304147]

['id_137', 57.01282901179679]

['id_138', 25.60799675181382]

['id_139', 4.961704729242088]

['id_140', 36.41487903906277]

['id_141', 28.79000672197594]

['id_142', 49.19412096197634]

['id_143', 40.30686985573449]

['id_144', 13.316180593982674]

['id_145', 27.661011875229164]

['id_146', 17.158027524366755]

['id_147', 49.68726256929681]

['id_148', 23.030272291604753]

['id_149', 39.24093652484279]

['id_150', 13.196753889412534]

['id_151', 5.948893701039419]

['id_152', 25.821608976304237]

['id_153', 8.258634214291622]

['id_154', 19.146320517225583]

['id_155', 43.18248652651673]

['id_156', 6.717843578093042]

['id_157', 33.86961524681065]

['id_158', 15.369937846981811]

['id_159', 16.939044973551926]

['id_160', 37.885336794634846]

['id_161', 19.202484541054417]

['id_162', 9.059504715654723]

['id_163', 10.283399610648505]

['id_164', 48.672447125698284]

['id_165', 30.587716213230827]

['id_166', 2.477409897532172]

['id_167', 12.811603937805952]

['id_168', 70.32478980976464]

['id_169', 14.840967694067047]

['id_170', 68.8655875667886]

['id_171', 42.74199244486634]

['id_172', 24.000261542920146]

['id_173', 23.420724860321457]

['id_174', 61.672124435682385]

['id_175', 25.494202845059203]

['id_176', 19.00480978686909]

['id_177', 34.88668288189682]

['id_178', 9.402313398379736]

['id_179', 29.520011314408034]

['id_180', 14.573965885700478]

['id_181', 9.125563143203589]

['id_182', 52.812583998131856]

['id_183', 45.039537994389605]

['id_184', 17.452434679183295]

['id_185', 38.493935279714314]

['id_186', 27.03891909264384]

['id_187', 65.58170967424583]

['id_188', 7.037306380769573]

['id_189', 52.7144771341157]

['id_190', 38.20645933704974]

['id_191', 21.169801059557855]

['id_192', 30.247556879488403]

['id_193', 2.714422989716317]

['id_194', 19.932932587640835]

['id_195', -3.413332337603944]

['id_196', 32.44599940281317]

['id_197', 10.582973029979941]

['id_198', 21.775225707258457]

['id_199', 62.4652920656779]

['id_200', 24.13294368731649]

['id_201', 26.20123964740095]

['id_202', 63.74447723440287]

['id_203', 2.8342977741290327]

['id_204', 14.379246986978854]

['id_205', 9.36985073175388]

['id_206', 9.881166613595415]

['id_207', 3.4949453589721426]

['id_208', 122.6080493792178]

['id_209', 21.08351301448057]

['id_210', 17.532220599455112]

['id_211', 20.18309834459699]

['id_212', 36.393132212281834]

['id_213', 34.93515120529069]

['id_214', 18.830312661458645]

['id_215', 38.34455552272332]

['id_216', 77.91663413807038]

['id_217', 1.7953235508882108]

['id_218', 13.445827939135777]

['id_219', 36.131155590412135]

['id_220', 15.150403498166293]

['id_221', 12.94184833441792]

['id_222', 113.1252409378639]

['id_223', 15.224604677934396]

['id_224', 14.824025968612084]

['id_225', 59.26735368854044]

['id_226', 10.583695290718488]

['id_227', 20.993062563532188]

['id_228', 9.789365880830388]

['id_229', 4.7711800087059775]

['id_230', 47.9278069048129]

['id_231', 12.39943839475103]

['id_232', 48.14647656264416]

['id_233', 40.46638039656415]

['id_234', 16.940590270332937]

['id_235', 41.266544489418735]

['id_236', 69.027892033729]

['id_237', 40.34624924412242]

['id_238', 14.313743982871115]

['id_239', 15.770726634219812]

Related references:

Adagrad : https://youtu.be/yKKNr-QKz2Q?list=PLJV_el3uVTsPy9oCRY30oBPNLCo89yu49&t=705

RMSprop : https://www.youtube.com/watch?v=5Yt-obwvMHI

Adam : https://www.youtube.com/watch?v=JXQT_vxqwIs

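The print statements above are mainly there to show what the data and results look like; removing them does no harm. Also, on your own Linux system, you can replace the hard-coded file paths with sys.argv, so that the files and their locations are given on the command line.

Finally, you can beat the baseline by tuning the learning rate, iter_time (the number of iterations), the set of features used (how many hours, which feature columns), or even by using a different model.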
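Using fewer hours or fewer feature columns amounts to selecting columns of the flattened 18 * 9 vector. Because each sample is an (18, 9) block flattened row by row, column f * 9 + h holds feature f at hour h. The helper `select_columns` below is a hypothetical sketch of that index arithmetic, and the choice of PM10 and PM2.5 over the last 5 hours is only an example, not a recommended feature set.

```python
import numpy as np

def select_columns(features, hours, n_hours=9):
    """Indices into a flattened (n_features, n_hours) sample for the chosen picks."""
    return np.array([f * n_hours + h for f in features for h in hours])

# Example: keep PM10 (row 8) and PM2.5 (row 9) for the last 5 of the 9 hours.
cols = select_columns(features=[8, 9], hours=range(4, 9))
print(cols)  # [76 77 78 79 80 85 86 87 88 89]

# Check against an unflattened sample whose entries equal their flat index.
sample = np.arange(18 * 9).reshape(18, 9)
flat = sample.reshape(1, -1)
reduced = flat[:, cols]
print(reduced.shape)  # (1, 10)
```

If a bias column of ones has already been prepended to x, every index shifts by one, so the selection is easier to apply before that step.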

For the report question template, see: https://docs.google.com/document/d/1s84RXs2AEgZr54WCK9IgZrfTF-6B1td-AlKR9oqYa4g/edit