22 layers

The main idea of the Inception architecture is based on finding out how an optimal local sparse structure in a convolutional vision network can be approximated and covered by readily available dense components..

inception结构的idea基于: 在convolutional vision network中局部稀疏的结构如何近似和cover readily available dense components.

-----------------------------------------------------------

uniformly 增加网络的深度(deep)和广度(width) cause major potential drawbacks:

1. a large number of parameters, prone to overfitting

2. dramatically increase use of computational resources

--------------------------------Inception,-----------------------------

1x1的卷积有什么作用

-多通道信息交互融合(eg.NIN) feature map的线性组合

-降维和升维(googlenet), 减少参数数量

-在不改变feature map尺寸(无信息损失)下增加非线性(deeper),增加网络的表达能力 (NIN)

-------------------------------------------

Loss,

loss = 0.3*loss1+ 0.3*loss2+loss3

--------------------------------------------------------------------

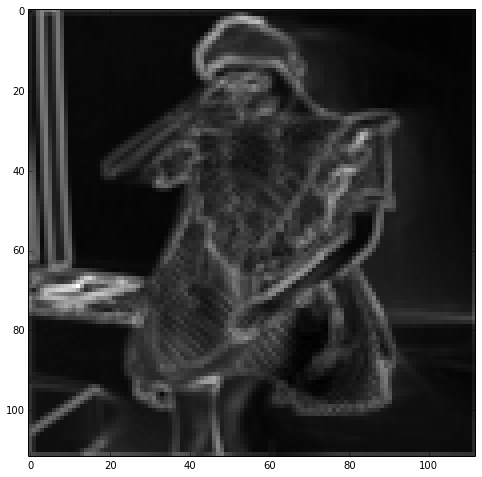

c = net.blobs['conv2/3x3'].data

print c.shape

sumxx = np.zeros((c.shape[2],c.shape[3]))

for m in c[0]: std = np.std(m) ave = np.average(m) if std ==0 : sumxx += (m-ave)else: sumxx += (m-ave)/std

#print std

sumxx = (sumxx - np.min(sumxx))/ (np.max(sumxx) - np.min(sumxx))

plt.imshow(sumxx)原图:

conv1其中的一个feature map 归一化

vonv1所有feature map 处理后相加后的图片

# (x-average)/std -> 归一化(x'-min)/(max-min) -> 所有feature map对应位置相加 -> 归一化

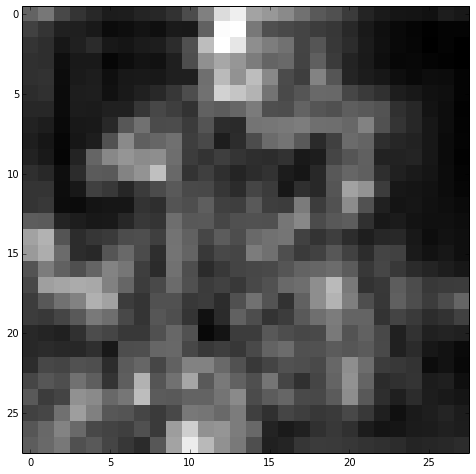

relu1 所有feature map的和

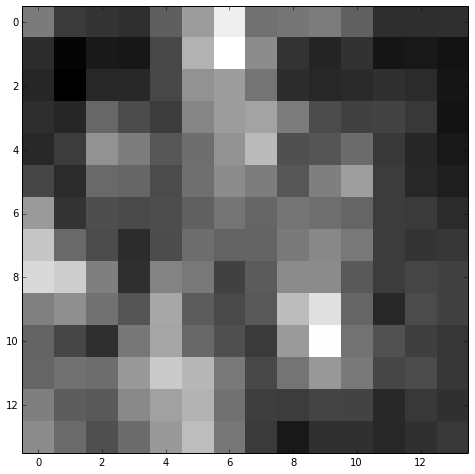

max pooling1

norm1

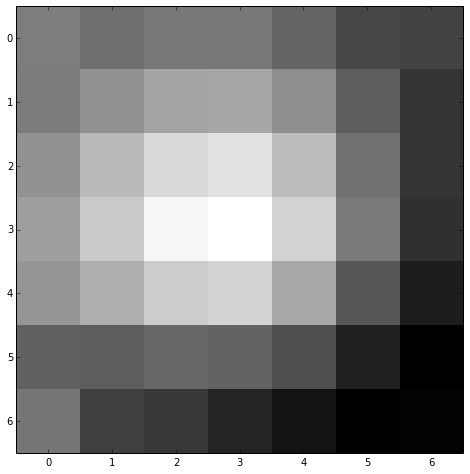

inception_3a/output

inception_4a/output

inception_5b/output