1. BLEU定义

双语替换评测(英语:bilingual evaluation understudy,缩写:BLEU)是用于评估自然语言的字句用机器翻译出来的品质的一种算法。

通过将各个译文片段(通常是句子)与一组翻译品质好的参考译文进行比较,计算出各个片段的分数。 接着这些分数平均于整个语料库,估算翻译的整体品质。此算法不考虑字句的可理解性或语法的正确性。

双语替换评测的输出分数始终为0到1之间的数字。该输出值意味着候选译文与参考译文之间的相似程度,越接近1的值表示文本相似度越高。

2. 示例

双语替换评测:

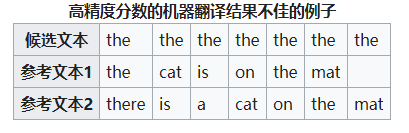

对于候选译文中的每个候选单词,在任何参考译文中,算法改采用其最大总数 m m a x {\displaystyle ~m_{max}} mmax? 。 上述例子中,单词“the”在参考文本1中出现两次,在参考文本2中出现一次,因此 m m a x = 2 {\displaystyle ~m_{max}=2} mmax?=2 。候选文本的单词总数为7,因此,BLEU分数为 2 7 \frac{2}{7} 72? .

但是在实际中,只使用单个单词进行比较缺乏对单词的考虑,计算的结果是有偏差的,因此,BLEU使用了ngram的方法。

对于候选集the the cat,如果只关注单个单词,那个计算的分数是1;如果是Bigram,计算结果是0.5,更加反映实际的翻译结果。

3. 计算公式

n-gram的精度计算:

BLEU计算细节:

主要对n-gram计算的精度还进行了加权。

python实现:

import collections

import mathdef _get_ngrams(segment, max_order):"""Extracts all n-grams upto a given maximum order from an input segment.Args:segment: text segment from which n-grams will be extracted.max_order: maximum length in tokens of the n-grams returned by thismethods.Returns:The Counter containing all n-grams upto max_order in segmentwith a count of how many times each n-gram occurred."""ngram_counts = collections.Counter()for order in range(1, max_order + 1):for i in range(0, len(segment) - order + 1):ngram = tuple(segment[i:i+order])ngram_counts[ngram] += 1return ngram_countsdef compute_bleu(reference_corpus, translation_corpus, max_order=4,smooth=False):"""Computes BLEU score of translated segments against one or more references.Args:reference_corpus: list of lists of references for each translation. Eachreference should be tokenized into a list of tokens.translation_corpus: list of translations to score. Each translationshould be tokenized into a list of tokens.max_order: Maximum n-gram order to use when computing BLEU score.smooth: Whether or not to apply Lin et al. 2004 smoothing.Returns:3-Tuple with the BLEU score, n-gram precisions, geometric mean of n-gramprecisions and brevity penalty."""matches_by_order = [0] * max_orderpossible_matches_by_order = [0] * max_orderreference_length = 0translation_length = 0for (references, translation) in zip(reference_corpus,translation_corpus):reference_length += min(len(r) for r in references)translation_length += len(translation)merged_ref_ngram_counts = collections.Counter()for reference in references:merged_ref_ngram_counts |= _get_ngrams(reference, max_order)translation_ngram_counts = _get_ngrams(translation, max_order)overlap = translation_ngram_counts & merged_ref_ngram_countsfor ngram in overlap:matches_by_order[len(ngram)-1] += overlap[ngram]for order in range(1, max_order+1):possible_matches = len(translation) - order + 1if possible_matches > 0:possible_matches_by_order[order-1] += possible_matchesprecisions = [0] * max_orderfor i in range(0, max_order):if smooth:precisions[i] = ((matches_by_order[i] + 1.) /(possible_matches_by_order[i] + 1.))else:if possible_matches_by_order[i] > 0:precisions[i] = (float(matches_by_order[i]) /possible_matches_by_order[i])else:precisions[i] = 0.0if min(precisions) > 0:p_log_sum = sum((1. / max_order) * math.log(p) for p in precisions)geo_mean = math.exp(p_log_sum)else:geo_mean = 0ratio = float(translation_length) / reference_lengthif ratio > 1.0:bp = 1.else:bp = math.exp(1 - 1. / ratio)bleu = geo_mean * bpreturn (bleu, precisions, bp, ratio, translation_length, reference_length)

参考:

- wiki;

- en wiki;

- BLEU: a Method for Automatic Evaluation of Machine Translation;