0. Hadoop package download

http://mirror.bit.edu.cn/apache/hadoop/common

1. Cluster environment

Master xxx.xxx.xxx.xxx

Slave1 xxx.xxx.xxx.xxx

Slave2 xxx.xxx.xxx.xxx

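Append the three lines above to /etc/hosts on Master, Slave1 and Slave2 (the configuration below refers to the nodes as master, slave1 and slave2):

vim /etc/hosts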
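The entries can also be appended by a small script instead of by hand. This is a minimal sketch: the IP addresses are hypothetical placeholders standing in for the xxx.xxx.xxx.xxx values above, and a hostname that already appears in the file is skipped, so running it twice does no harm.

```shell
# Sketch: append the cluster's host entries to a hosts file, skipping names already present.
# The IPs below are HYPOTHETICAL placeholders; replace them with your real addresses.
add_cluster_hosts() {
  hosts_file="${1:-/etc/hosts}"   # pass another path (e.g. a temp file) to try it safely
  while read -r ip name; do
    # Append only if no existing line maps this hostname (matched as a whole word)
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.1.10 master
192.168.1.11 slave1
192.168.1.12 slave2
EOF
}
```

Called with no argument it edits /etc/hosts, so run `add_cluster_hosts` as root on each of the three nodes.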

2. Download the installation package

#Master

# The configuration below expects Hadoop under /usr/local/src

cd /usr/local/src

wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

tar zxvf hadoop-2.6.5.tar.gz

2.1 Disable the firewall and SELinux

#Master, Slave1, Slave2

# Flush all firewall rules

iptables -F

# Save the (now empty) firewall configuration

service iptables save


# Stop the firewall now (until the next boot)

service iptables stop

# Keep the firewall from starting at boot

chkconfig iptables off

# Switch SELinux to permissive mode now (until the next boot)

setenforce 0

# Disable SELinux permanently (takes effect after a reboot) by setting:

vim /etc/selinux/config

SELINUX=disabled
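The same SELinux edit can be made without opening an editor. This sketch assumes GNU sed (for `-i`, as on CentOS) and rewrites only the `SELINUX=` line; the file path is a parameter so it can be tried on a copy first. `disable_selinux_config` is a hypothetical helper name.

```shell
# Sketch: set SELINUX=disabled in an SELinux config file (GNU sed assumed for -i).
disable_selinux_config() {
  cfg="${1:-/etc/selinux/config}"
  # Anchored on "SELINUX=" so the SELINUXTYPE= line is left untouched
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Called with no argument it edits /etc/selinux/config and must run as root. Like the `vim` edit, it only applies from the next boot, which is why `setenforce 0` is still needed for the running system.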

3. Edit the Hadoop configuration files

#Master

cd /usr/local/src/hadoop-2.6.5/etc/hadoop

vim hadoop-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_152

vim yarn-env.sh

export JAVA_HOME=/usr/local/src/jdk1.8.0_152

vim slaves

slave1

slave2

vim core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/tmp/</value>

</property>

</configuration>

vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/dfs/name/</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/src/hadoop-2.6.5/dfs/data/</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

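</configuration>

# Hadoop 2.6.5 ships mapred-site.xml only as a template

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml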

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

vim yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

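<!-- Disable the NodeManager virtual-memory check -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

# Create the temporary directory and the NameNode/DataNode data directories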

mkdir /usr/local/src/hadoop-2.6.5/tmp

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/name

mkdir -p /usr/local/src/hadoop-2.6.5/dfs/data
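Because the configuration files above address every node by hostname, a name missing from /etc/hosts is an easy mistake. The sketch below reads the `slaves` file and reports each listed host that has no entry in a hosts file. The function name is hypothetical, and it inspects only the hosts file, not DNS; hostnames are used as regex fragments, so plain names such as slave1 are assumed.

```shell
# Sketch: report hosts listed in a Hadoop slaves file that have no line in a hosts file.
check_slaves_in_hosts() {
  slaves_file="$1"
  hosts_file="${2:-/etc/hosts}"
  status=0
  # "|| [ -n ...]" also handles a last line without a trailing newline
  while read -r host || [ -n "$host" ]; do
    case "$host" in ''|'#'*) continue ;; esac   # skip blank and comment lines
    # Require the name as a whole word after some leading column, ignoring commented-out lines
    if ! grep -qE "^[^#]*[[:space:]]${host}([[:space:]]|\$)" "$hosts_file"; then
      echo "no hosts entry for: $host"
      status=1
    fi
  done < "$slaves_file"
  return "$status"
}
```

For example, `check_slaves_in_hosts /usr/local/src/hadoop-2.6.5/etc/hadoop/slaves /etc/hosts` prints nothing and returns 0 when both slaves are listed.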

4. Configure environment variables

#Master, Slave1, Slave2

vim ~/.bashrc

export HADOOP_HOME=/usr/local/src/hadoop-2.6.5

export PATH=$PATH:$HADOOP_HOME/bin

# Reload the environment variables

source ~/.bashrc
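Repeating this step would append the same `export` lines a second time. A minimal sketch of an idempotent alternative, assuming a POSIX shell and grep; `append_once` is a hypothetical helper, not part of Hadoop:

```shell
# Sketch: append a line to a shell rc file only if that exact line is not already there.
append_once() {
  rc_file="$1"
  line="$2"
  # -F: fixed string (the line contains $ and /), -x: match the whole line
  if ! grep -qxF -- "$line" "$rc_file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}
```

For example, `append_once ~/.bashrc 'export HADOOP_HOME=/usr/local/src/hadoop-2.6.5'` and `append_once ~/.bashrc 'export PATH=$PATH:$HADOOP_HOME/bin'`, then `source ~/.bashrc`. The single quotes keep `$PATH` from being expanded before the line is written.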

5. Copy the installation package to the slaves

#Master

scp -r /usr/local/src/hadoop-2.6.5 root@slave1:/usr/local/src/

scp -r /usr/local/src/hadoop-2.6.5 root@slave2:/usr/local/src/

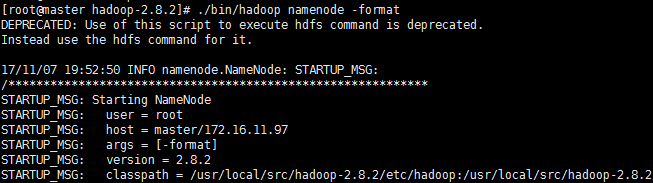
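With more slaves, the copy can be driven by the `slaves` file from step 3 rather than written out per host. This sketch assumes root SSH access to every slave, as the `scp` lines above do; `copy_to_slaves` and the `SCP` override are hypothetical (set `SCP=echo` to print the commands instead of running them).

```shell
# Sketch: copy the Hadoop directory to every host listed in a slaves file.
# SCP can be overridden (e.g. SCP=echo) for a dry run; this hook is an assumption of the sketch.
copy_to_slaves() {
  slaves_file="$1"   # e.g. /usr/local/src/hadoop-2.6.5/etc/hadoop/slaves
  src_dir="$2"       # e.g. /usr/local/src/hadoop-2.6.5
  dest_parent="$3"   # e.g. /usr/local/src
  while read -r host || [ -n "$host" ]; do
    case "$host" in ''|'#'*) continue ;; esac   # skip blank and comment lines
    # Target the parent directory so the copy lands at $dest_parent/<name>, not nested inside it.
    # </dev/null stops the ssh session behind scp from consuming the loop's input.
    ${SCP:-scp} -r "$src_dir" "root@${host}:${dest_parent}/" </dev/null
  done < "$slaves_file"
}
```

For example: `copy_to_slaves /usr/local/src/hadoop-2.6.5/etc/hadoop/slaves /usr/local/src/hadoop-2.6.5 /usr/local/src`.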

6. Start the cluster

#Master

# Format the NameNode (first start only; reformatting erases the HDFS metadata)

hdfs namenode -format

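# Start HDFS and YARN (start-all.sh is deprecated in 2.x but still calls start-dfs.sh and start-yarn.sh)

$HADOOP_HOME/sbin/start-all.sh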

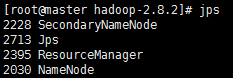

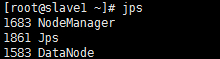

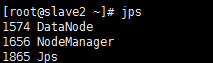

6.1 Check the cluster status (run jps on each node)

jps

#Master

#Slave1

#Slave2
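`jps` prints one line per running JVM: a process ID followed by the main class name. With the configuration above, Master would normally show `NameNode`, `SecondaryNameNode` and `ResourceManager`, and each slave `DataNode` and `NodeManager`. The sketch below is a hypothetical helper, not a Hadoop tool, that checks `jps` output against an expected list and names anything missing.

```shell
# Sketch: read `jps` output on stdin and report expected daemons that are not running.
check_daemons() {
  # The second column of each jps line is the main class name, e.g. "NameNode"
  running=$(awk '{print $2}')
  missing=0
  for daemon in "$@"; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$running" | grep -qx "$daemon"; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

On Master: `jps | check_daemons NameNode SecondaryNameNode ResourceManager`; on a slave: `jps | check_daemons DataNode NodeManager`. Once the history server is running, `JobHistoryServer` can be added to the Master list.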

7. Start the history server

$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver

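8. Web monitoring

http://master:8088 (YARN ResourceManager; open it in a browser other than the system's built-in one)

http://master:19888 (JobHistory server, as set in mapred-site.xml)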

9. Operation commands

# Same as in Hadoop 1.0, for example: hadoop fs -ls /

10. Stop the cluster

$HADOOP_HOME/sbin/stop-all.sh