YOLOv5 源码中,模型是依靠 yaml 文件建立的。而 yaml 文件中涉及到的卷积神经网络单元都是在 models 文件夹中的 common.py 声明的,所以自行设计网络结构之前有必要详解这个文件。这个文件囊括了近年来表现突出的结构单元,就算不学 YOLOv5 也建议 copy 收藏

参数说明

| c1 | c2 | c_ | k | s | p | g | act | shortcut |

| 输入信号 通道 |

卷积产生 通道 |

隐藏层 通道 |

卷积核 尺寸 |

卷积 步长 |

边界 填充 |

卷积 组数 |

激活 函数 |

残差 连接 |

Conv(c1, c2, k=1, s=1, p=None, g=1, act=True)

DWConv(c1, c2, k=1, s=1, act=True)TransformerLayer(c, num_heads)

TransformerBlock(c1, c2, num_heads, num_layers)Bottleneck(c1, c2, shortcut=True, g=1, e=0.5)

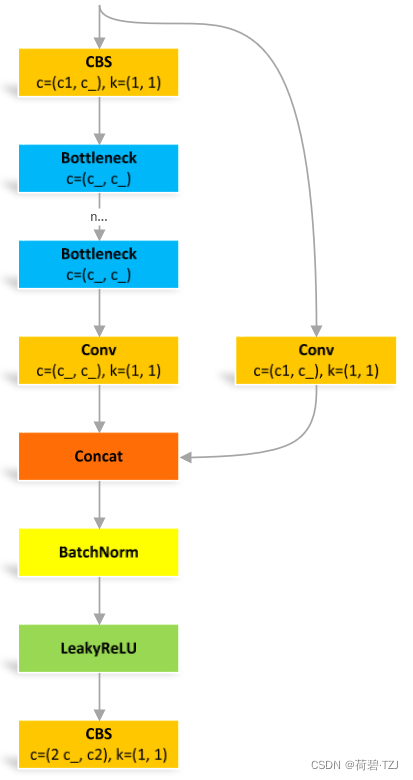

BottleneckCSP(c1, c2, n=1, shortcut=True, g=1, e=0.5)

C3(c1, c2, n=1, shortcut=True, g=1, e=0.5)GhostConv(c1, c2, k=1, s=1, g=1, act=True)

GhostBottleneck(c1, c2, k=3, s=1)SPP(c1, c2, k=(5, 9, 13))

SPPF(c1, c2, k=5)Focus(c1, c2, k=1, s=1, p=None, g=1, act=True)

Contract(gain=2)

Expand(gain=2)Concat(dimension=1)autopad

def autopad(k, p=None): # kernel, padding# Pad to 'same'if p is None:p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-padreturn p- 如果有既定的 p 则直接 return

- 如果无设定的 p,则 return 使图像在卷积操作后尺寸不变的 p:

- 如果 k 是 5,则 p = 5 // 2 = 2

- 如果 k 是 (5, 5),则 p = (5, 5) // 2 = (2, 2)

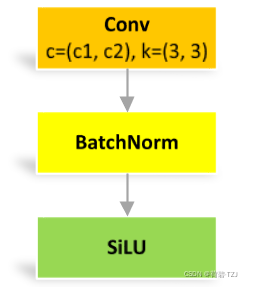

Conv

标准卷积单元,记为 CBS

关于二维多通道卷积的运算方式,可以参考我的这篇文章:Link ~

SiLU 函数定义为:,其函数图像与 ReLU 近似

class Conv(nn.Module):# Standard convolutiondef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)self.bn = nn.BatchNorm2d(c2)self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity())def forward(self, x):return self.act(self.bn(self.conv(x)))def forward_fuse(self, x):return self.act(self.conv(x))DWConv

深度可分离卷积,继承自 Conv

不同点:卷积组数是 c1 和 c2 的最大公约数

DWConv 和 PWConv(1 × 1 卷积)通常交替连接

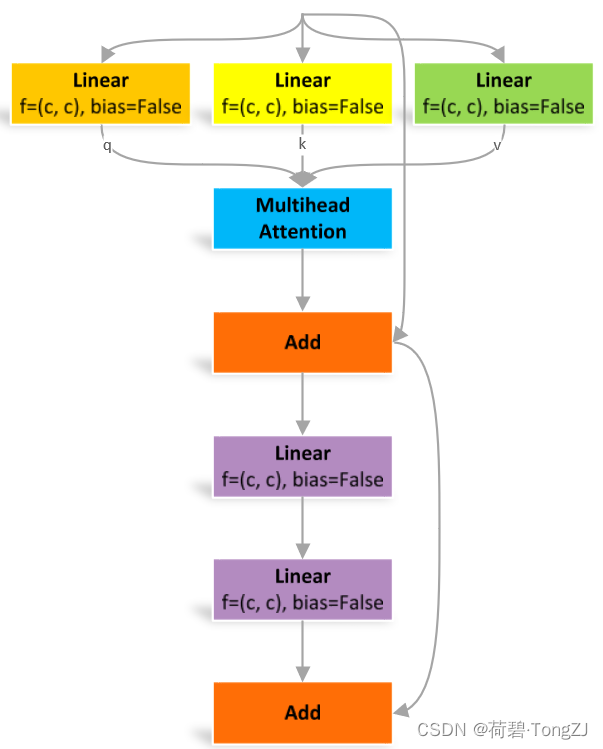

class DWConv(Conv):# Depth-wise convolution classdef __init__(self, c1, c2, k=1, s=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__(c1, c2, k, s, g=math.gcd(c1, c2), act=act)TransformerLayer

单头注意力:

多头注意力:q, k, v 均是长度 c 的向量,单头注意力也是长度 c 的向量,n 个单头注意力拼接后得到长度 nc 的行向量。经过线性层运算后再得到长度 c 的向量

class TransformerLayer(nn.Module):# Transformer layer https://arxiv.org/abs/2010.11929 (LayerNorm layers removed for better performance)def __init__(self, c, num_heads):super().__init__()self.q = nn.Linear(c, c, bias=False)self.k = nn.Linear(c, c, bias=False)self.v = nn.Linear(c, c, bias=False)self.ma = nn.MultiheadAttention(embed_dim=c, num_heads=num_heads)self.fc1 = nn.Linear(c, c, bias=False)self.fc2 = nn.Linear(c, c, bias=False)def forward(self, x):x = self.ma(self.q(x), self.k(x), self.v(x))[0] + xx = self.fc2(self.fc1(x)) + xreturn xTransformerBlock

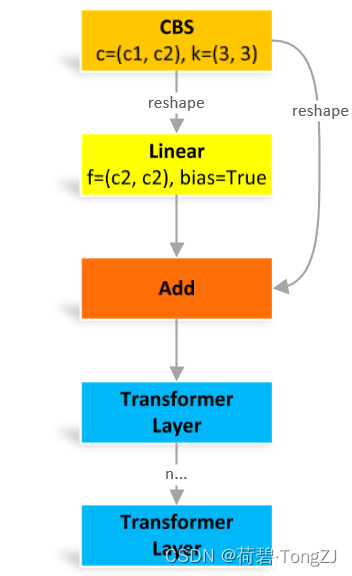

reshape:原图像是 [batch, channel, height, width],变换成 [height × width, batch, channel]

这个结构原本是用于自然语言处理的,但是据说用在图像处理有奇效就引入了。reshape 操作其实就是把图像中各个像素点看作一个单词,其对应通道的信息连在一起就是词向量,用自然语言处理的方法处理之后,再变回原来的图像结构

class TransformerBlock(nn.Module):# Vision Transformer https://arxiv.org/abs/2010.11929def __init__(self, c1, c2, num_heads, num_layers):super().__init__()self.conv = Noneif c1 != c2:self.conv = Conv(c1, c2)self.linear = nn.Linear(c2, c2) # learnable position embeddingself.tr = nn.Sequential(*[TransformerLayer(c2, num_heads) for _ in range(num_layers)])self.c2 = c2def forward(self, x):if self.conv is not None:x = self.conv(x)b, _, w, h = x.shapep = x.flatten(2).unsqueeze(0).transpose(0, 3).squeeze(3)return self.tr(p + self.linear(p)).unsqueeze(3).transpose(0, 3).reshape(b, self.c2, w, h)Bottleneck

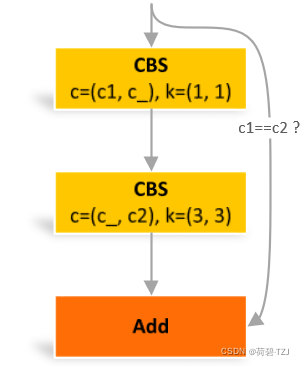

经典的残差卷积单元,先使用 1×1 卷积降维,剔除冗余信息;再使用 3×3 卷积升维

class Bottleneck(nn.Module):# Standard bottleneckdef __init__(self, c1, c2, shortcut=True, g=1, e=0.5): # ch_in, ch_out, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_, c2, 3, 1, g=g)self.add = shortcut and c1 == c2def forward(self, x):return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))BottleneckCSP

class BottleneckCSP(nn.Module):# CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworksdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)self.cv4 = Conv(2 * c_, c2, 1, 1)self.bn = nn.BatchNorm2d(2 * c_) # applied to cat(cv2, cv3)self.act = nn.LeakyReLU(0.1, inplace=True)self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])def forward(self, x):y1 = self.cv3(self.m(self.cv1(x)))y2 = self.cv2(x)return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))C3

与 BottleneckCSP 类似,但少了 1 个 Conv、1 个 BN、1 个 Act,运算量更少

class C3(nn.Module):# CSP Bottleneck with 3 convolutionsdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c1, c_, 1, 1)self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])def forward(self, x):return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))C3TR

继承自 C3,n 个 Bottleneck 更换为 1 个 TransformerBlock

class C3TR(C3):# C3 module with TransformerBlock()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = TransformerBlock(c_, c_, 4, n)C3SPP

继承自 C3,n 个 Bottleneck 更换为 1 个 SPP

class C3SPP(C3):# C3 module with SPP()def __init__(self, c1, c2, k=(5, 9, 13), n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = SPP(c_, c_, k)C3Ghost

继承自 C3,Bottleneck 更换为 GhostBottleneck

class C3Ghost(C3):# C3 module with GhostBottleneck()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e) # hidden channelsself.m = nn.Sequential(*[GhostBottleneck(c_, c_) for _ in range(n)])GhostConv

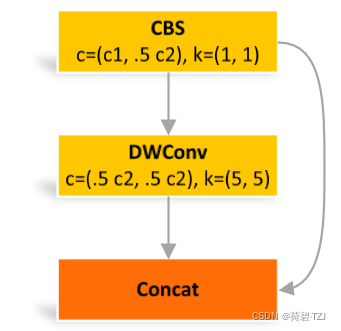

在卷积的过程中,常有一些特征图会出现类似的通道 (即卷积核的权值类似)

这些重复的通道,若使用标准卷积计算会耗费大量的算力

如果先使用标准卷积获得一部分通道,再在该部分通道上使用深度可分离卷积获得另一部分通道,则在减少计算量的同时,也避免了类似通道的出现

class GhostConv(nn.Module):# Ghost Convolution https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=1, s=1, g=1, act=True): # ch_in, ch_out, kernel, stride, groupssuper().__init__()c_ = c2 // 2 # hidden channelsself.cv1 = Conv(c1, c_, k, s, None, g, act)self.cv2 = Conv(c_, c_, 5, 1, None, c_, act)def forward(self, x):y = self.cv1(x)return torch.cat([y, self.cv2(y)], 1)GhostBottleneck

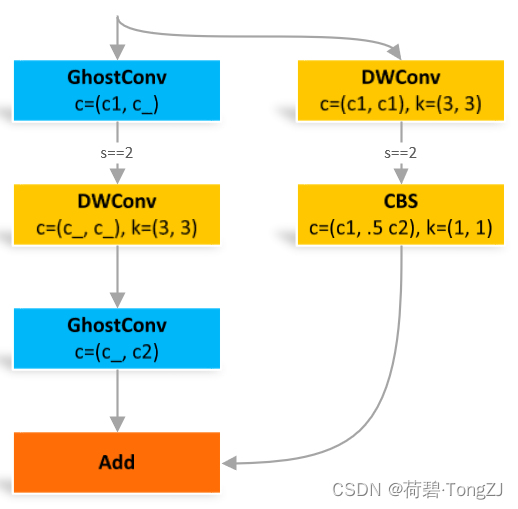

这个结构受步长 s 的影响较大,s = 2 时多了两个卷积

class GhostBottleneck(nn.Module):# Ghost Bottleneck https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=3, s=1): # ch_in, ch_out, kernel, stridesuper().__init__()c_ = c2 // 2self.conv = nn.Sequential(GhostConv(c1, c_, 1, 1), # pwDWConv(c_, c_, k, s, act=False) if s == 2 else nn.Identity(), # dwGhostConv(c_, c2, 1, 1, act=False)) # pw-linearself.shortcut = nn.Sequential(DWConv(c1, c1, k, s, act=False),Conv(c1, c2, 1, 1, act=False)) if s == 2 else nn.Identity()def forward(self, x):return self.conv(x) + self.shortcut(x)SPP

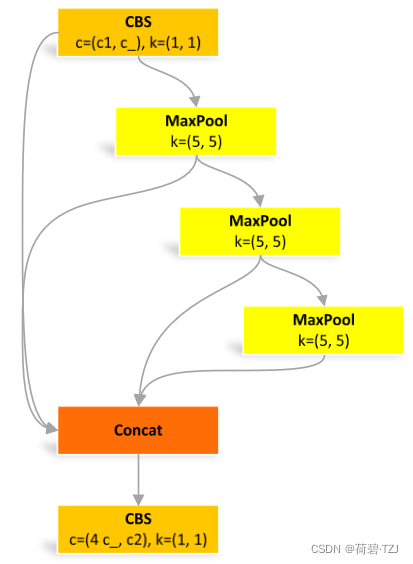

空间金字塔池化,用于扩大骨干网络的感受野,是非常重要的一个单元

class SPP(nn.Module):# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729def __init__(self, c1, c2, k=(5, 9, 13)):super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningreturn self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))SPPF

快速版的空间金字塔池化

池化尺寸等价于:5、9、13,和原来一样

但是运算量从原来的 减少到了

class SPPF(nn.Module):# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocherdef __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * 4, c2, 1, 1)self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningy1 = self.m(x)y2 = self.m(y1)return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))Focus

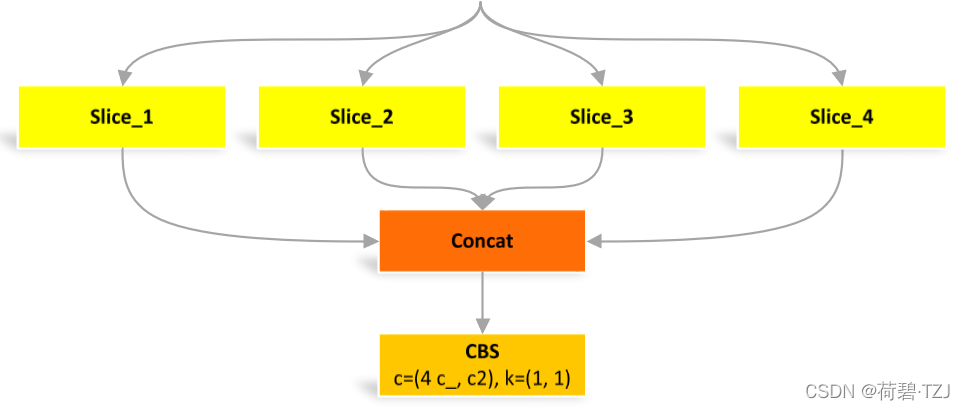

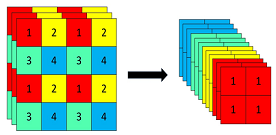

四个 Slice 是由图像在像素位置上分割出来的

class Focus(nn.Module):# Focus wh information into c-spacedef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = Conv(c1 * 4, c2, k, s, p, g, act)# self.contract = Contract(gain=2)def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))# return self.conv(self.contract(x))Contrast

当 gain = 2 的时候,(64, 80, 80) 的图像 -> (256, 40, 40) 的图像。其操作类似 Focus,但更灵活,相比之下少了一个卷积

class Contract(nn.Module):# Contract width-height into channels, i.e. x(1,64,80,80) to x(1,256,40,40)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert (h / s == 0) and (W / s == 0), 'Indivisible gain's = self.gainx = x.view(b, c, h // s, s, w // s, s) # x(1,64,40,2,40,2)x = x.permute(0, 3, 5, 1, 2, 4).contiguous() # x(1,2,2,64,40,40)return x.view(b, c * s * s, h // s, w // s) # x(1,256,40,40)Expand

当 gain = 2 的时候,(1,64,80,80) 的图像 -> (1,16,160,160) 的图像。是 Contrast 的逆操作

class Expand(nn.Module):# Expand channels into width-height, i.e. x(1,64,80,80) to x(1,16,160,160)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert C / s ** 2 == 0, 'Indivisible gain's = self.gainx = x.view(b, s, s, c // s ** 2, h, w) # x(1,2,2,16,80,80)x = x.permute(0, 3, 4, 1, 5, 2).contiguous() # x(1,16,80,2,80,2)return x.view(b, c // s ** 2, h * s, w * s) # x(1,16,160,160)Concat

当 dimension = 1 时,将多张相同尺寸的图像在通道维度上拼接 (通道数可不同)

class Concat(nn.Module):# Concatenate a list of tensors along dimensiondef __init__(self, dimension=1):super().__init__()self.d = dimensiondef forward(self, x):return torch.cat(x, self.d)