Review of the previous post: http://blog.csdn.net/acdreamers/article/details/27365941

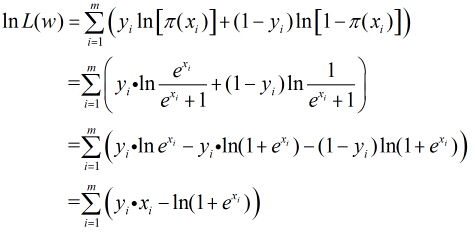

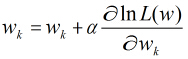

In the previous post on the theory of Logistic regression, we derived the log-likelihood function:

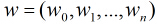

现在我们的目的是求一个向量 ,使得

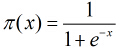

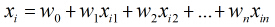

,使得 最大。其中

最大。其中

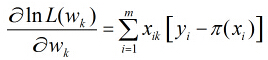

Taking the partial derivatives of this likelihood function gives

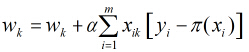
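A quick way to gain confidence in a derivative like this is to compare it with a finite-difference estimate. The sketch below assumes the same form that the training program later in this post uses, namely $L(w)=\sum_i [y_i\,(w\cdot x_i) - \log(1+e^{w\cdot x_i})]$ with gradient component $\sum_i x_{ij}\,(y_i - \sigma(w\cdot x_i))$. The names `Sample`, `dot`, `logLikelihood` and `gradient` are hypothetical helpers introduced only for this illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One training example; x[0] is assumed to be the constant 1 (bias term).
struct Sample {
    std::vector<double> x;
    int y;  // 0 or 1
};

// Inner product w . x
double dot(const std::vector<double>& w, const std::vector<double>& x) {
    assert(w.size() == x.size());
    double s = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) s += w[i] * x[i];
    return s;
}

// L(w) = sum_i [ y_i * (w . x_i) - log(1 + exp(w . x_i)) ]
double logLikelihood(const std::vector<double>& w, const std::vector<Sample>& data) {
    double L = 0.0;
    for (const Sample& s : data) {
        double z = dot(w, s.x);
        L += s.y * z - std::log(1.0 + std::exp(z));
    }
    return L;
}

// dL/dw_j = sum_i x_ij * (y_i - sigmoid(w . x_i))
std::vector<double> gradient(const std::vector<double>& w, const std::vector<Sample>& data) {
    std::vector<double> g(w.size(), 0.0);
    for (const Sample& s : data) {
        double p = 1.0 / (1.0 + std::exp(-dot(w, s.x)));
        for (std::size_t j = 0; j < w.size(); ++j) g[j] += s.x[j] * (s.y - p);
    }
    return g;
}
```

Nudging one component of `w` by a small `h` in both directions and dividing the change in `logLikelihood` by `2 * h` should reproduce the matching entry of `gradient(w, data)` to several decimal places.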

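By the gradient ascent algorithm, with step size $\alpha$, each component of $w$ is updated as

$$w_j := w_j + \alpha \frac{\partial L(w)}{\partial w_j}$$

Substituting the partial derivative further gives

$$w_j := w_j + \alpha \sum_{i=1}^{N} x_{ij}\left(y_i - \frac{e^{w \cdot x_i}}{1 + e^{w \cdot x_i}}\right)$$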

We can initialize the vector $w$ to 0 or to random values, then iterate until the specified precision is reached.
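The "initialize, then iterate until the change is small enough" loop can be sketched on its own as follows. This is a minimal illustration, not the program from this post: `Sample`, `dot`, `logLikelihood` and `fitLogistic` are hypothetical names, the `maxIter` cap is an added safety assumption, and all components of `w` are updated from the same gradient.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Sample {
    std::vector<double> x;  // x[0] is assumed to be the constant 1 (bias term)
    int y;                  // 0 or 1
};

double dot(const std::vector<double>& w, const std::vector<double>& x) {
    double s = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i) s += w[i] * x[i];
    return s;
}

// L(w) = sum_i [ y_i * (w . x_i) - log(1 + exp(w . x_i)) ]
double logLikelihood(const std::vector<double>& w, const std::vector<Sample>& data) {
    double L = 0.0;
    for (const Sample& s : data) {
        double z = dot(w, s.x);
        L += s.y * z - std::log(1.0 + std::exp(z));
    }
    return L;
}

// Repeats w := w + alpha * grad L(w) until L changes by at most delta
// between two consecutive iterations, or until maxIter steps were taken.
std::vector<double> fitLogistic(const std::vector<Sample>& data,
                                double alpha, double delta, int maxIter) {
    assert(!data.empty());
    std::vector<double> w(data[0].x.size(), 0.0);  // start from w = 0
    double oldL = logLikelihood(w, data);
    for (int iter = 0; iter < maxIter; ++iter) {
        // Gradient at the current w: g_j = sum_i x_ij * (y_i - sigmoid(w . x_i))
        std::vector<double> g(w.size(), 0.0);
        for (const Sample& s : data) {
            double p = 1.0 / (1.0 + std::exp(-dot(w, s.x)));
            for (std::size_t j = 0; j < w.size(); ++j) g[j] += s.x[j] * (s.y - p);
        }
        for (std::size_t j = 0; j < w.size(); ++j) w[j] += alpha * g[j];
        double newL = logLikelihood(w, data);
        if (std::fabs(newL - oldL) <= delta) break;  // precision reached
        oldL = newL;
    }
    return w;
}
```

A call such as `fitLogistic(samples, 0.05, 1e-4, 100000)` returns the fitted weights. The step size `alpha` has to be small enough for the data at hand, otherwise the iteration can overshoot instead of settling.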

Now let's implement Logistic regression in C++ step by step, training on the data listed at the end of this post.

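First we need to read the text file. In the training data, each line is one training example made up of 7 numbers: the first is an ID and can be ignored, numbers 2 through 6 are the feature inputs, and the 7th is the output, either 0 or 1. The numbers are separated by single spaces.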
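As an illustration of this record layout, here is a minimal sketch that splits one line into its ID, five features and label using `std::istringstream`. The `Record` struct and the `parseLine` function are hypothetical names used only here; the program later in this post parses lines with `sscanf` instead.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical container for one parsed line.
struct Record {
    int id;
    std::vector<int> features;  // numbers 2 through 6 of the line
    int label;                  // 7th number, 0 or 1
};

// Parses "ID f1 f2 f3 f4 f5 label". Returns false when the line does not
// consist of exactly 7 integers or when the label is not 0 or 1.
bool parseLine(const std::string& line, Record& out) {
    std::istringstream in(line);
    std::vector<int> nums;
    int v;
    while (in >> v) nums.push_back(v);
    if (!in.eof()) return false;          // stopped on a non-numeric token
    if (nums.size() != 7) return false;
    if (nums[6] != 0 && nums[6] != 1) return false;
    out.id = nums[0];
    out.features.assign(nums.begin() + 1, nums.begin() + 6);
    assert(out.features.size() == 5);
    out.label = nums[6];
    return true;
}
```

For the line `10009 1 0 0 1 0 1` this yields the ID 10009, the features 1 0 0 1 0 and the label 1.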

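Let's first look at how to read a text file line by line. In C++, a line of text is read with the stream's getline() member function.

getline() reads one line of text into a character buffer and returns a reference to the stream itself. The stream evaluates to false once a read fails (for example at end of file), which is what ends the loop. Usage: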

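```cpp
#include <cstdio>
#include <fstream>
#include <iostream>
#include <string>
using namespace std;

int main()
{
    string filename = "data.in";
    ifstream file(filename.c_str());
    char s[1024];                       // buffer for one line
    if (file.is_open())
    {
        // getline() returns the stream, which tests false at EOF or on error
        while (file.getline(s, 1024))
        {
            int x, y, z;
            // print only lines that really contain three integers
            if (sscanf(s, "%d %d %d", &x, &y, &z) == 3)
                cout << x << " " << y << " " << z << endl;
        }
    }
    return 0;
}
```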
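If a fixed 1024-byte buffer is a concern, the free function `std::getline` from `<string>` reads into a `std::string` that grows as needed. A minimal sketch follows; `readLines` is a hypothetical helper name, and skipping empty lines and stripping a trailing `\r` are assumptions made for convenience.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Collects every non-empty line from any input stream (an ifstream, cin,
// or a stringstream) without a fixed-size buffer.
std::vector<std::string> readLines(std::istream& in) {
    assert(!in.bad());  // precondition: no unrecoverable I/O error so far
    std::vector<std::string> lines;
    std::string line;
    // std::getline also returns the stream, so the loop ends at EOF
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r') line.pop_back();  // tolerate CRLF files
        if (!line.empty()) lines.push_back(line);
    }
    return lines;
}
```

Passing an opened `std::ifstream` works the same way as passing a `std::istringstream`, since both derive from `std::istream`.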

Once we have each line, we can extract its fields and feed them to the program as input.

Code:

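```cpp
#include <cmath>
#include <cstdio>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>
using namespace std;

struct Data
{
    vector<int> x;   // x[0] = 1 (bias term), x[1..5] = the five features
    int y;           // output, 0 or 1
    Data() : y(0) {}
    Data(vector<int> x, int y)
    {
        this->x = x;
        this->y = y;
    }
};

vector<double> w;
// Renamed from "data": together with "using namespace std", a global with
// that name is ambiguous with std::data under C++17 and later.
vector<Data> trainData;

// Reads one example per line; lines that do not hold 7 integers are skipped.
void loadData(vector<Data>& data, string path)
{
    ifstream file(path.c_str());
    char s[1024];
    if (file.is_open())
    {
        while (file.getline(s, 1024))
        {
            Data tmp;
            int x1, x2, x3, x4, x5, x6, x7;
            if (sscanf(s, "%d %d %d %d %d %d %d", &x1, &x2, &x3, &x4, &x5, &x6, &x7) != 7)
                continue;
            tmp.x.push_back(1);   // bias term; x1 is the ID and is ignored
            tmp.x.push_back(x2);
            tmp.x.push_back(x3);
            tmp.x.push_back(x4);
            tmp.x.push_back(x5);
            tmp.x.push_back(x6);
            tmp.y = x7;
            data.push_back(tmp);
        }
    }
}

// Loads the training set and sets every weight to 0.
// Returns false if no training data could be read.
bool Init()
{
    w.clear();
    trainData.clear();
    loadData(trainData, "traindata.txt");
    if (trainData.empty())
        return false;
    w.assign(trainData[0].x.size(), 0.0);
    return true;
}

// Inner product w . x
double WX(const vector<double>& w, const Data& data)
{
    double ans = 0;
    for (size_t i = 0; i < w.size(); i++)
        ans += w[i] * data.x[i];
    return ans;
}

double sigmoid(const vector<double>& w, const Data& data)
{
    double x = WX(w, data);
    double ans = exp(x) / (1 + exp(x));
    return ans;
}

// Log-likelihood L(w) = sum_i [ y_i * (w . x_i) - log(1 + exp(w . x_i)) ]
double Lw(const vector<double>& w)
{
    double ans = 0;
    for (size_t i = 0; i < trainData.size(); i++)
    {
        double x = WX(w, trainData[i]);
        ans += trainData[i].y * x - log(1 + exp(x));
    }
    return ans;
}

// One pass of gradient ascent with step size alpha.
// Note: w[i] is updated in place, so the sums for later components
// already see the new values of the earlier ones.
void gradient(double alpha)
{
    for (size_t i = 0; i < w.size(); i++)
    {
        double tmp = 0;
        for (size_t j = 0; j < trainData.size(); j++)
            tmp += alpha * trainData[j].x[i] * (trainData[j].y - sigmoid(w, trainData[j]));
        w[i] += tmp;
    }
}

void display(int cnt, double objLw, double newLw)
{
    cout << "Iteration " << cnt << ": objLw = " << objLw
         << "  difference between two iterations: " << (newLw - objLw) << endl;
    cout << "Parameters w: ";
    for (size_t i = 0; i < w.size(); i++)
        cout << w[i] << " ";
    cout << endl;
    cout << endl;
}

// Iterates until the log-likelihood changes by no more than delta.
void Logistic()
{
    int cnt = 0;
    double alpha = 0.1;      // step size
    double delta = 0.0001;   // required precision
    double objLw = Lw(w);
    gradient(alpha);
    double newLw = Lw(w);
    while (fabs(newLw - objLw) > delta)
    {
        objLw = newLw;
        gradient(alpha);
        newLw = Lw(w);
        cnt++;
        display(cnt, objLw, newLw);
    }
}

// Classifies every example in testdata.txt with the trained w.
void Separator()
{
    vector<Data> testData;
    loadData(testData, "testdata.txt");
    cout << "Predicted classes:" << endl;
    for (size_t i = 0; i < testData.size(); i++)
    {
        double p1 = sigmoid(w, testData[i]);   // P(y = 1 | x)
        double p0 = 1 - p1;                    // P(y = 0 | x)
        cout << "Instance: ";
        for (size_t j = 0; j < testData[i].x.size(); j++)
            cout << testData[i].x[j] << " ";
        cout << "predicted class: ";
        if (p1 >= p0)
            cout << 1 << endl;
        else
            cout << 0 << endl;
    }
}

int main()
{
    if (!Init())
    {
        cerr << "Could not read any training data from traindata.txt" << endl;
        return 1;
    }
    Logistic();
    Separator();
    return 0;
}
```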
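One numerical detail is worth keeping in mind: `exp(x)` overflows a `double` once `x` exceeds roughly 709, so `exp(x) / (1 + exp(x))` and `log(1 + exp(x))` can turn into `NaN` or `inf` if the weights grow large. The following is a minimal sketch of mathematically equivalent forms that avoid the overflow. `stableSigmoid` and `log1pExp` are hypothetical names and are not part of the program above.

```cpp
#include <cassert>
#include <cmath>

// sigmoid(x) = exp(x) / (1 + exp(x)), evaluated so that exp() is only
// ever called with a non-positive argument and therefore cannot overflow.
double stableSigmoid(double x) {
    assert(!std::isnan(x));
    if (x >= 0.0) return 1.0 / (1.0 + std::exp(-x));
    double e = std::exp(x);  // x < 0, so e lies in [0, 1)
    return e / (1.0 + e);
}

// log(1 + exp(x)) rewritten as max(x, 0) + log1p(exp(-|x|)).
double log1pExp(double x) {
    assert(!std::isnan(x));
    return std::fmax(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}
```

For moderate `x` both functions agree with the direct formulas up to rounding, so they could be swapped in without changing the algorithm.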

Training data: traindata.txt

```
10009 1 0 0 1 0 1
10025 0 0 1 2 0 0
10038 1 0 0 1 1 0
10042 0 0 0 0 1 0
10049 0 0 1 0 0 0
10113 0 0 1 0 1 0
10131 0 0 1 2 1 0
10160 1 0 0 0 0 0
10164 0 0 1 0 1 0
10189 1 0 1 0 0 0
10215 0 0 1 0 1 0
10216 0 0 1 0 0 0
10235 0 0 1 0 1 0
10270 1 0 0 1 0 0
10282 1 0 0 0 1 0
10303 2 0 0 0 1 0
10346 1 0 0 2 1 0
10380 2 0 0 0 1 0
10429 2 0 1 0 0 0
10441 0 0 1 0 1 0
10443 0 0 1 2 0 0
10463 0 0 0 0 0 0
10475 0 0 1 0 1 0
10489 1 0 1 0 1 1
10518 0 0 1 2 1 0
10529 1 0 1 0 0 0
10545 0 0 1 0 0 0
10546 0 0 0 2 0 0
10575 1 0 0 0 1 0
10579 2 0 1 0 0 0
10581 2 0 1 1 1 0
10600 1 0 1 1 0 0
10627 1 0 1 2 0 0
10653 1 0 0 1 1 0
10664 0 0 0 0 1 0
10691 1 1 0 0 1 0
10692 1 0 1 2 1 0
10711 0 0 0 0 1 0
10714 0 0 1 0 0 0
10739 1 0 1 1 1 0
10750 1 0 1 0 1 0
10764 2 0 1 2 0 0
10770 0 0 1 2 1 0
10780 0 0 1 0 1 0
10784 2 0 1 0 1 0
10785 0 0 1 0 1 0
10788 1 0 0 0 0 0
10815 1 0 0 0 1 0
10816 0 0 0 0 1 0
10818 0 0 1 2 1 0
11095 0 1 1 0 0 0
11146 0 1 0 0 1 0
11206 2 1 0 0 0 0
11223 2 1 0 0 0 0
11236 1 1 0 2 0 0
11244 1 1 0 0 0 1
11245 0 1 0 0 0 0
11278 2 1 0 0 1 0
11322 0 1 0 0 1 0
11326 2 1 0 2 1 0
11329 2 1 0 2 1 0
11344 1 1 0 2 1 0
11358 0 1 0 0 0 1
11417 2 1 1 0 1 0
11421 2 1 0 1 1 0
11484 1 1 0 0 0 1
11499 2 1 0 0 0 0
11503 1 1 0 0 1 0
11527 1 1 0 0 0 0
11540 2 1 0 1 1 0
11580 1 1 0 0 1 0
11583 1 0 1 1 0 1
11592 2 1 0 1 1 0
11604 0 1 0 0 1 0
11625 1 0 1 0 0 0
20035 0 0 1 0 0 1
20053 1 0 0 0 0 0
20070 0 0 0 2 1 0
20074 1 0 1 2 0 1
20146 1 0 0 1 1 0
20149 2 0 1 2 1 0
20158 2 0 0 0 1 0
20185 1 0 0 1 1 0
20193 1 0 1 0 1 0
20194 0 0 1 0 0 0
20205 1 0 0 2 1 0
20206 2 0 1 1 1 0
20265 0 0 1 0 1 0
20311 0 0 0 0 1 0
20328 2 0 0 1 0 1
20353 0 0 1 0 0 0
20372 0 0 0 0 0 0
20405 1 0 1 1 1 1
20413 2 0 1 0 1 0
20427 0 0 0 0 0 0
20455 1 0 1 0 1 0
20462 0 0 0 0 1 0
20472 0 0 0 2 0 0
20485 0 0 0 0 0 0
20523 0 0 1 2 0 0
20539 0 0 1 0 1 0
20554 0 0 1 0 0 1
20565 0 0 0 2 1 0
20566 1 0 1 1 1 0
20567 1 0 0 1 1 0
20568 0 0 1 0 1 0
20569 1 0 0 0 0 0
20571 1 0 1 0 1 0
20581 2 0 0 0 1 0
20583 1 0 0 0 1 0
20585 2 0 0 1 1 0
20586 0 0 1 2 1 0
20591 1 0 1 2 0 0
20595 0 0 1 2 1 0
20597 1 0 0 0 0 0
20599 0 0 1 0 1 0
20607 0 0 0 1 1 0
20611 1 0 0 0 1 0
20612 2 0 0 1 1 0
20614 1 0 0 1 1 0
20615 1 0 1 0 0 0
21017 1 1 0 1 1 0
21058 2 1 0 0 1 0
21063 0 1 0 0 0 0
21084 1 1 0 1 0 1
21087 1 1 0 2 1 0
21098 0 1 0 0 0 0
21099 1 1 0 2 0 0
21113 0 1 0 0 1 0
21114 1 1 0 0 1 1
21116 1 1 0 2 1 0
21117 1 0 0 2 1 0
21138 2 1 1 1 1 0
21154 0 1 0 0 1 0
21165 0 1 0 0 1 0
21181 2 1 0 0 0 1
21183 1 1 0 2 1 0
21231 1 1 0 0 1 0
21234 1 1 1 0 0 0
21286 2 1 0 2 1 0
21352 2 1 1 1 0 0
21395 0 1 0 0 1 0
21417 1 1 0 2 1 0
21423 0 1 0 0 1 0
21426 1 1 0 1 1 0
21433 0 1 0 0 1 0
21435 0 1 0 0 0 0
21436 1 1 0 0 0 0
21439 1 1 0 2 1 0
21446 1 1 0 0 0 0
21448 0 1 1 2 0 0
21453 2 1 0 0 1 0
30042 2 0 1 0 0 1
30080 0 0 1 0 1 0
301003 1 0 1 0 0 0
301009 0 0 1 2 1 0
301017 0 0 1 0 0 0
30154 1 0 1 0 1 0
30176 0 0 1 0 1 0
30210 0 0 1 0 1 0
30239 1 0 1 0 1 0
30311 0 0 0 0 0 1
30382 0 0 1 2 1 0
30387 0 0 1 0 1 0
30415 0 0 1 0 1 0
30428 0 0 1 0 0 0
30479 0 0 1 0 0 1
30485 0 0 1 2 1 0
30493 2 0 1 2 1 0
30519 0 0 1 0 1 0
30532 0 0 1 0 1 0
30541 0 0 1 0 1 0
30567 1 0 0 0 0 0
30569 2 0 1 1 1 0
30578 0 0 1 0 0 1
30579 1 0 1 0 0 0
30596 1 0 1 1 1 0
30597 1 0 1 1 0 0
30618 0 0 1 0 0 0
30622 1 0 1 1 1 0
30627 1 0 1 2 0 0
30648 2 0 0 0 1 0
30655 0 0 1 0 0 1
30658 0 0 1 0 1 0
30667 0 0 1 0 1 0
30678 1 0 1 0 0 0
30701 0 0 1 0 0 0
30703 2 0 1 1 0 0
30710 0 0 1 2 0 0
30713 1 0 0 1 1 1
30716 0 0 0 0 1 0
30721 0 0 0 0 0 1
30723 0 0 1 0 1 0
30724 2 0 1 2 1 0
30733 1 0 0 1 0 0
30734 0 0 1 0 0 0
30736 2 0 0 1 1 1
30737 0 0 1 0 0 0
30740 0 0 1 0 1 0
30742 2 0 1 0 1 0
30743 0 0 1 0 1 0
30745 2 0 0 0 1 0
30754 1 0 1 0 1 0
30758 1 0 0 0 1 0
30764 0 0 1 0 0 1
30765 2 0 0 0 0 0
30769 2 0 0 1 1 0
30772 0 0 1 0 1 0
30774 0 0 0 0 1 0
30784 2 0 1 0 0 0
30786 1 0 1 0 1 0
30787 0 0 0 0 1 0
30789 1 0 1 0 1 0
30800 0 0 1 0 0 0
30801 1 0 1 0 1 0
30803 1 0 1 0 1 0
30806 1 0 1 0 1 0
30817 0 0 1 2 0 0
30819 2 0 1 0 1 1
30822 0 0 1 0 1 0
30823 0 0 1 2 1 0
30834 0 0 0 0 0 0
30836 0 0 1 0 1 0
30837 1 0 1 0 1 0
30840 0 0 1 0 1 0
30841 1 0 1 0 0 0
30844 0 0 1 0 1 0
30845 0 0 1 0 0 0
30847 1 0 1 0 0 0
30848 0 0 1 0 1 0
30850 0 0 1 0 1 0
30856 1 0 0 0 1 0
30858 0 0 1 0 0 0
30860 0 0 0 0 1 0
30862 1 0 1 1 1 0
30864 0 0 0 2 0 0
30867 0 0 1 0 1 0
30869 0 0 1 0 1 0
30887 0 0 1 0 1 0
30900 1 0 0 1 1 0
30913 2 0 0 0 1 0
30914 1 0 0 0 0 0
30922 2 0 0 2 1 0
30923 0 0 1 2 1 0
30927 1 0 1 0 0 1
30929 0 0 1 2 1 0
30933 0 0 1 2 1 0
30940 0 0 1 0 1 0
30943 1 0 1 2 1 0
30945 0 0 0 2 0 0
30951 1 0 0 0 0 0
30964 0 0 0 2 1 0
30969 0 0 1 0 1 0
30979 2 0 0 0 1 0
30980 1 0 0 0 0 0
30982 1 0 0 1 1 0
30990 1 0 1 1 1 0
30991 1 0 1 0 1 1
30999 0 0 1 0 1 0
31056 1 1 0 2 1 0
31068 1 1 0 1 0 0
31108 2 1 0 2 1 0
31168 1 1 1 0 0 0
31191 0 1 1 0 0 0
31229 0 1 1 0 0 1
31263 0 1 0 0 1 0
31281 1 1 1 0 0 0
31340 1 1 1 0 1 0
31375 0 1 0 0 1 0
31401 0 1 1 0 0 1
31480 1 1 1 1 1 0
31501 1 1 0 2 1 0
31514 0 1 0 2 0 0
31518 1 1 0 2 1 0
31532 0 0 1 2 1 0
31543 2 1 1 1 1 0
31588 0 1 0 0 1 0
31590 0 0 1 0 1 0
31591 2 1 0 1 1 0
31595 0 1 0 0 1 0
31596 1 1 0 0 0 0
31598 1 1 0 0 1 0
31599 0 1 0 0 0 0
31605 0 1 1 0 0 0
31612 2 1 0 0 1 0
31615 2 1 0 0 0 0
31628 1 1 0 0 1 0
31640 2 1 0 1 1 0
```

Test data: testdata.txt

```
10009 1 0 0 1 0 1
10025 0 0 1 2 0 0
20035 0 0 1 0 0 1
20053 1 0 0 0 0 0
30627 1 0 1 2 0 0
30648 2 0 0 0 1 0
```