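Debugging Hadoop jobs from Eclipse is simple and convenient. I have developed Hadoop programs in Eclipse before, but I never looked closely at the modes in which Eclipse can run them, and from time to time I hit baffling exceptions. The most common one is this: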

    java.lang.RuntimeException: java.lang.ClassNotFoundException: com.qin.sort.TestSort$SMapper
        at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:857)
        at org.apache.hadoop.mapreduce.JobContext.getMapperClass(JobContext.java:199)
        at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:718)
        at org.apache.hadoop.mapred.MapTask.run(MapTask.java:364)
        at org.apache.hadoop.mapred.Child$4.run(Child.java:255)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1190)


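This exception is the most baffling of all. The MR class clearly contains the Mapper as a nested class, yet as soon as the program runs it reports that the class cannot be found. You then hunt for the cause everywhere and come up empty.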
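The top frame of the trace, `Configuration.getClass`, is where the task JVM resolves the mapper class from its name. The following is a minimal plain-Java sketch of that kind of lookup, not Hadoop's actual code; `ClassLookupDemo` and `loadByName` are hypothetical names introduced for illustration. It shows that a class which compiles fine on your machine still produces a `ClassNotFoundException` in any JVM whose classpath does not contain it.

```java
// Hypothetical demo, not Hadoop source: resolves a class by name, which is
// what a task JVM must do when it is only given the mapper's class name.
class ClassLookupDemo {

    /** Loads a class by name and wraps a failure the way the trace above shows. */
    static Class<?> loadByName(String className) {
        try {
            return Class.forName(className);
        } catch (ClassNotFoundException e) {
            // Same shape as "RuntimeException: ClassNotFoundException: ..."
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) {
        // Present on every JVM's classpath, so this lookup succeeds.
        System.out.println(loadByName("java.lang.String"));
        try {
            // Resolvable only if the jar holding this class is on the classpath.
            loadByName("com.qin.sort.TestSort$SMapper");
        } catch (RuntimeException e) {
            System.out.println("lookup failed: " + e.getCause());
        }
    }
}
```

The point of the sketch is only that the lookup depends on the classpath of the JVM doing the lookup, not on the machine where the code was written.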

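In fact the problem is not in the program but in not understanding how Eclipse runs it. Broadly, there are two modes for running Hadoop from Eclipse. The first is Local mode; the second is the real cluster mode used in production. In Local mode the program is not submitted to the Hadoop cluster; it runs on a single machine. The results are still stored on HDFS (the paths in this example are `hdfs://` URIs), but none of the cluster's compute resources are used, and no jar has to be submitted to the cluster. That is why we normally use Local mode to check that an MR program runs correctly.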
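In Hadoop 1.x the mode is selected by the `mapred.job.tracker` property, whose default value is `local`. Here is a rough sketch of that decision in plain Java, using a `Map` as a stand-in for Hadoop's `Configuration`; `RunModeDemo` and `describeMode` are hypothetical names, and the host and port are the ones used elsewhere in this post.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: a Map stands in for Hadoop's Configuration object.
class RunModeDemo {

    /** Describes which mode a job would run in for the given settings. */
    static String describeMode(Map<String, String> conf) {
        // When the property is not set, Hadoop 1.x falls back to "local".
        String tracker = conf.getOrDefault("mapred.job.tracker", "local");
        if ("local".equals(tracker)) {
            return "local mode: runs inside the submitting JVM";
        }
        return "cluster mode: submitted to JobTracker at " + tracker;
    }

    public static void main(String[] args) {
        Map<String, String> conf = new HashMap<>();
        System.out.println(describeMode(conf));

        conf.put("mapred.job.tracker", "192.168.75.130:9001");
        System.out.println(describeMode(conf));
    }
}
```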

Let's look at a sorting job run in Local mode.

Data to sort:

    a 784
    b 12
    c -11
    dd 99999


Program source:

    package com.qin.sort;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.WritableComparator;
    import org.apache.hadoop.mapred.JobConf;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    /**
     * MR job class for testing sorting.
     *
     * QQ tech discussion group: 324714439
     * @author qindongliang
     */
    public class TestSort {

        /**
         * Map class: turns a line "name number" into (number, name).
         */
        private static class SMapper extends Mapper<LongWritable, Text, IntWritable, Text> {

            private Text text = new Text(); // output value (the name)
            private static final IntWritable one = new IntWritable(); // output key (the number to sort by)

            @Override
            protected void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                String s = value.toString();
                // System.out.println("abc: " + s);
                // if ((s.trim().indexOf(" ") != -1)) {
                String ss[] = s.split(" ");
                one.set(Integer.parseInt(ss[1].trim()));
                text.set(ss[0].trim());
                context.write(one, text);
            }
        }

        /**
         * Reduce class: swaps each pair back to (name, number).
         */
        private static class SReduce extends Reducer<IntWritable, Text, Text, IntWritable> {

            private Text text = new Text();

            @Override
            protected void reduce(IntWritable arg0, Iterable<Text> arg1, Context context)
                    throws IOException, InterruptedException {
                for (Text t : arg1) {
                    text.set(t.toString());
                    context.write(text, arg0);
                }
            }
        }

        /**
         * Sort comparator class.
         */
        private static class SSort extends WritableComparator {

            public SSort() {
                super(IntWritable.class, true); // register the key type to compare
            }

            @Override
            public int compare(byte[] arg0, int arg1, int arg2, byte[] arg3,
                    int arg4, int arg5) {
                // The sign controls the order: the minus sign gives descending order.
                return -super.compare(arg0, arg1, arg2, arg3, arg4, arg5);
            }

            @Override
            public int compare(Object a, Object b) {
                return -super.compare(a, b); // minus sign gives descending order
            }
        }

        /**
         * main method
         */
        public static void main(String[] args) throws Exception {
            String inputPath = "hdfs://192.168.75.130:9000/root/output";
            String outputPath = "hdfs://192.168.75.130:9000/root/outputsort";
            JobConf conf = new JobConf();
            // Configuration conf = new Configuration();
            // Automatically prefixes your file paths with hdfs://master:9000/
            // conf.set("fs.default.name", "hdfs://192.168.75.130:9000");
            // JobTracker IP and port; "master" can be configured in /etc/hosts
            // conf.set("mapred.job.tracker", "192.168.75.130:9001");
            // conf.get("mapred.job.tracker");
            System.out.println("模式:  " + conf.get("mapred.job.tracker")); // "模式" = "mode"
            // conf.setJar("tt.jar");
            FileSystem fs = FileSystem.get(conf);
            Path pout = new Path(outputPath);
            if (fs.exists(pout)) {
                fs.delete(pout, true);
                System.out.println("存在此路径, 已经删除......"); // "path exists, deleted"
            }
            Job job = new Job(conf, "sort123");
            job.setJarByClass(TestSort.class);
            job.setOutputKeyClass(IntWritable.class); // declares the output key type for map and reduce
            FileInputFormat.setInputPaths(job, new Path(inputPath));   // input path
            FileOutputFormat.setOutputPath(job, new Path(outputPath)); // output path
            job.setMapperClass(SMapper.class);       // map class
            job.setReducerClass(SReduce.class);      // reduce class
            job.setSortComparatorClass(SSort.class); // sort class
            // job.setInputFormatClass(org.apache.hadoop.mapreduce.lib.input.TextInputFormat.class);
            // job.setOutputFormatClass(TextOutputFormat.class);
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }


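The printed output is as follows (the first two lines are the program's own messages, "mode: local" and "path exists, deleted"):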

    模式:  local
    存在此路径, 已经删除......
    WARN - NativeCodeLoader.<clinit>(52) | Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    WARN - JobClient.copyAndConfigureFiles(746) | Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.
    WARN - JobClient.copyAndConfigureFiles(870) | No job jar file set.  User classes may not be found. See JobConf(Class) or JobConf#setJar(String).
    INFO - FileInputFormat.listStatus(237) | Total input paths to process : 1
    WARN - LoadSnappy.<clinit>(46) | Snappy native library not loaded
    INFO - JobClient.monitorAndPrintJob(1380) | Running job: job_local1242054158_0001
    INFO - LocalJobRunner$Job.run(340) | Waiting for map tasks
    INFO - LocalJobRunner$Job$MapTaskRunnable.run(204) | Starting task: attempt_local1242054158_0001_m_000000_0
    INFO - Task.initialize(534) |  Using ResourceCalculatorPlugin : null
    INFO - MapTask.runNewMapper(729) | Processing split: hdfs://192.168.75.130:9000/root/output/sort.txt:0+28
    INFO - MapTask$MapOutputBuffer.<init>(949) | io.sort.mb = 100
    INFO - MapTask$MapOutputBuffer.<init>(961) | data buffer = 79691776/99614720
    INFO - MapTask$MapOutputBuffer.<init>(962) | record buffer = 262144/327680
    INFO - MapTask$MapOutputBuffer.flush(1289) | Starting flush of map output
    INFO - MapTask$MapOutputBuffer.sortAndSpill(1471) | Finished spill 0
    INFO - Task.done(858) | Task:attempt_local1242054158_0001_m_000000_0 is done. And is in the process of commiting
    INFO - LocalJobRunner$Job.statusUpdate(466) |
    INFO - Task.sendDone(970) | Task 'attempt_local1242054158_0001_m_000000_0' done.
    INFO - LocalJobRunner$Job$MapTaskRunnable.run(229) | Finishing task: attempt_local1242054158_0001_m_000000_0
    INFO - LocalJobRunner$Job.run(348) | Map task executor complete.
    INFO - Task.initialize(534) |  Using ResourceCalculatorPlugin : null
    INFO - LocalJobRunner$Job.statusUpdate(466) |
    INFO - Merger$MergeQueue.merge(408) | Merging 1 sorted segments
    INFO - Merger$MergeQueue.merge(491) | Down to the last merge-pass, with 1 segments left of total size: 35 bytes
    INFO - LocalJobRunner$Job.statusUpdate(466) |
    INFO - Task.done(858) | Task:attempt_local1242054158_0001_r_000000_0 is done. And is in the process of commiting
    INFO - LocalJobRunner$Job.statusUpdate(466) |
    INFO - Task.commit(1011) | Task attempt_local1242054158_0001_r_000000_0 is allowed to commit now
    INFO - FileOutputCommitter.commitTask(173) | Saved output of task 'attempt_local1242054158_0001_r_000000_0' to hdfs://192.168.75.130:9000/root/outputsort
    INFO - LocalJobRunner$Job.statusUpdate(466) | reduce > reduce
    INFO - Task.sendDone(970) | Task 'attempt_local1242054158_0001_r_000000_0' done.
    INFO - JobClient.monitorAndPrintJob(1393) |  map 100% reduce 100%
    INFO - JobClient.monitorAndPrintJob(1448) | Job complete: job_local1242054158_0001
    INFO - Counters.log(585) | Counters: 19
    INFO - Counters.log(587) |   File Output Format Counters
    INFO - Counters.log(589) |     Bytes Written=26
    INFO - Counters.log(587) |   File Input Format Counters
    INFO - Counters.log(589) |     Bytes Read=28
    INFO - Counters.log(587) |   FileSystemCounters
    INFO - Counters.log(589) |     FILE_BYTES_READ=393
    INFO - Counters.log(589) |     HDFS_BYTES_READ=56
    INFO - Counters.log(589) |     FILE_BYTES_WRITTEN=135742
    INFO - Counters.log(589) |     HDFS_BYTES_WRITTEN=26
    INFO - Counters.log(587) |   Map-Reduce Framework
    INFO - Counters.log(589) |     Map output materialized bytes=39
    INFO - Counters.log(589) |     Map input records=4
    INFO - Counters.log(589) |     Reduce shuffle bytes=0
    INFO - Counters.log(589) |     Spilled Records=8
    INFO - Counters.log(589) |     Map output bytes=25
    INFO - Counters.log(589) |     Total committed heap usage (bytes)=455475200
    INFO - Counters.log(589) |     Combine input records=0
    INFO - Counters.log(589) |     SPLIT_RAW_BYTES=112
    INFO - Counters.log(589) |     Reduce input records=4
    INFO - Counters.log(589) |     Reduce input groups=4
    INFO - Counters.log(589) |     Combine output records=0
    INFO - Counters.log(589) |     Reduce output records=4
    INFO - Counters.log(589) |     Map output records=4


The sorted result:

    dd  99999
    a   784
    b   12
    c   -11

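To sanity-check the expected ordering without any Hadoop dependency, the same logic can be sketched in plain Java: split each line on a space as `SMapper` does, sort by the number with a negated comparison as `SSort` does, and emit name then number as `SReduce` does. `LocalSortSketch` and `sortDescending` are hypothetical names; the tab separator is an assumption matching the default key/value separator of Hadoop's text output.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for the MR job above, for checking the expected order.
class LocalSortSketch {

    /** Sorts "name number" lines by number, descending; returns "name<TAB>number". */
    static List<String> sortDescending(List<String> lines) {
        List<String[]> records = new ArrayList<>();
        for (String line : lines) {
            String[] parts = line.trim().split(" "); // "a 784" -> ["a", "784"]
            records.add(new String[] {parts[0].trim(), parts[1].trim()});
        }
        // Negating the natural int comparison yields descending order.
        records.sort((x, y) ->
                -Integer.compare(Integer.parseInt(x[1]), Integer.parseInt(y[1])));

        List<String> out = new ArrayList<>();
        for (String[] r : records) {
            out.add(r[0] + "\t" + r[1]);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList("a 784", "b 12", "c -11", "dd 99999");
        for (String line : sortDescending(input)) {
            System.out.println(line);
        }
    }
}
```

Run on the four sample lines, it prints `dd`, `a`, `b`, `c` in that order, matching the job output.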

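Once the job passes in single-machine mode, we can move on to running it on the Hadoop cluster. There are two ways to do this. The first is to package the whole project into a jar, upload it to Linux, and run the shell command:

    bin/hadoop jar tt.jar com.qin.sort.TestSort

to test it. Note that for convenience I hard-coded the paths in the program, so no input or output path has to be given after the class name. In real development the input and output paths are usually passed in as external arguments for flexibility.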
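A minimal sketch of taking the paths from the command line instead, falling back to the hard-coded values from this post when none are given. `JobPaths` and `fromArgs` are hypothetical names, not part of the original program.

```java
// Hypothetical helper: resolves input/output paths from main's arguments.
class JobPaths {
    static final String DEFAULT_INPUT = "hdfs://192.168.75.130:9000/root/output";
    static final String DEFAULT_OUTPUT = "hdfs://192.168.75.130:9000/root/outputsort";

    final String input;
    final String output;

    JobPaths(String input, String output) {
        this.input = input;
        this.output = output;
    }

    /** No arguments: use the defaults. Two arguments: input, then output. */
    static JobPaths fromArgs(String[] args) {
        if (args.length == 0) {
            return new JobPaths(DEFAULT_INPUT, DEFAULT_OUTPUT);
        }
        if (args.length == 2) {
            return new JobPaths(args[0], args[1]);
        }
        throw new IllegalArgumentException("usage: TestSort [<input> <output>]");
    }

    public static void main(String[] args) {
        JobPaths paths = fromArgs(args);
        System.out.println("input:  " + paths.input);
        System.out.println("output: " + paths.output);
    }
}
```

With something like this in `main`, the command becomes `bin/hadoop jar tt.jar com.qin.sort.TestSort <input> <output>`, since everything after the class name is passed to `main` as `args`.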

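The second way is also convenient: submit the job to the Hadoop cluster directly from Eclipse. Even when Eclipse submits the job, the whole project still has to be packaged into a jar; the only difference is that Eclipse does the submitting for us, which lets us submit and run Hadoop jobs directly from Windows. Uploading the jar remains the mainstream approach, though. To package the whole project into a jar, I provide an Ant script that you can simply run. It bundles the classes that depend on each other so that the project ships as a single unit, and on Hadoop you then only need to specify the jar, the fully qualified class name, and the input and output paths. The Ant script is as follows: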

    <project name="${component.name}" basedir="." default="jar">
        <property environment="env"/>
        <!--
        <property name="hadoop.home" value="${env.HADOOP_HOME}"/>
        -->
        <property name="hadoop.home" value="D:/hadoop-1.2.0"/>
        <!-- name of the jar to build -->
        <property name="jar.name" value="tt.jar"/>
        <path id="project.classpath">
            <fileset dir="lib">
                <include name="*.jar" />
            </fileset>
            <fileset dir="${hadoop.home}">
                <include name="**/*.jar" />
            </fileset>
        </path>
        <target name="clean">
            <delete dir="bin" failonerror="false" />
            <mkdir dir="bin"/>
        </target>
        <target name="build" depends="clean">
            <echo message="${ant.project.name}: ${ant.file}"/>
            <javac destdir="bin" encoding="utf-8" debug="true" includeantruntime="false" debuglevel="lines,vars,source">
                <src path="src"/>
                <exclude name="**/.svn" />
                <classpath refid="project.classpath"/>
            </javac>
            <copy todir="bin">
                <fileset dir="src">
                    <include name="*config*"/>
                </fileset>
            </copy>
        </target>

        <target name="jar" depends="build">
            <copy todir="bin/lib">
                <fileset dir="lib">
                    <include name="**/*.*"/>
                </fileset>
            </copy>

            <path id="lib-classpath">
                <fileset dir="lib" includes="**/*.jar" />
            </path>

            <pathconvert property="my.classpath" pathsep=" ">
                <mapper>
                    <chainedmapper>
                        <!-- strip the absolute path -->
                        <flattenmapper />
                        <!-- add the lib prefix -->
                        <globmapper from="*" to="lib/*" />
                    </chainedmapper>
                </mapper>
                <path refid="lib-classpath" />
            </pathconvert>

            <jar basedir="bin" destfile="${jar.name}">
                <include name="**/*"/>
                <!-- define MANIFEST.MF -->
                <manifest>
                    <attribute name="Class-Path" value="${my.classpath}" />
                </manifest>
            </jar>
        </target>
    </project>


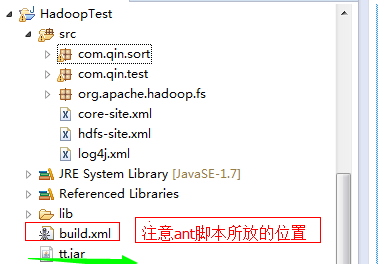

运行上面的这个ant脚本之后,我们的项目就会被打成一个jar包,截图如下:
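Before submitting, it can be worth confirming that the nested mapper class actually ended up inside the jar. Below is a small sketch using the JDK's `java.util.jar.JarFile`; `JarCheck`, `classEntryName`, and `containsClass` are hypothetical names, and `tt.jar` in the working directory is an assumption based on the `jar.name` property in the script.

```java
import java.io.File;
import java.io.IOException;
import java.util.jar.JarFile;

// Hypothetical helper: checks whether a jar contains a given class file.
class JarCheck {

    /** Maps a binary class name to its entry path inside a jar. */
    static String classEntryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    /** Returns true if the jar holds an entry for the named class. */
    static boolean containsClass(String jarPath, String className) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getEntry(classEntryName(className)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        String jarPath = args.length > 0 ? args[0] : "tt.jar";
        if (!new File(jarPath).isFile()) {
            System.out.println("jar not found: " + jarPath);
            return;
        }
        String mapper = "com.qin.sort.TestSort$SMapper";
        System.out.println(mapper + " in jar: " + containsClass(jarPath, mapper));
    }
}
```

Nested classes compile to their own `.class` files, so `TestSort$SMapper` is looked up as `com/qin/sort/TestSort$SMapper.class`.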

With the jar in hand, let's first test submitting the job to the Hadoop cluster from Eclipse. Only a small change to the main method is needed:

        /**
         * main method
         */
        public static void main(String[] args) throws Exception {
            String inputPath = "hdfs://192.168.75.130:9000/root/output";
            String outputPath = "hdfs://192.168.75.130:9000/root/outputsort";
            JobConf conf = new JobConf();
            // Configuration conf = new Configuration(); // this conf can be used to test Local mode
            // The next line is not needed if a mapred-site.xml file is present under src.
            // Note: only use it in non-Local mode.
            conf.set("mapred.job.tracker", "192.168.75.130:9001");
            // conf.get("mapred.job.tracker");
            System.out.println("模式:  " + conf.get("mapred.job.tracker")); // "模式" = "mode"
            // conf.setJar("tt.jar"); // for use in non-Local mode
            FileSystem fs = FileSystem.get(conf);
            Path pout = new Path(outputPath);
            if (fs.exists(pout)) {
                fs.delete(pout, true);
                System.out.println("存在此路径, 已经删除......"); // "path exists, deleted"
            }
            Job job = new Job(conf, "sort123");
            job.setJarByClass(TestSort.class);
            job.setOutputKeyClass(IntWritable.class); // declares the output key type for map and reduce
            FileInputFormat.setInputPaths(job, new Path(inputPath));   // input path
            FileOutputFormat.setOutputPath(job, new Path(outputPath)); // output path
            job.setMapperClass(SMapper.class);       // map class
            job.setReducerClass(SReduce.class);      // reduce class
            job.setSortComparatorClass(SSort.class); // sort class
            // job.setInputFormatClass(org.apache.hadoop.mapreduce.lib.input.TextInputFormat.class);
            // job.setOutputFormatClass(TextOutputFormat.class);
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }


Run the program; the output is as follows:

    模式:  192.168.75.130:9001
    存在此路径, 已经删除......
    WARN - JobClient.copyAndConfigureFiles(746) | Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.
    INFO - FileInputFormat.listStatus(237) | Total input paths to process : 1
    WARN - NativeCodeLoader.<clinit>(52) | Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    WARN - LoadSnappy.<clinit>(46) | Snappy native library not loaded
    INFO - JobClient.monitorAndPrintJob(1380) | Running job: job_201403252058_0035
    INFO - JobClient.monitorAndPrintJob(1393) |  map 0% reduce 0%
    INFO - JobClient.monitorAndPrintJob(1393) |  map 100% reduce 0%
    INFO - JobClient.monitorAndPrintJob(1393) |  map 100% reduce 33%
    INFO - JobClient.monitorAndPrintJob(1393) |  map 100% reduce 100%
    INFO - JobClient.monitorAndPrintJob(1448) | Job complete: job_201403252058_0035
    INFO - Counters.log(585) | Counters: 29
    INFO - Counters.log(587) |   Job Counters
    INFO - Counters.log(589) |     Launched reduce tasks=1
    INFO - Counters.log(589) |     SLOTS_MILLIS_MAPS=8498
    INFO - Counters.log(589) |     Total time spent by all reduces waiting after reserving slots (ms)=0
    INFO - Counters.log(589) |     Total time spent by all maps waiting after reserving slots (ms)=0
    INFO - Counters.log(589) |     Launched map tasks=1
    INFO - Counters.log(589) |     Data-local map tasks=1
    INFO - Counters.log(589) |     SLOTS_MILLIS_REDUCES=9667
    INFO - Counters.log(587) |   File Output Format Counters
    INFO - Counters.log(589) |     Bytes Written=26
    INFO - Counters.log(587) |   FileSystemCounters
    INFO - Counters.log(589) |     FILE_BYTES_READ=39
    INFO - Counters.log(589) |     HDFS_BYTES_READ=140
    INFO - Counters.log(589) |     FILE_BYTES_WRITTEN=117654
    INFO - Counters.log(589) |     HDFS_BYTES_WRITTEN=26
    INFO - Counters.log(587) |   File Input Format Counters
    INFO - Counters.log(589) |     Bytes Read=28
    INFO - Counters.log(587) |   Map-Reduce Framework
    INFO - Counters.log(589) |     Map output materialized bytes=39
    INFO - Counters.log(589) |     Map input records=4
    INFO - Counters.log(589) |     Reduce shuffle bytes=39
    INFO - Counters.log(589) |     Spilled Records=8
    INFO - Counters.log(589) |     Map output bytes=25
    INFO - Counters.log(589) |     Total committed heap usage (bytes)=176033792
    INFO - Counters.log(589) |     CPU time spent (ms)=1140
    INFO - Counters.log(589) |     Combine input records=0
    INFO - Counters.log(589) |     SPLIT_RAW_BYTES=112
    INFO - Counters.log(589) |     Reduce input records=4
    INFO - Counters.log(589) |     Reduce input groups=4
    INFO - Counters.log(589) |     Combine output records=0
    INFO - Counters.log(589) |     Physical memory (bytes) snapshot=259264512
    INFO - Counters.log(589) |     Reduce output records=4
    INFO - Counters.log(589) |     Virtual memory (bytes) snapshot=1460555776
    INFO - Counters.log(589) |     Map output records=4


As we can see, the job ran normally. The sorted output is as follows:

- dd  99999

- a   784

- b   12

- c   -11

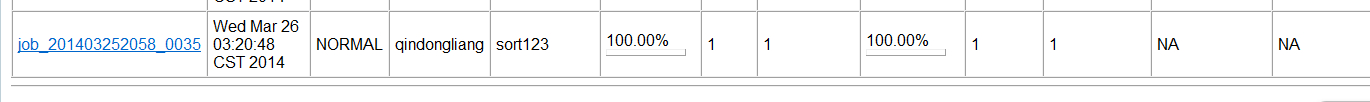

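The result is the same as in Local mode. One difference from Local mode is that we can find the status of the job we just ran on the JobTracker page at http://192.168.75.130:50030/jobtracker.jsp; a job run in Local mode does not appear there.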

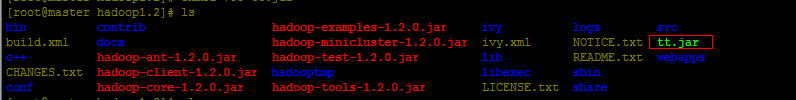

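Finally, let's look at how to upload the jar to Linux and submit the job to the Hadoop cluster from there. We already built the jar earlier, so now we only need to upload it to the Linux machine: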

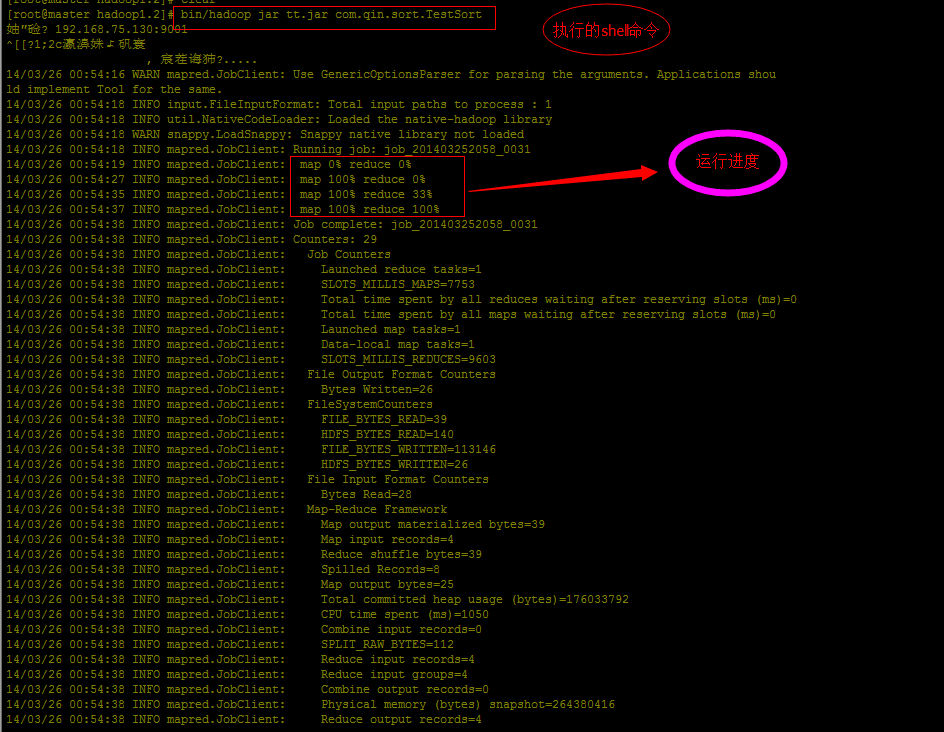

Then run the program with a shell command:

With that, the job has run successfully. One last thing to note: when running the sort job, you may hit the following exception:

- java.lang.Exception: java.io.IOException: Type mismatch in key from map: expected org.apache.hadoop.io.LongWritable, recieved org.apache.hadoop.io.IntWritable

-     at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:354)

- Caused by: java.io.IOException: Type mismatch in key from map: expected org.apache.hadoop.io.LongWritable, recieved org.apache.hadoop.io.IntWritable

-     at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.collect(MapTask.java:1019)

-     at org.apache.hadoop.mapred.MapTask$NewOutputCollector.write(MapTask.java:690)

-     at org.apache.hadoop.mapreduce.TaskInputOutputContext.write(TaskInputOutputContext.java:80)

-     at com.qin.sort.TestSort$SMapper.map(TestSort.java:51)

-     at com.qin.sort.TestSort$SMapper.map(TestSort.java:1)

-     at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:145)

-     at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:764)

-     at org.apache.hadoop.mapred.MapTask.run(MapTask.java:364)

-     at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:223)

-     at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)

-     at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:334)

-     at java.util.concurrent.FutureTask.run(FutureTask.java:166)

-     at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1110)

-     at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:603)

-     at java.lang.Thread.run(Thread.java:722)

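This exception usually means that the output Key or Value type was not specified, or that the specified type does not match what the Mapper actually emits. In the trace above, the Mapper writes an IntWritable key while the job still expects the default key type, LongWritable. Setting the output Key and Value types correctly fixes it: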

- job.setOutputKeyClass(IntWritable.class);

- job.setOutputValueClass(Text.class);

Once these are set, the job can be tested and run normally.
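To make the cause of the "Type mismatch in key from map" error more concrete, the fallback rule behind it can be modeled in plain Java. This is only a minimal sketch under stated assumptions: `TypeCheckSketch`, `JobModel`, and `checkMapKey` are hypothetical names, and the nested `LongWritable` and `IntWritable` classes are empty stand-ins rather than Hadoop's real types. It models the behavior that an unset map output key class falls back to the job output key class, which in turn falls back to LongWritable.

```java
// Plain-Java model of how the expected map output key class is resolved.
// The nested "Writable" classes are empty stand-ins, NOT Hadoop's real types.
class TypeCheckSketch {
    static class LongWritable {}
    static class IntWritable {}

    /** Hypothetical, simplified stand-in for the job's key-class settings. */
    static class JobModel {
        private Class<?> outputKeyClass;    // null until setOutputKeyClass is called
        private Class<?> mapOutputKeyClass; // null until setMapOutputKeyClass is called

        void setOutputKeyClass(Class<?> c) { outputKeyClass = c; }
        void setMapOutputKeyClass(Class<?> c) { mapOutputKeyClass = c; }

        // An unset job output key class falls back to LongWritable.
        Class<?> getOutputKeyClass() {
            return outputKeyClass != null ? outputKeyClass : LongWritable.class;
        }

        // An unset map output key class falls back to the job output key class.
        Class<?> getMapOutputKeyClass() {
            return mapOutputKeyClass != null ? mapOutputKeyClass : getOutputKeyClass();
        }
    }

    /** Mimics the class comparison made when the mapper writes a key. */
    static String checkMapKey(JobModel job, Object key) {
        Class<?> expected = job.getMapOutputKeyClass();
        if (key.getClass() != expected) {
            return "Type mismatch in key from map: expected "
                    + expected.getSimpleName() + ", received "
                    + key.getClass().getSimpleName();
        }
        return "ok";
    }

    public static void main(String[] args) {
        JobModel job = new JobModel();
        // Nothing configured: an IntWritable key is rejected.
        System.out.println(checkMapKey(job, new IntWritable()));
        // After declaring the key class, the same key is accepted.
        job.setOutputKeyClass(IntWritable.class);
        System.out.println(checkMapKey(job, new IntWritable()));
    }
}
```

In a real job, the corresponding calls are `job.setOutputKeyClass(...)` and, when the Mapper and Reducer emit different key types, `job.setMapOutputKeyClass(...)`.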
