Contents

- About this article
- Importing the required libraries
- image_preprocess
- load_weights
- postprocess_boxes
- nms
- bboxes_iou
- draw_bbox
- read_class_names
- get_anchors
- Complete code

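About this article

This series of articles explains the code published on GitHub by malin9402. This article walks through the utils.py file of the YOLOv3 project.

If you only want to run the code from GitHub, see the article "Notes on the YOLOv3 code".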

Importing the required libraries

    import cv2
    import random
    import colorsys
    import numpy as np
    from core.config import cfg

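image_preprocess

Inputs:

- image: the original image to be processed;
- target_size: the size of the processed image, as (height, width);
- gt_boxes: optional boxes (the ground-truth boxes during training) that should be transformed together with the image. Each box is defined by its top-left and bottom-right corners:
  - gt_boxes[:, 0]: x1
  - gt_boxes[:, 1]: y1
  - gt_boxes[:, 2]: x2
  - gt_boxes[:, 3]: y2

Steps:

- Get the height (ih) and width (iw) of the target image and the height (h) and width (w) of the original image;
- Compute the scale factor (scale), the smaller of iw/w and ih/h, so that the aspect ratio is preserved;
- Resize the original image to height scale x h and width scale x w;
- Center the resized image on a target-size canvas and fill the missing pixels with 128;
- Normalize the pixel values by dividing by 255;
- If gt_boxes is given, apply the same scale and offset to the box coordinates and return them along with the image (the boxes are not drawn on the image).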

    def image_preprocess(image, target_size, gt_boxes=None):
        ih, iw = target_size    # ih=416, iw=416
        h, w, _ = image.shape   # h=900, w=1352

        scale = min(iw/w, ih/h)                      # scale=0.3077
        nw, nh = int(scale * w), int(scale * h)      # nw=416, nh=276
        image_resized = cv2.resize(image, (nw, nh))  # image_resized.shape=(276, 416, 3)

        image_paded = np.full(shape=[ih, iw, 3], fill_value=128.0)  # image_paded.shape=(416, 416, 3)
        dw, dh = (iw - nw) // 2, (ih-nh) // 2        # dw=0, dh=70
        image_paded[dh:nh+dh, dw:nw+dw, :] = image_resized
        image_paded = image_paded / 255.

        if gt_boxes is None:
            return image_paded
        else:
            # move the boxes into the coordinates of the padded image (modifies gt_boxes in place)
            gt_boxes[:, [0, 2]] = gt_boxes[:, [0, 2]] * scale + dw
            gt_boxes[:, [1, 3]] = gt_boxes[:, [1, 3]] * scale + dh
            return image_paded, gt_boxes

Note that cv2.resize takes the target size as (width, height), which is why the code passes (nw, nh) rather than (nh, nw), even though the resulting array has shape (nh, nw, 3).
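To make the geometry concrete, here is a minimal sketch that computes only the letterbox parameters (scale, resized size, padding offsets) and applies them to one box, without touching any pixels. The helper name `letterbox_params` and the 512 x 1024 example image are assumptions chosen so that the arithmetic is exact; they are not part of utils.py.

```python
import numpy as np

def letterbox_params(h, w, target_size):
    """Hypothetical helper: the same arithmetic as image_preprocess, minus the pixel work."""
    ih, iw = target_size
    scale = min(iw / w, ih / h)               # keep the aspect ratio
    nw, nh = int(scale * w), int(scale * h)   # size of the resized image
    dw, dh = (iw - nw) // 2, (ih - nh) // 2   # padding on the left and on the top
    return scale, (nw, nh), (dw, dh)

# A 512 (high) x 1024 (wide) image letterboxed into 416 x 416.
scale, (nw, nh), (dw, dh) = letterbox_params(512, 1024, (416, 416))
print(scale, nw, nh, dw, dh)  # 0.40625 416 208 0 104

# One box (x1, y1, x2, y2) in original-image pixels, moved into the padded image.
boxes = np.array([[100., 50., 300., 250.]])
boxes[:, [0, 2]] = boxes[:, [0, 2]] * scale + dw
boxes[:, [1, 3]] = boxes[:, [1, 3]] * scale + dh
print(boxes)
```

Because the image is wider than it is tall, the width fills the canvas (dw is 0) and the height is padded by 104 pixels above and below.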

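load_weights

The load_weights function loads the pretrained weight file yolov3.weights into the model.

First, we need to know how many convolutional layers the model contains:

    count = 0
    for layer in model.layers:
        if layer.name[0] == 'c':
            count += 1

This gives count=75, so the model contains 75 convolutional layers. Printing the names of all layers also shows that the three convolutional layers conv2d_58, conv2d_66 and conv2d_74 are not followed by a BN layer (these three layers carry a bias term instead).

Then we set the weights of the convolutional layers and the BN layers in turn, after which the model can be used directly.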
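The layer-name bookkeeping that the loader relies on can be sketched without TensorFlow. The snippet below only reproduces how each conv2d layer is paired with a batch_normalization layer; the helper name `conv_bn_pairs` is an assumption for illustration, and the names assume the default Keras auto-naming (`conv2d`, `conv2d_1`, ...) in a freshly built model.

```python
def conv_bn_pairs(num_conv=75, no_bn=(58, 66, 74)):
    """Hypothetical helper: list (conv_name, bn_name or None) in loading order."""
    pairs = []
    j = 0  # index of the next BN layer; it only advances when a BN layer is used
    for i in range(num_conv):
        conv_name = 'conv2d_%d' % i if i > 0 else 'conv2d'
        if i not in no_bn:
            bn_name = 'batch_normalization_%d' % j if j > 0 else 'batch_normalization'
            j += 1
        else:
            bn_name = None  # output layers: convolution with bias, no BN
        pairs.append((conv_name, bn_name))
    return pairs

pairs = conv_bn_pairs()
print(pairs[0])    # ('conv2d', 'batch_normalization')
print(pairs[58])   # ('conv2d_58', None)
print(pairs[59])   # ('conv2d_59', 'batch_normalization_58')
```

The two counters drift apart after the first layer without BN, which is why the loader keeps a separate index j instead of reusing i.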

    def load_weights(model, weights_file):
        wf = open(weights_file, 'rb')
        # the first five int32 values are the file header, not weights
        major, minor, revision, seen, _ = np.fromfile(wf, dtype=np.int32, count=5)

        j = 0
        for i in range(75):  # the model has 75 convolutional layers
            conv_layer_name = 'conv2d_%d' %i if i > 0 else 'conv2d'
            bn_layer_name = 'batch_normalization_%d' %j if j > 0 else 'batch_normalization'

            conv_layer = model.get_layer(conv_layer_name)
            filters = conv_layer.filters
            k_size = conv_layer.kernel_size[0]
            in_dim = conv_layer.input_shape[-1]

            if i not in [58, 66, 74]:
                # darknet weights: [beta, gamma, mean, variance]
                bn_weights = np.fromfile(wf, dtype=np.float32, count=4 * filters)
                # tf weights: [gamma, beta, mean, variance]
                bn_weights = bn_weights.reshape((4, filters))[[1, 0, 2, 3]]  # swap the first two rows
                bn_layer = model.get_layer(bn_layer_name)
                j += 1
            else:
                conv_bias = np.fromfile(wf, dtype=np.float32, count=filters)

            # darknet shape (out_dim, in_dim, height, width)
            conv_shape = (filters, in_dim, k_size, k_size)
            # np.prod replaces the original np.product, which was removed in NumPy 2.0
            conv_weights = np.fromfile(wf, dtype=np.float32, count=np.prod(conv_shape))
            # tf shape (height, width, in_dim, out_dim)
            conv_weights = conv_weights.reshape(conv_shape).transpose([2, 3, 1, 0])

            if i not in [58, 66, 74]:
                conv_layer.set_weights([conv_weights])
                bn_layer.set_weights(bn_weights)
            else:
                conv_layer.set_weights([conv_weights, conv_bias])

        # passes only if the whole file has been consumed
        assert len(wf.read()) == 0, 'failed to read all data'
        wf.close()

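Some of the calls in the code above deserve a short explanation:

- model.get_layer(conv_layer_name): returns the layer of model whose name is conv_layer_name.
- conv_layer.filters: the number of convolution kernels (output channels) of conv_layer.
- conv_layer.kernel_size: the kernel size of conv_layer.
- conv_layer.input_shape: the shape of the input of conv_layer.
- np.fromfile(wf, dtype, count): reads count values from the open file wf. It behaves like an iterator: each call continues where the previous one stopped. The first five int32 values of the file are header fields (major, minor, revision, seen and one value the code ignores) rather than weights, so they are read and then not used when assigning weights.
- conv_layer.set_weights([conv_weights]): assigns conv_weights to the conv_layer layer of the model.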
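The sequential behavior of np.fromfile and the two layout conversions can be tried on a tiny file. The sketch below writes a made-up weights file for one hypothetical layer (2 filters, 3 input channels, 1 x 1 kernel) and reads it back the same way load_weights does; the header values and the file name are assumptions, not real YOLOv3 data.

```python
import os
import tempfile
import numpy as np

filters, in_dim, k_size = 2, 3, 1   # a tiny hypothetical conv layer

header = np.array([0, 2, 0, 32000, 0], dtype=np.int32)        # made-up header values
bn = np.arange(4 * filters, dtype=np.float32)                 # rows: beta, gamma, mean, variance
conv = np.arange(filters * in_dim * k_size * k_size, dtype=np.float32) + 100

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'toy.weights')
    with open(path, 'wb') as f:
        f.write(header.tobytes() + bn.tobytes() + conv.tobytes())

    with open(path, 'rb') as wf:
        # every call continues where the previous one stopped
        head = np.fromfile(wf, dtype=np.int32, count=5)
        bn_read = np.fromfile(wf, dtype=np.float32, count=4 * filters)
        conv_read = np.fromfile(wf, dtype=np.float32, count=filters * in_dim * k_size * k_size)
        leftover = len(wf.read())   # 0 when everything has been consumed

# darknet order [beta, gamma, mean, variance] -> Keras order [gamma, beta, mean, variance]
bn_tf = bn_read.reshape((4, filters))[[1, 0, 2, 3]]
# darknet (out_dim, in_dim, h, w) -> TensorFlow (h, w, in_dim, out_dim)
conv_tf = conv_read.reshape((filters, in_dim, k_size, k_size)).transpose([2, 3, 1, 0])

print(bn_tf)
print(conv_tf.shape)   # (1, 1, 3, 2)
```

After the transpose, `conv_tf[0, 0, :, n]` holds the weights of filter n, which is the layout a Keras Conv2D kernel expects.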

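postprocess_boxes

The postprocess_boxes function determines the final information of the predicted boxes: position, confidence (the probability that the box contains an object) and class.

Inputs:

- pred_bbox: all detection boxes produced by the YOLOv3 network for one image. The network outputs boxes on three feature maps, with shapes (1, 52, 52, 3, 15), (1, 26, 26, 3, 15) and (1, 13, 13, 3, 15), so after flattening pred_bbox has shape (13x13x3+26x26x3+52x52x3, 15) = (10647, 15). Each row holds 4 box values, 1 confidence and 10 class probabilities.
- org_img_shape: the size of the original image, as (height, width).
- input_size: the size of the image fed to the network.
- score_threshold: the score threshold; only boxes whose score exceeds it are kept.

Procedure:

- The network describes each box by its center point, width and height, so first convert these to the top-left and bottom-right corners: (x, y, w, h) → (xmin, ymin, xmax, ymax).
- At this point the boxes are expressed in the coordinates of the resized and padded network input (input_size x input_size), so map them back to the original image: (xmin, ymin, xmax, ymax) → (xmin_org, ymin_org, xmax_org, ymax_org).
- Clip the boxes to the image boundary. If a box lies entirely outside the image, set all four of its coordinates to 0, so that its area is 0 as well.
- Compute the area of every box; an area of 0 marks a box that was outside the image, and such boxes are discarded.
- Compute the score of every box (class probability * confidence) and discard the boxes whose score is not above the threshold.

Output: all remaining boxes in original-image coordinates, one row per box in the form [position (4 values), score, class], so the shape is [-1, 6].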
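The first and last steps of the procedure (corner conversion and scoring) can be checked on two hand-made predictions. This is a minimal sketch with 3 classes instead of 10; the numbers and the 0.3 threshold are assumptions made up for illustration.

```python
import numpy as np

# Two hypothetical predictions: (x, y, w, h, conf, p_class0, p_class1, p_class2)
pred_bbox = np.array([
    [100., 100., 40., 20., 0.9, 0.1, 0.8, 0.1],
    [ 50.,  60., 10., 10., 0.5, 0.3, 0.3, 0.4],
])
pred_xywh = pred_bbox[:, 0:4]
pred_conf = pred_bbox[:, 4]
pred_prob = pred_bbox[:, 5:]

# (x, y, w, h) -> (xmin, ymin, xmax, ymax): center minus/plus half the size
pred_coor = np.concatenate([pred_xywh[:, :2] - pred_xywh[:, 2:] * 0.5,
                            pred_xywh[:, :2] + pred_xywh[:, 2:] * 0.5], axis=-1)

# score = confidence * probability of the most likely class
classes = np.argmax(pred_prob, axis=-1)
scores = pred_conf * pred_prob[np.arange(len(pred_coor)), classes]
keep = scores > 0.3

print(pred_coor)   # corners of the two boxes
print(classes)     # most likely class per box
print(scores)      # 0.9 * 0.8 and 0.5 * 0.4
print(keep)
```

The second box is dropped: its best class probability is only 0.4, so its score of 0.2 falls below the threshold even though its confidence is 0.5.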

    def postprocess_boxes(pred_bbox, org_img_shape, input_size, score_threshold):
        valid_scale = [0, np.inf]
        pred_bbox = np.array(pred_bbox)

        pred_xywh = pred_bbox[:, 0:4]
        pred_conf = pred_bbox[:, 4]
        pred_prob = pred_bbox[:, 5:]

        # Step 1: (x, y, w, h) --> (xmin, ymin, xmax, ymax), still in network-input coordinates
        pred_coor = np.concatenate([pred_xywh[:, :2] - pred_xywh[:, 2:] * 0.5,
                                    pred_xywh[:, :2] + pred_xywh[:, 2:] * 0.5], axis=-1)

        # Step 2: map to the original image:
        # (xmin, ymin, xmax, ymax) -> (xmin_org, ymin_org, xmax_org, ymax_org)
        org_h, org_w = org_img_shape
        resize_ratio = min(input_size / org_w, input_size / org_h)  # resize factor
        # One of dw and dh is 0 (depending on whether the original image is wider or taller).
        # If dh is not 0, it is half the gap between the resized height and input_size.
        dw = (input_size - resize_ratio * org_w) / 2
        dh = (input_size - resize_ratio * org_h) / 2
        # subtracting dw and dh removes the padding
        pred_coor[:, 0::2] = 1.0 * (pred_coor[:, 0::2] - dw) / resize_ratio  # xmin and xmax
        pred_coor[:, 1::2] = 1.0 * (pred_coor[:, 1::2] - dh) / resize_ratio  # ymin and ymax

        # Step 3: clip to the image boundary and zero the boxes that lie outside it
        # xmin and ymin: values below 0 are replaced by 0;
        # xmax and ymax: values beyond the bottom-right corner are replaced by that corner.
        pred_coor = np.concatenate([np.maximum(pred_coor[:, :2], [0, 0]),
                                    np.minimum(pred_coor[:, 2:], [org_w - 1, org_h - 1])], axis=-1)
        # Example: a box [-3, -2, -1, -2] becomes [0, 0, -1, -2] after clipping,
        # so xmin > xmax and invalid_mask is True for it.
        invalid_mask = np.logical_or((pred_coor[:, 0] > pred_coor[:, 2]), (pred_coor[:, 1] > pred_coor[:, 3]))
        pred_coor[invalid_mask] = 0

        # Step 4: box scale = square root of the box area; a scale of 0 marks an out-of-range box
        bboxes_scale = np.sqrt(np.multiply.reduce(pred_coor[:, 2:4] - pred_coor[:, 0:2], axis=-1))
        scale_mask = np.logical_and((valid_scale[0] < bboxes_scale), (bboxes_scale < valid_scale[1]))  # boolean mask of valid boxes

        # Step 5: score each box and keep those above the threshold
        # score = confidence * class probability, where confidence is the probability that
        # the box contains an object and class probability is that of the most likely class
        classes = np.argmax(pred_prob, axis=-1)
        scores = pred_conf * pred_prob[np.arange(len(pred_coor)), classes]
        score_mask = scores > score_threshold

        mask = np.logical_and(scale_mask, score_mask)
        coors, scores, classes = pred_coor[mask], scores[mask], classes[mask]

        # concatenate coordinates, scores and classes column-wise
        return np.concatenate([coors, scores[:, np.newaxis], classes[:, np.newaxis]], axis=-1)
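Steps 2 to 4 are the inverse of the letterbox transform applied in image_preprocess. The following sketch runs them on two hand-made boxes for a hypothetical 512 x 1024 original image and a 416 x 416 network input; the numbers are assumptions picked so that the arithmetic is easy to follow.

```python
import numpy as np

org_h, org_w, input_size = 512, 1024, 416
resize_ratio = min(input_size / org_w, input_size / org_h)   # 0.40625
dw = (input_size - resize_ratio * org_w) / 2                 # 0.0, the width fills the input
dh = (input_size - resize_ratio * org_h) / 2                 # 104.0, padding above and below

# (xmin, ymin, xmax, ymax) in network-input coordinates
pred_coor = np.array([
    [ 40.625, 124.3125, 121.875, 205.5625],   # inside the image area
    [100.0,    10.0,    200.0,    90.0],      # entirely inside the top padding band
])

# Step 2: remove the padding, then undo the scaling
pred_coor[:, 0::2] = (pred_coor[:, 0::2] - dw) / resize_ratio
pred_coor[:, 1::2] = (pred_coor[:, 1::2] - dh) / resize_ratio

# Step 3: clip to the image, then zero boxes whose corners have crossed over
pred_coor = np.concatenate([np.maximum(pred_coor[:, :2], [0, 0]),
                            np.minimum(pred_coor[:, 2:], [org_w - 1, org_h - 1])], axis=-1)
invalid_mask = np.logical_or(pred_coor[:, 0] > pred_coor[:, 2],
                             pred_coor[:, 1] > pred_coor[:, 3])
pred_coor[invalid_mask] = 0

# Step 4: boxes with zero scale are dropped
bboxes_scale = np.sqrt(np.multiply.reduce(pred_coor[:, 2:4] - pred_coor[:, 0:2], axis=-1))
scale_mask = bboxes_scale > 0

print(pred_coor)
print(scale_mask)
```

The first box maps back to (100, 50, 300, 250) in the original image. The second one ends up with a negative ymax after the padding is removed, so it is zeroed and then filtered out by the scale mask.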

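nms

The nms function removes redundant predicted boxes, that is, it performs non-maximum suppression.

Inputs:

- bboxes: the detection boxes in original-image coordinates;
- iou_threshold: the IoU threshold. If the IoU between a box and the highest-scoring box of the same class exceeds this threshold, the box is considered redundant and is discarded;
- method: the suppression method, either 'nms' or 'soft-nms';
- sigma: a parameter used only by the 'soft-nms' method.

Procedure (for the 'nms' method):

- Determine which classes appear among the detection boxes;
- For each class:
  - Select the boxes of that class;
  - Remove the redundant boxes of that class according to the IoU threshold:
    - Take the highest-scoring box among the remaining boxes of this class and compute its IoU with all the other boxes of the same class;
    - Boxes whose IoU exceeds the threshold are assumed to describe the same object as the box just taken, so they are removed;
    - Repeat until no boxes of this class remain.
- For the 'soft-nms' method, see the Soft-NMS paper and its reference code (both linked in the docstring of nms in the complete code at the end).

Output: the set of detection boxes with the redundant ones removed.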
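The difference between the two methods comes down to the weight that multiplies the score of each remaining box. A minimal sketch of both weightings, assuming the default sigma of 0.3, an IoU threshold of 0.45 and a made-up score of 0.8 for every box:

```python
import numpy as np

sigma, iou_threshold = 0.3, 0.45
iou = np.array([0.0, 0.3, 0.6, 0.9])   # IoU with the current best box (made-up values)

# 'nms': the weight is 0 above the threshold and 1 otherwise
hard_weight = np.where(iou > iou_threshold, 0.0, 1.0)

# 'soft-nms': Gaussian decay, the larger the overlap the stronger the penalty
soft_weight = np.exp(-(iou ** 2) / sigma)

scores = np.full(4, 0.8)
print(scores * hard_weight)   # overlapping boxes drop to exactly 0
print(scores * soft_weight)   # weights are roughly [1.0, 0.741, 0.301, 0.067]
```

The Gaussian weight is always strictly positive, so with 'soft-nms' a score is lowered but does not reach 0 through this weighting alone.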

    def nms(bboxes, iou_threshold, sigma=0.3, method='nms'):
        # the class ids that actually appear among the boxes, e.g. [0.0, 3.0, 7.0]
        classes_in_img = list(set(bboxes[:, 5]))
        best_bboxes = []  # the boxes that are finally kept

        for cls in classes_in_img:  # for each class:
            # 1. select the boxes of this class
            cls_mask = (bboxes[:, 5] == cls)
            cls_bboxes = bboxes[cls_mask]

            # 2. remove the redundant boxes of this class
            while len(cls_bboxes) > 0:
                max_ind = np.argmax(cls_bboxes[:, 4])  # index of the highest score in this class
                best_bbox = cls_bboxes[max_ind]        # the box with the highest score
                best_bboxes.append(best_bbox)          # keep it
                # the remaining boxes, without the one just taken
                cls_bboxes = np.concatenate([cls_bboxes[: max_ind], cls_bboxes[max_ind + 1:]])
                # IoU between the best box and every remaining box
                iou = bboxes_iou(best_bbox[np.newaxis, :4], cls_bboxes[:, :4])
                # one weight per remaining box, 1 by default
                weight = np.ones((len(iou),), dtype=np.float32)

                assert method in ['nms', 'soft-nms']

                # 'nms': boxes whose IoU exceeds the threshold get weight 0 and are removed;
                # 'soft-nms': scores are decayed by exp(-iou^2 / sigma) instead
                if method == 'nms':
                    iou_mask = iou > iou_threshold
                    weight[iou_mask] = 0.0

                if method == 'soft-nms':
                    weight = np.exp(-(1.0 * iou ** 2 / sigma))

                cls_bboxes[:, 4] = cls_bboxes[:, 4] * weight
                score_mask = cls_bboxes[:, 4] > 0.   # drop boxes whose score became 0
                cls_bboxes = cls_bboxes[score_mask]

        return best_bboxes
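A toy run helps to see the greedy loop at work. The sketch below is a compact, self-contained re-implementation of the hard 'nms' branch only (the helper names `iou_one_to_many` and `greedy_nms` and the four boxes are assumptions for illustration, not the functions from utils.py).

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an array of boxes, all as (xmin, ymin, xmax, ymax)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    left_up = np.maximum(box[:2], boxes[:, :2])
    right_down = np.minimum(box[2:4], boxes[:, 2:4])
    inter = np.maximum(right_down - left_up, 0.0)
    inter_area = inter[:, 0] * inter[:, 1]
    return inter_area / (area + areas - inter_area)

def greedy_nms(bboxes, iou_threshold):
    """Hard NMS per class on rows of (xmin, ymin, xmax, ymax, score, class)."""
    kept = []
    for cls in np.unique(bboxes[:, 5]):
        cls_bboxes = bboxes[bboxes[:, 5] == cls]
        while len(cls_bboxes) > 0:
            max_ind = np.argmax(cls_bboxes[:, 4])
            best = cls_bboxes[max_ind]
            kept.append(best)
            rest = np.delete(cls_bboxes, max_ind, axis=0)
            # keep only the boxes that do not overlap the best box too much
            cls_bboxes = rest[iou_one_to_many(best[:4], rest[:, :4]) <= iou_threshold]
    return np.array(kept)

bboxes = np.array([
    [ 0.,  0., 10., 10., 0.9, 0.],   # best box of class 0
    [ 1.,  1., 11., 11., 0.8, 0.],   # IoU 81/119 with the box above: suppressed
    [20., 20., 30., 30., 0.7, 0.],   # same class but no overlap: kept
    [ 0.,  0., 10., 10., 0.6, 1.],   # different class: never compared with class 0
])
kept = greedy_nms(bboxes, iou_threshold=0.45)
print(kept)
```

Three boxes survive. The last one occupies exactly the same area as the first, but because suppression runs per class it is kept.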

bboxes_iou

bboxes_iou 函数被用来计算两个检测框之间的 IOU 值。

输入:两个(或一个对多个)检测框的坐标信息(左上角+右下角)。

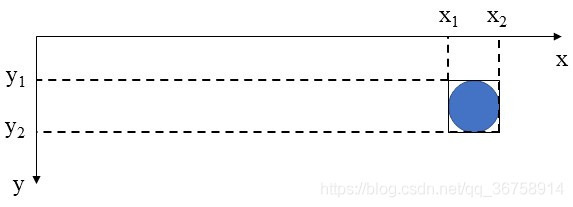

IOU 值其实就是两个框的交集面积比上它们的并集面积,这个值越大,代表这两个框的位置越接近。用下面这个图片表示:

    def bboxes_iou(boxes1, boxes2):
        boxes1 = np.array(boxes1)  # coordinates of the first box (or boxes)
        boxes2 = np.array(boxes2)  # coordinates of the second box (or boxes)

        boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])  # area of the first box
        boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])  # area of the second box

        left_up = np.maximum(boxes1[..., :2], boxes2[..., :2])       # in the figure: left_up=[xmin2, ymin2]
        right_down = np.minimum(boxes1[..., 2:], boxes2[..., 2:])    # in the figure: right_down=[xmax1, ymax1]

        inter_section = np.maximum(right_down - left_up, 0.0)        # width and height of the intersection
        inter_area = inter_section[..., 0] * inter_section[..., 1]   # intersection area
        union_area = boxes1_area + boxes2_area - inter_area          # union area
        # the result is clamped to a tiny positive value, so it is never exactly 0
        ious = np.maximum(1.0 * inter_area / union_area, np.finfo(np.float32).eps)

        return ious
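A worked example makes the broadcasting explicit: one box of shape (1, 4) is compared against three boxes of shape (3, 4) in a single call. The sketch below uses a simplified stand-in named `iou` (an assumption for illustration; it omits the final clamp to eps that bboxes_iou applies).

```python
import numpy as np

def iou(boxes1, boxes2):
    """Simplified IoU for boxes given as (xmin, ymin, xmax, ymax); shapes must broadcast."""
    boxes1 = np.asarray(boxes1, dtype=np.float64)
    boxes2 = np.asarray(boxes2, dtype=np.float64)
    area1 = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
    area2 = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])
    left_up = np.maximum(boxes1[..., :2], boxes2[..., :2])
    right_down = np.minimum(boxes1[..., 2:], boxes2[..., 2:])
    inter = np.maximum(right_down - left_up, 0.0)   # negative extents mean no overlap
    inter_area = inter[..., 0] * inter[..., 1]
    return inter_area / (area1 + area2 - inter_area)

one = [[0, 0, 10, 10]]
many = [[0, 0, 10, 10],     # identical box
        [5, 5, 15, 15],     # 5 x 5 overlap: 25 / (100 + 100 - 25)
        [20, 20, 30, 30]]   # disjoint box
ious = iou(one, many)
print(ious.shape)   # (3,)
print(ious)         # 1, 1/7 and 0
```

Clamping the difference at 0 before multiplying is what keeps disjoint boxes from producing a spurious positive area from two negative extents.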

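draw_bbox

The draw_bbox function draws the detection boxes on an image.

Inputs:

- image: the image on which the boxes are drawn;
- bboxes: the detection boxes, in the format [x_min, y_min, x_max, y_max, probability, cls_id];
- classes: a dictionary that maps every class index to its name, as returned by read_class_names;
- show_label: whether to write the class name on each box.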

    def draw_bbox(image, bboxes, classes=read_class_names(cfg.YOLO.CLASSES), show_label=True):
        num_classes = len(classes)
        image_h, image_w, _ = image.shape

        # assign a color to every class (you can of course choose your own colors)
        hsv_tuples = [(1.0 * x / num_classes, 1., 1.) for x in range(num_classes)]
        colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))
        colors = list(map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)), colors))

        random.seed(0)
        random.shuffle(colors)
        random.seed(None)

        for i, bbox in enumerate(bboxes):
            coor = np.array(bbox[:4], dtype=np.int32)
            fontScale = 0.5
            score = bbox[4]
            class_ind = int(bbox[5])
            # box color
            bbox_color = colors[class_ind]
            # box line thickness
            bbox_thick = int(0.6 * (image_h + image_w) / 600)
            # top-left and bottom-right corners of the box
            c1, c2 = (coor[0], coor[1]), (coor[2], coor[3])
            # draw the box
            cv2.rectangle(image, c1, c2, bbox_color, bbox_thick)

            # optionally write the class name and score on the box
            if show_label:
                bbox_mess = '%s: %.2f' % (classes[class_ind], score)
                # width and height of the text string
                t_size = cv2.getTextSize(bbox_mess, 0, fontScale, thickness=bbox_thick//2)[0]
                # filled rectangle that serves as the label background
                cv2.rectangle(image, c1, (c1[0] + t_size[0], c1[1] - t_size[1] - 3), bbox_color, -1)
                # cv2.putText arguments: image, text, origin, font, font scale, color, thickness
                cv2.putText(image, bbox_mess, (c1[0], c1[1]-2), cv2.FONT_HERSHEY_SIMPLEX,
                            fontScale, (0, 0, 0), bbox_thick//2, lineType=cv2.LINE_AA)

        return image
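The color assignment can be tried on its own with the standard library. The sketch below spreads the hues evenly and shuffles them reproducibly; as a small variation on draw_bbox it uses a local `random.Random(seed)` generator instead of reseeding the global one, and the helper name `class_colors` is an assumption for illustration.

```python
import colorsys
import random

def class_colors(num_classes, seed=0):
    """Hypothetical helper: one RGB color per class, shuffled in a reproducible way."""
    # evenly spaced hues at full saturation and value
    hsv_tuples = [(1.0 * x / num_classes, 1., 1.) for x in range(num_classes)]
    colors = [colorsys.hsv_to_rgb(*hsv) for hsv in hsv_tuples]
    colors = [(int(r * 255), int(g * 255), int(b * 255)) for r, g, b in colors]
    rng = random.Random(seed)   # local generator: the global random state is left untouched
    rng.shuffle(colors)
    return colors

colors = class_colors(3)
print(colors)                      # pure red, green and blue in a shuffled order
print(colors == class_colors(3))   # True: the same seed gives the same order
```

Shuffling matters when there are many classes: without it, classes with neighboring indices would get almost identical hues.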

read_class_names

The read_class_names function reads the class names from the yymnist.names file and stores them in a dictionary keyed by class index.

    def read_class_names(class_file_name):
        names = {}
        with open(class_file_name, 'r') as data:
            for ID, name in enumerate(data):
                names[ID] = name.strip('\n')
        return names

Called with "./data/classes/yymnist.names", it returns:

    {0: '0', 1: '1', 2: '2', 3: '3', 4: '4', 5: '5', 6: '6', 7: '7', 8: '8', 9: '9'}
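The function can be tried without the repository by pointing it at a temporary file. In this sketch the file name `demo.names` and its three class names are made up for illustration.

```python
import os
import tempfile

def read_class_names(class_file_name):
    names = {}
    with open(class_file_name, 'r') as data:
        for ID, name in enumerate(data):   # one class name per line
            names[ID] = name.strip('\n')
    return names

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, 'demo.names')   # hypothetical file
    with open(path, 'w') as f:
        f.write('cat\ndog\nbird\n')
    names = read_class_names(path)

print(names)  # {0: 'cat', 1: 'dog', 2: 'bird'}
```

Since every line becomes an entry, a stray blank line at the end of the file would add an empty class name and increase the class count by one.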

get_anchors

The get_anchors function reads the anchor (prior) boxes from basline_anchors.txt.

    def get_anchors(anchors_path):
        '''loads the anchors from a file'''
        with open(anchors_path) as f:
            anchors = f.readline()
        anchors = np.array(anchors.split(','), dtype=np.float32)
        return anchors.reshape(3, 3, 2)

Called with "./data/anchors/basline_anchors.txt", it returns:

    array([[[ 1.25   ,  1.625  ],
            [ 2.     ,  3.75   ],
            [ 4.125  ,  2.875  ]],

           [[ 1.875  ,  3.8125 ],
            [ 3.875  ,  2.8125 ],
            [ 3.6875 ,  7.4375 ]],

           [[ 3.625  ,  2.8125 ],
            [ 4.875  ,  6.1875 ],
            [11.65625, 10.1875 ]]], dtype=float32)

This array holds the widths and heights of the anchor boxes. Each of the three feature maps has three anchors, so there are nine (width, height) pairs in total.
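The parsing step amounts to splitting one comma-separated line into 18 numbers and reshaping them. In the sketch below the line is reconstructed from the array printed above, so the exact formatting of the real file is an assumption; the values are converted with float() explicitly so that stray spaces around the numbers are harmless.

```python
import numpy as np

# One line with 18 comma-separated values (reconstructed, formatting assumed)
line = ("1.25,1.625, 2.0,3.75, 4.125,2.875, "
        "1.875,3.8125, 3.875,2.8125, 3.6875,7.4375, "
        "3.625,2.8125, 4.875,6.1875, 11.65625,10.1875")

values = [float(v) for v in line.split(',')]
anchors = np.array(values, dtype=np.float32).reshape(3, 3, 2)

print(anchors.shape)   # (3, 3, 2): feature map, anchor, (width, height)
print(anchors[0])      # the three anchors of the first feature map
print(anchors[2, 2])   # width and height of the last anchor
```

Indexing with `anchors[i]` then gives the three anchors of one feature map, and `anchors[i, k]` a single (width, height) pair.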

Complete code

    import cv2
    import random
    import colorsys
    import numpy as np
    from core.config import cfg


    def load_weights(model, weights_file):
        """
        I agree that this code is very ugly, but I don't know any better way of doing it.
        """
        wf = open(weights_file, 'rb')
        major, minor, revision, seen, _ = np.fromfile(wf, dtype=np.int32, count=5)

        j = 0
        for i in range(75):
            conv_layer_name = 'conv2d_%d' %i if i > 0 else 'conv2d'
            bn_layer_name = 'batch_normalization_%d' %j if j > 0 else 'batch_normalization'

            conv_layer = model.get_layer(conv_layer_name)
            filters = conv_layer.filters
            k_size = conv_layer.kernel_size[0]
            in_dim = conv_layer.input_shape[-1]

            if i not in [58, 66, 74]:
                # darknet weights: [beta, gamma, mean, variance]
                bn_weights = np.fromfile(wf, dtype=np.float32, count=4 * filters)
                # tf weights: [gamma, beta, mean, variance]
                bn_weights = bn_weights.reshape((4, filters))[[1, 0, 2, 3]]
                bn_layer = model.get_layer(bn_layer_name)
                j += 1
            else:
                conv_bias = np.fromfile(wf, dtype=np.float32, count=filters)

            # darknet shape (out_dim, in_dim, height, width)
            conv_shape = (filters, in_dim, k_size, k_size)
            # np.prod replaces the original np.product, which was removed in NumPy 2.0
            conv_weights = np.fromfile(wf, dtype=np.float32, count=np.prod(conv_shape))
            # tf shape (height, width, in_dim, out_dim)
            conv_weights = conv_weights.reshape(conv_shape).transpose([2, 3, 1, 0])

            if i not in [58, 66, 74]:
                conv_layer.set_weights([conv_weights])
                bn_layer.set_weights(bn_weights)
            else:
                conv_layer.set_weights([conv_weights, conv_bias])

        assert len(wf.read()) == 0, 'failed to read all data'
        wf.close()


    def read_class_names(class_file_name):
        '''loads class name from a file'''
        names = {}
        with open(class_file_name, 'r') as data:
            for ID, name in enumerate(data):
                names[ID] = name.strip('\n')
        return names


    def get_anchors(anchors_path):
        '''loads the anchors from a file'''
        with open(anchors_path) as f:
            anchors = f.readline()
        anchors = np.array(anchors.split(','), dtype=np.float32)
        return anchors.reshape(3, 3, 2)


    def image_preprocess(image, target_size, gt_boxes=None):
        ih, iw = target_size
        h, w, _ = image.shape

        scale = min(iw/w, ih/h)
        nw, nh = int(scale * w), int(scale * h)
        image_resized = cv2.resize(image, (nw, nh))

        image_paded = np.full(shape=[ih, iw, 3], fill_value=128.0)
        dw, dh = (iw - nw) // 2, (ih-nh) // 2
        image_paded[dh:nh+dh, dw:nw+dw, :] = image_resized
        image_paded = image_paded / 255.

        if gt_boxes is None:
            return image_paded
        else:
            gt_boxes[:, [0, 2]] = gt_boxes[:, [0, 2]] * scale + dw
            gt_boxes[:, [1, 3]] = gt_boxes[:, [1, 3]] * scale + dh
            return image_paded, gt_boxes


    def draw_bbox(image, bboxes, classes=read_class_names(cfg.YOLO.CLASSES), show_label=True):
        """
        bboxes: [x_min, y_min, x_max, y_max, probability, cls_id] format coordinates.
        """
        num_classes = len(classes)
        image_h, image_w, _ = image.shape
        hsv_tuples = [(1.0 * x / num_classes, 1., 1.) for x in range(num_classes)]
        colors = list(map(lambda x: colorsys.hsv_to_rgb(*x), hsv_tuples))
        colors = list(map(lambda x: (int(x[0] * 255), int(x[1] * 255), int(x[2] * 255)), colors))

        random.seed(0)
        random.shuffle(colors)
        random.seed(None)

        for i, bbox in enumerate(bboxes):
            coor = np.array(bbox[:4], dtype=np.int32)
            fontScale = 0.5
            score = bbox[4]
            class_ind = int(bbox[5])
            bbox_color = colors[class_ind]
            bbox_thick = int(0.6 * (image_h + image_w) / 600)
            c1, c2 = (coor[0], coor[1]), (coor[2], coor[3])
            cv2.rectangle(image, c1, c2, bbox_color, bbox_thick)

            if show_label:
                bbox_mess = '%s: %.2f' % (classes[class_ind], score)
                t_size = cv2.getTextSize(bbox_mess, 0, fontScale, thickness=bbox_thick//2)[0]
                cv2.rectangle(image, c1, (c1[0] + t_size[0], c1[1] - t_size[1] - 3), bbox_color, -1)  # filled
                cv2.putText(image, bbox_mess, (c1[0], c1[1]-2), cv2.FONT_HERSHEY_SIMPLEX,
                            fontScale, (0, 0, 0), bbox_thick//2, lineType=cv2.LINE_AA)

        return image


    def bboxes_iou(boxes1, boxes2):
        boxes1 = np.array(boxes1)
        boxes2 = np.array(boxes2)

        boxes1_area = (boxes1[..., 2] - boxes1[..., 0]) * (boxes1[..., 3] - boxes1[..., 1])
        boxes2_area = (boxes2[..., 2] - boxes2[..., 0]) * (boxes2[..., 3] - boxes2[..., 1])

        left_up = np.maximum(boxes1[..., :2], boxes2[..., :2])
        right_down = np.minimum(boxes1[..., 2:], boxes2[..., 2:])

        inter_section = np.maximum(right_down - left_up, 0.0)
        inter_area = inter_section[..., 0] * inter_section[..., 1]
        union_area = boxes1_area + boxes2_area - inter_area
        ious = np.maximum(1.0 * inter_area / union_area, np.finfo(np.float32).eps)

        return ious


    def nms(bboxes, iou_threshold, sigma=0.3, method='nms'):
        """
        :param bboxes: (xmin, ymin, xmax, ymax, score, class)

        Note: soft-nms, https://arxiv.org/pdf/1704.04503.pdf
              https://github.com/bharatsingh430/soft-nms
        """
        classes_in_img = list(set(bboxes[:, 5]))
        best_bboxes = []

        for cls in classes_in_img:
            cls_mask = (bboxes[:, 5] == cls)
            cls_bboxes = bboxes[cls_mask]

            while len(cls_bboxes) > 0:
                max_ind = np.argmax(cls_bboxes[:, 4])
                best_bbox = cls_bboxes[max_ind]
                best_bboxes.append(best_bbox)
                cls_bboxes = np.concatenate([cls_bboxes[: max_ind], cls_bboxes[max_ind + 1:]])
                iou = bboxes_iou(best_bbox[np.newaxis, :4], cls_bboxes[:, :4])
                weight = np.ones((len(iou),), dtype=np.float32)

                assert method in ['nms', 'soft-nms']

                if method == 'nms':
                    iou_mask = iou > iou_threshold
                    weight[iou_mask] = 0.0

                if method == 'soft-nms':
                    weight = np.exp(-(1.0 * iou ** 2 / sigma))

                cls_bboxes[:, 4] = cls_bboxes[:, 4] * weight
                score_mask = cls_bboxes[:, 4] > 0.
                cls_bboxes = cls_bboxes[score_mask]

        return best_bboxes


    def postprocess_boxes(pred_bbox, org_img_shape, input_size, score_threshold):
        valid_scale=[0, np.inf]
        pred_bbox = np.array(pred_bbox)

        pred_xywh = pred_bbox[:, 0:4]
        pred_conf = pred_bbox[:, 4]
        pred_prob = pred_bbox[:, 5:]

        # (1) (x, y, w, h) --> (xmin, ymin, xmax, ymax)
        pred_coor = np.concatenate([pred_xywh[:, :2] - pred_xywh[:, 2:] * 0.5,
                                    pred_xywh[:, :2] + pred_xywh[:, 2:] * 0.5], axis=-1)

        # (2) (xmin, ymin, xmax, ymax) -> (xmin_org, ymin_org, xmax_org, ymax_org)
        org_h, org_w = org_img_shape
        resize_ratio = min(input_size / org_w, input_size / org_h)

        dw = (input_size - resize_ratio * org_w) / 2
        dh = (input_size - resize_ratio * org_h) / 2

        pred_coor[:, 0::2] = 1.0 * (pred_coor[:, 0::2] - dw) / resize_ratio
        pred_coor[:, 1::2] = 1.0 * (pred_coor[:, 1::2] - dh) / resize_ratio

        # (3) clip some boxes those are out of range
        pred_coor = np.concatenate([np.maximum(pred_coor[:, :2], [0, 0]),
                                    np.minimum(pred_coor[:, 2:], [org_w - 1, org_h - 1])], axis=-1)
        invalid_mask = np.logical_or((pred_coor[:, 0] > pred_coor[:, 2]), (pred_coor[:, 1] > pred_coor[:, 3]))
        pred_coor[invalid_mask] = 0

        # (4) discard some invalid boxes
        bboxes_scale = np.sqrt(np.multiply.reduce(pred_coor[:, 2:4] - pred_coor[:, 0:2], axis=-1))
        scale_mask = np.logical_and((valid_scale[0] < bboxes_scale), (bboxes_scale < valid_scale[1]))

        # (5) discard some boxes with low scores
        classes = np.argmax(pred_prob, axis=-1)
        scores = pred_conf * pred_prob[np.arange(len(pred_coor)), classes]
        score_mask = scores > score_threshold
        mask = np.logical_and(scale_mask, score_mask)
        coors, scores, classes = pred_coor[mask], scores[mask], classes[mask]

        return np.concatenate([coors, scores[:, np.newaxis], classes[:, np.newaxis]], axis=-1)