1. Uninstall the Java that ships with CentOS and install Java 8

List the installed Java packages:

rpm -qa | egrep 'java|jdk'

Uninstall them:

rpm -qa | egrep 'java|jdk' | xargs -r rpm -e --nodeps

Log in at https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html, download the Java 8 RPM package (jdk-8uXXX-linux-x64.rpm), and install it:

rpm -ivh jdk-8u261-linux-x64.rpm
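After installing, it is worth confirming that Java 8 is the JDK actually on the PATH before pointing JAVA_HOME at it. A minimal sketch, assuming `java` resolves to the newly installed JDK; the helper names `parse_java_version` and `java_major_version` are hypothetical, not part of any tool:

```shell
# Sketch: confirm the active JDK is Java 8.
# `java -version` writes to stderr; its first line looks like
#   java version "1.8.0_261"   (Oracle)  or  openjdk version "1.8.0_262"
parse_java_version() {
    # Read `java -version` output on stdin, print the quoted version string.
    head -n 1 | sed -n 's/.*version "\([^"]*\)".*/\1/p'
}

java_major_version() {
    # Legacy "1.x" versions map to x (1.8.0_261 -> 8);
    # newer ones start with the major number (11.0.2 -> 11).
    case "$1" in
        1.*) echo "$1" | cut -d. -f2 ;;
        *)   echo "$1" | cut -d. -f1 ;;
    esac
}

# Usage:
#   v=$(java -version 2>&1 | parse_java_version)
#   [ "$(java_major_version "$v")" = 8 ] && echo "Java 8 is active: $v"
```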

Add the JAVA_HOME environment variable. If your Java version differs from mine, change the path to match your local Java installation:

echo -e '\nexport JAVA_HOME=/usr/java/jdk1.8.0_261-amd64/' >> /etc/profile

source /etc/profile

2. Disable SELinux

Run the following commands, then reboot the machine:

sed -i /SELINUX=enforcing/d /etc/selinux/config

echo "SELINUX=disabled" >> /etc/selinux/config

setenforce 0

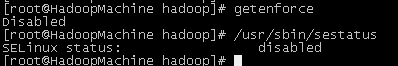

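To verify, run getenforce or /usr/sbin/sestatus. After the reboot the output should report disabled (right after setenforce 0, and before rebooting, getenforce reports Permissive).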
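Because the config change only takes effect after the reboot, checking the file itself can catch problems early; for example, the sed command above only deletes a SELINUX=enforcing line, so a leftover SELINUX=permissive line would survive next to the new one. A minimal sketch, using a hypothetical helper named `selinux_config_disabled`:

```shell
# Sketch: check that /etc/selinux/config will disable SELinux after reboot.
# Passes only if there is exactly one active SELINUX= line and it reads
# SELINUX=disabled. SELINUXTYPE= lines do not match '^SELINUX='.
selinux_config_disabled() {
    conf="${1:-/etc/selinux/config}"
    lines=$(grep '^SELINUX=' "$conf" 2>/dev/null)
    # Multiple matches are joined by newlines, so they fail this comparison.
    [ "$lines" = "SELINUX=disabled" ]
}

# Usage:
#   selinux_config_disabled && echo "config OK" || echo "check /etc/selinux/config"
```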

3. Stop the firewall and disable it at boot

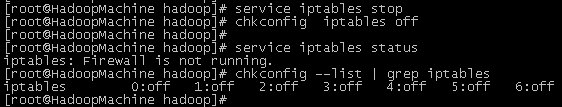

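service iptables stop (stops the running firewall)

chkconfig iptables off (keeps the firewall service from starting at boot)

To verify:

service iptables status (shows whether the firewall is running)

chkconfig --list | grep iptables (shows whether the firewall service is disabled at boot)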
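The commands above target CentOS 6, where the firewall is the iptables SysV service. On CentOS 7 and later the default firewall is firewalld, managed by systemd. A minimal sketch, assuming only those two setups, that picks the matching commands; `disable_firewall` is a hypothetical helper, and `DRY_RUN=1` makes it print instead of execute:

```shell
# Sketch: stop the firewall and keep it from starting at boot.
# Assumption: "sysv" = CentOS 6 iptables service, "systemd" = CentOS 7+ firewalld.
# Pass the mode explicitly, or let it be chosen by whether systemctl exists.
disable_firewall() {
    mode="$1"
    if [ -z "$mode" ]; then
        if command -v systemctl >/dev/null 2>&1; then mode=systemd; else mode=sysv; fi
    fi
    case "$mode" in
        systemd) set -- "systemctl stop firewalld" "systemctl disable firewalld" ;;
        sysv)    set -- "service iptables stop" "chkconfig iptables off" ;;
        *)       echo "unknown mode: $mode" >&2; return 2 ;;
    esac
    for cmd in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$cmd"
        else
            $cmd || return 1   # unquoted on purpose: split into command + args
        fi
    done
}

# Usage (as root):
#   DRY_RUN=1 disable_firewall   # preview
#   disable_firewall             # apply
```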

4. Set up passwordless SSH

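ssh-keygen -t rsa -P '' -f /root/.ssh/id_rsa

cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

chmod 600 /root/.ssh/authorized_keys

Verify with ssh localhost: if it logs in without asking for a password, the setup worked. (OpenSSH 7.0 and later reject DSA keys by default, so use an RSA key.)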


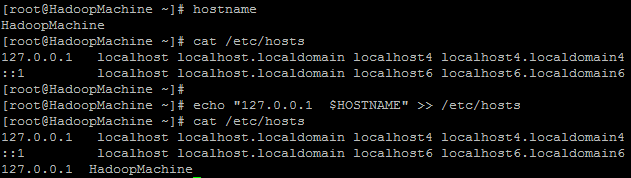
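If ssh localhost still prompts for a password, file permissions are a common cause: with StrictModes (the sshd default), sshd ignores authorized_keys when it or ~/.ssh is writable by group or others. A minimal sketch that checks for the commonly recommended 700/600 modes, assuming GNU `stat` as shipped with CentOS; `ssh_perms_ok` is a hypothetical helper:

```shell
# Sketch: check ~/.ssh is 700 and authorized_keys is 600.
# These are the usual recommended values; sshd itself only requires that
# they are not group- or world-writable. Uses GNU stat (-c %a = octal mode).
ssh_perms_ok() {
    dir="${1:-$HOME/.ssh}"
    [ "$(stat -c %a "$dir" 2>/dev/null)" = 700 ] &&
    [ "$(stat -c %a "$dir/authorized_keys" 2>/dev/null)" = 600 ]
}

# Usage:
#   ssh_perms_ok || { chmod 700 ~/.ssh && chmod 600 ~/.ssh/authorized_keys; }
```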

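5. Configure the hostname

Run hostname to see the machine's hostname. If it prints localhost or localhost.localdomain, nothing needs to change.

Otherwise, bind the hostname to 127.0.0.1 by adding it to /etc/hosts.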
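The /etc/hosts step can be sketched as a small idempotent helper, assuming the standard "IP name [aliases...]" hosts-file layout. `ensure_hosts_entry` is a hypothetical name; the hosts path is a parameter so it can be tried on a copy before touching /etc/hosts:

```shell
# Sketch: bind a hostname to 127.0.0.1 in a hosts file, at most once.
# Skips localhost/localhost.localdomain, and does nothing if a 127.0.0.1
# line already lists the name as a whole field.
ensure_hosts_entry() {
    name="${1:-$(hostname)}"
    hosts="${2:-/etc/hosts}"
    case "$name" in
        localhost|localhost.localdomain) return 0 ;;
    esac
    if awk -v n="$name" '$1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts" 2>/dev/null; then
        return 0
    fi
    echo "127.0.0.1   $name" >> "$hosts"
}

# Usage (as root):
#   ensure_hosts_entry            # current hostname, /etc/hosts
```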

6. Download the hadoop-2.6.0-cdh5.7.0 tarball and extract it to /opt

wget http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.7.0.tar.gz

tar -xf hadoop-2.6.0-cdh5.7.0.tar.gz -C /opt

7. Configure Hadoop

Add the Hadoop environment variables:

echo 'export HADOOP_HOME=/opt/hadoop-2.6.0-cdh5.7.0' >> /etc/profile

echo 'export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH' >> /etc/profile

source /etc/profile
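Each run of an `echo ... >>` command adds another copy of its line, so repeating these steps stacks duplicate exports in /etc/profile (and in hadoop-env.sh). A minimal idempotent alternative, using a hypothetical helper named `append_once` that compares whole lines literally:

```shell
# Sketch: append a line to a file only if that exact line is not already there.
append_once() {
    line="$1"
    file="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null && return 0
    # Start a new line if the file does not end with one
    # ($(...) strips a trailing newline, so a non-empty result means none).
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
        printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
}

# Usage (as root):
#   append_once 'export HADOOP_HOME=/opt/hadoop-2.6.0-cdh5.7.0' /etc/profile
```

The newline check plays the same role as the leading `\n` in the `echo -e` commands, but only adds a newline when one is actually missing.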

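Also set JAVA_HOME in hadoop-env.sh, because the start scripts launch the daemons over SSH, where /etc/profile may not be read:

echo -e '\nexport JAVA_HOME=/usr/java/jdk1.8.0_261-amd64/' >> /opt/hadoop-2.6.0-cdh5.7.0/etc/hadoop/hadoop-env.sh

Configure /opt/hadoop-2.6.0-cdh5.7.0/etc/hadoop/core-site.xml as follows: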

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>

Configure /opt/hadoop-2.6.0-cdh5.7.0/etc/hadoop/hdfs-site.xml as follows:

<configuration><property><name>dfs.replication</name><value>1</value></property>

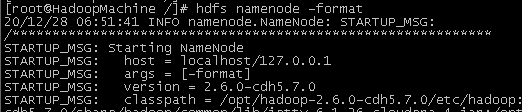

</configuration>8、格式化文件系统
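Before formatting, you can read the values back out of the two XML files to confirm the edits landed. A minimal sketch, assuming each `<property>` lists `<name>` directly followed by `<value>`, as in the snippets above; `hadoop_conf_value` is a hypothetical helper and does plain text matching, not real XML parsing:

```shell
# Sketch: print the <value> of a named property from a Hadoop *-site.xml file.
# Text match only: expects <name>...</name> immediately followed by <value>.
hadoop_conf_value() {
    file="$1"
    name="$2"
    # Join all lines so a name/value pair spread over several lines still matches.
    tr -d '\r\n' < "$file" |
        sed -n "s|.*<name>$name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Usage:
#   hadoop_conf_value /opt/hadoop-2.6.0-cdh5.7.0/etc/hadoop/core-site.xml fs.defaultFS
#   hadoop_conf_value /opt/hadoop-2.6.0-cdh5.7.0/etc/hadoop/hdfs-site.xml dfs.replication
```

An empty result means the property was not found, which usually points to a typo in the property name or a file that was saved in the wrong place.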

hdfs namenode -format

9. Start the NameNode and DataNode daemons

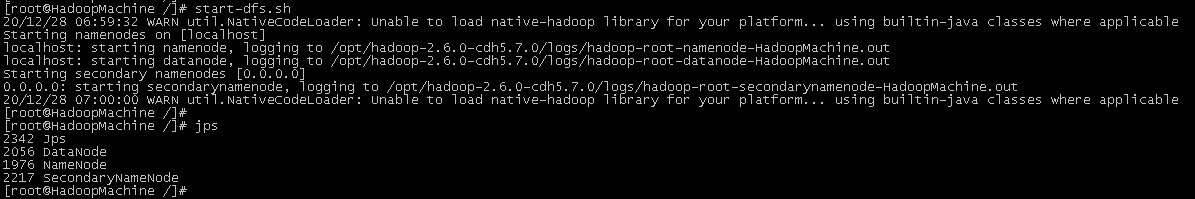

start-dfs.sh

jps

Check: if the jps output lists DataNode, NameNode, and SecondaryNameNode, the startup succeeded.
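The jps check can be scripted. jps prints one `<pid> <MainClass>` pair per line, so a minimal sketch compares the second field exactly against the three daemon names; `hdfs_daemons_running` is a hypothetical helper that reads the jps output on stdin:

```shell
# Sketch: succeed only if NameNode, DataNode and SecondaryNameNode all appear
# in jps output read from stdin; report the first missing daemon on stderr.
hdfs_daemons_running() {
    names=$(awk '{print $2}')
    for d in NameNode DataNode SecondaryNameNode; do
        printf '%s\n' "$names" | grep -qx "$d" || { echo "missing: $d" >&2; return 1; }
    done
}

# Usage:
#   jps | hdfs_daemons_running && echo "HDFS daemons are up"
```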

Open http://IP:50070 in a browser (replace IP with the server's address) to view the Hadoop NameNode web UI.
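To check the web UI from a shell instead of a browser, a minimal sketch, assuming curl is installed and treating any 2xx or 3xx status as "up"; `namenode_ui_up` is a hypothetical helper. When checking from another machine, pass the server's IP; the firewall step earlier is what allows remote access to the port:

```shell
# Sketch: succeed if an HTTP server answers on host:port (default 127.0.0.1:50070).
# curl prints 000 as the status code when it cannot connect.
namenode_ui_up() {
    host="${1:-127.0.0.1}"
    port="${2:-50070}"
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$host:$port/")
    case "$code" in
        2??|3??) return 0 ;;
        *)       return 1 ;;
    esac
}

# Usage:
#   namenode_ui_up && echo "NameNode web UI is reachable"
```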