Contents

- Preface

- 0. Imports and basic configuration

- 1. Script entry point

- 2. parse_opt

- 3. main

- 4. run

- 5. Usage

- Summary

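Preface

Source code: YOLOv5 source.

Navigation: [YOLOV5-5.x Source Code Walkthrough] Overall project file navigation.

The fully annotated project files have been uploaded to GitHub: yolov5-5.x-annotations.

This part covers model conversion. It converts the model to formats such as TorchScript, ONNX, and CoreML, so that downstream applications can load it on a variety of devices.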

0. Imports and basic configuration

    import argparse  # command-line argument parsing
    import sys  # functions related to the Python interpreter and its environment
    import time  # low-level time utilities
    from pathlib import Path  # turns str paths into Path objects that are easier to manipulate

    import torch  # PyTorch deep learning framework
    import torch.nn as nn  # class-based layers; many have a torch.nn.functional counterpart
    from torch.utils.mobile_optimizer import optimize_for_mobile  # mobile optimization for TorchScript models

    FILE = Path(__file__).absolute()  # e.g. WindowsPath('F:/yolo_v5/yolov5-U/export.py')
    # Add 'F:/yolo_v5/yolov5-U' to the module search path (sys.path); this only lasts while the script runs
    sys.path.append(FILE.parents[0].as_posix())  # add yolov5/ to path

    from models.common import Conv
    from models.yolo import Detect
    from models.experimental import attempt_load
    from models.activations import Hardswish, SiLU
    from utils.general import colorstr, check_img_size, check_requirements, file_size, set_logging
    from utils.torch_utils import select_device
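The path setup above can be tried in isolation. The following is a minimal sketch, not the project's code: the helper name `add_script_dir_to_path` is hypothetical, and the file name passed to it does not need to exist.

```python
import sys
from pathlib import Path


def add_script_dir_to_path(script_file: str) -> str:
    """Append the directory that contains script_file to sys.path and return it."""
    # absolute() prefixes the current working directory; parents[0] is the containing directory
    script_dir = Path(script_file).absolute().parents[0].as_posix()
    if script_dir not in sys.path:  # avoid duplicate entries on repeated calls
        sys.path.append(script_dir)
    return script_dir


# Hypothetical file name, used only for illustration
root = add_script_dir_to_path("export.py")
print(root in sys.path)
```

Because `sys.path` is only modified inside the running interpreter, the change disappears when the script exits, which matches the comment in the original code.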

1. Script entry point

The entry point when the script is executed directly:

    if __name__ == "__main__":
        opt = parse_opt()
        main(opt)

2. parse_opt

Sets up the opt arguments.

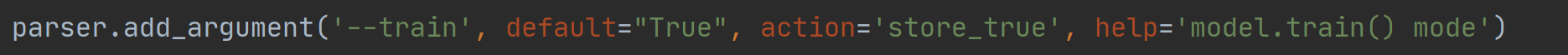

    def parse_opt():
        """
        weights: path of the .pt weights file to convert, default='../weights/best.pt'
        img-size: input image size (height, width), default=[640, 640]
        batch-size: batch size, default=1
        device: device the model runs on, cuda device, i.e. 0 or 0,1,2,3 or cpu, default=cpu
        include: formats to convert the .pt file to, one name or a list, default=['torchscript', 'onnx', 'coreml']
        half: export in FP16 half precision, default=False
        inplace: set YOLOv5 Detect() inplace=True, default=False
        train: enable model.train() mode, default=True; must be True for CoreML conversion
        optimize: TorchScript option, optimize for mobile, default=False
        dynamic: ONNX option, export with dynamic_axes (variable batch and image size), default=False
        simplify: ONNX option, simplify the ONNX model, default=False
        opset-version: ONNX option, opset version, default=10
        """
        parser = argparse.ArgumentParser()
        parser.add_argument('--weights', type=str, default='../weights/best.pt', help='weights path')
        parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image (height, width)')
        parser.add_argument('--batch-size', type=int, default=1, help='batch size')
        parser.add_argument('--device', default='cpu', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
        parser.add_argument('--include', nargs='+', default=['torchscript', 'onnx', 'coreml'], help='include formats')
        parser.add_argument('--half', action='store_true', help='FP16 half-precision export')
        parser.add_argument('--inplace', action='store_true', help='set YOLOv5 Detect() inplace=True')
        # default=True keeps train mode always on (a store_true flag can only switch it on), as CoreML export requires
        parser.add_argument('--train', default=True, action='store_true', help='model.train() mode')
        parser.add_argument('--optimize', action='store_true', help='TorchScript: optimize for mobile')
        parser.add_argument('--dynamic', action='store_true', help='ONNX: dynamic axes')
        parser.add_argument('--simplify', action='store_true', help='ONNX: simplify model')
        parser.add_argument('--opset-version', type=int, default=10, help='ONNX: opset version')
        opt = parser.parse_args()
        return opt
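Two argparse behaviours in this function are easy to miss: `nargs='+'` always yields a list, even for a single value, and a `store_true` flag whose default is already truthy (as `--train` is here) can never be switched off from the command line. The sketch below reproduces only four of the options to show this; `build_parser` is a hypothetical name, not part of the script.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Reduced copy of the option definitions, for illustration only."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640])
    parser.add_argument('--include', nargs='+', default=['torchscript', 'onnx', 'coreml'])
    parser.add_argument('--half', action='store_true')  # False unless the flag is given
    parser.add_argument('--train', default=True, action='store_true')  # truthy with or without the flag
    return parser


# No arguments: every option takes its default
defaults = build_parser().parse_args([])
# A single --img-size value still arrives as a one-element list
custom = build_parser().parse_args(['--img-size', '320', '--include', 'onnx', '--half'])

print(defaults)
print(custom)
```

Note that argparse turns `--img-size` into the attribute `img_size`, which is why the keyword arguments of `run` use underscores.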

3. main

    def main(opt):
        # Initialize logging
        set_logging()
        # Print all options in color
        print(colorstr('export: ') + ', '.join(f'{k}={v}' for k, v in vars(opt).items()))
        # Main body of the script
        run(**vars(opt))
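The `vars(opt)` idiom does two jobs in `main`: it produces the `key=value` summary line, and it unpacks the parsed options as keyword arguments. A minimal sketch follows, where `describe` is a hypothetical helper and the `run` defined here is only a stand-in, not the export function:

```python
from argparse import Namespace


def describe(opt: Namespace) -> str:
    """Format every option as key=value, the same way main() prints them."""
    return ', '.join(f'{k}={v}' for k, v in vars(opt).items())


def run(weights='best.pt', batch_size=1):
    """Stand-in for the real run(); it only echoes its arguments."""
    return weights, batch_size


opt = Namespace(weights='yolov5s.pt', batch_size=4)
summary = describe(opt)    # 'weights=yolov5s.pt, batch_size=4'
result = run(**vars(opt))  # same as run(weights='yolov5s.pt', batch_size=4)
print(summary)
```

This only works when every attribute of `opt` matches a parameter name of `run`; an extra option would raise a `TypeError`.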

4. run

The main body of the script. It converts a .pt weights file into any of three formats: ['torchscript', 'onnx', 'coreml'].

    def run(weights='../weights/yolov5s.pt', img_size=(640, 640), batch_size=1, device='cpu',
            include=('torchscript', 'onnx', 'coreml'), half=False, inplace=False, train=False,
            optimize=False, dynamic=False, simplify=False, opset_version=12,
            ):
        """
        The defaults below are those of this signature; parse_opt overrides some of them.
        weights: path of the .pt weights file to convert, default='../weights/yolov5s.pt'
        img_size: input image size (height, width), default=(640, 640)
        batch_size: batch size, default=1
        device: device the model runs on, cuda device, i.e. 0 or 0,1,2,3 or cpu, default=cpu
        include: formats to convert the .pt file to, one name or a list, default=('torchscript', 'onnx', 'coreml')
        half: export in FP16 half precision, default=False
        inplace: set YOLOv5 Detect() inplace=True, default=False
        train: enable model.train() mode, default=False; must be True for CoreML conversion
        optimize: TorchScript option, optimize for mobile, default=False
        dynamic: ONNX option, export with dynamic_axes (variable batch and image size), default=False
        simplify: ONNX option, simplify the ONNX model, default=False
        opset_version: ONNX option, opset version, default=12
        """
        t = time.time()  # start time
        include = [x.lower() for x in include]  # formats the .pt file is converted to
        img_size *= 2 if len(img_size) == 1 else 1  # expand

        # Load PyTorch model
        device = select_device(device)  # select device
        assert not (device.type == 'cpu' and half), '--half only compatible with GPU export, i.e. use --device 0'
        model = attempt_load(weights, map_location=device)  # load FP32 model
        labels = model.names  # class names of the dataset

        # Input
        gs = int(max(model.stride))  # grid size (max stride)
        img_size = [check_img_size(x, gs) for x in img_size]  # verify img_size are gs-multiples
        img = torch.zeros(batch_size, 3, *img_size).to(device)  # dummy image used as model input

        # Update model
        # Switch to FP16 half precision if requested
        if half:
            img, model = img.half(), model.half()  # to FP16
        # Enable train mode if requested
        model.train() if train else model.eval()  # training mode = no Detect() layer grid construction
        # Adjust the model configuration
        for k, m in model.named_modules():
            # pytorch 1.6.0 compatibility
            m._non_persistent_buffers_set = set()
            # assign export-friendly activations (some export formats do not support the built-in nn.Hardswish / nn.SiLU)
            if isinstance(m, Conv):
                if isinstance(m.act, nn.Hardswish):
                    m.act = Hardswish()
                elif isinstance(m.act, nn.SiLU):
                    m.act = SiLU()
            # Detect settings: the inplace and onnx_dynamic attributes
            elif isinstance(m, Detect):
                m.inplace = inplace
                m.onnx_dynamic = dynamic  # set Detect's onnx_dynamic to dynamic
                # m.forward = m.forward_export  # assign forward (optional)

        for _ in range(2):
            y = model(img)  # dry runs: two forward passes
        print(f"\n{colorstr('PyTorch:')} starting from {weights} ({file_size(weights):.1f} MB)")

        # ================================ Convert the model ================================
        # TorchScript export -----------------------------------------------------------------
        if 'torchscript' in include or 'coreml' in include:
            prefix = colorstr('TorchScript:')
            try:
                print(f'\n{prefix} starting export with torch {torch.__version__}...')
                f = weights.replace('.pt', '.torchscript.pt')  # export torchscript filename
                ts = torch.jit.trace(model, img, strict=False)  # convert
                # optimize_for_mobile: mobile optimization
                (optimize_for_mobile(ts) if optimize else ts).save(f)  # save
                print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            except Exception as e:
                print(f'{prefix} export failure: {e}')

        # ONNX export ------------------------------------------------------------------------
        if 'onnx' in include:
            prefix = colorstr('ONNX:')
            try:
                import onnx

                print(f'{prefix} starting export with onnx {onnx.__version__}...')  # log
                f = weights.replace('.pt', '.onnx')  # export filename
                # convert
                torch.onnx.export(model, img, f, verbose=False, opset_version=opset_version,
                                  training=torch.onnx.TrainingMode.TRAINING if train else torch.onnx.TrainingMode.EVAL,
                                  do_constant_folding=not train,  # whether to apply constant folding
                                  input_names=['images'],  # input name
                                  output_names=['output'],  # output name
                                  # dynamic axes; drop dynamic_axes to export with a fixed batch size and image size
                                  dynamic_axes={'images': {0: 'batch', 2: 'height', 3: 'width'},  # shape(1,3,640,640)
                                                'output': {0: 'batch', 1: 'anchors'}  # shape(1,25200,85)
                                                } if dynamic else None)

                # Checks
                model_onnx = onnx.load(f)  # load onnx model
                onnx.checker.check_model(model_onnx)  # check onnx model
                # print(onnx.helper.printable_graph(model_onnx.graph))  # print

                # Simplify
                if simplify:
                    try:
                        check_requirements(['onnx-simplifier'])
                        import onnxsim

                        print(f'{prefix} simplifying with onnx-simplifier {onnxsim.__version__}...')
                        # simplify
                        model_onnx, check = onnxsim.simplify(
                            model_onnx,
                            dynamic_input_shape=dynamic,
                            input_shapes={'images': list(img.shape)} if dynamic else None)
                        assert check, 'assert check failed'
                        onnx.save(model_onnx, f)  # save
                    except Exception as e:
                        print(f'{prefix} simplifier failure: {e}')
                print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            except Exception as e:
                print(f'{prefix} export failure: {e}')

        # CoreML export ----------------------------------------------------------------------
        # Note: CoreML conversion requires model.train(), i.e. the opt argument train must be True
        if 'coreml' in include:
            prefix = colorstr('CoreML:')
            try:
                import coremltools as ct

                print(f'{prefix} starting export with coremltools {ct.__version__}...')
                assert train, 'CoreML exports should be placed in model.train() mode with `python export.py --train`'
                model = ct.convert(ts, inputs=[ct.ImageType('image', shape=img.shape, scale=1 / 255.0, bias=[0, 0, 0])])  # convert
                f = weights.replace('.pt', '.mlmodel')  # export coreml filename
                model.save(f)  # save
                print(f'{prefix} export success, saved as {f} ({file_size(f):.1f} MB)')
            except Exception as e:
                print(f'{prefix} export failure: {e}')

        # Finish
        print(f'\nExport complete ({time.time() - t:.2f}s). Visualize with https://github.com/lutzroeder/netron.')
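The image-size handling at the top of `run` is compact enough to misread. `img_size *= 2 if len(img_size) == 1 else 1` parses as `img_size *= (2 if ... else 1)`, so a single value such as `[640]` becomes `[640, 640]` while a two-element size is left alone. Each side is then passed through `check_img_size` together with the model's maximum stride. The sketch below assumes that `check_img_size` rounds a size up to the nearest multiple of the stride; `round_up_to_multiple` and `expand_img_size` are hypothetical stand-ins, and the stride of 32 is an example value, not read from a model.

```python
import math


def expand_img_size(img_size):
    """Repeat a single value so the result is always [height, width]."""
    img_size = list(img_size)  # copy, so the caller's list is not modified
    img_size *= 2 if len(img_size) == 1 else 1  # list * 2 duplicates, list * 1 is a no-op
    return img_size


def round_up_to_multiple(x, divisor):
    """Assumed behaviour of check_img_size: smallest multiple of divisor that is >= x."""
    return math.ceil(x / divisor) * divisor


stride = 32  # example value for int(max(model.stride))
sizes = [round_up_to_multiple(x, stride) for x in expand_img_size([630])]
print(sizes)
```

With these assumptions a request for 630 pixels ends up as a 640 x 640 dummy input, which is the shape later traced by TorchScript and ONNX.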

5. Usage

Each of the three formats needs its own package installed:

- torchscript: no extra package needed, PyTorch itself is enough

- onnx: pip install onnx

- coreml: pip install coremltools
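Before running the export, it can be convenient to check which of these packages are present. The following is a small sketch using only the standard library; the `REQUIRED` mapping and the `missing_packages` helper are hypothetical and simply restate the list above.

```python
from importlib.util import find_spec

# Importable module needed for each export format (restates the list above)
REQUIRED = {'torchscript': 'torch', 'onnx': 'onnx', 'coreml': 'coremltools'}


def missing_packages(include):
    """Return the modules that the requested formats need but that cannot be imported."""
    return [REQUIRED[fmt] for fmt in include
            if fmt in REQUIRED and find_spec(REQUIRED[fmt]) is None]


print(missing_packages(['torchscript', 'onnx', 'coreml']))
```

`find_spec` only looks the module up and does not import it, so the check stays fast even for large packages such as torch.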

Then add the name of each format you want to the list of the include option, for example:
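To see which files a given include list would produce, the naming logic of `run` can be replayed without loading a model. This is a sketch under the assumption that the file names are derived exactly as in the code above, by `str.replace` on the weights path; `planned_outputs` is a hypothetical helper.

```python
def planned_outputs(weights, include):
    """Map each export format that would run to the file name it would write."""
    include = [x.lower() for x in include]  # run() lower-cases the names as well
    outputs = {}
    # TorchScript is also traced when only CoreML is requested, because CoreML converts the traced model
    if 'torchscript' in include or 'coreml' in include:
        outputs['torchscript'] = weights.replace('.pt', '.torchscript.pt')
    if 'onnx' in include:
        outputs['onnx'] = weights.replace('.pt', '.onnx')
    if 'coreml' in include:
        outputs['coreml'] = weights.replace('.pt', '.mlmodel')
    return outputs


print(planned_outputs('weights/best.pt', ['ONNX']))
print(planned_outputs('weights/best.pt', ['coreml']))
```

Since `str.replace` substitutes every occurrence of `.pt`, a weights path that contains `.pt` in a directory name would be altered there too.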

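Conversion result

Before conversion:

After conversion:

An error is reported (the CoreML conversion fails):

The opt argument train must be set to True (CoreML conversion only works in model.train() mode):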

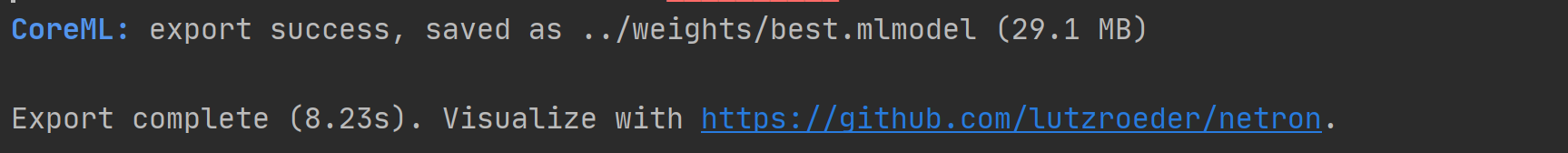

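Run the code again and the conversion succeeds:

Summary

Nothing difficult here: every step is implemented by calling existing packages.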

–2021.08.24