2019 Interspeech

- 1. Improved End-to-End Speech Emotion Recognition Using Self Attention Mechanism and Multitask Learning
  - Experiments
- 2. Self-attention for Speech Emotion Recognition
  - Experiments
- 3. Deep Learning of Segment-Level Feature Representation with Multiple Instance Learning for Utterance-Level Speech Emotion Recognition
  - Abstract
  - 1. Introduction
  - Experiments

1. Improved End-to-End Speech Emotion Recognition Using Self Attention Mechanism and Multitask Learning

- The University of Tokyo

- End-to-end multitask learning with self-attention; the auxiliary task is gender classification.

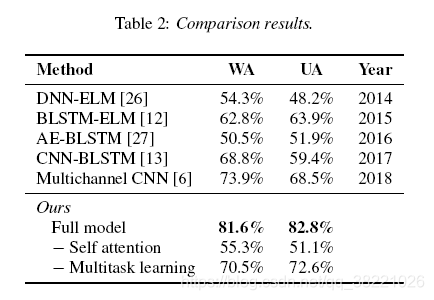

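First, features are extracted from the speech spectrogram instead of being hand-crafted. A CNN-BLSTM end-to-end network follows, and a self-attention mechanism then focuses on emotionally salient periods. Finally, because the emotion and gender classification tasks have interrelated characteristics, gender classification is added as an auxiliary task that shares useful information with the main task, emotion classification.

- The abstract motivates SER through human-computer interaction applications ("SER has attracted great attention"), which makes the opening more vivid. The introduction then covers features, the advantages of spectrograms, traditional machine learning approaches (HMM, GMM, SVM, etc.), and deep learning approaches (CNN, RNN).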

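- Multi-head self-attention

- Spectrogram extraction: utterance length is normalized to 7.5 s; shorter utterances are zero-padded and longer ones are cut. Hanning window of length 800; sampling rate 16000 Hz.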
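The pipeline above (CNN-BLSTM features, attention over time, and emotion plus gender heads trained jointly) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' model: random arrays stand in for the CNN-BLSTM outputs and the learned weights, a single scoring vector `w` replaces the paper's multi-head self-attention, and the gender-loss weight `lam` is a hypothetical hyperparameter.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    z = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)

def attention_pool(h, w):
    """Pool frame-level states h (T, d) into one utterance vector (d,).

    Each frame gets a score h_t . w (w is a hypothetical learned vector),
    the scores are softmax-normalized over time, and the output is the
    attention-weighted sum of the frames.
    """
    scores = h @ w            # (T,)
    alpha = softmax(scores)   # attention weights over frames, sum to 1
    return alpha @ h, alpha

def cross_entropy(logits, label):
    # Negative log-probability of the correct class.
    return -np.log(softmax(logits)[label])

def multitask_loss(u, W_emo, W_gen, emo_label, gen_label, lam=1.0):
    """Main emotion loss plus lam times the auxiliary gender loss.

    Both heads read the same shared utterance vector u, which is how the
    auxiliary task can pass useful information to the main task.
    """
    loss_emo = cross_entropy(u @ W_emo, emo_label)
    loss_gen = cross_entropy(u @ W_gen, gen_label)
    return loss_emo + lam * loss_gen

rng = np.random.default_rng(0)
T, d = 50, 16                        # frames and BLSTM output size (assumed)
h = rng.standard_normal((T, d))      # stand-in for CNN-BLSTM outputs
w = rng.standard_normal(d)
u, alpha = attention_pool(h, w)
W_emo = rng.standard_normal((d, 4))  # 4 emotion classes
W_gen = rng.standard_normal((d, 2))  # 2 gender classes
loss = multitask_loss(u, W_emo, W_gen, emo_label=1, gen_label=0, lam=0.5)
```

In training, only the gradient of this joint loss is needed; at test time the gender head can simply be ignored.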

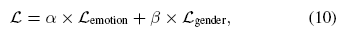

Short-time Fourier transform:

- $\alpha$ and $\beta$ are both set to 1.
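The preprocessing settings listed above (16 kHz audio, length normalized to 7.5 s, 800-sample Hanning window) can be sketched as below. The hop length of 400 samples is an assumption because the notes do not give it, and the sketch returns only the magnitude STFT; the paper's scaling with $\alpha$ and $\beta$ is not reproduced.

```python
import numpy as np

SR = 16000      # sampling rate from the notes
MAX_SEC = 7.5   # length normalization from the notes
N_FFT = 800     # Hanning window length from the notes
HOP = 400       # hop length: an assumption, not stated in the notes

def normalize_length(x, sr=SR, max_sec=MAX_SEC):
    """Zero-pad short signals and cut long ones to exactly max_sec seconds."""
    n = int(sr * max_sec)
    if len(x) >= n:
        return x[:n]
    return np.pad(x, (0, n - len(x)))  # pads with zeros at the end

def spectrogram(x, n_fft=N_FFT, hop=HOP):
    """Magnitude STFT with a Hanning window, framed without centering."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window
                       for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))  # (n_frames, n_fft // 2 + 1)

x = np.random.default_rng(0).standard_normal(3 * SR)  # a 3 s dummy signal
spec = spectrogram(normalize_length(x))
print(spec.shape)  # (299, 401)
```

Because every utterance is normalized to 120000 samples, every spectrogram has the same shape, which keeps batching for the CNN simple.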

Experiments

IEMOCAP: EXCITED and HAPPY are merged into HAPPY, giving four classes and 5531 samples in total.

The results compared against include both 5-fold cross-validation (2018) and leave-one-session-out evaluation.
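The four-class relabeling can be sketched as a small mapping step. The short label codes (`neu`, `hap`, `exc`, `ang`, `sad`, `fru`, `xxx`) and the toy list are assumptions for illustration; the class set follows the usual four-class IEMOCAP setup (neutral, happy, angry, sad).

```python
from collections import Counter

MERGE = {"exc": "hap"}               # EXCITED is folded into HAPPY
KEEP = {"neu", "hap", "ang", "sad"}  # the four target classes (assumed codes)

def to_four_class(labels):
    """Map raw labels to the 4-class setup and drop every other category."""
    out = []
    for lab in labels:
        lab = MERGE.get(lab, lab)
        if lab in KEEP:
            out.append(lab)
    return out

raw = ["neu", "exc", "hap", "fru", "ang", "sad", "exc", "xxx"]  # toy labels
counts = Counter(to_four_class(raw))
print(counts)
```

Applying the same mapping to the full corpus is what yields the 5531 samples mentioned above.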
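The two protocols are not interchangeable. IEMOCAP has five sessions, each recorded with two speakers, so leaving one session out keeps the test speakers unseen during training, whereas a random 5-fold split can put utterances of the same speaker in both sets. A minimal sketch of both splits, with toy session ids as a hypothetical input:

```python
import random

def leave_one_session_out(sessions):
    """Yield (held_out, train_idx, test_idx); each session is the test set once."""
    for s in sorted(set(sessions)):
        test = [i for i, x in enumerate(sessions) if x == s]
        train = [i for i, x in enumerate(sessions) if x != s]
        yield s, train, test

def k_fold(n, k=5, seed=0):
    """Shuffle indices and cut them into k nearly equal, speaker-agnostic folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

sessions = [1, 1, 2, 2, 3, 3, 4, 4, 5, 5]  # toy session id per utterance
splits = list(leave_one_session_out(sessions))
folds = k_fold(len(sessions))
```

Numbers obtained with the random split are therefore usually not directly comparable with leave-one-session-out numbers.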

2. Self-attention for Speech Emotion Recognition

- “Attention Is All You Need” (2017)

The Transformer it introduced is based on an encoder-decoder structure that uses no recurrence, relying instead on weighted correlations between the elements of the input sequence.

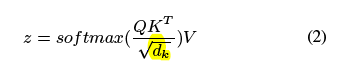

Transformer: maps each element of the input sequence to a query, a key, and a value.
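The query/key/value mechanism can be sketched in NumPy as multi-head scaled dot-product self-attention, $\mathrm{softmax}(QK^\top/\sqrt{d_k})V$ per head. Random matrices stand in for learned projections, and positional encodings, masking, and layer normalization are left out; this illustrates the Transformer mechanism, not the exact configuration of the paper discussed here.

```python
import numpy as np

def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, Wq, Wk, Wv, Wo, n_heads):
    """Self-attention over a sequence x of shape (T, d_model).

    Queries, keys and values are linear projections of the same input;
    each head computes softmax(Q K^T / sqrt(d_k)) V, and the heads are
    concatenated and projected back to d_model by Wo.
    """
    T, d_model = x.shape
    d_k = d_model // n_heads

    def split(m):  # (T, d_model) -> (n_heads, T, d_k)
        return m.reshape(T, n_heads, d_k).transpose(1, 0, 2)

    q, k, v = split(x @ Wq), split(x @ Wk), split(x @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)  # (n_heads, T, T)
    weights = softmax(scores, axis=-1)                # each row sums to 1
    heads = weights @ v                               # (n_heads, T, d_k)
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)
    return concat @ Wo, weights

rng = np.random.default_rng(0)
T, d_model, n_heads = 6, 8, 2
x = rng.standard_normal((T, d_model))
Wq, Wk, Wv, Wo = (rng.standard_normal((d_model, d_model)) for _ in range(4))
out, weights = multi_head_self_attention(x, Wq, Wk, Wv, Wo, n_heads)
```

Each output frame is a weighted mix of all input frames, with weights given by query-key similarity; this is the "weighted correlations" idea that replaces recurrence.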

Various forms of attention are introduced.

- Proposes a global windowing system that works on top of the local windows.

- Covers both classification and regression.
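The notes do not describe how the local and global windows are defined. Purely as an assumption for illustration, one reading is: cut the frame sequence into overlapping local windows, score each window independently, then let a global step average the window-level class scores. The window size, hop, and averaging rule below are hypothetical.

```python
def local_windows(n_frames, size, hop):
    """Return (start, end) frame ranges of overlapping local windows.

    The last window is shifted back so it ends exactly at n_frames;
    a sequence shorter than one window yields a single window.
    """
    if n_frames <= size:
        return [(0, n_frames)]
    starts = list(range(0, n_frames - size + 1, hop))
    if starts[-1] + size < n_frames:
        starts.append(n_frames - size)
    return [(s, s + size) for s in starts]

def global_average(window_scores):
    """Average per-window class scores into one utterance-level score vector."""
    n = len(window_scores)
    return [sum(col) / n for col in zip(*window_scores)]

print(local_windows(11, 4, 3))  # [(0, 4), (3, 7), (6, 10), (7, 11)]
```

Shifting the last window back, rather than zero-padding it, keeps every window the same length without inventing frames.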

实验

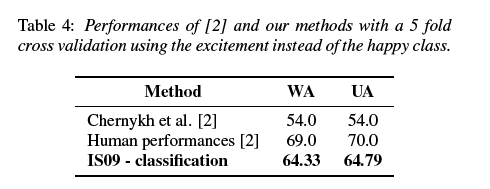

5 fold cross validation.

因为happy少,换成了excited,这样balance。不知道这老哥比较的对不对,[2]也是excited 5折吗?

3. Deep Learning of Segment-Level Feature Representation with Multiple Instance Learning for Utterance-Level Speech Emotion Recognition

The Chinese University of Hong Kong, Shuiyang Mao

Abstract

Combines multiple instance learning with deep learning for SER.

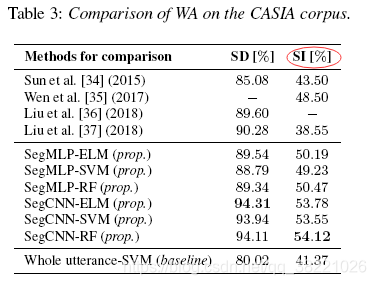

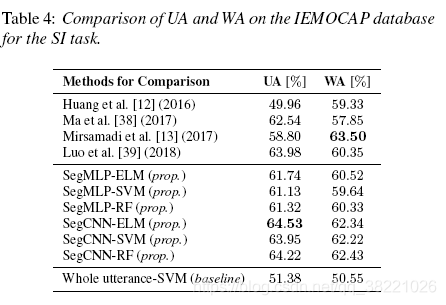

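Each segment is first classified into an emotional state, and the utterance-level classification result is an aggregation of the segment-level decisions. Two different DNNs are used: SegMLP (a multilayer perceptron that learns higher-level features from hand-crafted features) and SegCNN (which automatically learns emotion-related features from log Mel filterbanks). Two databases are used: CASIA and IEMOCAP.

Findings: this design provides richer information, and automatic feature learning outperforms hand-crafted features. The results are competitive with state-of-the-art methods.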
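Aggregating segment-level decisions into one utterance-level label can be sketched as a majority vote. The input format (one list of class posteriors per segment) and the tie-breaking rule are assumptions; the notes only say that the utterance result aggregates segment-level decisions.

```python
from collections import Counter

def aggregate_segments(segment_probs):
    """Turn per-segment class posteriors into one utterance-level label.

    Each segment votes for its argmax class; ties are broken in favor of
    the class with the larger summed posterior (an assumed rule).
    """
    n_classes = len(segment_probs[0])
    votes = Counter(max(range(n_classes), key=p.__getitem__)
                    for p in segment_probs)
    totals = [sum(p[c] for p in segment_probs) for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: (votes[c], totals[c]))

probs = [[0.6, 0.3, 0.1],   # segment 1 votes for class 0
         [0.2, 0.7, 0.1],   # segment 2 votes for class 1
         [0.5, 0.4, 0.1]]   # segment 3 votes for class 0
print(aggregate_segments(probs))  # 0
```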

1. Introduction

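Recognition models fall roughly into two categories:

One is the dynamic modeling approach, in which frame-based low-level descriptors (LLDs) such as MFCCs are extracted and then modeled with HMMs.

The other uses statistics of the fundamental frequency (pitch), spectral envelope, and energy contour. These statistical functionals are computed over a suprasegment or the whole utterance and are therefore called global features; they are then fed into global models such as SVM or KNN.

Drawback of these traditional methods: they are statistically inefficient for modeling data that lie on or near a nonlinear manifold.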
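The idea of global features can be sketched as a few statistical functionals over a frame-level contour. The chosen functionals and the toy pitch values are hypothetical; real feature sets such as IS09 use many more LLDs and functionals.

```python
import statistics

def global_functionals(contour):
    """Utterance-level statistics over a frame-level LLD contour (e.g. pitch).

    Whatever the utterance length, the output has a fixed size, which is what
    lets a static classifier such as an SVM or KNN consume it.
    """
    return {
        "mean": statistics.mean(contour),
        "std": statistics.pstdev(contour),
        "min": min(contour),
        "max": max(contour),
        "range": max(contour) - min(contour),
    }

pitch = [100.0, 120.0, 110.0, 130.0]  # toy F0 values in Hz, one per frame
feats = global_functionals(pitch)
print(feats["mean"], feats["range"])  # 115.0 30.0
```

Collapsing the contour this way discards the temporal order, which is the information the dynamic modeling approach keeps.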

- Segment-Level

Each segment is classified first, and the utterance-level classification result is the aggregation of the segment-level decisions.

It finds that (1) the aggregation of segment-level decisions provides richer information than statistics of the low-level descriptors (LLDs) computed over the whole utterance; and

(2) automatic feature learning outperforms manual features.

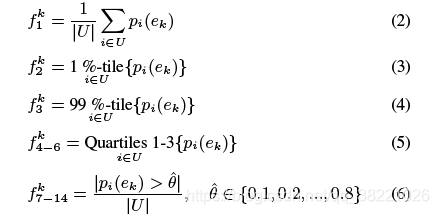

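The input to SegMLP is the IS09 feature set (manually designed perceptual features), while the input to SegCNN is log Mel filterbanks. Each is then followed by ELM, SVM, or RF, giving six experimental configurations in total.

Automatic feature learning outperforms manually designed perceptual features.

Aggregation method: the f matrix is fed into three kinds of classifiers.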
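Feeding the f matrix to ELM, SVM, or RF requires a fixed-length vector per utterance, since utterances contain different numbers of segments. A minimal sketch, assuming f holds one row of class posteriors per segment and using per-class max, min, mean, and the fraction of segments above a threshold; both this feature choice and the threshold are assumptions, not taken from the notes.

```python
def utterance_features(f, threshold=0.5):
    """Build a fixed-length utterance vector from a segment-by-class matrix f.

    Assumes f[i][c] is the posterior of class c for segment i. For every class
    we take max, min, mean, and the fraction of segments whose posterior
    exceeds `threshold` (a hypothetical value).
    """
    n_seg = len(f)
    feats = []
    for col in zip(*f):  # iterate over classes (columns of f)
        feats += [max(col), min(col), sum(col) / n_seg,
                  sum(p > threshold for p in col) / n_seg]
    return feats

f = [[0.8, 0.2],   # segment 1
     [0.4, 0.6]]   # segment 2
vec = utterance_features(f)  # length 4 * number of classes
```

The resulting vector has length four times the number of classes, independent of utterance length, so any of the three classifiers can consume it.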

- multiple instance learning (MIL)
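For background, the classic standard MIL assumption treats each utterance as a bag and its segments as instances: a bag is positive for a concept if at least one instance is positive, which corresponds to max-pooling the instance scores. The notes do not state which MIL formulation the paper uses, so this is not the paper's aggregation rule; the threshold is hypothetical.

```python
def mil_bag_positive(instance_scores, threshold=0.5):
    """Standard MIL assumption: the bag is positive iff some instance is."""
    return any(s > threshold for s in instance_scores)

def mil_bag_score(instance_scores):
    """Soft version of the same rule: the bag score is the max instance score."""
    return max(instance_scores)

angry_scores = [0.1, 0.7, 0.2]  # toy per-segment scores for one emotion
print(mil_bag_positive(angry_scores), mil_bag_score(angry_scores))  # True 0.7
```

Under this assumption a single strongly emotional segment is enough to label the whole utterance, which suits emotions that surface only briefly.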

Experiments

Two databases: CASIA and IEMOCAP.