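1. Introduction

ZooKeeper is a distributed coordination service for applications and an important component of Hadoop and HBase. It exposes a tree-structured, directory-like namespace and can push change notifications to clients. It can also serve as the registry for Dubbo services.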

2. Installation

2.1 Download and install

    wget http://mirrors.cnnic.cn/apache/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz
    tar -zxvf zookeeper-3.4.6.tar.gz
    cd zookeeper-3.4.6
    cp conf/zoo_sample.cfg conf/zoo.cfg

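2.2 Configuration

2.2.1 Standalone mode

(1) Edit zoo.cfg. If you have no special requirements, the defaults are fine; the settings you will usually change are dataDir and clientPort: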

    tickTime=2000
    initLimit=10
    syncLimit=5
    # replace with your actual data directory
    dataDir=/app/soft/zookeeper-3.4.6/data
    clientPort=2181

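#tickTime: the heartbeat interval, in milliseconds, between ZooKeeper servers and between clients and servers. One heartbeat is sent every tickTime.

#initLimit: the leader-follower (LF) initial connection limit, that is, the maximum number of heartbeats (multiples of tickTime) a follower (F) may take to complete its initial connection to the leader (L).

#syncLimit: the maximum number of heartbeats (multiples of tickTime) that may elapse between a request and its response between a follower and the leader.

#dataDir: the directory where ZooKeeper stores its data. By default, ZooKeeper also writes its transaction log files to this directory.

#clientPort: the port clients use to connect to the ZooKeeper server. ZooKeeper listens on this port and accepts client requests.

#dataLogDir: the directory where ZooKeeper stores its transaction log files.

#Server names and addresses: the cluster membership (server ID, server address, LF communication port, election port), written as server.N=YYY:A:B

#Here N is the server ID, YYY is the server's IP address, A is the LF communication port that the server uses to exchange data with the cluster leader, and B is the election port that servers use to talk to each other when electing a new leader (when the leader goes down, the remaining servers communicate to choose a new one). In a normal cluster every server uses the same A port and the same B port. In a pseudo-cluster, however, all servers share one IP address, so each server must use a different A port and a different B port.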
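Because initLimit and syncLimit are counted in ticks rather than milliseconds, the effective timeouts are products with tickTime. The following is a minimal sketch of that arithmetic using the values from the sample zoo.cfg above; the variable names init_timeout_ms and sync_timeout_ms are illustrative only and are not ZooKeeper settings.

```shell
#!/bin/sh
# Values copied from the sample zoo.cfg above.
tickTime=2000   # milliseconds per tick
initLimit=10    # ticks allowed for a follower's initial connection to the leader
syncLimit=5     # ticks allowed between a request and its response

# Effective timeouts in milliseconds (illustrative variable names).
init_timeout_ms=$((tickTime * initLimit))
sync_timeout_ms=$((tickTime * syncLimit))

echo "initial connection timeout: ${init_timeout_ms} ms"
echo "request/response timeout: ${sync_timeout_ms} ms"
```

With these values a follower has 20 seconds to finish its initial connection and 10 seconds to answer the leader.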

(2) Start the server: bin/zkServer.sh start

(3) Check that it started successfully: bin/zkServer.sh status

(4) View the log: vi zookeeper.out

2.2.2 Cluster mode (multiple nodes on a single IP)

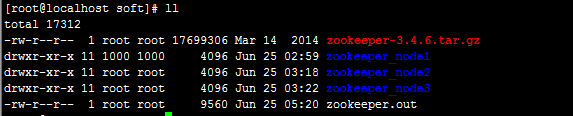

(1)拷贝3份zookeeper-3.4.6.tar.gz,如zookeeper_node1、zookeeper_node2、zookeeper_node3,结构如下:

(2) Go to the conf directory under zookeeper_node1 and edit zoo.cfg as follows:

    tickTime=2000
    initLimit=10
    syncLimit=5
    # replace with your actual data directory
    dataDir=/app/soft/zookeeper_node1/data
    clientPort=2181
    server.1=127.0.0.1:2888:3888
    server.2=127.0.0.1:2889:3889
    server.3=127.0.0.1:2890:3890

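(3) Create a myid file in the directory that dataDir points to:

    mkdir data
    vi data/myid

The myid file holds the node's own ID, which must match the number after "server." in zoo.cfg: 1 for the first node, 2 for the second, and 3 for the third. For node 1 the file contains:

    1

(4) In the same way, adjust the paths and ports for nodes 2 and 3: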

Node 2:

    tickTime=2000
    initLimit=10
    syncLimit=5
    # replace with your actual data directory
    dataDir=/app/soft/zookeeper_node2/data
    clientPort=2182
    server.1=127.0.0.1:2888:3888
    server.2=127.0.0.1:2889:3889
    server.3=127.0.0.1:2890:3890

Node 3:

    tickTime=2000
    initLimit=10
    syncLimit=5
    # replace with your actual data directory
    dataDir=/app/soft/zookeeper_node3/data
    clientPort=2183
    server.1=127.0.0.1:2888:3888
    server.2=127.0.0.1:2889:3889
    server.3=127.0.0.1:2890:3890

(5) Set the myid of nodes 2 and 3 to 2 and 3 respectively.

(6) Start the service:
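Steps (2) through (5) repeat the same edits three times, so they lend themselves to a small loop. The script below is a sketch under stated assumptions: BASE is a hypothetical scratch directory (the article itself uses /app/soft), and the script only writes conf/zoo.cfg and data/myid for each node. It does not unpack ZooKeeper itself.

```shell
#!/bin/sh
# Sketch: generate zoo.cfg and myid for three nodes on one host.
# BASE is a hypothetical scratch directory; the article uses /app/soft.
BASE="${BASE:-$(mktemp -d)}"

for id in 1 2 3; do
    node_dir="$BASE/zookeeper_node$id"
    mkdir -p "$node_dir/conf" "$node_dir/data"

    # Each node gets its own dataDir and clientPort (2181, 2182, 2183);
    # the server.N lines are identical on every node.
    cat > "$node_dir/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$node_dir/data
clientPort=$((2180 + id))
server.1=127.0.0.1:2888:3888
server.2=127.0.0.1:2889:3889
server.3=127.0.0.1:2890:3890
EOF

    # myid must match the N of this node's server.N line.
    echo "$id" > "$node_dir/data/myid"
done

echo "generated configs under $BASE"
```

Running it with BASE=/app/soft would overwrite the zoo.cfg files in the existing node directories, so try it on a scratch directory first.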

    [root@localhost soft]# zookeeper_node1/bin/zkServer.sh start
    JMX enabled by default
    Using config: /app/soft/zookeeper_node1/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [root@localhost soft]# vi zookeeper.out
    2015-06-25 05:43:13,252 [myid:] - INFO [main:QuorumPeerConfig@103] - Reading configuration from: /app/soft/zookeeper_node1/bin/../conf/zoo.cfg
    2015-06-25 05:43:13,257 [myid:] - INFO [main:QuorumPeerConfig@340] - Defaulting to majority quorums
    2015-06-25 05:43:13,260 [myid:1] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3
    2015-06-25 05:43:13,260 [myid:1] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 0
    2015-06-25 05:43:13,262 [myid:1] - INFO [main:DatadirCleanupManager@101] - Purge task is not scheduled.
    2015-06-25 05:43:13,273 [myid:1] - INFO [main:QuorumPeerMain@127] - Starting quorum peer
    2015-06-25 05:43:13,285 [myid:1] - INFO [main:NIOServerCnxnFactory@94] - binding to port 0.0.0.0/0.0.0.0:2181
    2015-06-25 05:43:13,312 [myid:1] - INFO [main:QuorumPeer@959] - tickTime set to 2000
    2015-06-25 05:43:13,312 [myid:1] - INFO [main:QuorumPeer@979] - minSessionTimeout set to -1
    2015-06-25 05:43:13,312 [myid:1] - INFO [main:QuorumPeer@990] - maxSessionTimeout set to -1
    2015-06-25 05:43:13,315 [myid:1] - INFO [main:QuorumPeer@1005] - initLimit set to 10
    2015-06-25 05:43:13,359 [myid:1] - INFO [Thread-1:QuorumCnxManager$Listener@504] - My election bind port: /127.0.0.1:3888
    2015-06-25 05:43:13,371 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:QuorumPeer@714] - LOOKING
    2015-06-25 05:43:13,374 [myid:1] - INFO [QuorumPeer[myid=1]/0:0:0:0:0:0:0:0:2181:FastLeaderElection@815] - New election. My id = 1, proposed zxid=0x2
    2015-06-25 05:43:13,376 [myid:1] - INFO [WorkerReceiver[myid=1]:FastLeaderElection@597] - Notification: 1 (message format version), 1 (n.leader), 0x2 (n.zxid), 0x1 (n.round), LOOKING (n.state), 1 (n.sid), 0x1 (n.peerEpoch) LOOKING (my state)
    2015-06-25 05:43:13,379 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@382] - Cannot open channel to 2 at election address /127.0.0.1:3889
    java.net.ConnectException: Connection refused
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)
        at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182)
        at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)
        at java.net.Socket.connect(Socket.java:579)
        at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(QuorumCnxManager.java:368)
        at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxManager.java:341)
        at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.process(FastLeaderElection.java:449)
        at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$WorkerSender.run(FastLeaderElection.java:430)
        at java.lang.Thread.run(Thread.java:745)
    2015-06-25 05:43:13,385 [myid:1] - WARN [WorkerSender[myid=1]:QuorumCnxManager@382] - Cannot open channel to 3 at election address /127.0.0.1:3890
    java.net.ConnectException: Connection refused
        at java.net.PlainSocketImpl.socketConnect(Native Method)
        at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339)
        at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200)

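The log shows errors because nodes 2 and 3 have not been started yet. Start nodes 2 and 3 the same way as node 1, then check the log again; the service is now running normally.

Command to view the log: vi zookeeper.out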
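The "Defaulting to majority quorums" line in the log hints at why node 1 stays in the LOOKING state on its own: a leader can only be elected once more than half of the configured servers can reach each other. The sketch below works out that majority for a few cluster sizes; quorum is a hypothetical helper name, not a ZooKeeper command.

```shell
#!/bin/sh
# Majority quorum: more than half of the configured servers must be up.
# quorum is a hypothetical helper, not part of ZooKeeper.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

for n in 1 3 5; do
    q=$(quorum "$n")
    echo "servers=$n quorum=$q tolerated_failures=$((n - q))"
done
```

For the three-node pseudo-cluster here the quorum is 2, so the election can complete as soon as a second node is started, and the cluster keeps working if any one node goes down.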

(7) Check the service status:

    [root@localhost soft]# zookeeper_node1/bin/zkServer.sh status
    JMX enabled by default
    Using config: /app/soft/zookeeper_node1/bin/../conf/zoo.cfg
    Mode: follower
    [root@localhost soft]# zookeeper_node2/bin/zkServer.sh status
    JMX enabled by default
    Using config: /app/soft/zookeeper_node2/bin/../conf/zoo.cfg
    Mode: leader
    [root@localhost soft]# zookeeper_node3/bin/zkServer.sh status
    JMX enabled by default
    Using config: /app/soft/zookeeper_node3/bin/../conf/zoo.cfg
    Mode: follower

As shown, node 1 is a follower, node 2 is the leader, and node 3 is a follower.
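When checking several nodes, it can be handy to pull just the role out of the status output. The sketch below does this with sed; zk_mode is a hypothetical helper name, and the sample text is copied from the status output of node 2 in this walkthrough rather than read from a live server.

```shell
#!/bin/sh
# Extract the role from the output of "zkServer.sh status".
# zk_mode is a hypothetical helper name, not part of ZooKeeper.
zk_mode() {
    sed -n 's/^Mode: //p'
}

# Demonstration on output copied from the status run of node 2.
sample='JMX enabled by default
Using config: /app/soft/zookeeper_node2/bin/../conf/zoo.cfg
Mode: leader'

printf '%s\n' "$sample" | zk_mode
```

Against the running pseudo-cluster, piping each node's status into the helper (for example, zookeeper_node1/bin/zkServer.sh status | zk_mode) should print one role per node.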