Below is the main Activity.

```java
package com.example.hellojni;

import android.app.Activity;
import android.os.Bundle;
import android.view.View;
import android.view.View.OnClickListener;
import android.widget.Button;

import java.io.BufferedOutputStream;
import java.io.File;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;

public class HelloJni extends Activity {
    Button startRecord;
    Button stopRecord;
    Button play;
    // Written from the native recording thread through receiveAudioData()
    static BufferedOutputStream bos;

    static {
        System.loadLibrary("hello-jni");
    }

    /** Called when the activity is first created. */
    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);
        startRecord = (Button) findViewById(R.id.start);
        stopRecord = (Button) findViewById(R.id.stop);
        play = (Button) findViewById(R.id.play);

        startRecord.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                // startRecord() loops until recording stops, so keep it off the UI thread
                new Thread() {
                    public void run() {
                        initOutputStream();
                        startRecord();
                    }
                }.start();
            }
        });

        stopRecord.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                new Thread() {
                    public void run() {
                        stopRecord();
                        try {
                            // Give the native loop time to finish its last read
                            Thread.sleep(1000 * 2);
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                        if (bos != null) {
                            try {
                                bos.close();
                            } catch (IOException e) {
                                e.printStackTrace();
                            }
                        }
                    }
                }.start();
            }
        });

        play.setOnClickListener(new OnClickListener() {
            @Override
            public void onClick(View v) {
                new Thread() {
                    public void run() {
                        play();
                    }
                }.start();
            }
        });
    }

    public void initOutputStream() {
        File file = new File("/sdcard/temp.pcm");
        try {
            bos = new BufferedOutputStream(new FileOutputStream(file));
        } catch (FileNotFoundException e) {
            e.printStackTrace();
        }
    }

    // Called from native code for every block read from AudioRecord
    public static void receiveAudioData(byte[] data, int size) {
        if (bos == null) {
            return;
        }
        try {
            // Write only the `size` bytes actually read, not the whole array
            bos.write(data, 0, size);
            bos.flush();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public native void startRecord();
    public native void stopRecord();
    public native void play();
}
```

Layout file:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent"
    android:orientation="vertical" >

    <Button
        android:id="@+id/start"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="Record with AudioRecord" />

    <Button
        android:id="@+id/stop"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="Stop recording" />

    <Button
        android:id="@+id/play"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="Play with AudioTrack" />

    <!-- No handler: tap it while recording to check the UI still responds.
         It has no id because reusing @+id/stop would duplicate an id. -->
    <Button
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="Recording in native code. Tap me: I respond instantly because the UI thread is not blocked" />
</LinearLayout>
```

Next is hello-jni.c:

```c
#include <stdio.h>
#include <unistd.h>
#include <jni.h>
#include <android/log.h>

#define AUDIO_SOURCE_VOICE_COMMUNICATION (7) /* MediaRecorder.AudioSource.VOICE_COMMUNICATION */
#define AUDIO_SOURCE_MIC (1)                 /* MediaRecorder.AudioSource.MIC */
#define SAMPLE_RATE_IN_HZ (11025)
#define CHANNEL_CONFIGURATION_MONO (16)      /* value of AudioFormat.CHANNEL_IN_MONO */
#define ENCODING_PCM_16BIT (2)               /* AudioFormat.ENCODING_PCM_16BIT */

#define LOG_TAG "test"
#define LOGI(f, v) __android_log_print(ANDROID_LOG_INFO, LOG_TAG, f, v)
#define LOGI2(a) __android_log_print(ANDROID_LOG_INFO, LOG_TAG, a)

/* Set by stopRecord() on one thread, polled by the recording loop on another */
static volatile int run = 1;

JNIEXPORT void JNICALL
Java_com_example_hellojni_HelloJni_stopRecord(JNIEnv *jni_env, jobject thiz)
{
    run = 0;
}

JNIEXPORT void JNICALL
Java_com_example_hellojni_HelloJni_startRecord(JNIEnv *jni_env, jobject thiz)
{
    jclass audio_record_class = (*jni_env)->FindClass(jni_env, "android/media/AudioRecord");
    jmethodID constructor_id = (*jni_env)->GetMethodID(jni_env, audio_record_class,
            "<init>", "(IIIII)V");
    jmethodID min_buff_size_id = (*jni_env)->GetStaticMethodID(jni_env, audio_record_class,
            "getMinBufferSize", "(III)I");
    jint buff_size = (*jni_env)->CallStaticIntMethod(jni_env, audio_record_class, min_buff_size_id,
            SAMPLE_RATE_IN_HZ, CHANNEL_CONFIGURATION_MONO, ENCODING_PCM_16BIT);
    jobject audioRecord = (*jni_env)->NewObject(jni_env, audio_record_class, constructor_id,
            /* AUDIO_SOURCE_MIC, */
            AUDIO_SOURCE_VOICE_COMMUNICATION,
            SAMPLE_RATE_IN_HZ, CHANNEL_CONFIGURATION_MONO, ENCODING_PCM_16BIT, buff_size);

    run = 1; /* reset, otherwise a second recording would exit at once */

    LOGI2("startRecording");
    jmethodID record_id = (*jni_env)->GetMethodID(jni_env, audio_record_class,
            "startRecording", "()V");
    (*jni_env)->CallVoidMethod(jni_env, audioRecord, record_id); /* start recording */
    LOGI2("after call startRecording");

    jmethodID read_id = (*jni_env)->GetMethodID(jni_env, audio_record_class, "read", "([BII)I");
    int nread = 0;
    int blockSize = 100;
    jbyteArray read_buff = (*jni_env)->NewByteArray(jni_env, blockSize);

    /* Callback into Java: HelloJni.receiveAudioData(byte[], int) */
    jclass HelloJniCls = (*jni_env)->FindClass(jni_env, "com/example/hellojni/HelloJni");
    jmethodID receiveAudioData = (*jni_env)->GetStaticMethodID(jni_env, HelloJniCls,
            "receiveAudioData", "([BI)V");

    while (run) {
        nread = (*jni_env)->CallIntMethod(jni_env, audioRecord, read_id, read_buff, 0, blockSize);
        if (nread <= 0) {
            break;
        }
        /* Hand the block to Java, which writes it to /sdcard/temp.pcm */
        (*jni_env)->CallStaticVoidMethod(jni_env, HelloJniCls, receiveAudioData, read_buff, nread);
        usleep(50);
    }

    /* Free the microphone so the next recording can start */
    jmethodID stop_id = (*jni_env)->GetMethodID(jni_env, audio_record_class, "stop", "()V");
    jmethodID release_id = (*jni_env)->GetMethodID(jni_env, audio_record_class, "release", "()V");
    (*jni_env)->CallVoidMethod(jni_env, audioRecord, stop_id);
    (*jni_env)->CallVoidMethod(jni_env, audioRecord, release_id);
}

JNIEXPORT void JNICALL
Java_com_example_hellojni_HelloJni_play(JNIEnv *jni_env, jobject thiz)
{
    LOGI2("after Java_com_example_hellojni_HelloJni_play");
    jclass audio_track_cls = (*jni_env)->FindClass(jni_env, "android/media/AudioTrack");
    jmethodID min_buff_size_id = (*jni_env)->GetStaticMethodID(jni_env, audio_track_cls,
            "getMinBufferSize", "(III)I");
    jint buffer_size = (*jni_env)->CallStaticIntMethod(jni_env, audio_track_cls, min_buff_size_id,
            11025,
            2,  /* CHANNEL_CONFIGURATION_MONO */
            2); /* ENCODING_PCM_16BIT */
    LOGI("buffer_size=%i", buffer_size);

    jint chunk = buffer_size / 4;
    if (chunk <= 0) {
        return; /* getMinBufferSize() returns a negative error code on failure */
    }
    FILE *fp = fopen("/sdcard/temp.pcm", "rb");
    if (fp == NULL) {
        LOGI2("cannot open /sdcard/temp.pcm");
        return;
    }
    LOGI2("after open");

    jbyteArray buffer = (*jni_env)->NewByteArray(jni_env, chunk);
    char buf[chunk];

    jmethodID constructor_id = (*jni_env)->GetMethodID(jni_env, audio_track_cls,
            "<init>", "(IIIIII)V");
    jobject audio_track = (*jni_env)->NewObject(jni_env, audio_track_cls, constructor_id,
            3,           /* AudioManager.STREAM_MUSIC */
            11025,       /* sampleRateInHz */
            2,           /* CHANNEL_CONFIGURATION_MONO */
            2,           /* ENCODING_PCM_16BIT */
            buffer_size, /* bufferSizeInBytes */
            1);          /* AudioTrack.MODE_STREAM */

    /* Set the volume */
    LOGI2("setStereoVolume 1");
    jmethodID setStereoVolume = (*jni_env)->GetMethodID(jni_env, audio_track_cls,
            "setStereoVolume", "(FF)I");
    (*jni_env)->CallIntMethod(jni_env, audio_track, setStereoVolume, 1.0, 1.0);
    LOGI2("setStereoVolume 2");

    /* Start playback */
    jmethodID method_play = (*jni_env)->GetMethodID(jni_env, audio_track_cls, "play", "()V");
    (*jni_env)->CallVoidMethod(jni_env, audio_track, method_play);

    /* Write: loop on fread's result and never read more than buf can hold */
    jmethodID method_write = (*jni_env)->GetMethodID(jni_env, audio_track_cls,
            "write", "([BII)I");
    size_t nread;
    while ((nread = fread(buf, 1, sizeof buf, fp)) > 0) {
        (*jni_env)->SetByteArrayRegion(jni_env, buffer, 0, (jsize)nread, (jbyte *)buf);
        (*jni_env)->CallIntMethod(jni_env, audio_track, method_write, buffer, 0, (jint)nread);
    }
    fclose(fp);

    /* Stop playback and free the native AudioTrack */
    jmethodID method_stop = (*jni_env)->GetMethodID(jni_env, audio_track_cls, "stop", "()V");
    jmethodID method_release = (*jni_env)->GetMethodID(jni_env, audio_track_cls, "release", "()V");
    (*jni_env)->CallVoidMethod(jni_env, audio_track, method_stop);
    (*jni_env)->CallVoidMethod(jni_env, audio_track, method_release);
}
```

Build file, Android.mk:

```makefile
LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

LOCAL_MODULE    := hello-jni
LOCAL_SRC_FILES := hello-jni.c
LOCAL_LDLIBS    := -L$(SYSROOT)/usr/lib -llog

include $(BUILD_SHARED_LIBRARY)
```

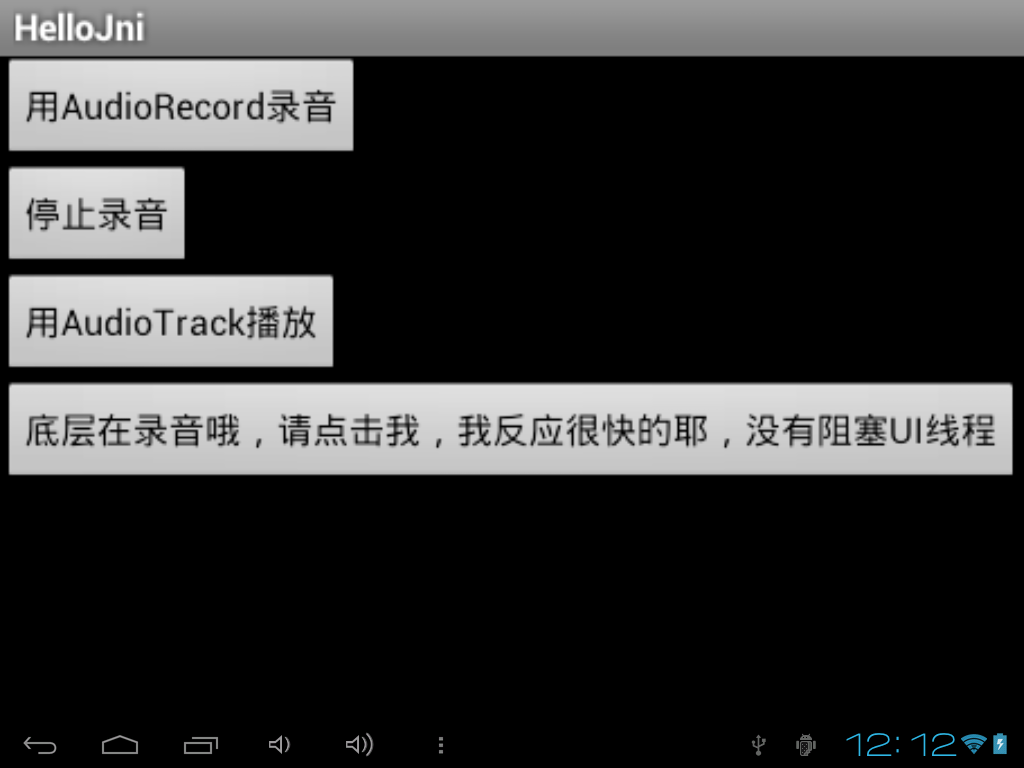

贴上效果图:

The call flow in this example: Activity -> JNI API -> C, and then C -> JNI API -> Activity.
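The Activity -> C direction works because the VM looks up each `native` method under a name derived from its class and method, which is why the C functions are called `Java_com_example_hellojni_HelloJni_startRecord` and so on. The sketch below is a hypothetical helper, `jni_short_name`, that builds this "short name" for plain ASCII identifiers, assuming the JNI specification's rules that `.` or `/` becomes `_` and a literal `_` becomes `_1`. Overloaded-method long names and Unicode escapes are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Builds "Java_" + mangled class + "_" + mangled method into `out`.
 * Returns the name length, or -1 if `out` is too small.
 * Hypothetical helper: ASCII names only, no overload signatures. */
int jni_short_name(const char *cls, const char *method, char *out, size_t out_len)
{
    const char *parts[2] = { cls, method };
    size_t n = 5; /* strlen("Java_") */

    assert(cls != NULL && method != NULL && out != NULL);
    if (out_len <= n) return -1;
    memcpy(out, "Java_", n);
    for (int p = 0; p < 2; p++) {
        if (p == 1) { /* '_' separates class and method */
            if (n + 1 >= out_len) return -1;
            out[n++] = '_';
        }
        for (const char *c = parts[p]; *c != '\0'; c++) {
            if (*c == '_') { /* a literal underscore is escaped as "_1" */
                if (n + 2 >= out_len) return -1;
                out[n++] = '_';
                out[n++] = '1';
            } else { /* package separators become '_' */
                if (n + 1 >= out_len) return -1;
                out[n++] = (*c == '.' || *c == '/') ? '_' : *c;
            }
        }
    }
    out[n] = '\0';
    return (int)n;
}
```

For example, `jni_short_name("com.example.hellojni.HelloJni", "startRecord", ...)` produces exactly the `Java_com_example_hellojni_HelloJni_startRecord` used in hello-jni.c. The reverse direction (C -> Java) instead looks methods up by name and type descriptor, as in `GetStaticMethodID(..., "receiveAudioData", "([BI)V")`.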

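Tapping "Record with AudioRecord" starts a thread that calls the native method `startRecord`, which starts recording in the native layer. While recording, each block of audio bytes that is read gets handed back up to the Java layer with this call:

```c
(*jni_env)->CallStaticVoidMethod(jni_env, HelloJniCls, receiveAudioData, read_buff, nread);
```

The Activity's static method `receiveAudioData` then writes the data to the file:

```java
public static void receiveAudioData(byte[] data, int size) {
    try {
        // Write only the `size` bytes actually read; the array may be larger
        bos.write(data, 0, size);
        bos.flush();
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```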
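To get a feel for how often this C -> Java callback fires, it helps to do the arithmetic for the parameters in hello-jni.c: 11025 Hz, mono, 16-bit PCM, read in 100-byte blocks. That is 11025 × 1 × 2 = 22050 bytes per second, so each 100-byte block holds about 4.5 ms of audio and `receiveAudioData` runs roughly 220 times per second. The three helpers below are hypothetical names for this calculation, not part of the original project.

```c
#include <assert.h>

/* Raw PCM bytes per second: sample rate x channels x bytes per sample. */
long pcm_bytes_per_second(long sample_rate_hz, int channels, int bytes_per_sample)
{
    assert(sample_rate_hz > 0 && channels > 0 && bytes_per_sample > 0);
    return sample_rate_hz * channels * bytes_per_sample;
}

/* Audio duration, in whole microseconds, held by a block of PCM bytes. */
long pcm_block_duration_us(long block_bytes, long bytes_per_second)
{
    assert(block_bytes >= 0 && bytes_per_second > 0);
    return block_bytes * 1000000L / bytes_per_second;
}

/* Blocks, and therefore JNI callbacks, per second (rounded down). */
long blocks_per_second(long bytes_per_second, long block_bytes)
{
    assert(block_bytes > 0);
    return bytes_per_second / block_bytes;
}
```

With these numbers, `pcm_block_duration_us(100, 22050)` gives 4535 µs and `blocks_per_second(22050, 100)` gives 220. The `usleep(50)` in the loop pauses for only 50 µs, which is tiny by comparison, so the pace is set by `AudioRecord.read()` waiting for data.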

Playback with AudioTrack follows much the same flow, so I won't go over it again.
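One detail of the playback loop is worth isolating. A `while (!feof(fp))` loop only notices end of file after a read has already hit it, so the body runs once more with a short or empty read, and reading a fixed 200 bytes into a buffer of `buffer_size/4` bytes overflows whenever that buffer is smaller. The sketch below drives the loop from `fread`'s return value instead. `stream_pcm`, `pcm_sink` and `tally_sink` are hypothetical names; the sink stands in for the `SetByteArrayRegion` + `AudioTrack.write()` pair, so the loop can be tested without Android.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Receives each chunk; stands in for SetByteArrayRegion + AudioTrack.write(). */
typedef void (*pcm_sink)(const unsigned char *data, size_t len, void *ctx);

/* Copies `fp` to `sink` in chunks of at most `buf_len` bytes. The final short
 * chunk is still delivered, nothing is read past the end of `buf`, and no
 * empty write happens at EOF. Returns the total number of bytes delivered. */
size_t stream_pcm(FILE *fp, unsigned char *buf, size_t buf_len,
                  pcm_sink sink, void *ctx)
{
    size_t total = 0;
    size_t got;

    assert(fp != NULL && buf != NULL && buf_len > 0 && sink != NULL);
    while ((got = fread(buf, 1, buf_len, fp)) > 0) {
        sink(buf, got, ctx);
        total += got;
    }
    return total;
}

/* Example sink that only counts calls and bytes. */
struct tally {
    size_t calls;
    size_t bytes;
};

void tally_sink(const unsigned char *data, size_t len, void *ctx)
{
    struct tally *t = ctx;

    (void)data;
    t->calls++;
    t->bytes += len;
}
```

Feeding a 450-byte file through a 200-byte buffer produces three sink calls of 200, 200 and 50 bytes, and nothing after that.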

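One thing needs special attention:

```java
startRecord.setOnClickListener(new OnClickListener() {
    @Override
    public void onClick(View v) {
        new Thread() {
            public void run() {
                initOutputStream();
                startRecord();
            }
        }.start();
    }
});
```

You must start a separate thread here. This is important! The native `startRecord` only returns once recording stops, so calling it directly from `onClick` would block the UI thread.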
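The same rule can be sketched in plain POSIX C, as an analogue rather than Android code: the blocking loop runs on its own thread so the caller returns immediately, and a shared stop flag ends it. Two assumptions differ from hello-jni.c on purpose: the flag is a C11 atomic rather than a plain `int`, and starting resets the flag so a second recording works. `start_recording`, `stop_recording` and `record_loop` are hypothetical names, and a 1 ms sleep stands in for one blocking `AudioRecord.read()`.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>
#include <time.h>

/* Shared stop flag, like `run` in hello-jni.c, but atomic so the worker is
 * guaranteed to see the store made by the stopping thread. */
static atomic_int running;
/* Number of simulated blocks the worker has "recorded". */
static atomic_long blocks_done;

/* Worker: stands in for the native recording loop. */
static void *record_loop(void *arg)
{
    struct timespec block = { 0, 1000000L }; /* 1 ms, one simulated read */

    (void)arg;
    while (atomic_load(&running)) {
        nanosleep(&block, NULL);
        atomic_fetch_add(&blocks_done, 1);
    }
    return NULL;
}

/* Runs the loop on its own thread, so the caller (think: the UI thread)
 * returns at once. Resets the flag so a second recording also works. */
int start_recording(pthread_t *tid)
{
    assert(tid != NULL);
    atomic_store(&blocks_done, 0);
    atomic_store(&running, 1);
    return pthread_create(tid, NULL, record_loop, NULL);
}

/* Clears the flag, then waits for the worker to finish its current block. */
void stop_recording(pthread_t tid)
{
    atomic_store(&running, 0);
    pthread_join(tid, NULL);
}
```

`start_recording` returns as soon as the thread exists, which is exactly what the click handler needs; once `stop_recording` has joined the worker, the block count no longer changes.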

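I built this example because a remote-conferencing product I was working on needed native-layer recording. Starting the recording made the UI very sluggish, and this example revealed the cause: the recording module was running on the UI thread instead of a background thread, so it blocked the UI.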

[All of the code has been posted above.]