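Recognizing the information on an ID card takes image-processing knowledge that I don't have, so I went looking for a third-party API. Everything I found was paid. Eventually I came across a product called Yunmai (云脉, http://ocr.ccyunmai.com/). It is free for 15 days, but after that it charges per recognition at 0.3 yuan per call, which is steep for a broke developer like me.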

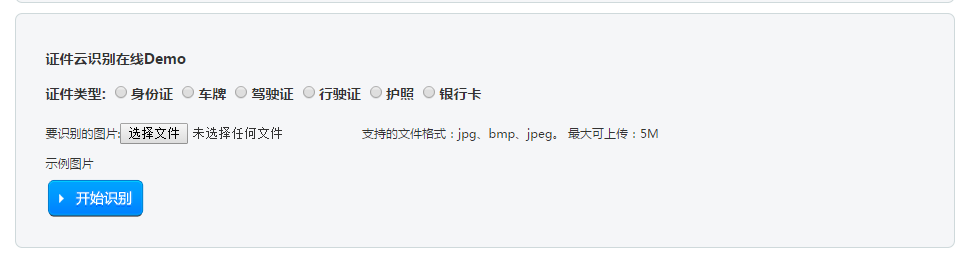

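Fortunately, Yunmai also provides an online recognition demo at http://ocr.ccyunmai.com/idcard/. What good is that address? As someone who scrapes data for a living, I can use it to run recognition without paying a cent. First, open the page and see what it looks like.

Upload one of the test images that Yunmai provides.

Before uploading, open the developer tools (press F12 in Chrome) and switch to the Network tab. Click upload; when the upload finishes, the recognition result comes back. Find the UploadImg.action request.

Click it to inspect the request.

Focus on the content in the red boxes. If we send the same information to http://ocr.ccyunmai.com/UploadImg.action together with a valid ID card image, the endpoint returns the recognition result. All we have to do is simulate this process in code.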

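The request carries the Host, Origin, Referer, and User-Agent headers, which can be copied directly from what the browser shows. The method is POST, and the body has three parts: callbackurl with the value /idcard/, action with the value idcard, and the uploaded file, named img. The file part's filename is the name of the uploaded file (test-idcard.jpg in my case) and its Content-Type is image/jpeg. Next, let's simulate this process.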
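To make the three-part body concrete, here is a minimal plain-Java sketch that assembles the multipart/form-data bytes by hand, with no HTTP library and no network access. The class name `MultipartBodySketch`, the method names, and the boundary string are all hypothetical and chosen only for illustration; a real client generates its own boundary and also sends it in the request's Content-Type header.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, for illustration only: builds the body described above.
final class MultipartBodySketch {
    private static final String CRLF = "\r\n";

    /** Builds a body with the callbackurl, action, and img parts, in that order. */
    static byte[] build(String boundary, String fileName, byte[] imageBytes) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeField(out, boundary, "callbackurl", "/idcard/");
        writeField(out, boundary, "action", "idcard");
        // File part: headers, blank line, raw image bytes.
        writeText(out, "--" + boundary + CRLF
                + "Content-Disposition: form-data; name=\"img\"; filename=\"" + fileName + "\"" + CRLF
                + "Content-Type: image/jpeg" + CRLF
                + CRLF);
        out.write(imageBytes, 0, imageBytes.length);
        // Closing delimiter ends the body.
        writeText(out, CRLF + "--" + boundary + "--" + CRLF);
        return out.toByteArray();
    }

    /** Writes one plain text form field. */
    private static void writeField(ByteArrayOutputStream out, String boundary, String name, String value) {
        writeText(out, "--" + boundary + CRLF
                + "Content-Disposition: form-data; name=\"" + name + "\"" + CRLF
                + CRLF
                + value + CRLF);
    }

    private static void writeText(ByteArrayOutputStream out, String text) {
        byte[] bytes = text.getBytes(StandardCharsets.UTF_8);
        out.write(bytes, 0, bytes.length);
    }

    public static void main(String[] args) {
        // Placeholder bytes stand in for a real JPEG file.
        byte[] body = build("sketch-boundary", "test-idcard.jpg", new byte[] {1, 2, 3});
        System.out.println(new String(body, StandardCharsets.ISO_8859_1));
    }
}
```

Printing the result shows the same layout the browser's request payload view displays: two text fields followed by the file part.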

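We use OkHttp as the network layer, extended with the library from my earlier article on progress listeners for OkHttp uploads and downloads (Android OkHttp文件上传与下载的进度监听扩展).

Add the Gradle dependencies:

    compile 'com.squareup.okhttp:okhttp:2.5.0'
    compile 'cn.edu.zafu:coreprogress:0.0.1'
    compile 'org.jsoup:jsoup:1.8.3'

Note that jsoup is also listed; it is used later to parse the response.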

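Uploading needs network access and the image file has to be read from storage, so add these two permissions (WRITE_EXTERNAL_STORAGE implicitly grants read access as well):

    <uses-permission android:name="android.permission.INTERNET"/>
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

Initialize OkHttp and set generous timeouts so the upload does not time out: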

    OkHttpClient mOkHttpClient = new OkHttpClient();

    private void initClient() {
        // Very large timeouts so that a slow upload is never cut off
        mOkHttpClient.setConnectTimeout(1000, TimeUnit.MINUTES);
        mOkHttpClient.setReadTimeout(1000, TimeUnit.MINUTES);
        mOkHttpClient.setWriteTimeout(1000, TimeUnit.MINUTES);
    }

Next, a field holds the path of the local ID card image:

    private String mPhotoPath = "file path";

Now build the request headers and the POST body. Upload progress needs to be monitored, so the library mentioned above is used here.

    private void uploadAndRecognize() {
        if (!TextUtils.isEmpty(mPhotoPath)) {
            File file = new File(mPhotoPath);
            // Build the multipart request body: two text fields plus the image
            RequestBody requestBody = new MultipartBuilder().type(MultipartBuilder.FORM)
                    .addPart(Headers.of("Content-Disposition", "form-data; name=\"callbackurl\""),
                            RequestBody.create(null, "/idcard/"))
                    .addPart(Headers.of("Content-Disposition", "form-data; name=\"action\""),
                            RequestBody.create(null, "idcard"))
                    .addPart(Headers.of("Content-Disposition", "form-data; name=\"img\"; filename=\"idcardFront_user.jpg\""),
                            RequestBody.create(MediaType.parse("image/jpeg"), file))
                    .build();
            // This listener is called back on the UI thread, so it can touch the UI directly
            final UIProgressRequestListener uiProgressRequestListener = new UIProgressRequestListener() {
                @Override
                public void onUIRequestProgress(long bytesWrite, long contentLength, boolean done) {
                    Log.e("TAG", "bytesWrite:" + bytesWrite);
                    Log.e("TAG", "contentLength" + contentLength);
                    Log.e("TAG", (100 * bytesWrite) / contentLength + " % done ");
                    Log.e("TAG", "done:" + done);
                    Log.e("TAG", "================================");
                    // Update the progress bar
                    mProgressBar.setProgress((int) ((100 * bytesWrite) / contentLength));
                }
            };
            // Build the request with the headers copied from the browser
            final Request request = new Request.Builder()
                    .header("Host", "ocr.ccyunmai.com")
                    .header("Origin", "http://ocr.ccyunmai.com")
                    .header("Referer", "http://ocr.ccyunmai.com/idcard/")
                    .header("User-Agent", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2398.0 Safari/537.36")
                    .url("http://ocr.ccyunmai.com/UploadImg.action")
                    .post(ProgressHelper.addProgressRequestListener(requestBody, uiProgressRequestListener))
                    .build();
            // Send the request asynchronously
            mOkHttpClient.newCall(request).enqueue(new Callback() {
                @Override
                public void onFailure(Request request, IOException e) {
                    Log.e("TAG", "error");
                }

                @Override
                public void onResponse(Response response) throws IOException {
                    String result = response.body().string();
                }
            });
        }
    }

When the request succeeds, onResponse is called, and the local variable result holds the final response.

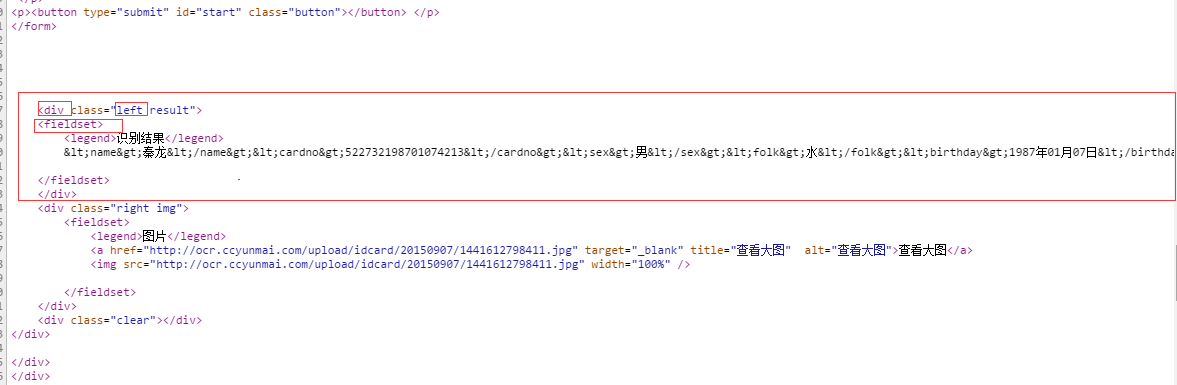

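Let's look at the source of the returned page in the browser to make parsing the result easier.

The result sits inside a div with the class left. That div contains a fieldset, and the recognition result is inside it. So we parse result with Jsoup, the dependency added earlier.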

    String result = response.body().string();
    // Locate the fieldset that holds the recognition result
    Document parse = Jsoup.parse(result);
    Elements select = parse.select("div.left fieldset");
    Log.e("TAG", select.text());
    // The fieldset text is itself tag-like markup, so parse it a second time
    Document parse1 = Jsoup.parse(select.text());
    StringBuilder builder = new StringBuilder();
    String name = parse1.select("name").text();
    String cardno = parse1.select("cardno").text();
    String sex = parse1.select("sex").text();
    String folk = parse1.select("folk").text();
    String birthday = parse1.select("birthday").text();
    String address = parse1.select("address").text();
    String issue_authority = parse1.select("issue_authority").text();
    String valid_period = parse1.select("valid_period").text();
    builder.append("name:" + name)
            .append("\n")
            .append("cardno:" + cardno)
            .append("\n")
            .append("sex:" + sex)
            .append("\n")
            .append("folk:" + folk)
            .append("\n")
            .append("birthday:" + birthday)
            .append("\n")
            .append("address:" + address)
            .append("\n")
            .append("issue_authority:" + issue_authority)
            .append("\n")
            .append("valid_period:" + valid_period)
            .append("\n");
    Log.e("TAG", "name:" + name);
    Log.e("TAG", "cardno:" + cardno);
    Log.e("TAG", "sex:" + sex);
    Log.e("TAG", "folk:" + folk);
    Log.e("TAG", "birthday:" + birthday);
    Log.e("TAG", "address:" + address);
    Log.e("TAG", "issue_authority:" + issue_authority);
    Log.e("TAG", "valid_period:" + valid_period);

Simple, isn't it? Every field has been extracted. Check the log to see the recognition result.
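For readers who want to try the field extraction without Jsoup or a live response, the sketch below does a rough equivalent with regular expressions in plain Java. It assumes the fieldset text consists of tag pairs such as `<name>...</name>`, which is what the Jsoup selectors above imply. The class name `IdCardFieldSketch` is hypothetical and the sample string is made up for illustration, not real service output.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, for illustration only.
final class IdCardFieldSketch {
    // The same field names the Jsoup code above selects.
    static final String[] FIELDS = {
        "name", "cardno", "sex", "folk", "birthday",
        "address", "issue_authority", "valid_period"
    };

    /** Returns every known field; a field missing from the text maps to "". */
    static Map<String, String> extract(String text) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String field : FIELDS) {
            Pattern pattern = Pattern.compile("<" + field + ">(.*?)</" + field + ">", Pattern.DOTALL);
            Matcher matcher = pattern.matcher(text);
            result.put(field, matcher.find() ? matcher.group(1).trim() : "");
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up sample in the assumed format.
        String sample = "<name>Test User</name><sex>F</sex><birthday>1990-01-01</birthday>";
        for (Map.Entry<String, String> entry : extract(sample).entrySet()) {
            System.out.println(entry.getKey() + ":" + entry.getValue());
        }
    }
}
```

Regular expressions are fine for a flat structure like this one; for anything nested, a real parser such as Jsoup remains the safer choice.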

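The recognition is actually quite accurate. Recognition works now, but we would like to take the photo ourselves and upload it for recognition, like this.

This involves Android's Camera and SurfaceView. Before getting to that, let's write a manager class for autofocus.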

    /**
     * Autofocus manager
     * User:lizhangqu(513163535@qq.com)
     * Date:2015-09-05
     * Time: 11:11
     */
    public class AutoFocusManager implements Camera.AutoFocusCallback {
        private static final String TAG = AutoFocusManager.class.getSimpleName();
        private static final long AUTO_FOCUS_INTERVAL_MS = 2000L;
        private static final Collection<String> FOCUS_MODES_CALLING_AF;

        static {
            FOCUS_MODES_CALLING_AF = new ArrayList<String>(2);
            FOCUS_MODES_CALLING_AF.add(Camera.Parameters.FOCUS_MODE_AUTO);
            FOCUS_MODES_CALLING_AF.add(Camera.Parameters.FOCUS_MODE_MACRO);
        }

        private boolean stopped;
        private boolean focusing;
        private final boolean useAutoFocus;
        private final Camera camera;
        private AsyncTask<?, ?, ?> outstandingTask;

        public AutoFocusManager(Camera camera) {
            this.camera = camera;
            String currentFocusMode = camera.getParameters().getFocusMode();
            useAutoFocus = FOCUS_MODES_CALLING_AF.contains(currentFocusMode);
            Log.e(TAG, "Current focus mode '" + currentFocusMode + "'; use auto focus? " + useAutoFocus);
            start();
        }

        @Override
        public synchronized void onAutoFocus(boolean success, Camera theCamera) {
            focusing = false;
            autoFocusAgainLater();
        }

        private synchronized void autoFocusAgainLater() {
            if (!stopped && outstandingTask == null) {
                AutoFocusTask newTask = new AutoFocusTask();
                try {
                    newTask.executeOnExecutor(AsyncTask.THREAD_POOL_EXECUTOR);
                    outstandingTask = newTask;
                } catch (RejectedExecutionException ree) {
                    Log.e(TAG, "Could not request auto focus", ree);
                }
            }
        }

        /**
         * Start autofocus
         */
        public synchronized void start() {
            if (useAutoFocus) {
                outstandingTask = null;
                if (!stopped && !focusing) {
                    try {
                        camera.autoFocus(this);
                        focusing = true;
                    } catch (RuntimeException re) {
                        // Have heard RuntimeException reported in Android 4.0.x+; continue?
                        Log.e(TAG, "Unexpected exception while focusing", re);
                        // Try again later to keep cycle going
                        autoFocusAgainLater();
                    }
                }
            }
        }

        private synchronized void cancelOutstandingTask() {
            if (outstandingTask != null) {
                if (outstandingTask.getStatus() != AsyncTask.Status.FINISHED) {
                    outstandingTask.cancel(true);
                }
                outstandingTask = null;
            }
        }

        /**
         * Stop autofocus
         */
        public synchronized void stop() {
            stopped = true;
            if (useAutoFocus) {
                cancelOutstandingTask();
                // Doesn't hurt to call this even if not focusing
                try {
                    camera.cancelAutoFocus();
                } catch (RuntimeException re) {
                    // Have heard RuntimeException reported in Android 4.0.x+; continue?
                    Log.e(TAG, "Unexpected exception while cancelling focusing", re);
                }
            }
        }

        private final class AutoFocusTask extends AsyncTask<Object, Object, Object> {
            @Override
            protected Object doInBackground(Object... voids) {
                try {
                    Thread.sleep(AUTO_FOCUS_INTERVAL_MS);
                } catch (InterruptedException e) {
                    // continue
                }
                start();
                return null;
            }
        }
    }

This class is extracted from ZXing. It triggers autofocus again at a fixed interval after each focus pass completes; the code is self-explanatory, so I won't go into detail.
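The re-arming pattern in AutoFocusManager (focus, wait for the completion callback, sleep, focus again until stopped) can be tried outside Android with a small plain-Java sketch. It swaps AsyncTask for a `ScheduledExecutorService` and replaces the camera with a hypothetical `FocusDevice` interface, so it shows only the control flow and is not the ZXing implementation. Every name in it is an assumption made for illustration.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical sketch of the "focus again later" loop, for illustration only.
final class PeriodicFocusSketch {
    /** Stand-in for the camera: focuses, then invokes the completion callback. */
    interface FocusDevice {
        void autoFocus(Runnable onFocused);
    }

    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final FocusDevice device;
    private final long intervalMs;
    private boolean stopped;

    PeriodicFocusSketch(FocusDevice device, long intervalMs) {
        this.device = device;
        this.intervalMs = intervalMs;
    }

    /** Requests one focus pass; its completion schedules the next one. */
    synchronized void start() {
        if (!stopped) {
            device.autoFocus(this::focusAgainLater);
        }
    }

    private synchronized void focusAgainLater() {
        if (!stopped) {
            scheduler.schedule(this::start, intervalMs, TimeUnit.MILLISECONDS);
        }
    }

    /** Stops the loop and cancels any pending focus request. */
    synchronized void stop() {
        stopped = true;
        scheduler.shutdownNow();
    }

    /** Runs the loop against a fake device and returns how many focus calls happened. */
    static int countFocusCalls(long intervalMs, long durationMs) {
        AtomicInteger calls = new AtomicInteger();
        PeriodicFocusSketch loop = new PeriodicFocusSketch(onFocused -> {
            calls.incrementAndGet();
            onFocused.run(); // the fake device finishes focusing immediately
        }, intervalMs);
        loop.start();
        try {
            Thread.sleep(durationMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        loop.stop();
        return calls.get();
    }

    public static void main(String[] args) {
        System.out.println("focus calls: " + countFocusCalls(10, 300));
    }
}
```

As in the original class, the next pass is scheduled only after the previous one reports completion, so focus requests never overlap.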

Next is the Camera manager class, also extracted from ZXing and trimmed down.

    /**
     * Camera manager
     * User:lizhangqu(513163535@qq.com)
     * Date:2015-09-05
     * Time: 10:56
     */
    public class CameraManager {
        private static final String TAG = CameraManager.class.getName();
        private Camera camera;
        private Camera.Parameters parameters;
        private AutoFocusManager autoFocusManager;
        private int requestedCameraId = -1;
        private boolean initialized;
        private boolean previewing;

        /**
         * Open a camera
         *
         * @param cameraId camera id; a negative value selects the first back-facing camera
         * @return Camera
         */
        public Camera open(int cameraId) {
            int numCameras = Camera.getNumberOfCameras();
            if (numCameras == 0) {
                Log.e(TAG, "No cameras!");
                return null;
            }
            boolean explicitRequest = cameraId >= 0;
            if (!explicitRequest) {
                // Select a camera if no explicit camera requested
                int index = 0;
                while (index < numCameras) {
                    Camera.CameraInfo cameraInfo = new Camera.CameraInfo();
                    Camera.getCameraInfo(index, cameraInfo);
                    if (cameraInfo.facing == Camera.CameraInfo.CAMERA_FACING_BACK) {
                        break;
                    }
                    index++;
                }
                cameraId = index;
            }
            Camera camera;
            if (cameraId < numCameras) {
                Log.e(TAG, "Opening camera #" + cameraId);
                camera = Camera.open(cameraId);
            } else {
                if (explicitRequest) {
                    Log.e(TAG, "Requested camera does not exist: " + cameraId);
                    camera = null;
                } else {
                    Log.e(TAG, "No camera facing back; returning camera #0");
                    camera = Camera.open(0);
                }
            }
            return camera;
        }

        /**
         * Open the camera driver and attach it to the preview surface
         *
         * @param holder SurfaceHolder
         * @throws IOException IOException
         */
        public synchronized void openDriver(SurfaceHolder holder) throws IOException {
            Log.e(TAG, "openDriver");
            Camera theCamera = camera;
            if (theCamera == null) {
                theCamera = open(requestedCameraId);
                if (theCamera == null) {
                    throw new IOException();
                }
                camera = theCamera;
            }
            theCamera.setPreviewDisplay(holder);
            if (!initialized) {
                initialized = true;
                parameters = camera.getParameters();
                // Preview and picture sizes share the same 4:3 ratio
                parameters.setPreviewSize(800, 600);
                parameters.setPictureFormat(ImageFormat.JPEG);
                parameters.setJpegQuality(100);
                parameters.setPictureSize(800, 600);
                theCamera.setParameters(parameters);
            }
        }

        /**
         * Whether the camera is open
         *
         * @return true if the camera is open
         */
        public synchronized boolean isOpen() {
            return camera != null;
        }

        /**
         * Close the camera
         */
        public synchronized void closeDriver() {
            Log.e(TAG, "closeDriver");
            if (camera != null) {
                camera.release();
                camera = null;
            }
        }

        /**
         * Start the preview
         */
        public synchronized void startPreview() {
            Log.e(TAG, "startPreview");
            Camera theCamera = camera;
            if (theCamera != null && !previewing) {
                theCamera.startPreview();
                previewing = true;
                autoFocusManager = new AutoFocusManager(camera);
            }
        }

        /**
         * Stop the preview
         */
        public synchronized void stopPreview() {
            Log.e(TAG, "stopPreview");
            if (autoFocusManager != null) {
                autoFocusManager.stop();
                autoFocusManager = null;
            }
            if (camera != null && previewing) {
                camera.stopPreview();
                previewing = false;
            }
        }

        /**
         * Turn the flash on
         */
        public synchronized void openLight() {
            Log.e(TAG, "openLight");
            if (camera != null) {
                parameters = camera.getParameters();
                parameters.setFlashMode(Camera.Parameters.FLASH_MODE_TORCH);
                camera.setParameters(parameters);
            }
        }

        /**
         * Turn the flash off
         */
        public synchronized void offLight() {
            Log.e(TAG, "offLight");
            if (camera != null) {
                parameters = camera.getParameters();
                parameters.setFlashMode(Camera.Parameters.FLASH_MODE_OFF);
                camera.setParameters(parameters);
            }
        }

        /**
         * Take a picture
         *
         * @param shutter ShutterCallback
         * @param raw     PictureCallback
         * @param jpeg    PictureCallback
         */
        public synchronized void takePicture(final Camera.ShutterCallback shutter, final Camera.PictureCallback raw, final Camera.PictureCallback jpeg) {
            camera.takePicture(shutter, raw, jpeg);
        }
    }

The viewfinder in the screenshot above has a blue border with a line of hint text above it. It is a custom SurfaceView, and we have to implement the drawing logic ourselves.

    /**
     * Viewfinder border drawing
     * User:lizhangqu(513163535@qq.com)
     * Date:2015-09-04
     * Time: 18:03
     */
    public class PreviewBorderView extends SurfaceView implements SurfaceHolder.Callback, Runnable {
        private int mScreenH;
        private int mScreenW;
        private Canvas mCanvas;
        private Paint mPaint;
        private Paint mPaintLine;
        private SurfaceHolder mHolder;
        private Thread mThread;
        // Default hint: "Align the frame with the ID card"
        private static final String DEFAULT_TIPS_TEXT = "请将方框对准证件拍摄";
        private static final int DEFAULT_TIPS_TEXT_SIZE = 16;
        private static final int DEFAULT_TIPS_TEXT_COLOR = Color.GREEN;
        /**
         * Custom attributes
         */
        private float tipTextSize;
        private int tipTextColor;
        private String tipText;

        public PreviewBorderView(Context context) {
            this(context, null);
        }

        public PreviewBorderView(Context context, AttributeSet attrs) {
            this(context, attrs, 0);
        }

        public PreviewBorderView(Context context, AttributeSet attrs, int defStyleAttr) {
            super(context, attrs, defStyleAttr);
            initAttrs(context, attrs);
            init();
        }

        /**
         * Read the custom attributes
         *
         * @param context Context
         * @param attrs   AttributeSet
         */
        private void initAttrs(Context context, AttributeSet attrs) {
            TypedArray a = context.obtainStyledAttributes(attrs, R.styleable.PreviewBorderView);
            try {
                tipTextSize = a.getDimension(R.styleable.PreviewBorderView_tipTextSize,
                        TypedValue.applyDimension(TypedValue.COMPLEX_UNIT_DIP, DEFAULT_TIPS_TEXT_SIZE, getResources().getDisplayMetrics()));
                tipTextColor = a.getColor(R.styleable.PreviewBorderView_tipTextColor, DEFAULT_TIPS_TEXT_COLOR);
                tipText = a.getString(R.styleable.PreviewBorderView_tipText);
                if (tipText == null) {
                    tipText = DEFAULT_TIPS_TEXT;
                }
            } finally {
                a.recycle();
            }
        }

        /**
         * Initialize the drawing objects
         */
        private void init() {
            this.mHolder = getHolder();
            this.mHolder.addCallback(this);
            this.mHolder.setFormat(PixelFormat.TRANSPARENT);
            setZOrderOnTop(true);
            // CLEAR punches a transparent window into the dimmed overlay
            this.mPaint = new Paint();
            this.mPaint.setAntiAlias(true);
            this.mPaint.setColor(Color.WHITE);
            this.mPaint.setStyle(Paint.Style.FILL_AND_STROKE);
            this.mPaint.setXfermode(new PorterDuffXfermode(PorterDuff.Mode.CLEAR));
            this.mPaintLine = new Paint();
            this.mPaintLine.setColor(tipTextColor);
            this.mPaintLine.setStrokeWidth(3.0F);
            setKeepScreenOn(true);
        }

        /**
         * Draw the viewfinder
         */
        private void draw() {
            try {
                this.mCanvas = this.mHolder.lockCanvas();
                this.mCanvas.drawARGB(100, 0, 0, 0);
                this.mScreenW = (this.mScreenH * 4 / 3);
                Log.e("TAG", "mScreenW:" + mScreenW + " mScreenH:" + mScreenH);
                // Edges of the clear window: H wide and 2H/3 tall, centered in the 4:3 surface
                int left = mScreenW / 2 - mScreenH * 2 / 3 + mScreenH / 6;
                int top = mScreenH / 6;
                int right = mScreenW / 2 + mScreenH * 2 / 3 - mScreenH / 6;
                int bottom = mScreenH - mScreenH / 6;
                int corner = 50; // length of each corner mark in pixels
                mCanvas.drawRect(new RectF(left, top, right, bottom), mPaint);
                // Top-left corner
                mCanvas.drawLine(left, top, left, top + corner, mPaintLine);
                mCanvas.drawLine(left, top, left + corner, top, mPaintLine);
                // Top-right corner
                mCanvas.drawLine(right, top, right, top + corner, mPaintLine);
                mCanvas.drawLine(right, top, right - corner, top, mPaintLine);
                // Bottom-left corner
                mCanvas.drawLine(left, bottom, left, bottom - corner, mPaintLine);
                mCanvas.drawLine(left, bottom, left + corner, bottom, mPaintLine);
                // Bottom-right corner
                mCanvas.drawLine(right, bottom, right, bottom - corner, mPaintLine);
                mCanvas.drawLine(right, bottom, right - corner, bottom, mPaintLine);
                // Hint text, centered horizontally above the window
                mPaintLine.setTextSize(tipTextSize);
                mPaintLine.setAntiAlias(true);
                mPaintLine.setDither(true);
                float length = mPaintLine.measureText(tipText);
                mCanvas.drawText(tipText, left + mScreenH / 2 - length / 2, top - tipTextSize, mPaintLine);
                Log.e("TAG", "left:" + left);
                Log.e("TAG", "top:" + top);
                Log.e("TAG", "right:" + right);
                Log.e("TAG", "bottom:" + bottom);
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                if (this.mCanvas != null) {
                    this.mHolder.unlockCanvasAndPost(this.mCanvas);
                }
            }
        }

        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            // Get the size, then draw on a worker thread
            this.mScreenW = getWidth();
            this.mScreenH = getHeight();
            this.mThread = new Thread(this);
            this.mThread.start();
        }

        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
            // Stop the thread
            try {
                mThread.interrupt();
                mThread = null;
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        @Override
        public void run() {
            // Draw on the worker thread
            draw();
        }
    }

This class uses the PorterDuff.Mode.CLEAR blend mode. Note that a SurfaceView can be drawn from a worker thread. Also, the whole SurfaceView surrounding the viewfinder has a 4:3 aspect ratio, the same as the Camera preview and picture sizes, which keeps the preview from being distorted. The drawing logic isn't complicated; the lengths and positions just have to be worked out.
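The measurements are easier to follow with concrete numbers. The plain-Java sketch below repeats the integer arithmetic from draw() and compares it with the crop rectangle that the photo callback in CameraActivity (shown later) passes to Bitmap.createBitmap. The class and method names are hypothetical; the formulas are copied from the article's code.

```java
import java.util.Arrays;

// Hypothetical helper, for illustration only.
final class ViewfinderGeometrySketch {
    /** {left, top, right, bottom} of the clear window for a surface of the given height. */
    static int[] frameRect(int surfaceHeight) {
        int surfaceWidth = surfaceHeight * 4 / 3; // the surface is forced to 4:3
        int left = surfaceWidth / 2 - surfaceHeight * 2 / 3 + surfaceHeight / 6;
        int top = surfaceHeight / 6;
        int right = surfaceWidth / 2 + surfaceHeight * 2 / 3 - surfaceHeight / 6;
        int bottom = surfaceHeight - surfaceHeight / 6;
        return new int[] {left, top, right, bottom};
    }

    /** {x, y, width, height} used to crop the captured picture. */
    static int[] cropRect(int pictureWidth, int pictureHeight) {
        return new int[] {
            (pictureWidth - pictureHeight) / 2,
            pictureHeight / 6,
            pictureHeight,
            pictureHeight * 2 / 3
        };
    }

    public static void main(String[] args) {
        // 800x600 is the preview and picture size set in CameraManager.
        System.out.println(Arrays.toString(frameRect(600)));     // prints [100, 100, 700, 500]
        System.out.println(Arrays.toString(cropRect(800, 600))); // prints [100, 100, 600, 400]
    }
}
```

For an 800x600 picture the crop starts at (100, 100) and spans 600x400, which is exactly the window from (100, 100) to (700, 500). The saved image is therefore what the user saw inside the frame, with a 3:2 aspect ratio.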

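There are also a few custom attributes:

    <resources>
        <declare-styleable name="PreviewBorderView">
            <attr name="tipText" format="string"/>
            <attr name="tipTextColor" format="color|reference"/>
            <attr name="tipTextSize" format="dimension"/>
        </declare-styleable>
    </resources>

What remains is the Activity that shows the preview and takes the photo. It has a few helper methods for getting the screen width and height, and it resets the views from the layout file to a 4:3 aspect ratio. The Activity has to return a result to its caller. The image data may be large, and data passed through a Bundle must not exceed 1 MB, so it returns the path of the saved file instead. In onCreate it reads the Intent to get the parameters passed by the caller and falls back to defaults when none are given.

There are two buttons, one to take the photo and one to turn the flash on or off. Each has a click listener that calls the corresponding method. Taking a photo requires a callback, which saves the data and returns the result to the caller. Everything else is initialization and teardown; the source makes it clear.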

    public class CameraActivity extends AppCompatActivity implements SurfaceHolder.Callback {
        private LinearLayout mLinearLayout;
        private PreviewBorderView mPreviewBorderView;
        private SurfaceView mSurfaceView;
        private CameraManager cameraManager;
        private boolean hasSurface;
        private Intent mIntent;
        private static final String DEFAULT_PATH = "/sdcard/";
        private static final String DEFAULT_NAME = "default.jpg";
        private static final String DEFAULT_TYPE = "default";
        private String filePath;
        private String fileName;
        private String type;
        private Button take, light;
        private boolean toggleLight;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_camera);
            initIntent();
            initLayoutParams();
        }

        private void initIntent() {
            mIntent = getIntent();
            filePath = mIntent.getStringExtra("path");
            fileName = mIntent.getStringExtra("name");
            type = mIntent.getStringExtra("type");
            if (filePath == null) {
                filePath = DEFAULT_PATH;
            }
            if (fileName == null) {
                fileName = DEFAULT_NAME;
            }
            if (type == null) {
                type = DEFAULT_TYPE;
            }
            Log.e("TAG", filePath + "/" + fileName + "_" + type);
        }

        /**
         * Reset the surface to a 4:3 aspect ratio; otherwise the preview is stretched
         */
        private void initLayoutParams() {
            take = (Button) findViewById(R.id.take);
            light = (Button) findViewById(R.id.light);
            take.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                    cameraManager.takePicture(null, null, myjpegCallback);
                }
            });
            light.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                    if (!toggleLight) {
                        toggleLight = true;
                        cameraManager.openLight();
                    } else {
                        toggleLight = false;
                        cameraManager.offLight();
                    }
                }
            });

            // Reset width and height to 4:3
            int widthPixels = getScreenWidth(this);
            int heightPixels = getScreenHeight(this);

            mLinearLayout = (LinearLayout) findViewById(R.id.linearlaout);
            mPreviewBorderView = (PreviewBorderView) findViewById(R.id.borderview);
            mSurfaceView = (SurfaceView) findViewById(R.id.surfaceview);

            RelativeLayout.LayoutParams surfaceviewParams = (RelativeLayout.LayoutParams) mSurfaceView.getLayoutParams();
            surfaceviewParams.width = heightPixels * 4 / 3;
            surfaceviewParams.height = heightPixels;
            mSurfaceView.setLayoutParams(surfaceviewParams);

            RelativeLayout.LayoutParams borderViewParams = (RelativeLayout.LayoutParams) mPreviewBorderView.getLayoutParams();
            borderViewParams.width = heightPixels * 4 / 3;
            borderViewParams.height = heightPixels;
            mPreviewBorderView.setLayoutParams(borderViewParams);

            // The button column takes whatever width is left
            RelativeLayout.LayoutParams linearLayoutParams = (RelativeLayout.LayoutParams) mLinearLayout.getLayoutParams();
            linearLayoutParams.width = widthPixels - heightPixels * 4 / 3;
            linearLayoutParams.height = heightPixels;
            mLinearLayout.setLayoutParams(linearLayoutParams);

            Log.e("TAG", "Screen width:" + heightPixels * 4 / 3);
            Log.e("TAG", "Screen height:" + heightPixels);
        }

        @Override
        protected void onResume() {
            super.onResume();
            // Initialize the camera
            cameraManager = new CameraManager();
            SurfaceView surfaceView = (SurfaceView) findViewById(R.id.surfaceview);
            SurfaceHolder surfaceHolder = surfaceView.getHolder();
            if (hasSurface) {
                // The activity was paused but not stopped, so the surface still exists;
                // surfaceCreated() won't be called, so initialize the camera here
                initCamera(surfaceHolder);
            } else {
                // Register the callback and wait for surfaceCreated() to initialize the camera
                surfaceHolder.addCallback(this);
            }
        }

        @Override
        public void surfaceCreated(SurfaceHolder holder) {
            if (!hasSurface) {
                hasSurface = true;
                initCamera(holder);
            }
        }

        @Override
        public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        }

        @Override
        public void surfaceDestroyed(SurfaceHolder holder) {
            hasSurface = false;
        }

        /**
         * Initialize the camera
         *
         * @param surfaceHolder SurfaceHolder
         */
        private void initCamera(SurfaceHolder surfaceHolder) {
            if (surfaceHolder == null) {
                throw new IllegalStateException("No SurfaceHolder provided");
            }
            if (cameraManager.isOpen()) {
                return;
            }
            try {
                // Open the camera hardware
                cameraManager.openDriver(surfaceHolder);
                // Start the preview (this also starts autofocus)
                cameraManager.startPreview();
            } catch (Exception ioe) {
                Log.e("TAG", "Failed to open camera", ioe);
            }
        }

        @Override
        protected void onPause() {
            // Stop the camera and release its resources
            cameraManager.stopPreview();
            cameraManager.closeDriver();
            if (!hasSurface) {
                SurfaceView surfaceView = (SurfaceView) findViewById(R.id.surfaceview);
                SurfaceHolder surfaceHolder = surfaceView.getHolder();
                surfaceHolder.removeCallback(this);
            }
            super.onPause();
        }

        /**
         * Picture callback
         */
        Camera.PictureCallback myjpegCallback = new Camera.PictureCallback() {
            @Override
            public void onPictureTaken(final byte[] data, Camera camera) {
                // Decode the captured data into a bitmap
                final Bitmap bitmap = BitmapFactory.decodeByteArray(data, 0, data.length);
                int height = bitmap.getHeight();
                int width = bitmap.getWidth();
                // Crop to the viewfinder window drawn by PreviewBorderView
                final Bitmap bitmap1 = Bitmap.createBitmap(bitmap, (width - height) / 2, height / 6, height, height * 2 / 3);
                Log.e("TAG", "width:" + width + " height:" + height);
                Log.e("TAG", "x:" + (width - height) / 2 + " y:" + height / 6 + " width:" + height + " height:" + height * 2 / 3);
                // Create the output file on the SD card
                File path = new File(filePath);
                if (!path.exists()) {
                    path.mkdirs();
                }
                File file = new File(path, type + "_" + fileName);
                FileOutputStream outStream = null;
                try {
                    // Open the output stream and write the cropped bitmap as JPEG
                    outStream = new FileOutputStream(file);
                    bitmap1.compress(Bitmap.CompressFormat.JPEG, 100, outStream);
                } catch (Exception e) {
                    e.printStackTrace();
                } finally {
                    // Close the stream even if writing failed
                    if (outStream != null) {
                        try {
                            outStream.close();
                        } catch (IOException e) {
                            e.printStackTrace();
                        }
                    }
                }
                // Return only the file path; the image itself is too large for a Bundle
                Intent intent = new Intent();
                Bundle bundle = new Bundle();
                bundle.putString("path", file.getAbsolutePath());
                bundle.putString("type", type);
                intent.putExtras(bundle);
                setResult(RESULT_OK, intent);
                CameraActivity.this.finish();
            }
        };

        /**
         * Get the screen width in px
         *
         * @param context Context
         * @return screen width
         */
        public int getScreenWidth(Context context) {
            DisplayMetrics dm = context.getResources().getDisplayMetrics();
            return dm.widthPixels;
        }

        /**
         * Get the screen height
         *
         * @param context Context
         * @return screen height minus the status bar
         */
        public int getScreenHeight(Context context) {
            DisplayMetrics dm = context.getResources().getDisplayMetrics();
            return dm.heightPixels - getStatusBarHeight(context);
        }

        /**
         * Get the status bar height
         *
         * @param context Context
         * @return status bar height
         */
        public int getStatusBarHeight(Context context) {
            int statusBarHeight = 0;
            try {
                // Look up the internal status_bar_height dimension by reflection
                Class<?> clazz = Class.forName("com.android.internal.R$dimen");
                Object obj = clazz.newInstance();
                Field field = clazz.getField("status_bar_height");
                int temp = Integer.parseInt(field.get(obj).toString());
                statusBarHeight = context.getResources().getDimensionPixelSize(temp);
            } catch (Exception e) {
                e.printStackTrace();
            }
            return statusBarHeight;
        }
    }

Declare the Activity as landscape, along with the camera permission and related features:

    <uses-permission android:name="android.permission.CAMERA"/>
    <uses-feature android:name="android.hardware.camera"/>
    <uses-feature android:name="android.hardware.camera.autofocus"/>

    <activity
        android:name="cn.edu.zafu.camera.activity.CameraActivity"
        android:screenOrientation="landscape" />

Then simply launch it from another Activity:

    Intent intent = new Intent(MainActivity.this, CameraActivity.class);
    String pathStr = mPath.getText().toString();
    String nameStr = mName.getText().toString();
    String typeStr = mType.getText().toString();
    // Only pass the extras the user filled in; CameraActivity has defaults for the rest
    if (!TextUtils.isEmpty(pathStr)) {
        intent.putExtra("path", pathStr);
    }
    if (!TextUtils.isEmpty(nameStr)) {
        intent.putExtra("name", nameStr);
    }
    if (!TextUtils.isEmpty(typeStr)) {
        intent.putExtra("type", typeStr);
    }
    startActivityForResult(intent, 100);

Get the returned result and display it in an ImageView:

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        Log.e("TAG", "onActivityResult");
        if (requestCode == 100) {
            if (resultCode == RESULT_OK) {
                Bundle extras = data.getExtras();
                String path = extras.getString("path");
                String type = extras.getString("type");
                Toast.makeText(getApplicationContext(), "path:" + path + " type:" + type, Toast.LENGTH_LONG).show();
                // Load the saved photo from the returned path
                File file = new File(path);
                FileInputStream inStream = null;
                try {
                    inStream = new FileInputStream(file);
                    Bitmap bitmap = BitmapFactory.decodeStream(inStream);
                    mPhoto.setImageBitmap(bitmap);
                    inStream.close();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }
        super.onActivityResult(requestCode, resultCode, data);
    }

The final UI looks like this:

See the source for the details. CSDN was acting up and wouldn't accept file uploads, so the code is on GitHub:

- https://github.com/lizhangqu/Camera

Copyright notice: this is an original article by the author and may not be reproduced without the author's permission.

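- Comment 2, soledadzz, yesterday 15:55

- Remember to blur it out.

- Re: sbsujjbcy, yesterday 15:56

- Reply to soledadzz: the ID information is not real; it is the sample provided on Yunmai's website.

- Comment 1, tuhaihe, yesterday 14:21

- Brave of you to expose your own ID card information like that...

- Re: sbsujjbcy, yesterday 15:25

- Reply to tuhaihe: the ID information is not real; it is the sample provided on Yunmai's website.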